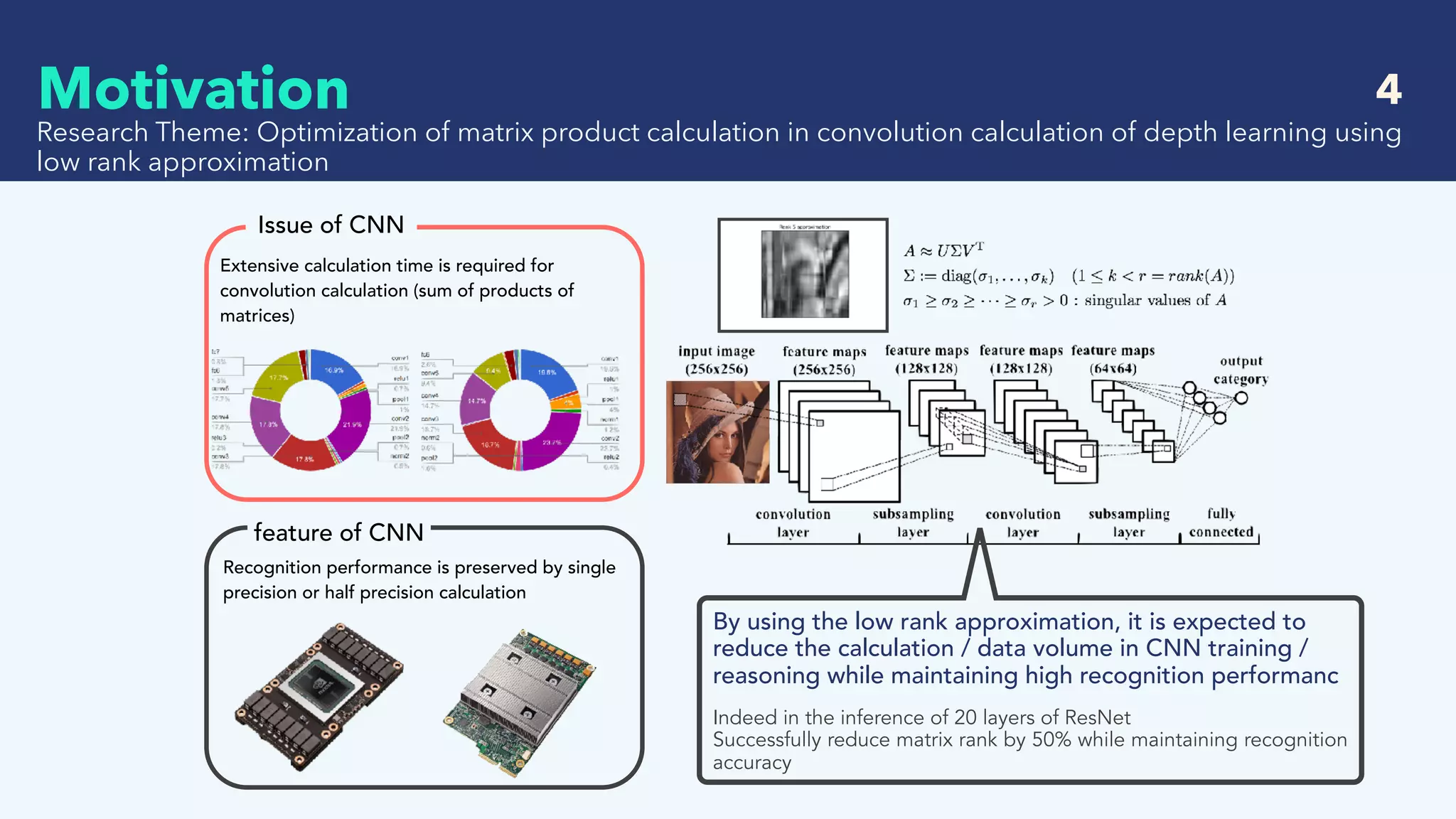

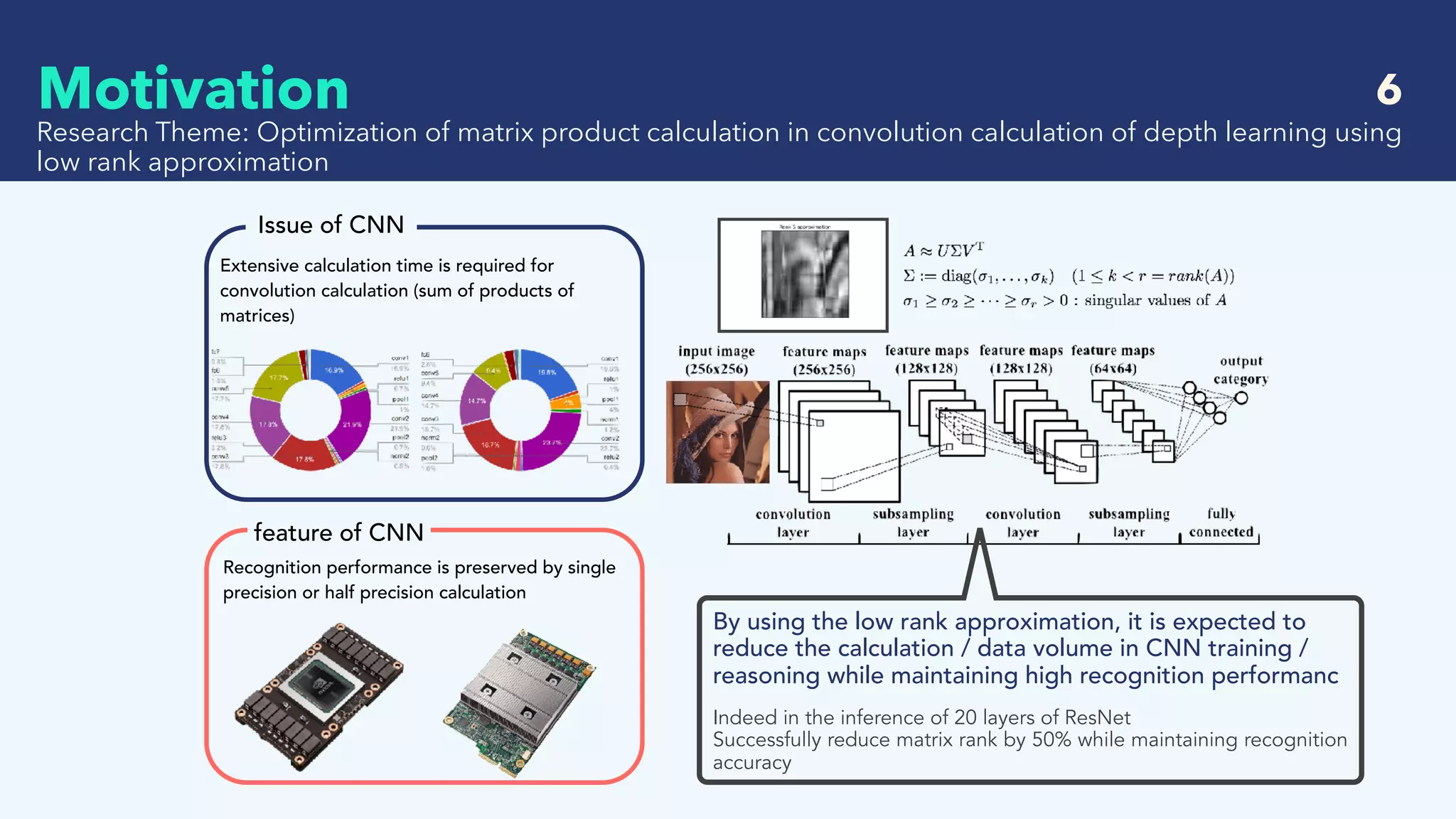

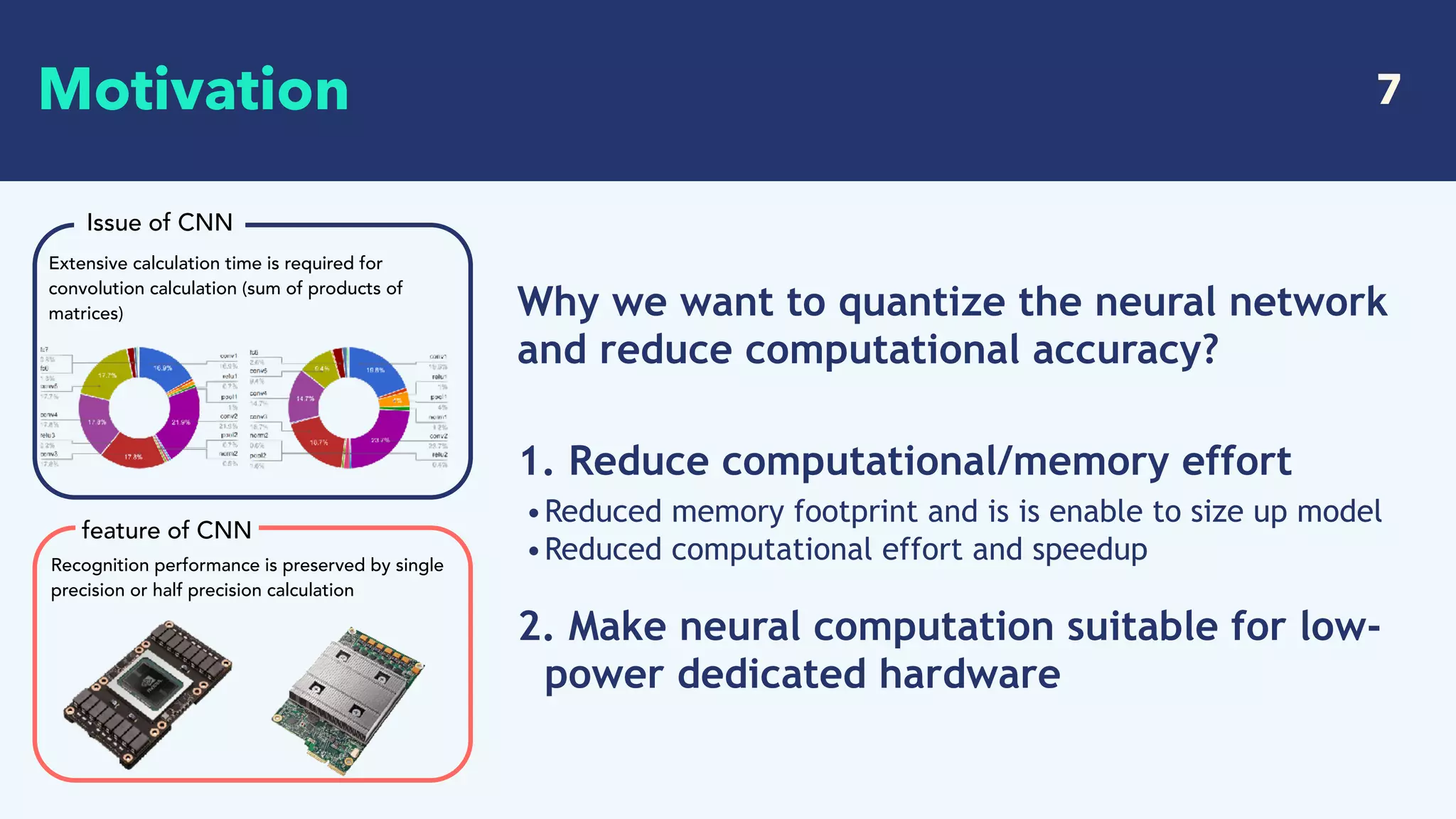

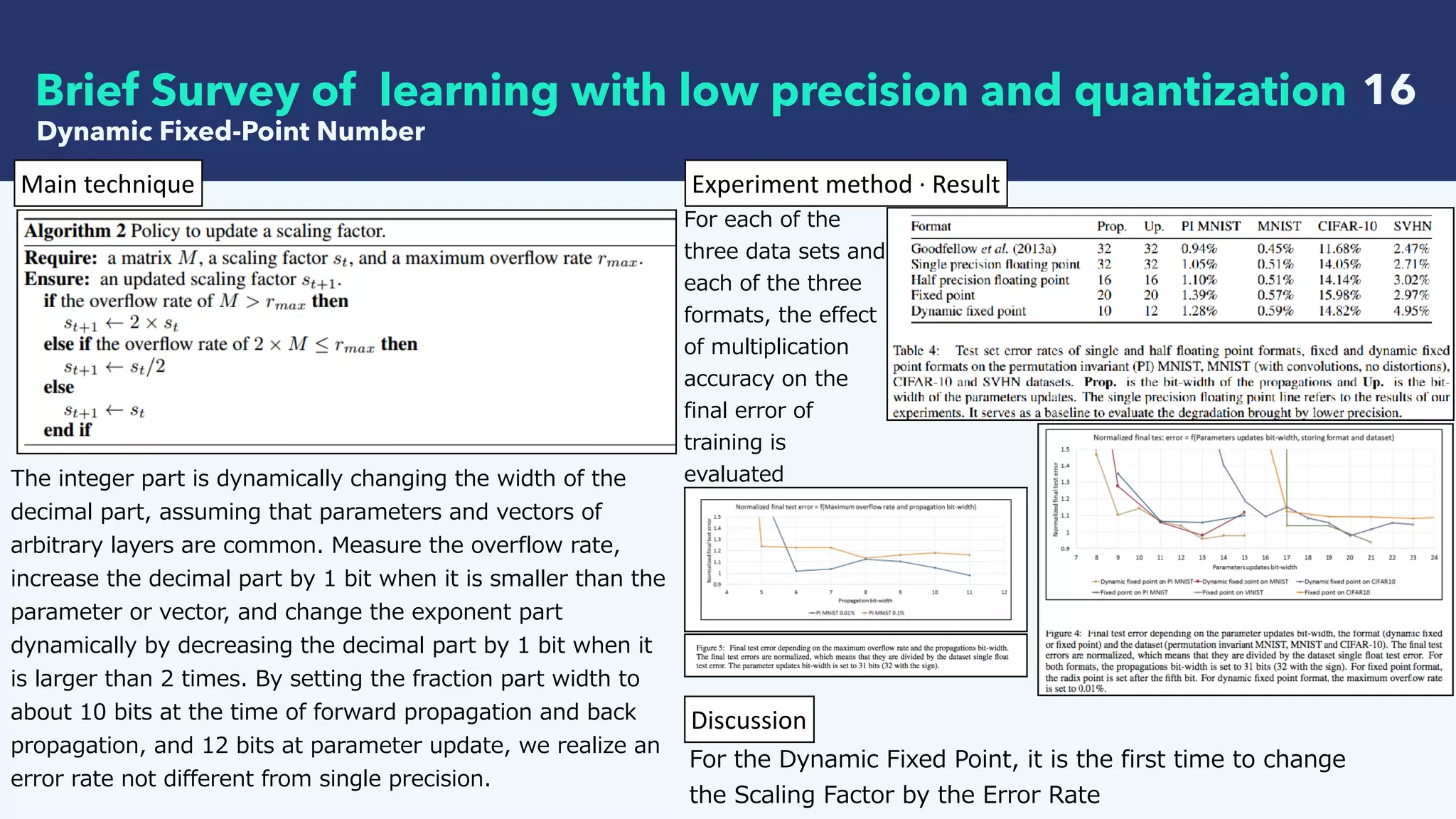

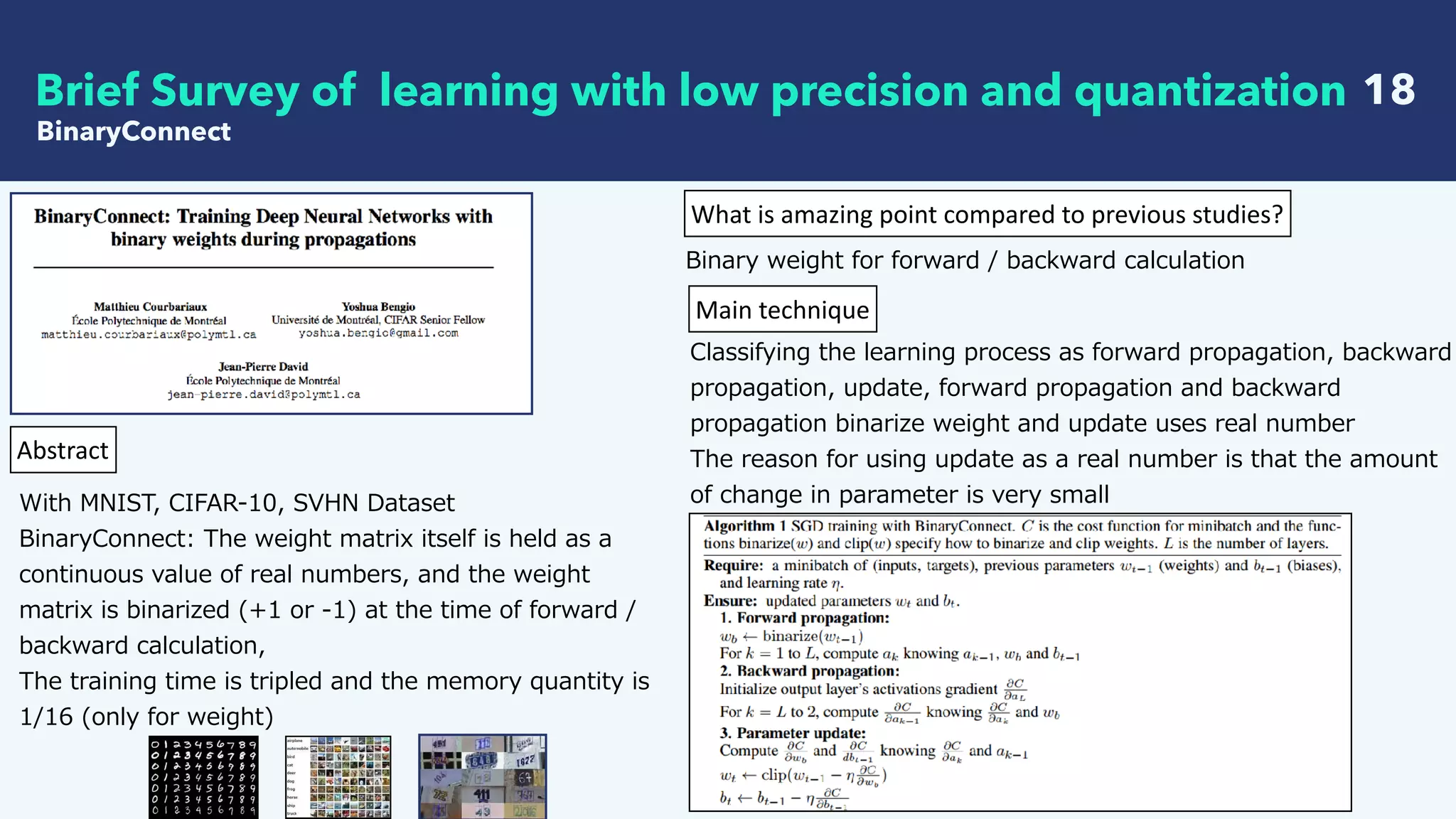

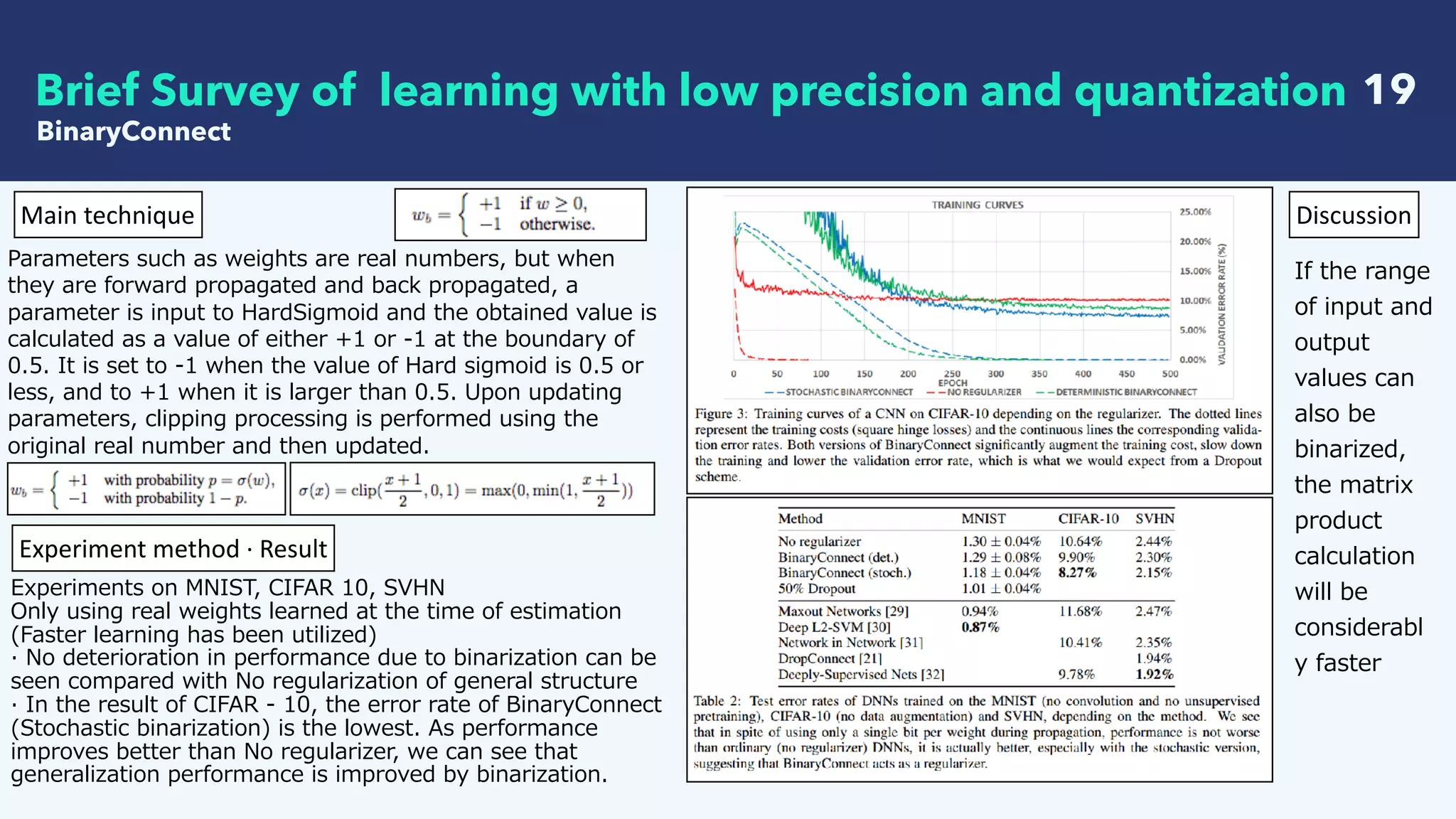

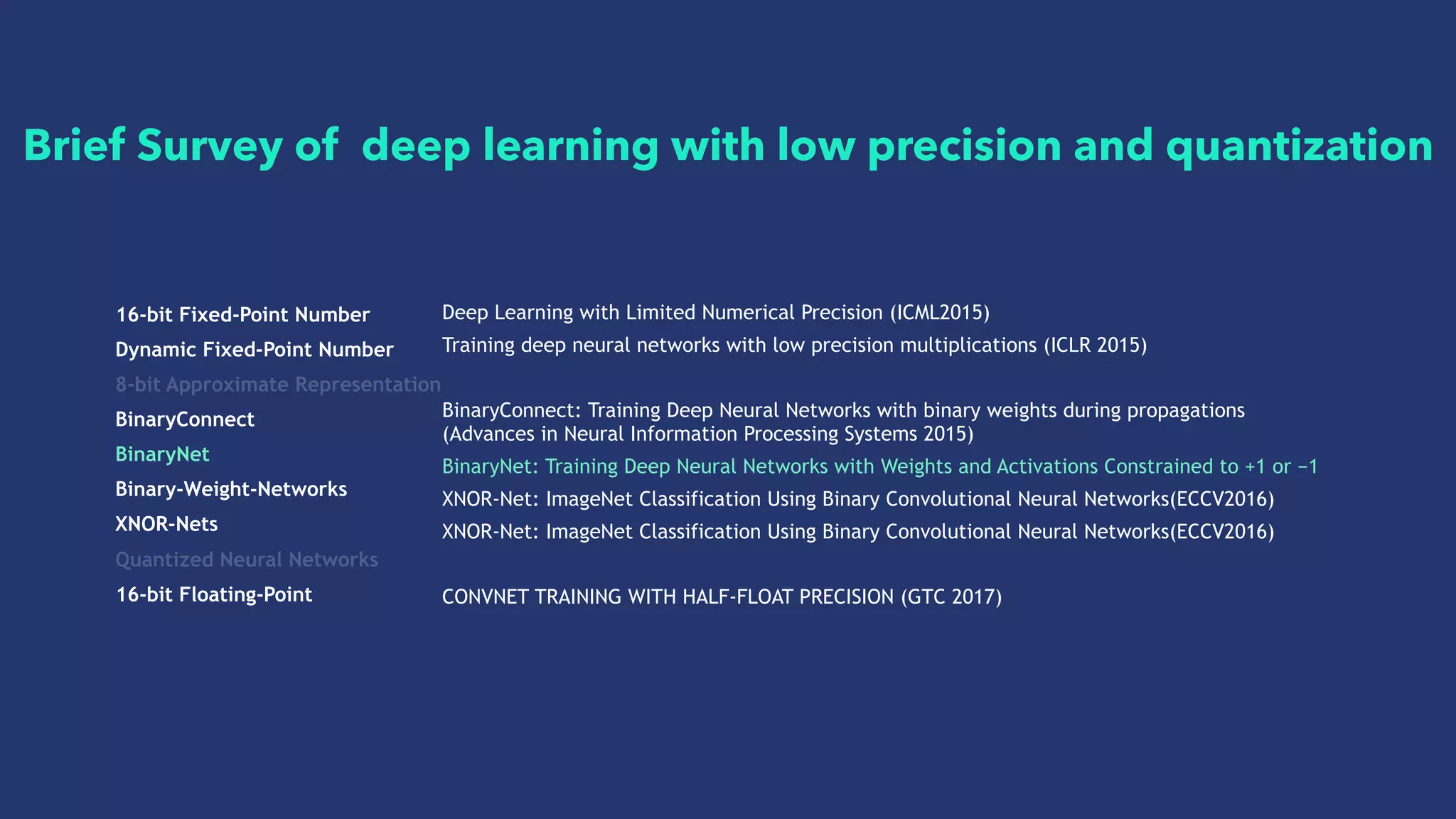

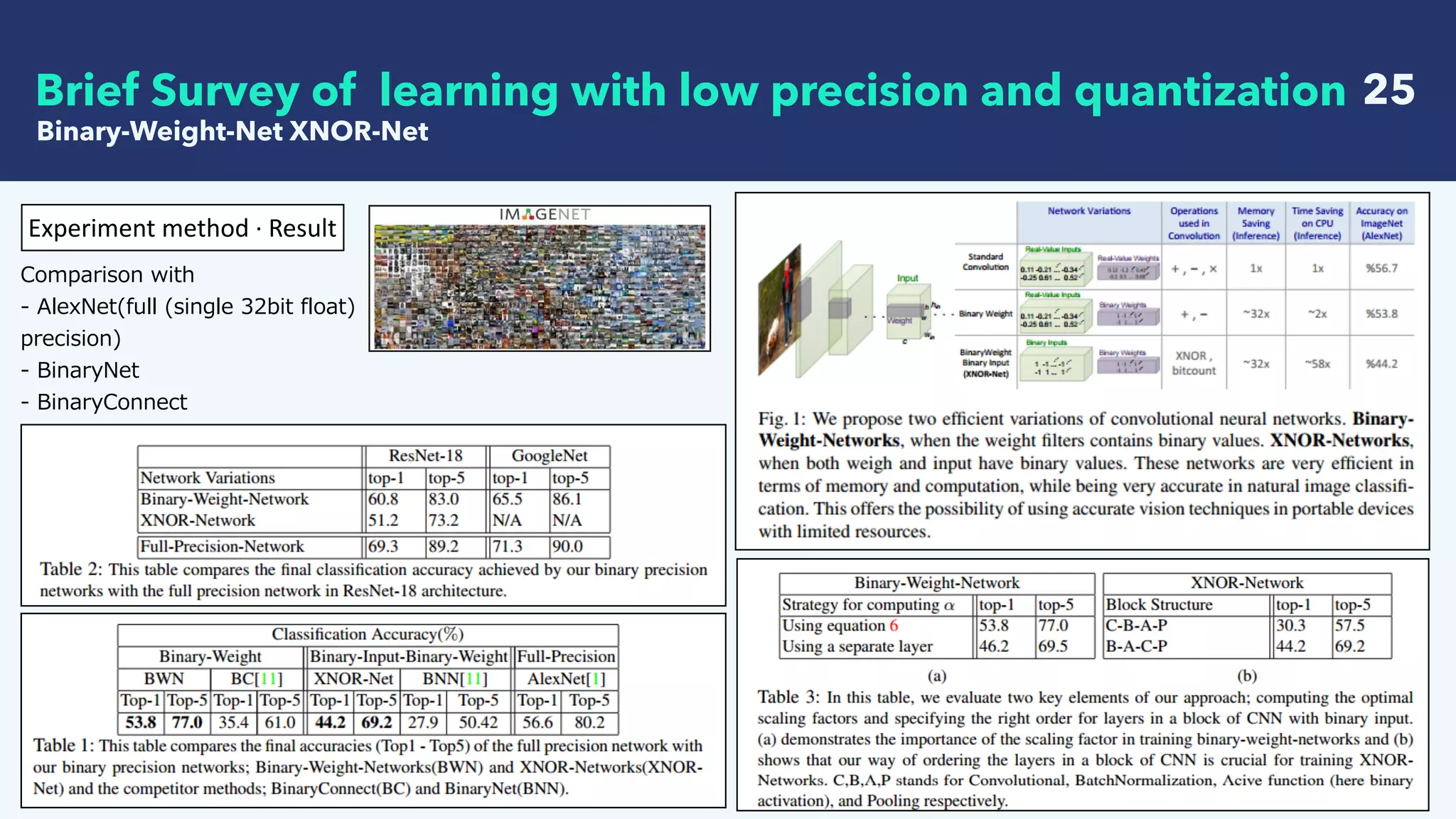

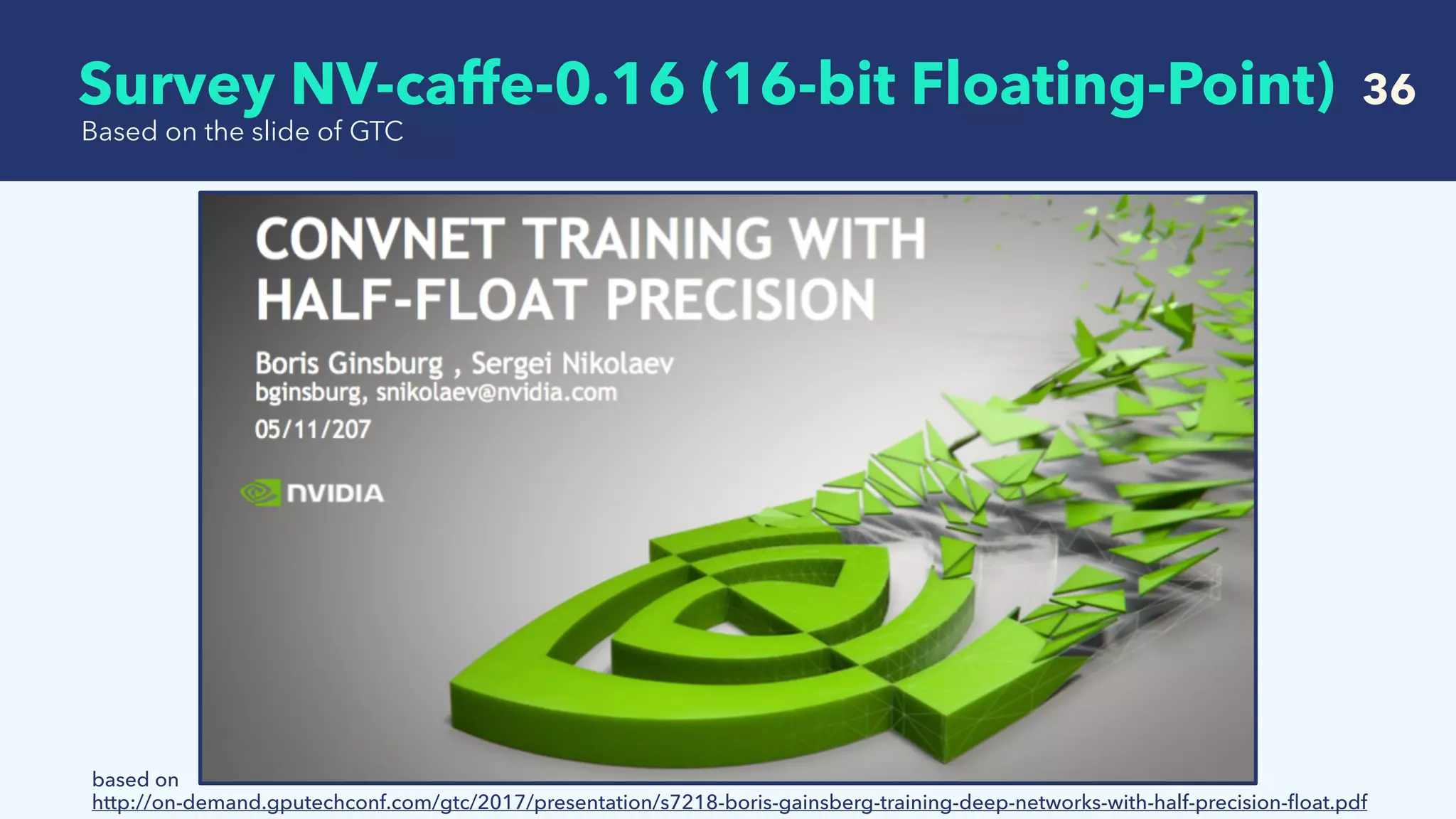

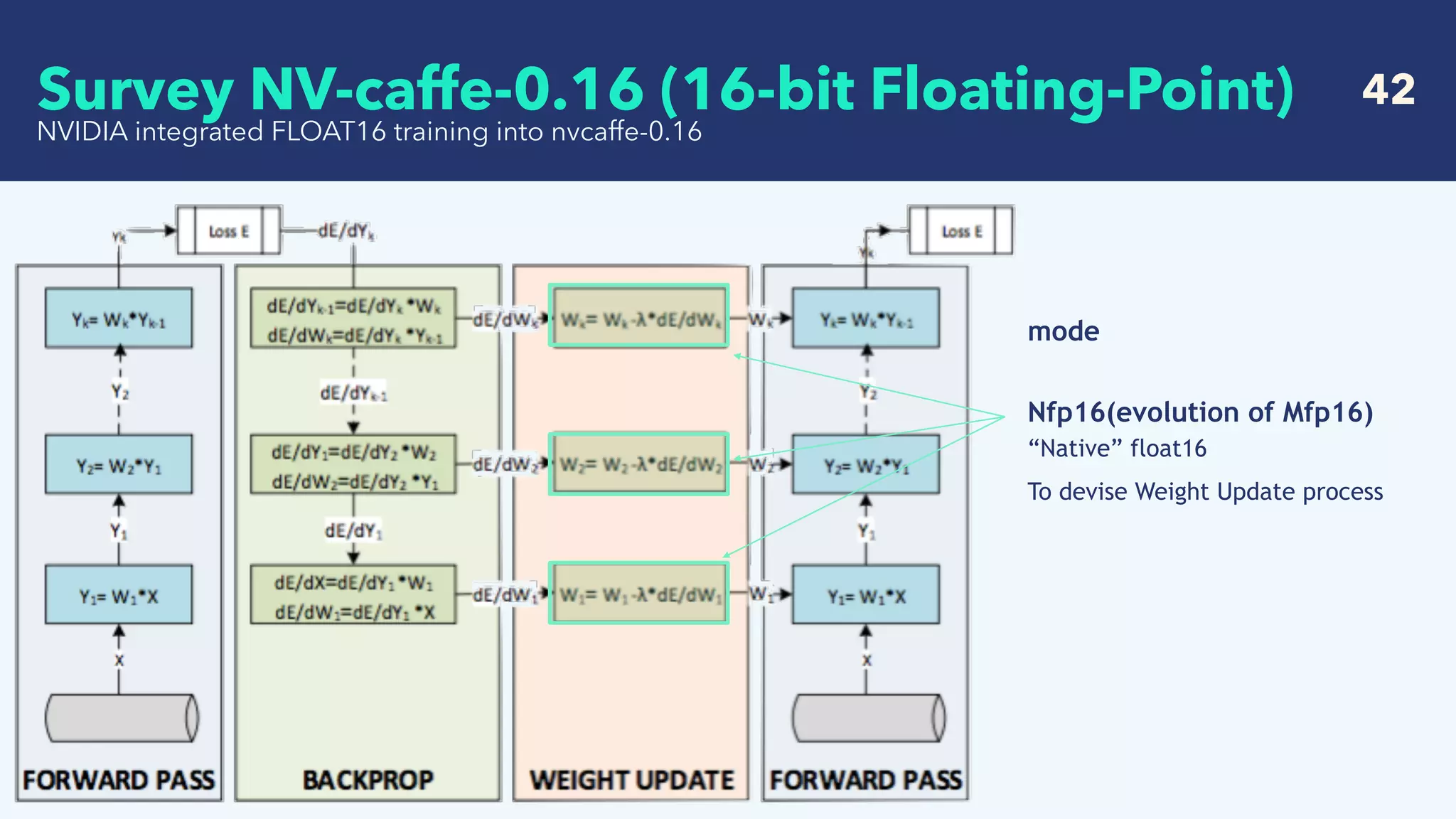

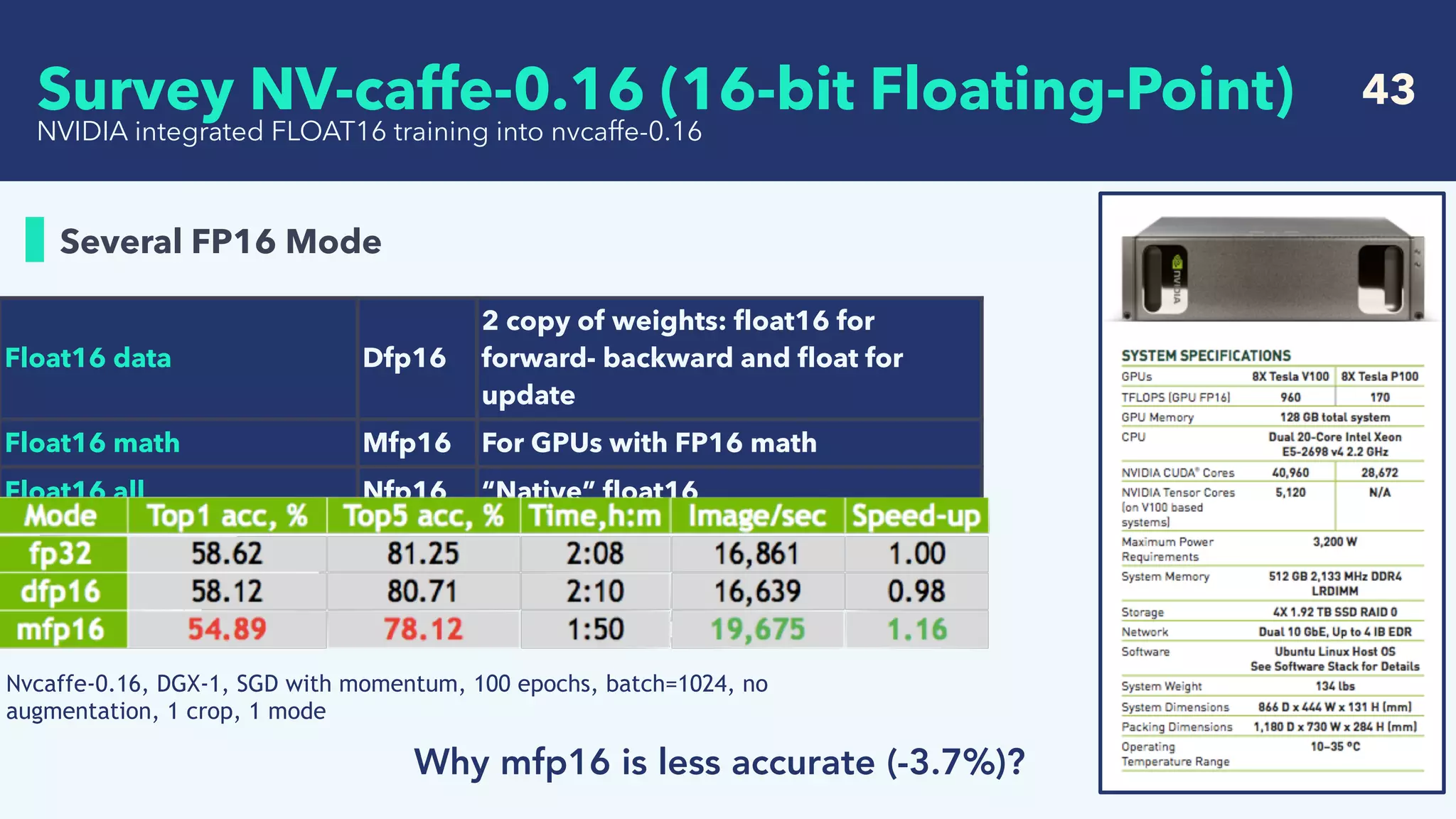

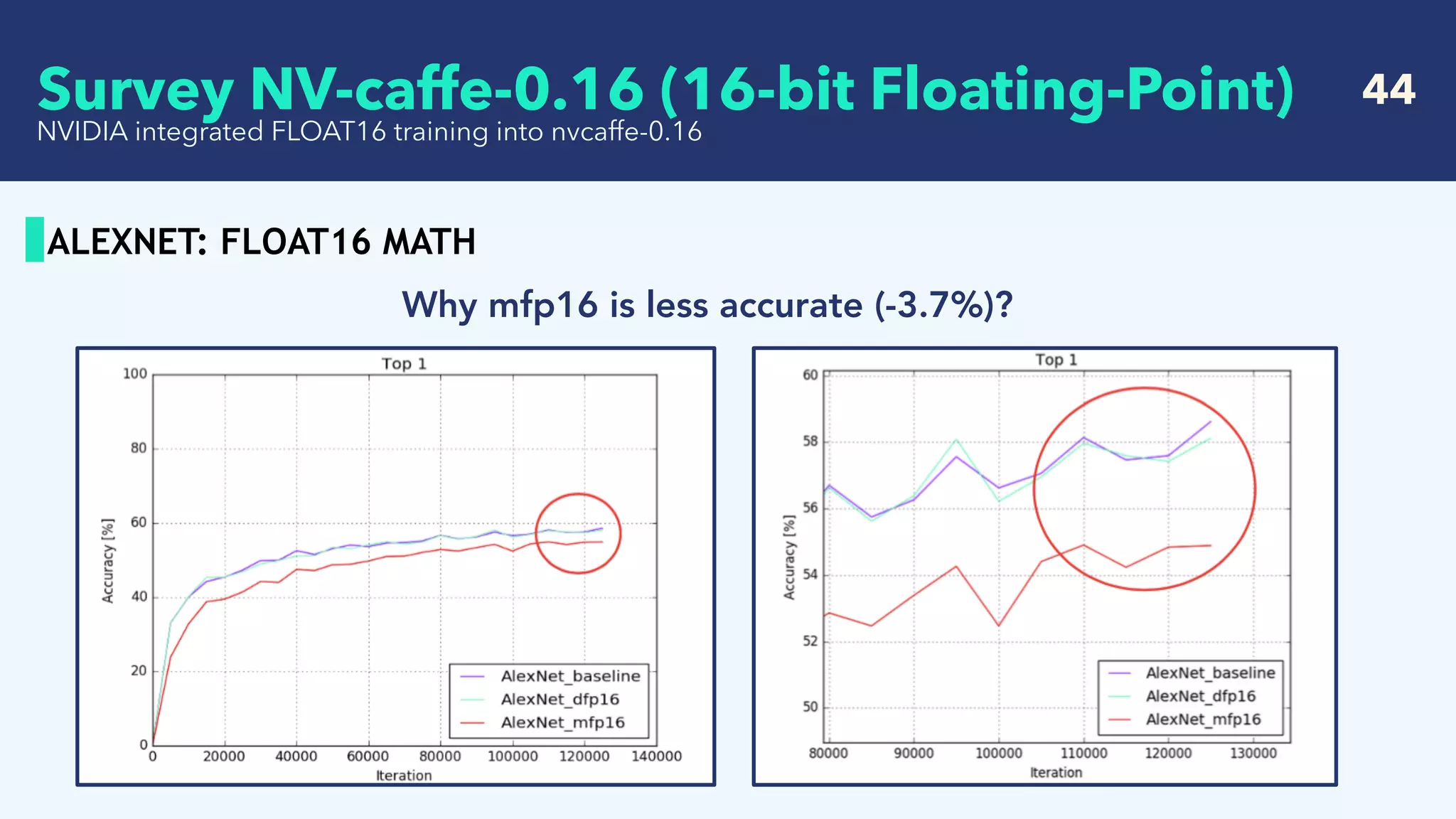

This document summarizes recent research on deep learning with low-precision numerical representations. It covers six methods for reducing precision: 16-bit fixed-point, dynamic fixed-point, 8-bit approximate representation, BinaryConnect, BinaryNet, and XNOR-Nets. For each method, it gives the abstract, the key techniques, experimental results on datasets such as MNIST and CIFAR-10, and a discussion of the findings. The shared goal of these methods is to reduce computational and memory costs while maintaining high recognition performance in neural networks.

![Survey cuDNN, NVIDIA recent news 31

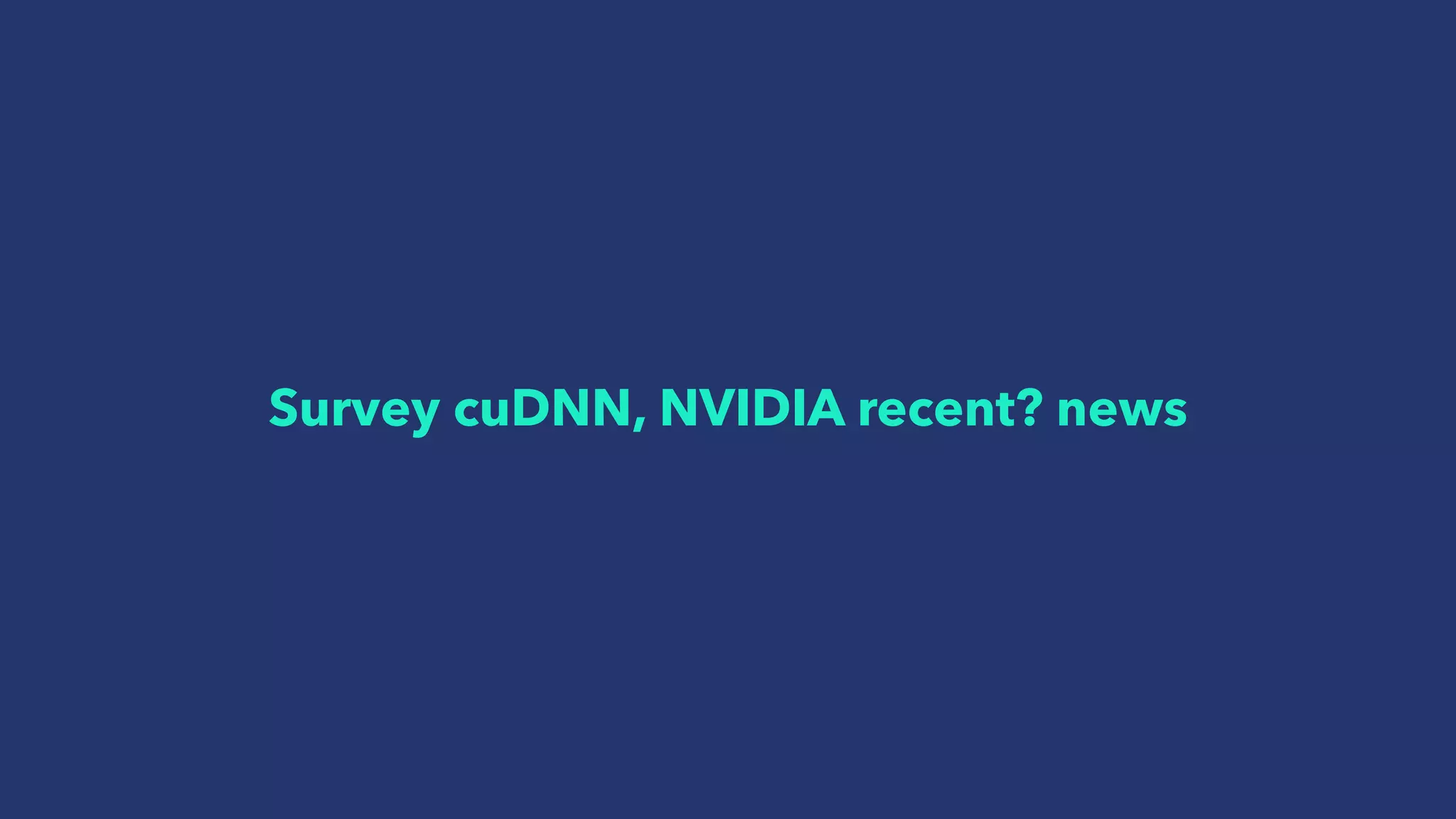

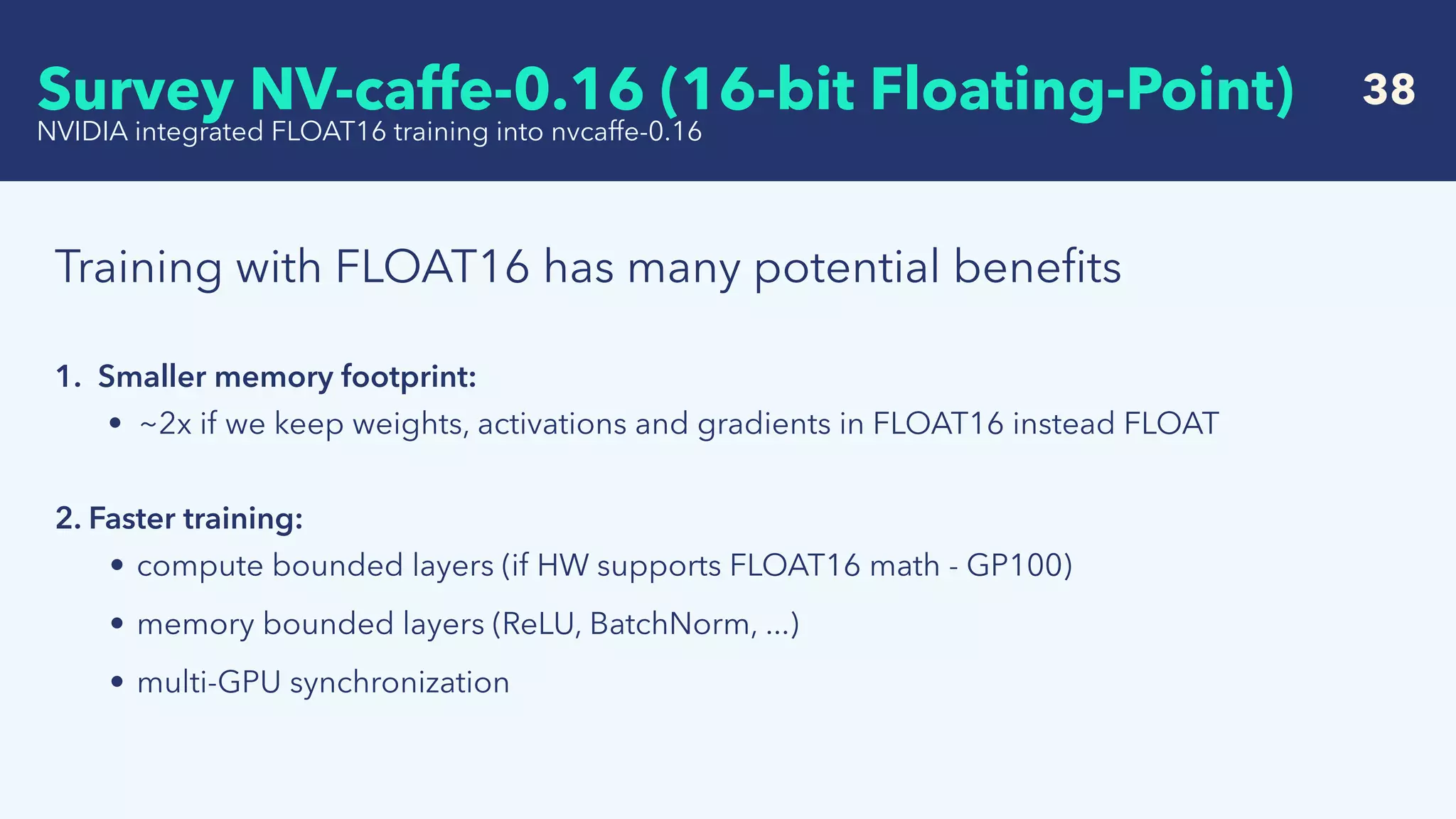

FP16 Support

Mixed-Precision Programming with CUDA 8 / Posted on October 19, 2016 by Mark Harris

A fused multiply–add (sometimes known as FMA or fmadd)[2] is a floating-point multiply–add operation performed in one step, with a single rounding. That is, where an unfused multiply–add would compute the product b×c, round it to N significant bits, add the result to a, and round back to N significant bits, a fused multiply–add would compute the entire expression a+b×c to its full precision before rounding the final result down to N significant bits.
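The difference between one rounding and two can be sketched in half precision. This is a minimal emulation in Python, not a hardware FMA: it assumes the standard-library `struct` format `"e"` (IEEE binary16) for rounding, and the helper names `to_half`, `unfused_multiply_add`, and `fused_multiply_add` are hypothetical. The intermediate `a + b*c` is formed in binary64, which is exact for the inputs chosen here.

```python
import struct


def to_half(x: float) -> float:
    """Round a Python float (binary64) to the nearest IEEE binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def unfused_multiply_add(a: float, b: float, c: float) -> float:
    # Two roundings: the product is rounded to half precision, then the sum is.
    return to_half(a + to_half(b * c))


def fused_multiply_add(a: float, b: float, c: float) -> float:
    # One rounding: a + b*c is formed in binary64 (exact for the inputs below)
    # and rounded to half precision only once. This emulates an FMA.
    return to_half(a + b * c)


b = c = 1.0 + 2.0**-10   # next half-precision value above 1.0
a = -(1.0 + 2.0**-9)     # the negated product b*c after rounding to half

print(unfused_multiply_add(a, b, c))  # 0.0
print(fused_multiply_add(a, b, c))    # 9.5367431640625e-07, i.e. 2**-20
```

The unfused version loses the low-order term 2⁻²⁰ of the product when it rounds b×c, so the sum cancels to zero; the fused version keeps it.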

A fast FMA can speed up and improve the accuracy of many computations that involve the accumulation of products:

• Dot product

• Matrix multiplication

• Polynomial evaluation (e.g., with Horner's rule)

• Newton's method for evaluating functions.

• Convolutions and artificial neural networks

Fused multiply–add can usually be relied on to give more accurate results. However, William Kahan has pointed out that it can give problems if used unthinkingly.[3] If x² − y² is evaluated as ((x×x) − y×y) using fused multiply–add, then the result may be negative even when x = y due to the first multiplication discarding low significance bits. This could then lead to an error if, for instance, the square root of the result is then evaluated.
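Kahan's caveat can be reproduced with a small half-precision emulation. The sketch below assumes that y×y is computed and rounded first and that x×x minus that rounded value is then fused; the helper names are hypothetical, and rounding uses the standard-library `struct` format `"e"` (IEEE binary16) rather than real FMA hardware.

```python
import struct


def to_half(x: float) -> float:
    """Round a Python float (binary64) to the nearest IEEE binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def diff_of_squares_fma(x: float, y: float) -> float:
    # y*y is computed on its own and rounded to half precision.
    yy = to_half(y * y)
    # x*x - yy is then evaluated as one fused operation: a single rounding.
    # The binary64 intermediate is exact for the half-precision inputs below.
    return to_half(x * x - yy)


x = y = 1.0 + 23 * 2.0**-10   # 1.0224609375, exactly representable in binary16
r = diff_of_squares_fma(x, y)
print(r)   # a small negative number, although x == y
```

Here y×y happens to round upward, so the exact x×x is smaller than the rounded y×y and the result is negative. Passing `r` to `math.sqrt` would raise `ValueError`.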

When implemented inside a microprocessor, an FMA can actually be faster than a multiply operation followed by an add. However, standard industrial implementations based on the original IBM RS/6000 design require a 2N-bit adder to compute the sum properly.[4][5]

A useful benefit of including this instruction is that it allows an efficient software implementation of division (see division algorithm) and square root (see methods of computing square roots) operations, thus eliminating the need for dedicated hardware for those operations.[6]

Fused multiply–add](https://image.slidesharecdn.com/crestsurveylowprecision-180424085127/75/Survey-of-recent-deep-learning-with-low-precision-31-2048.jpg)

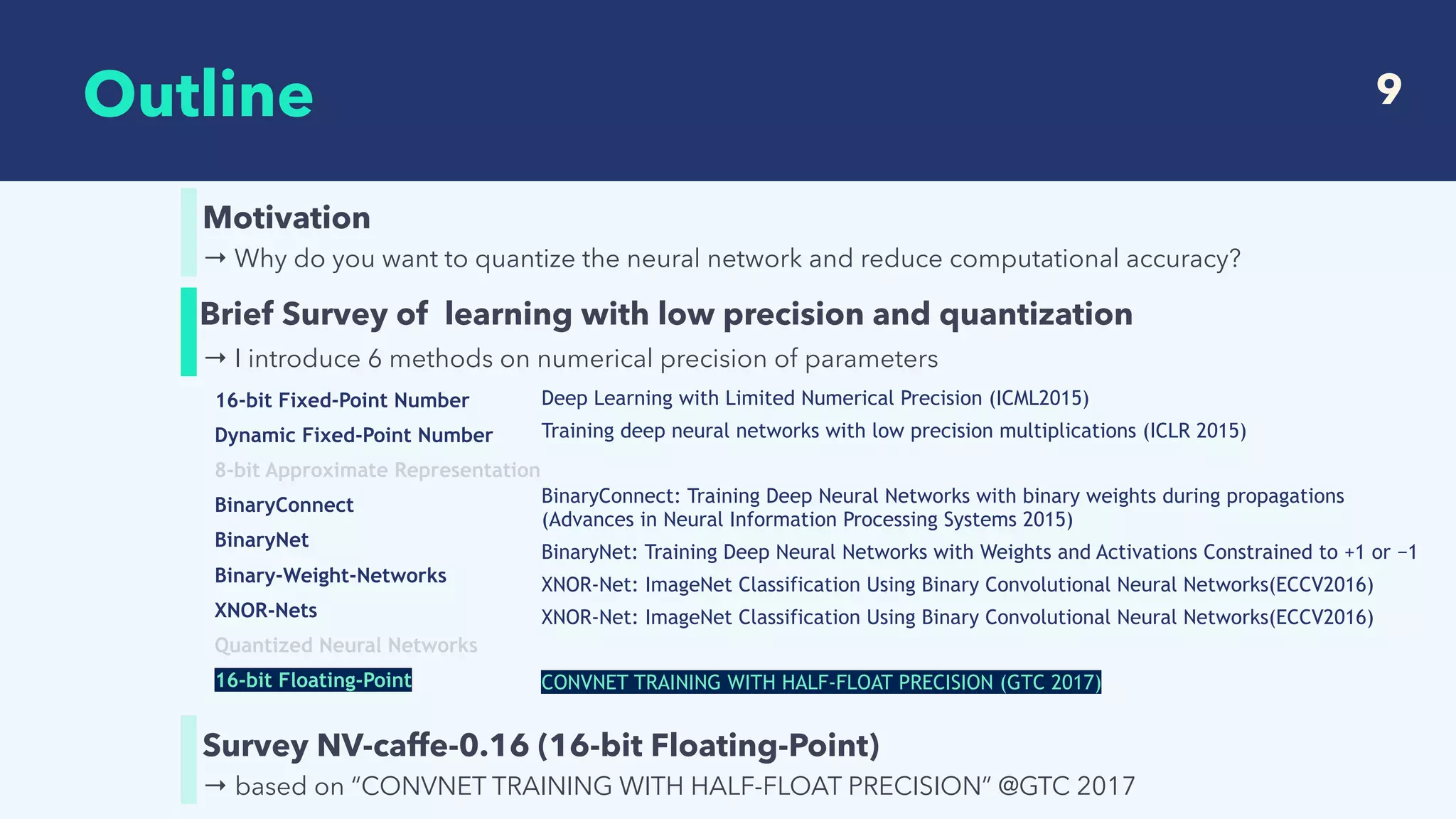

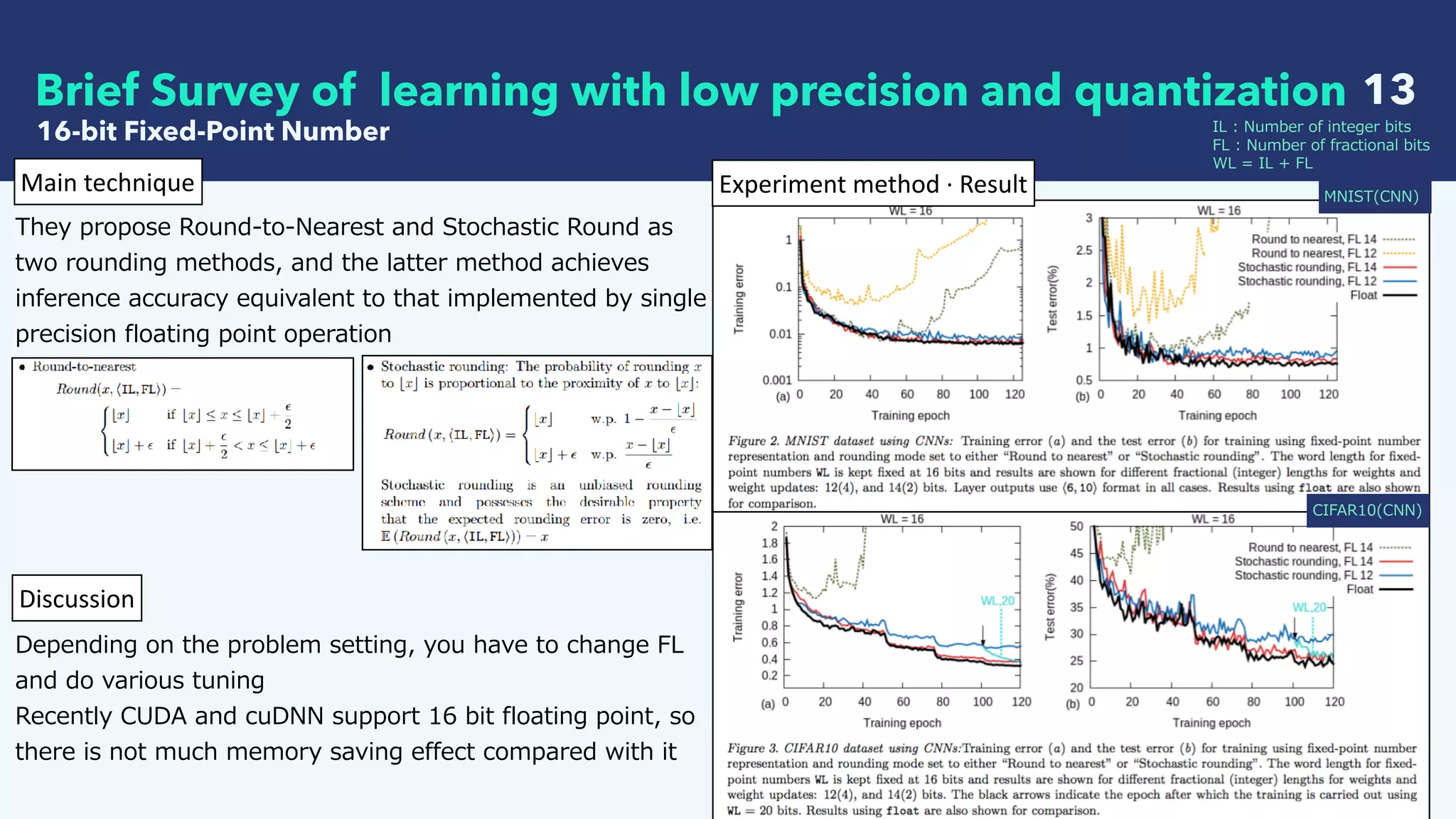

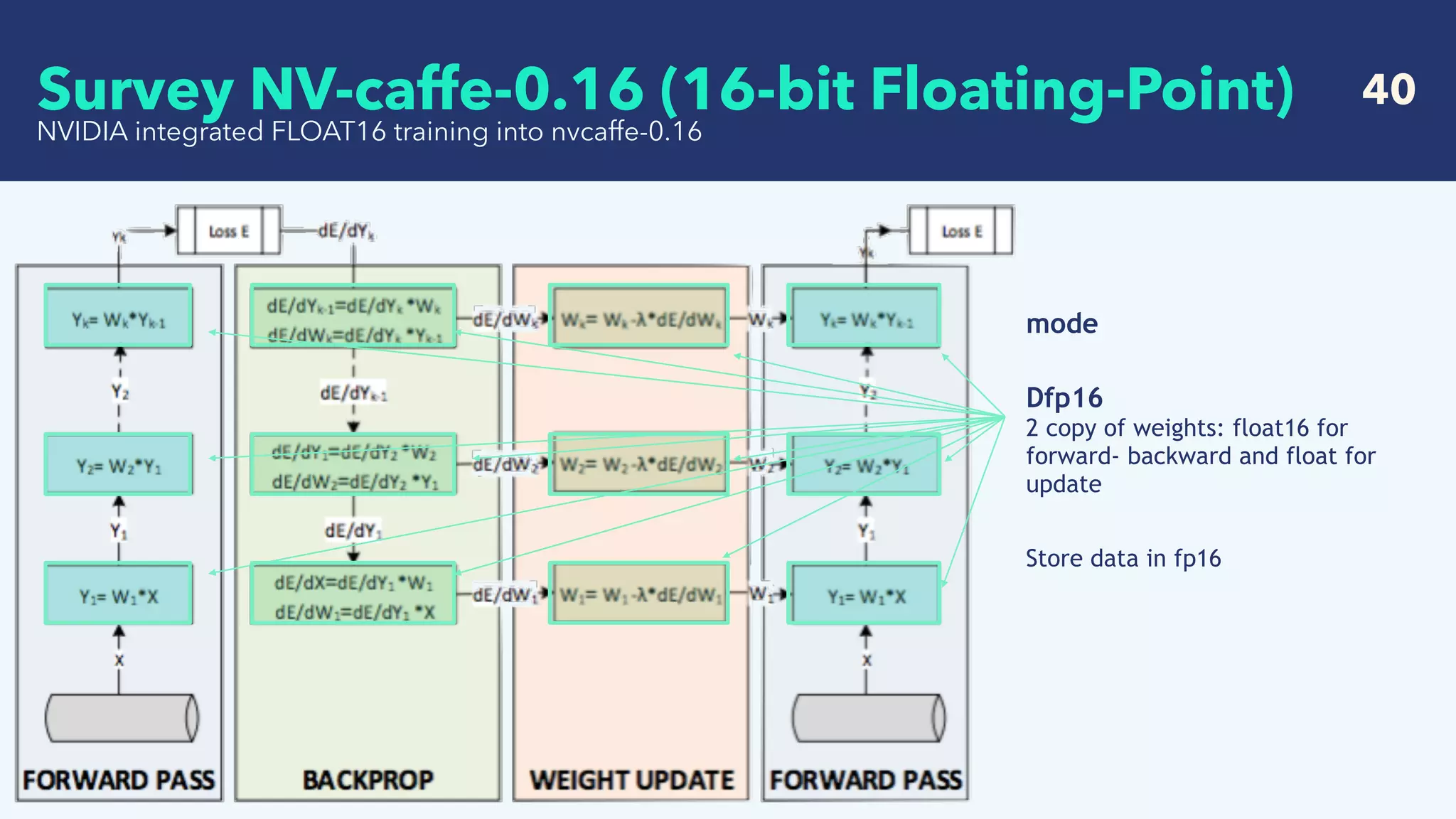

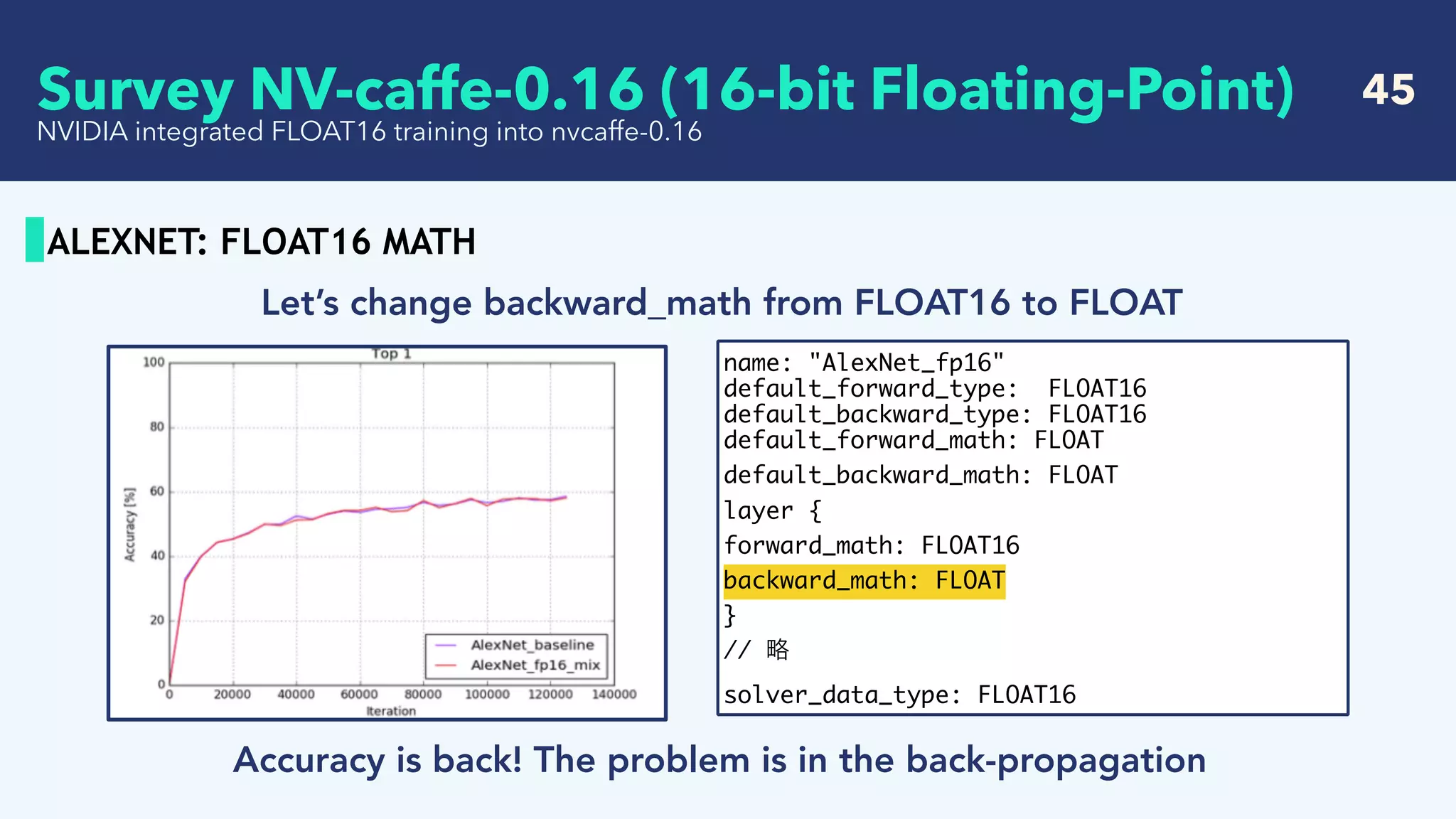

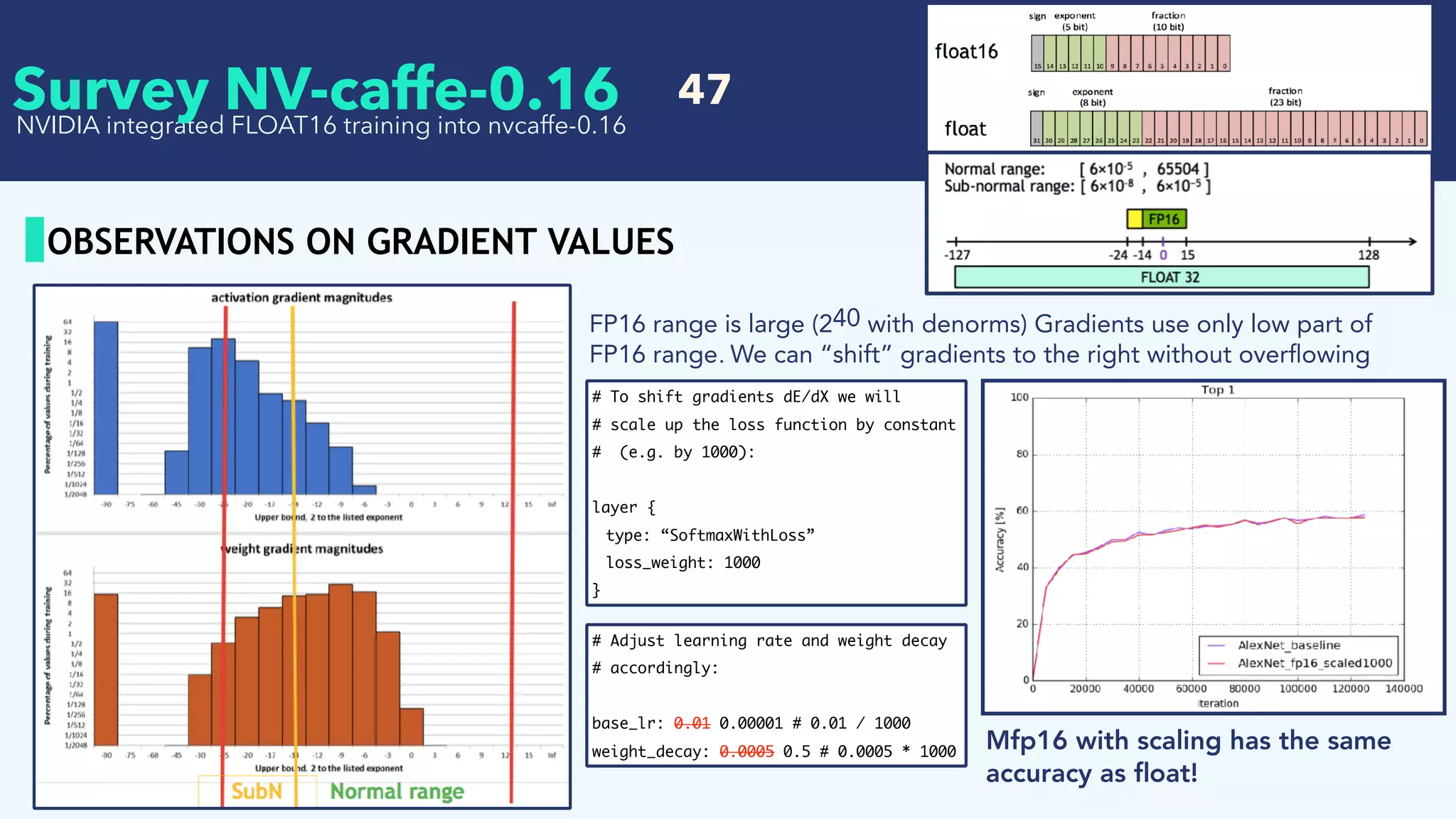

![46

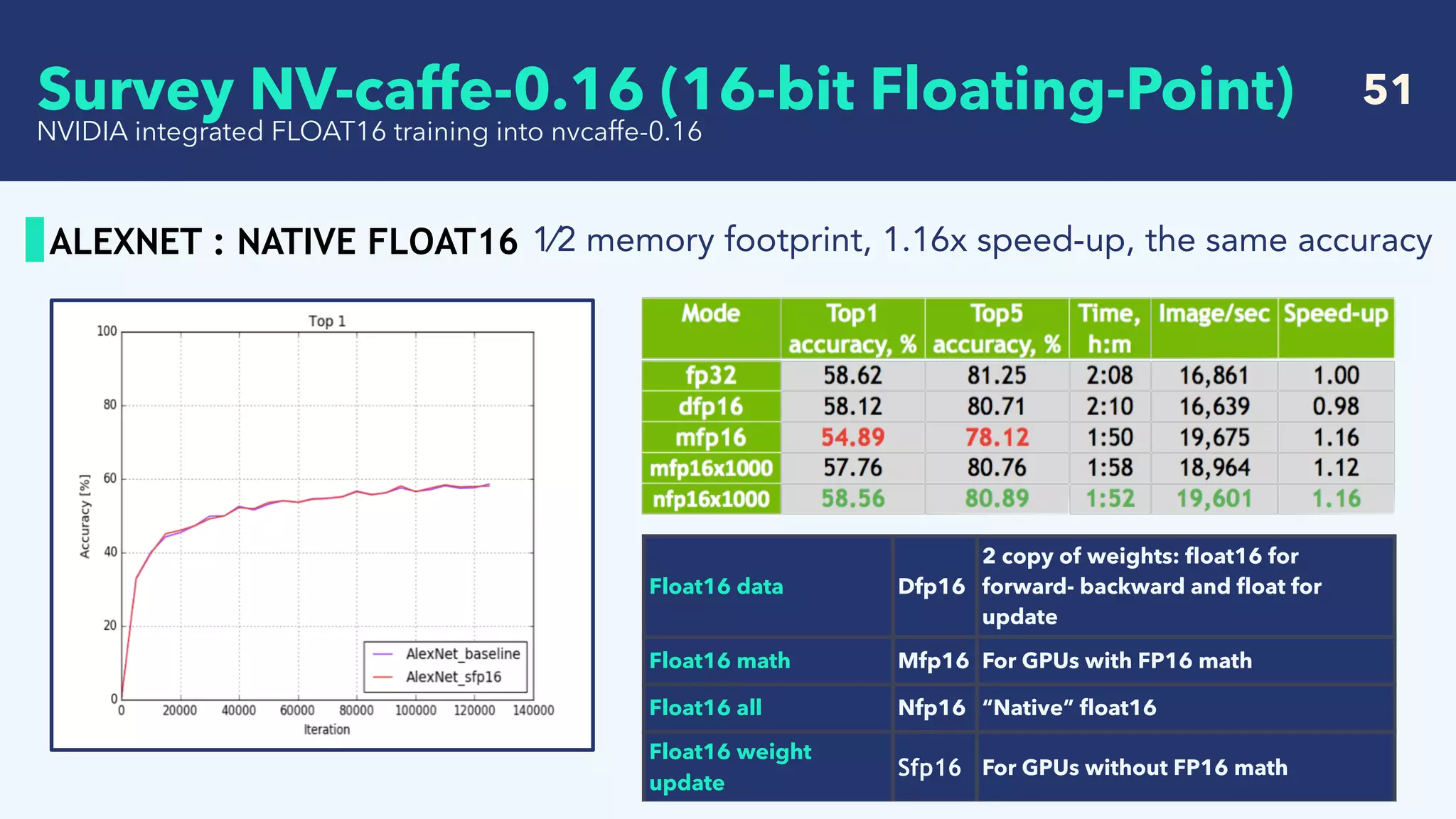

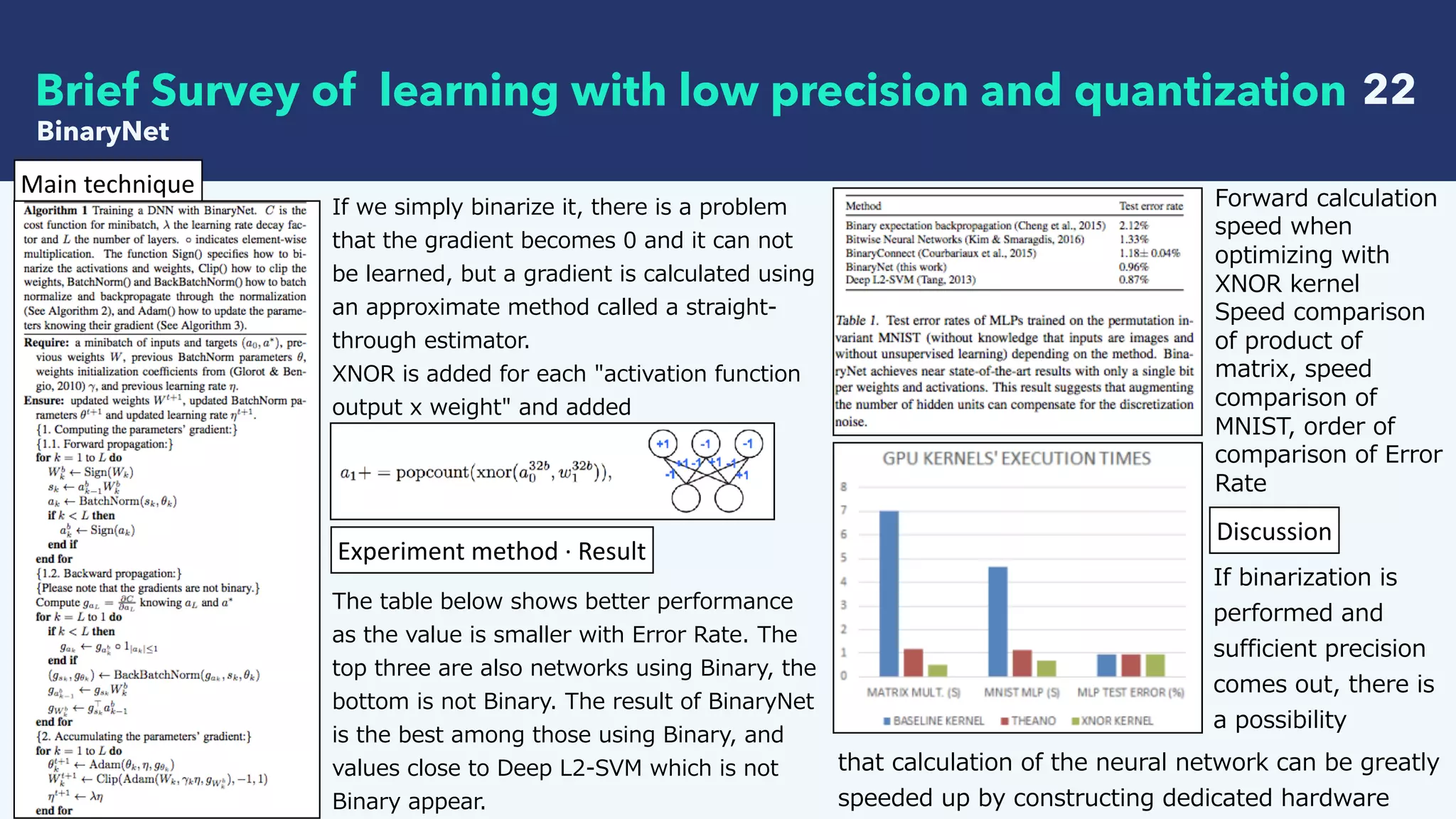

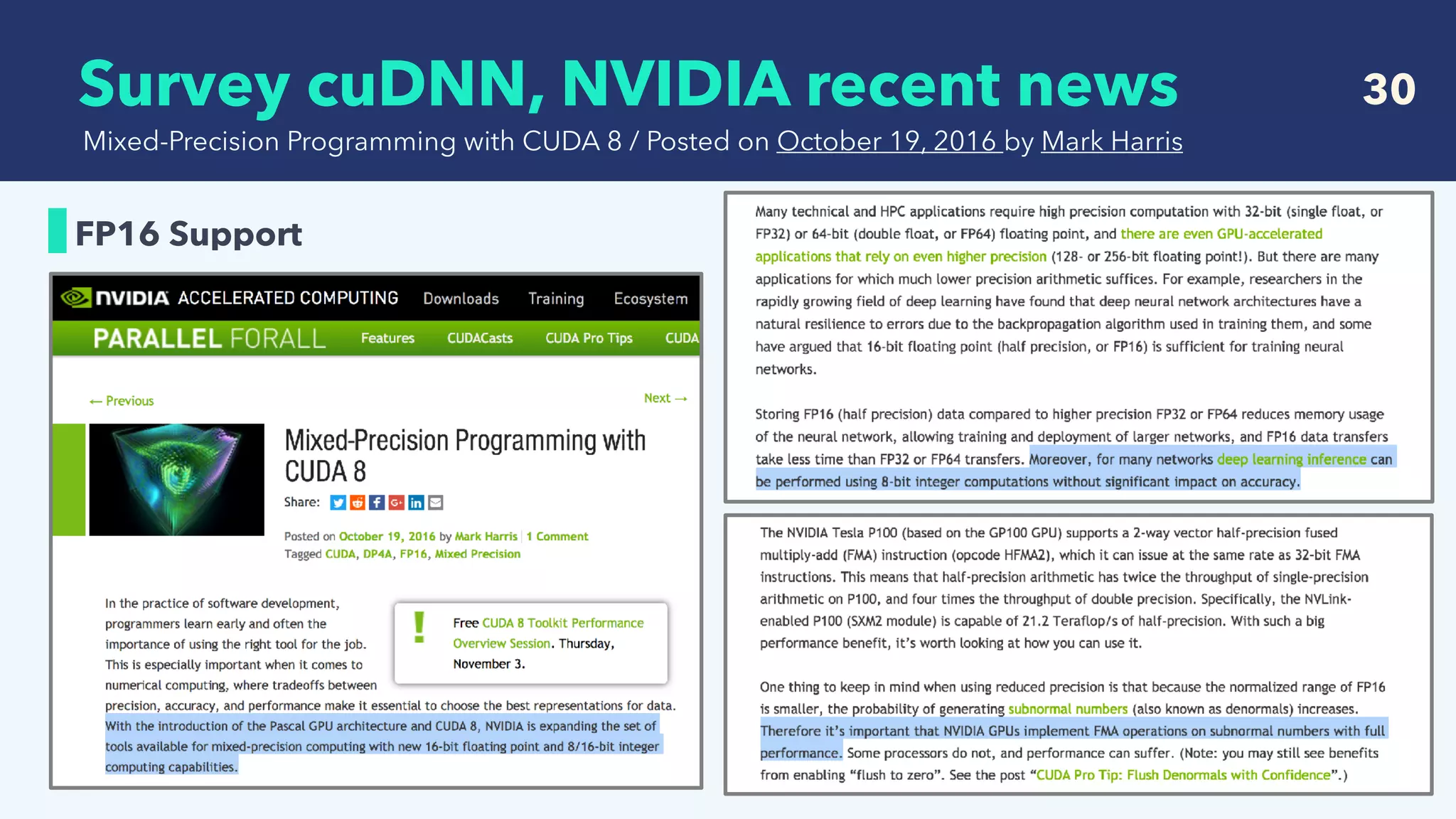

ALEXNET: FLOAT16 MATH

NVIDIA integrated FLOAT16 training into nvcaffe-0.16

The problem is in the back-propagation

Mfp16

For GPUs with FP16 math

- weight [ok]

- activation [ok]

- dE/dw (gradient of weight) [ok]

- dE/db (gradient of bias) [ok]

- dE/dx (gradient of input) [underflow]

Diagram labels: compute in fp16 (weights, activation, dE/dw, dE/db, dE/dx)

Gradients dE/dx are very small, in the subnormal range of float16, so they can underflow and lose accuracy.

Survey NV-caffe-0.16 (16-bit Floating-Point)](https://image.slidesharecdn.com/crestsurveylowprecision-180424085127/75/Survey-of-recent-deep-learning-with-low-precision-46-2048.jpg)
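The underflow described on this slide can be illustrated by rounding a few small values to float16. This is a sketch under stated assumptions: the gradient magnitudes below are made up for illustration and are not measurements from AlexNet, and the rounding is emulated with the standard-library `struct` format `"e"` (IEEE binary16) rather than GPU arithmetic.

```python
import struct


def to_half(x: float) -> float:
    """Round a Python float (binary64) to the nearest IEEE binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


SMALLEST_NORMAL = 2.0**-14     # about 6.10e-05
SMALLEST_SUBNORMAL = 2.0**-24  # about 5.96e-08

# Hypothetical gradient magnitudes, chosen only to cover each regime.
for g in (1e-3, 1e-5, 1e-7, 1e-8):
    h = to_half(g)
    rel_err = abs(h - g) / g
    if h == 0.0:
        kind = "flushed to zero"
    elif h < SMALLEST_NORMAL:
        kind = "subnormal"
    else:
        kind = "normal"
    print(f"{g:.0e} -> {h:.6e}  ({kind}, relative error {rel_err:.1%})")
```

Values below 2⁻¹⁴ are stored as multiples of 2⁻²⁴, so their relative error grows as they shrink, and anything below half of 2⁻²⁴ becomes exactly zero.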

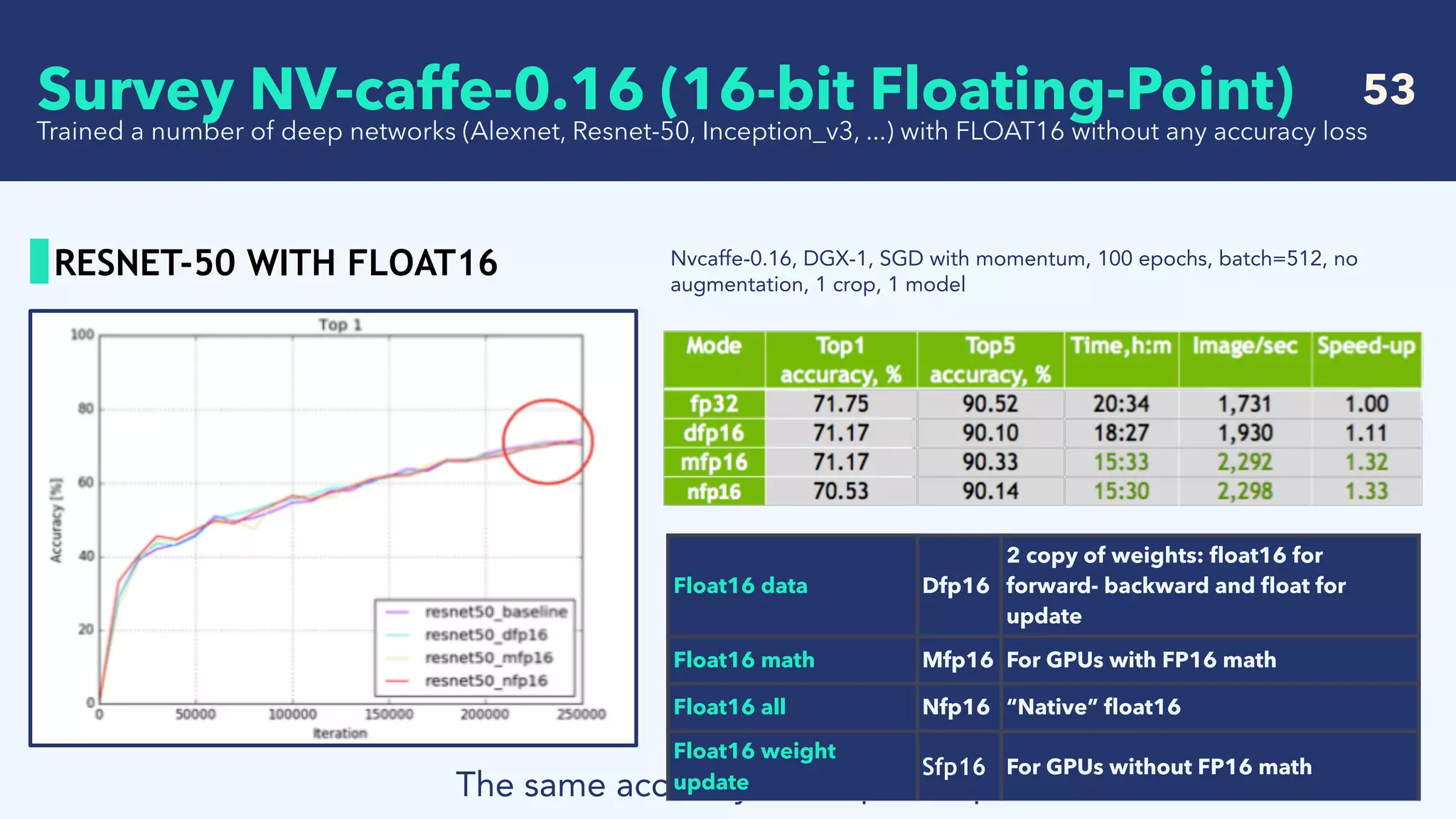

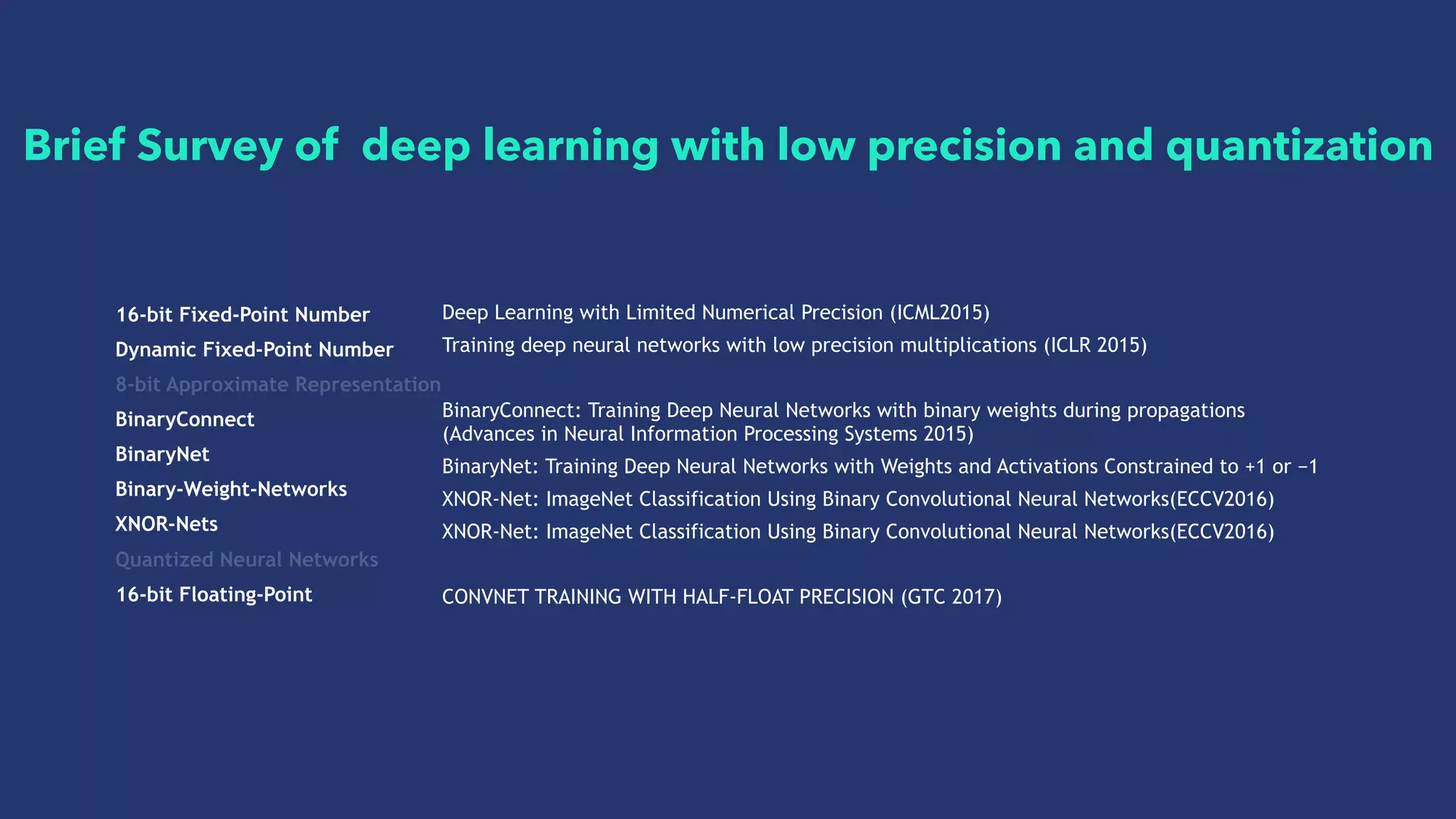

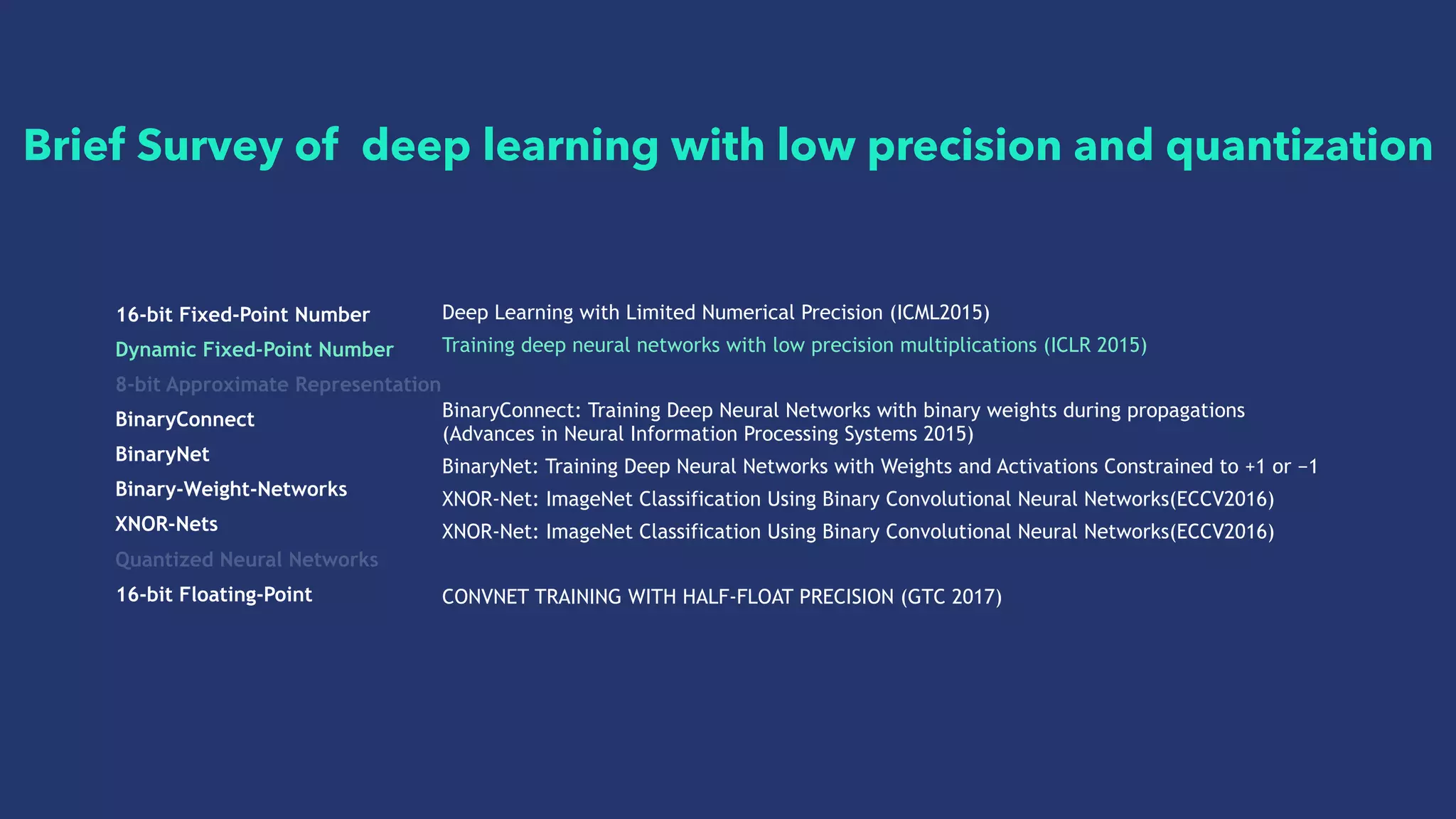

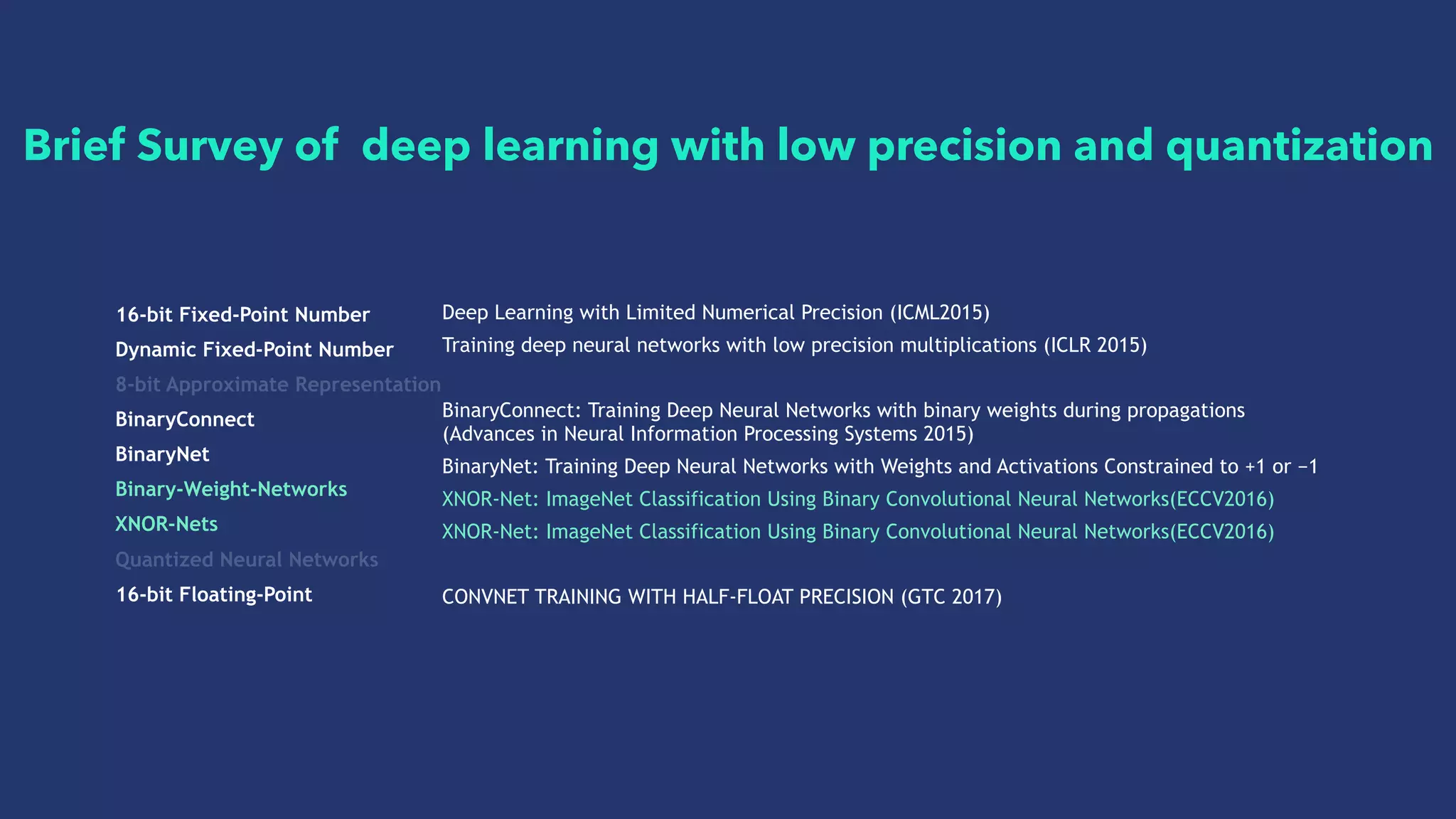

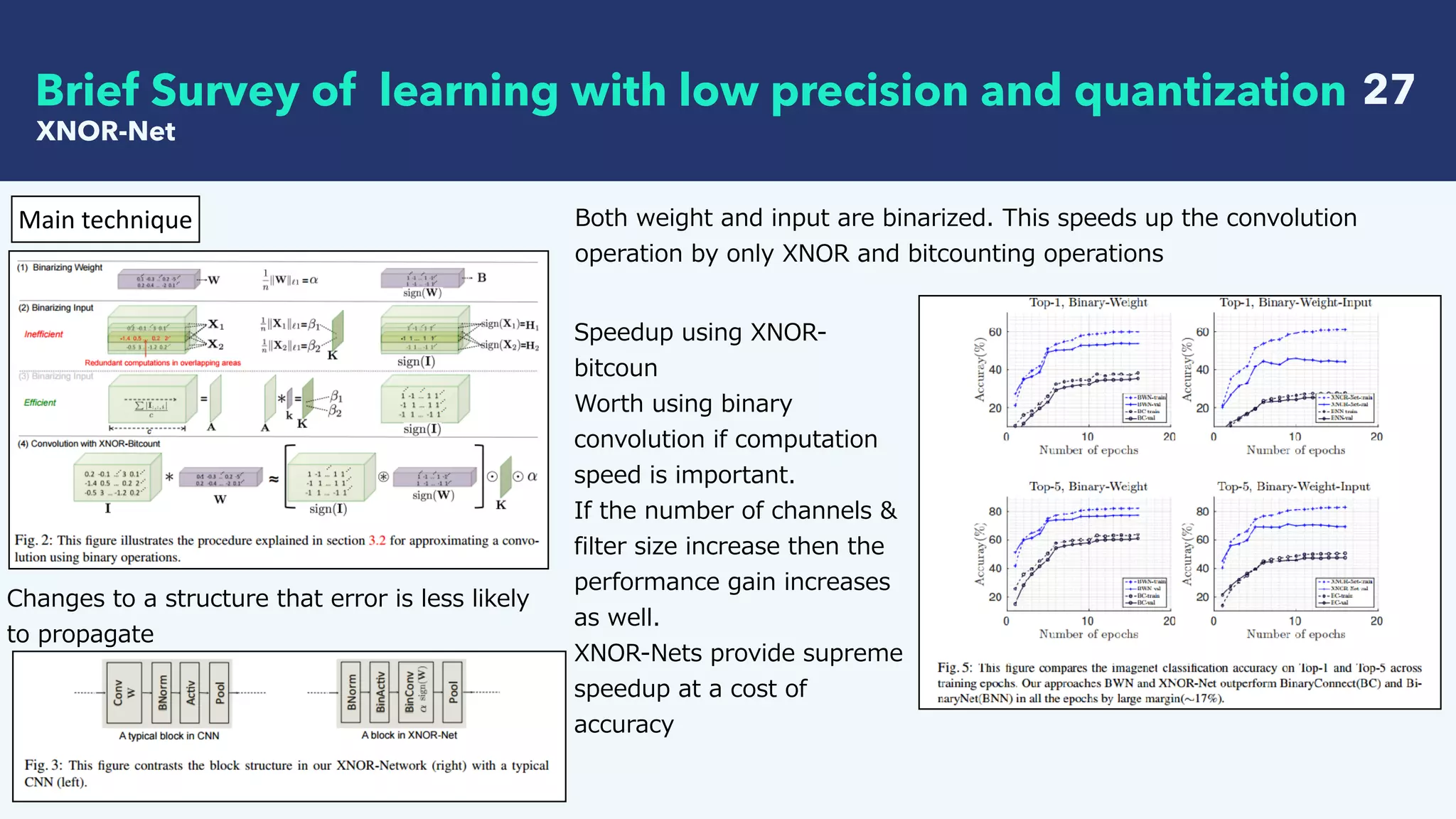

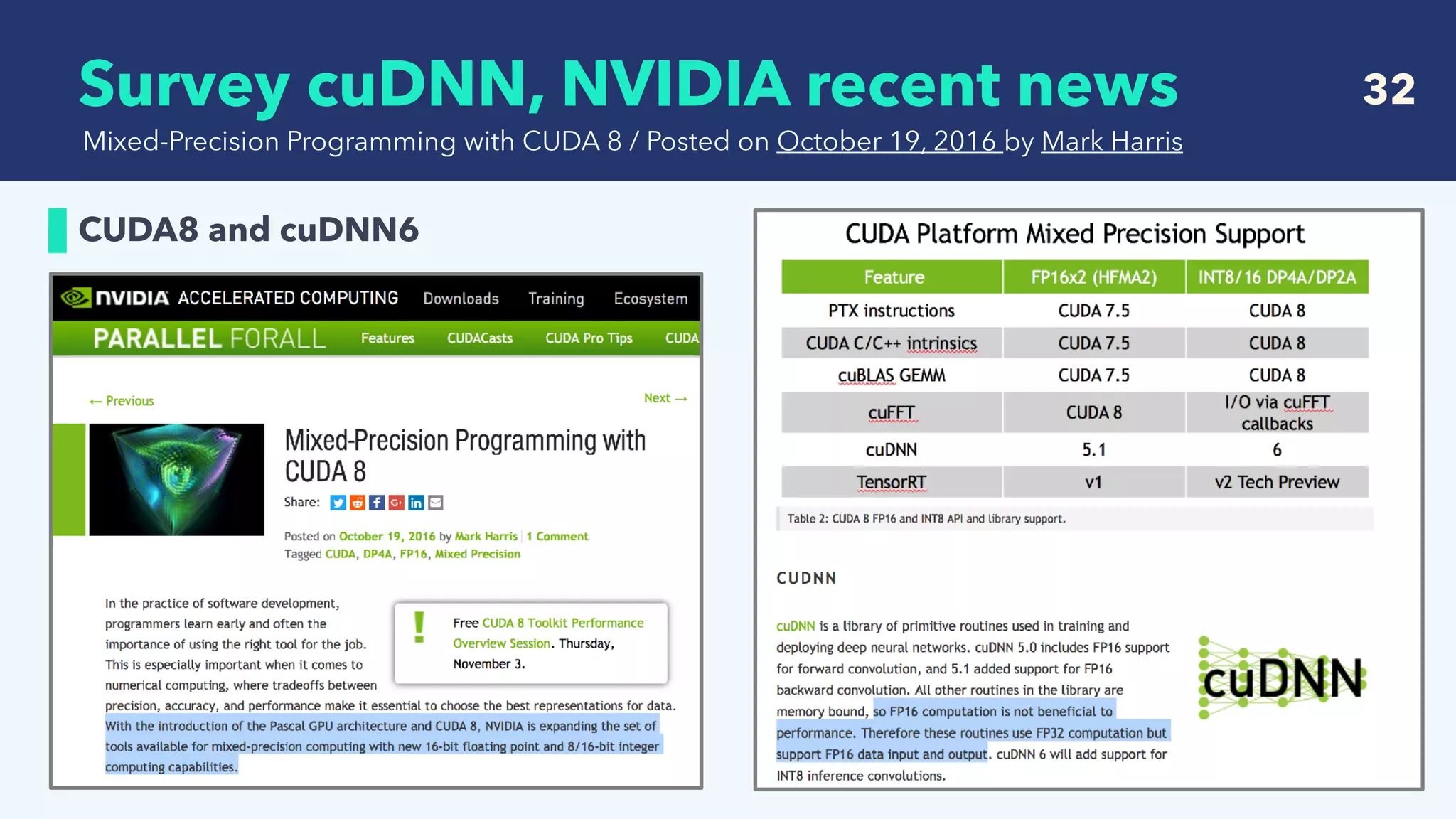

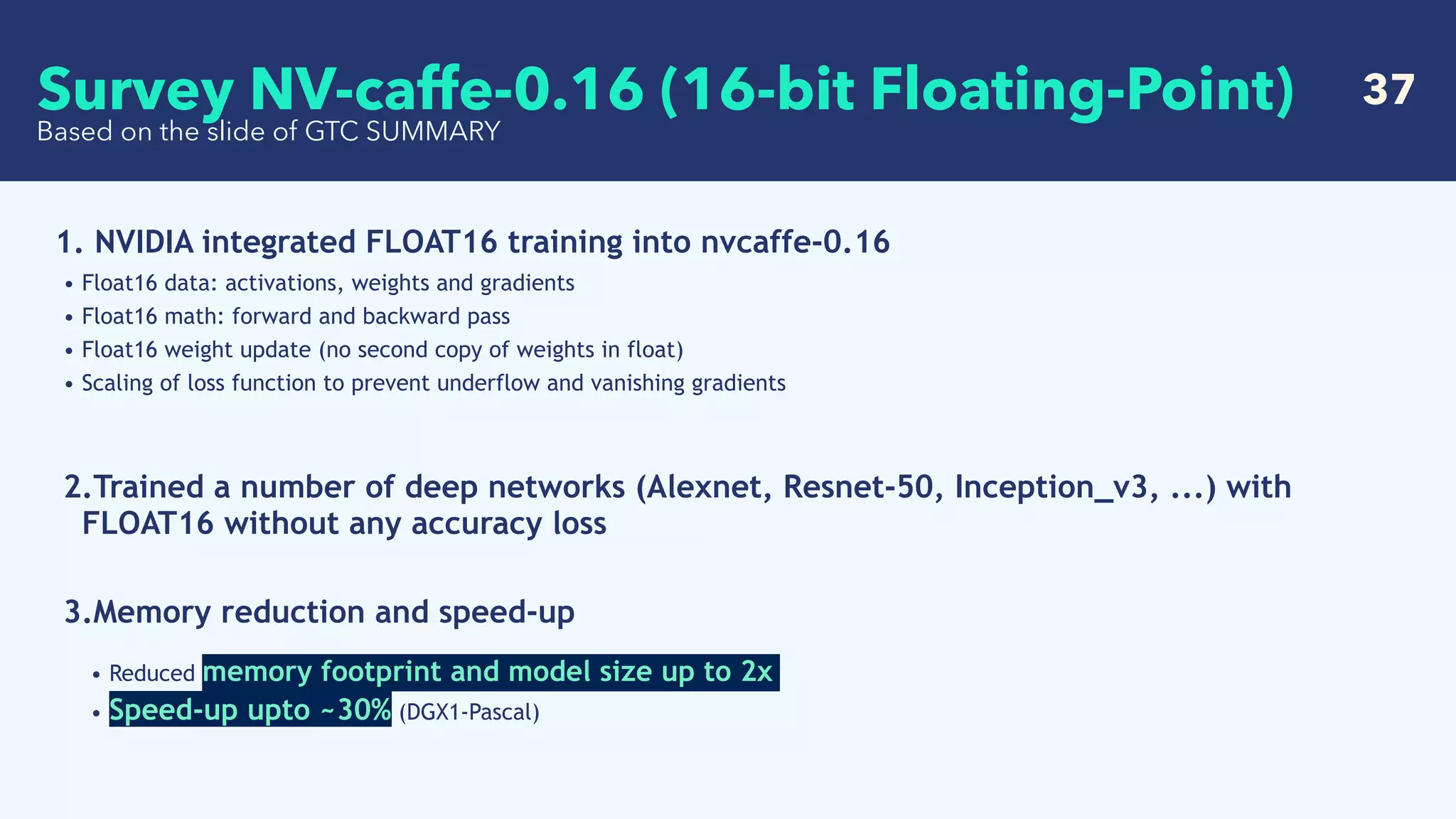

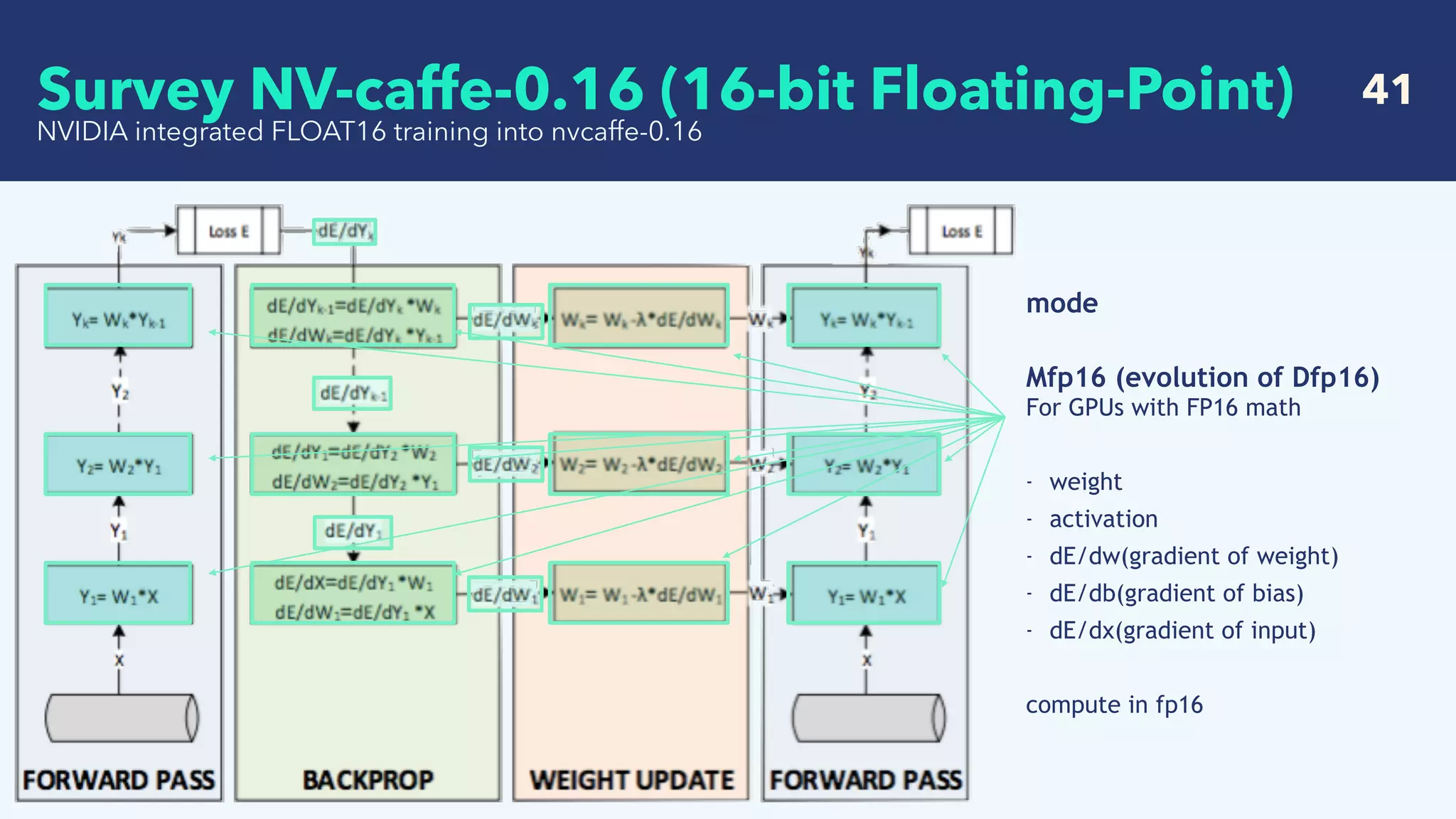

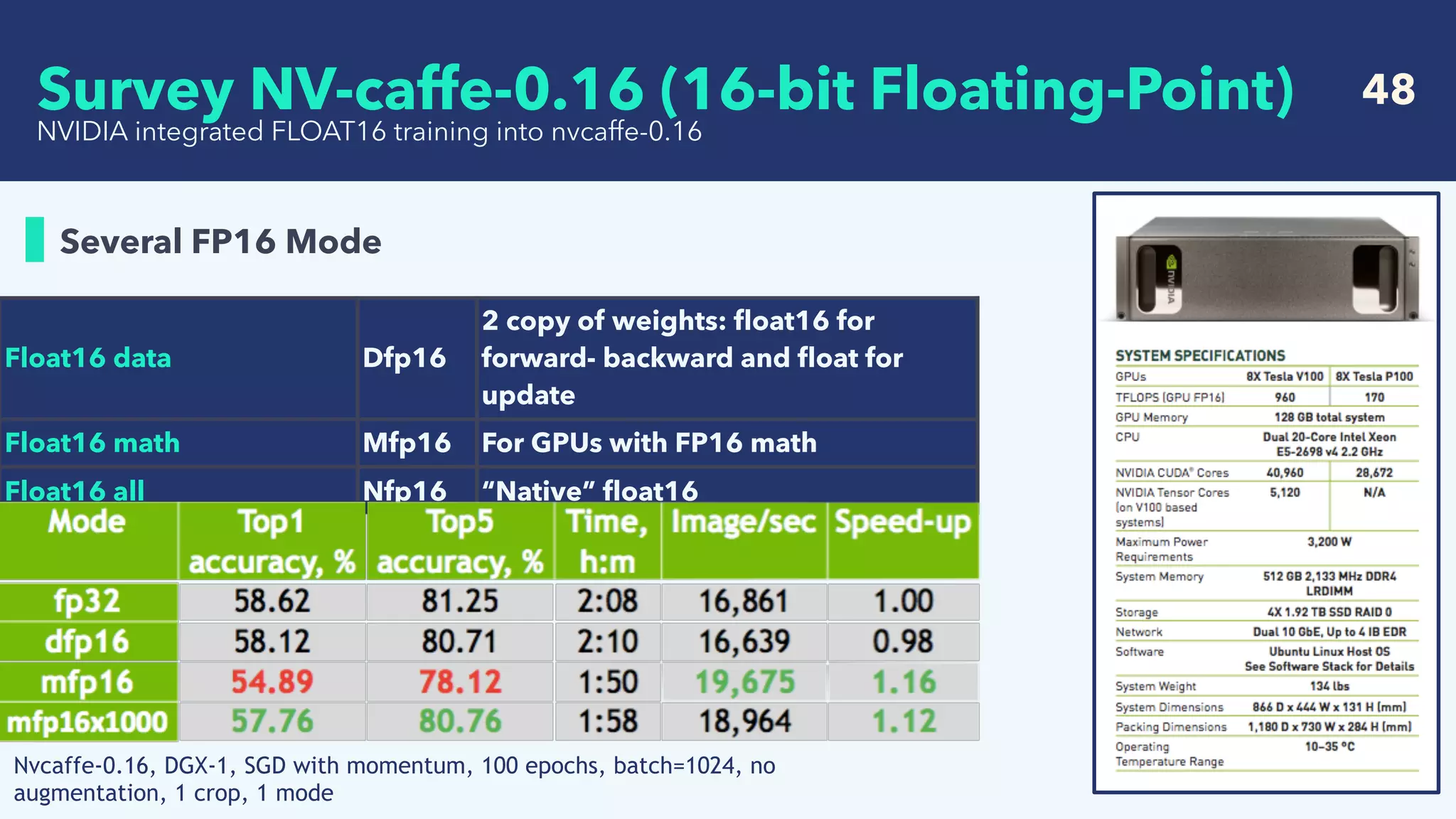

![49

NVIDIA integrated FLOAT16 training into nvcaffe-0.16

calculate in FP16

calculate in FP32 (for weight update)

[1] ΔW32(t) = half2float(Δw16(t))

[2] W32(t+1) = W32(t) - λ * ΔW32(t)

[3] W16(t+1) = float2half(W32(t+1))

Survey NV-caffe-0.16 (16-bit Floating-Point)](https://image.slidesharecdn.com/crestsurveylowprecision-180424085127/75/Survey-of-recent-deep-learning-with-low-precision-49-2048.jpg)
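Steps [1] to [3] on this slide can be sketched as follows. This is a plain-Python emulation, not nvcaffe code: `half2float` and `float2half` mirror the names on the slide but are implemented here with the standard-library `struct` formats `"e"` and `"f"`, and the FP16-only update is a hypothetical comparison case that does not appear on the slide. The learning rate and gradient are arbitrary illustrative values.

```python
import struct


def float2half(x: float) -> float:
    """Round to the nearest IEEE binary16 value (held in a Python float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_float32(x: float) -> float:
    """Round to the nearest IEEE binary32 value (held in a Python float)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def half2float(h: float) -> float:
    """Widening binary16 to binary32 is exact, so the value is unchanged."""
    return to_float32(h)


def update_with_fp32_master(w32: float, dw16: float, lr: float):
    dw32 = half2float(dw16)                              # step [1]
    w32_next = to_float32(w32 - to_float32(lr * dw32))   # step [2], FP32 math
    w16_next = float2half(w32_next)                      # step [3]
    return w32_next, w16_next


def update_fp16_only(w16: float, dw16: float, lr: float) -> float:
    # Comparison case (assumption, not on the slide): weights live in FP16 only.
    return float2half(w16 - float2half(lr * dw16))


lr = 2.0**-10
dw16 = float2half(0.125)
w32, w16, w16_only = 1.0, 1.0, 1.0
for _ in range(8):
    w32, w16 = update_with_fp32_master(w32, dw16, lr)
    w16_only = update_fp16_only(w16_only, dw16, lr)

print(w32, w16)   # 0.9990234375 0.9990234375
print(w16_only)   # 1.0
```

Each update of 2⁻¹³ is too small to change an FP16 weight near 1.0, so the FP16-only weight never moves, while the FP32 copy accumulates eight updates and the FP16 weight derived from it changes.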

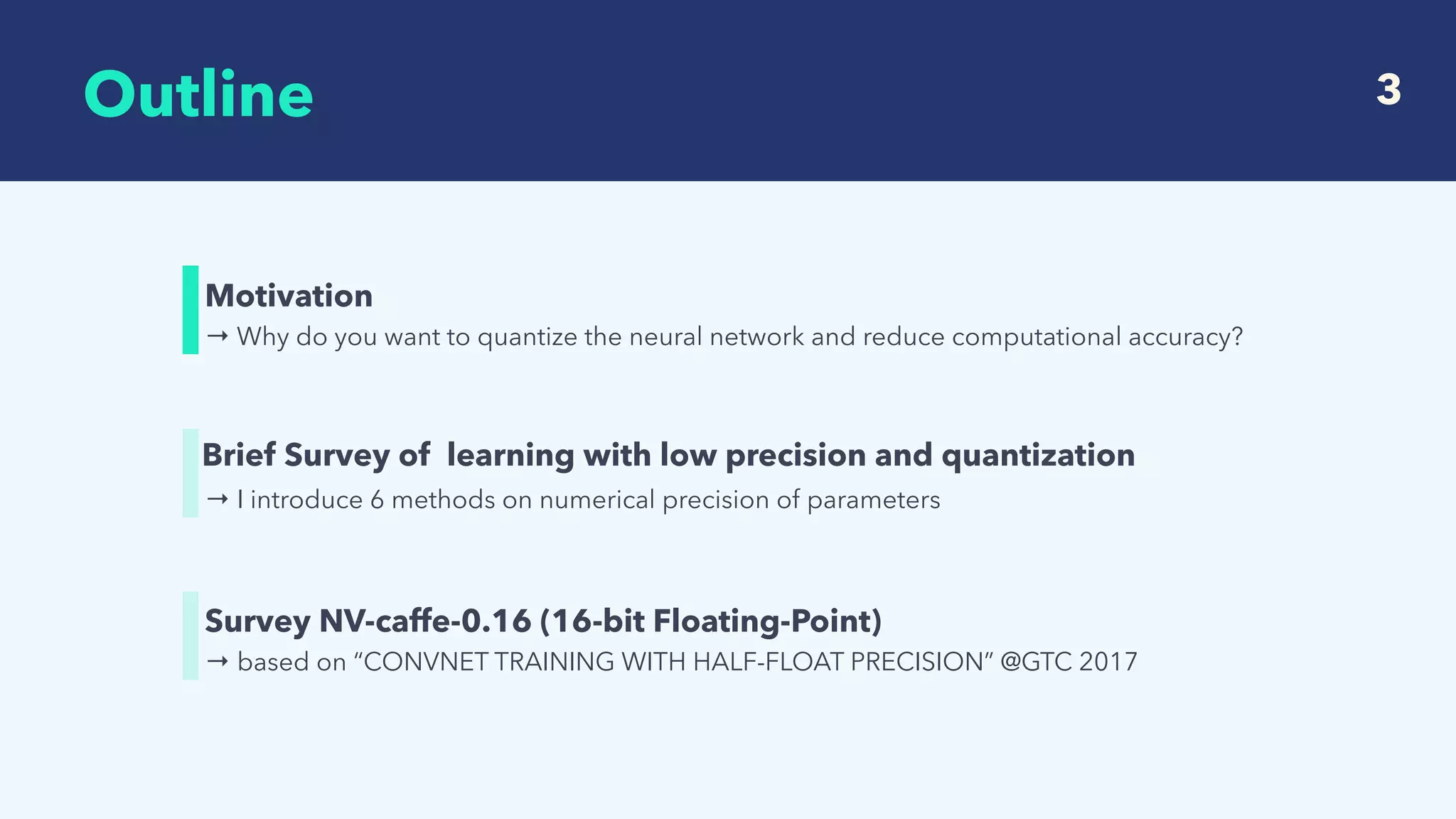

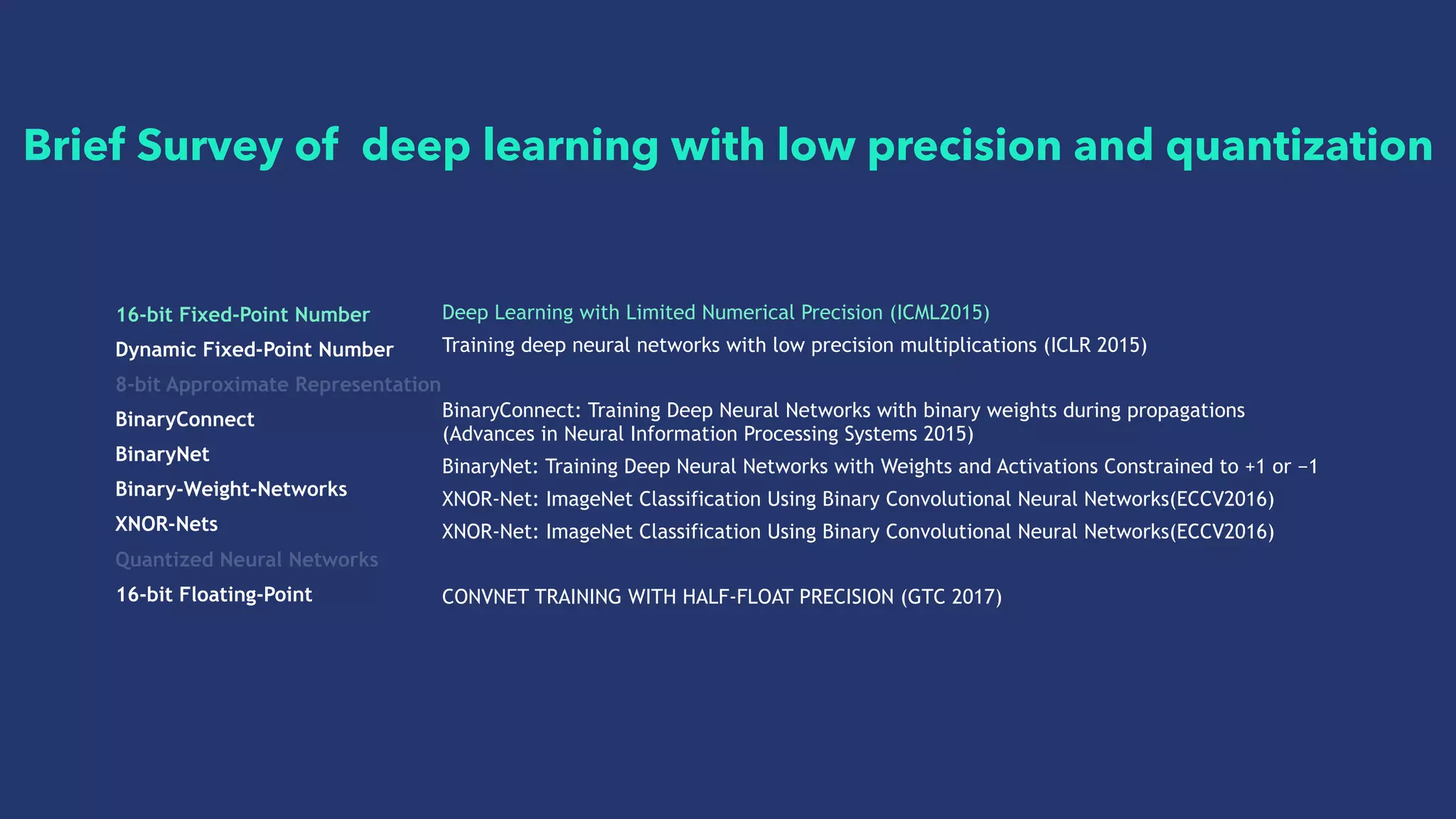

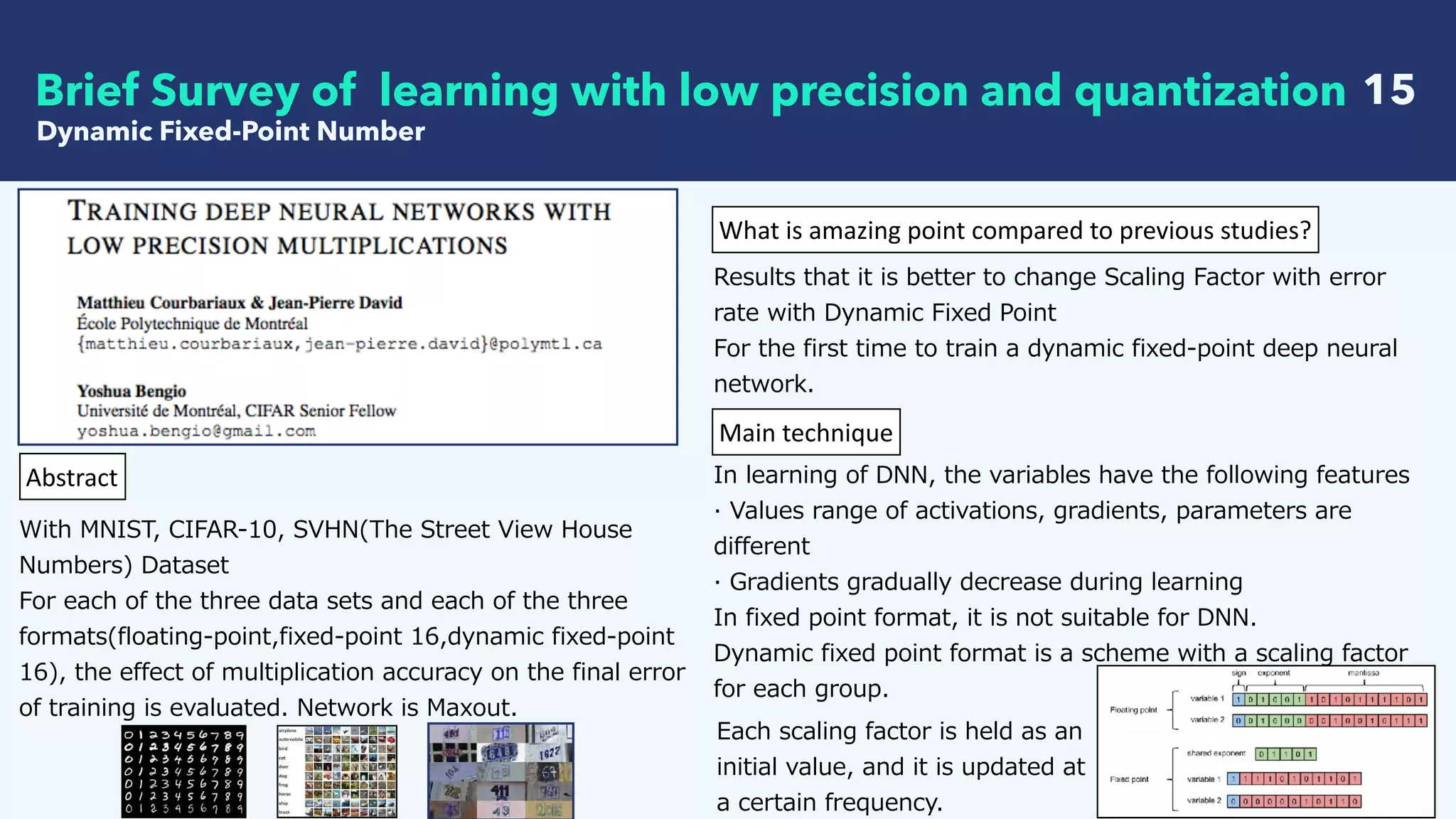

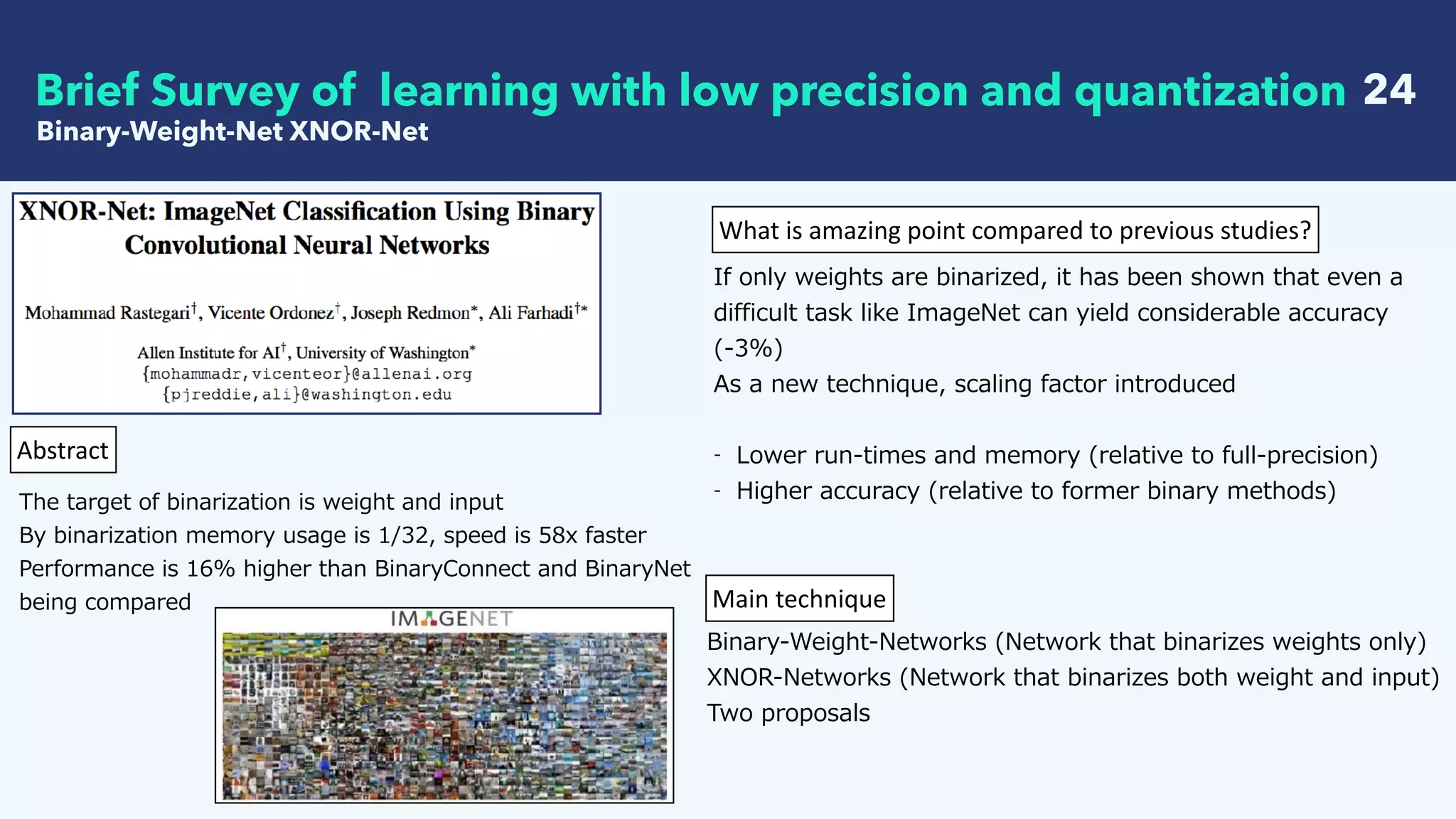

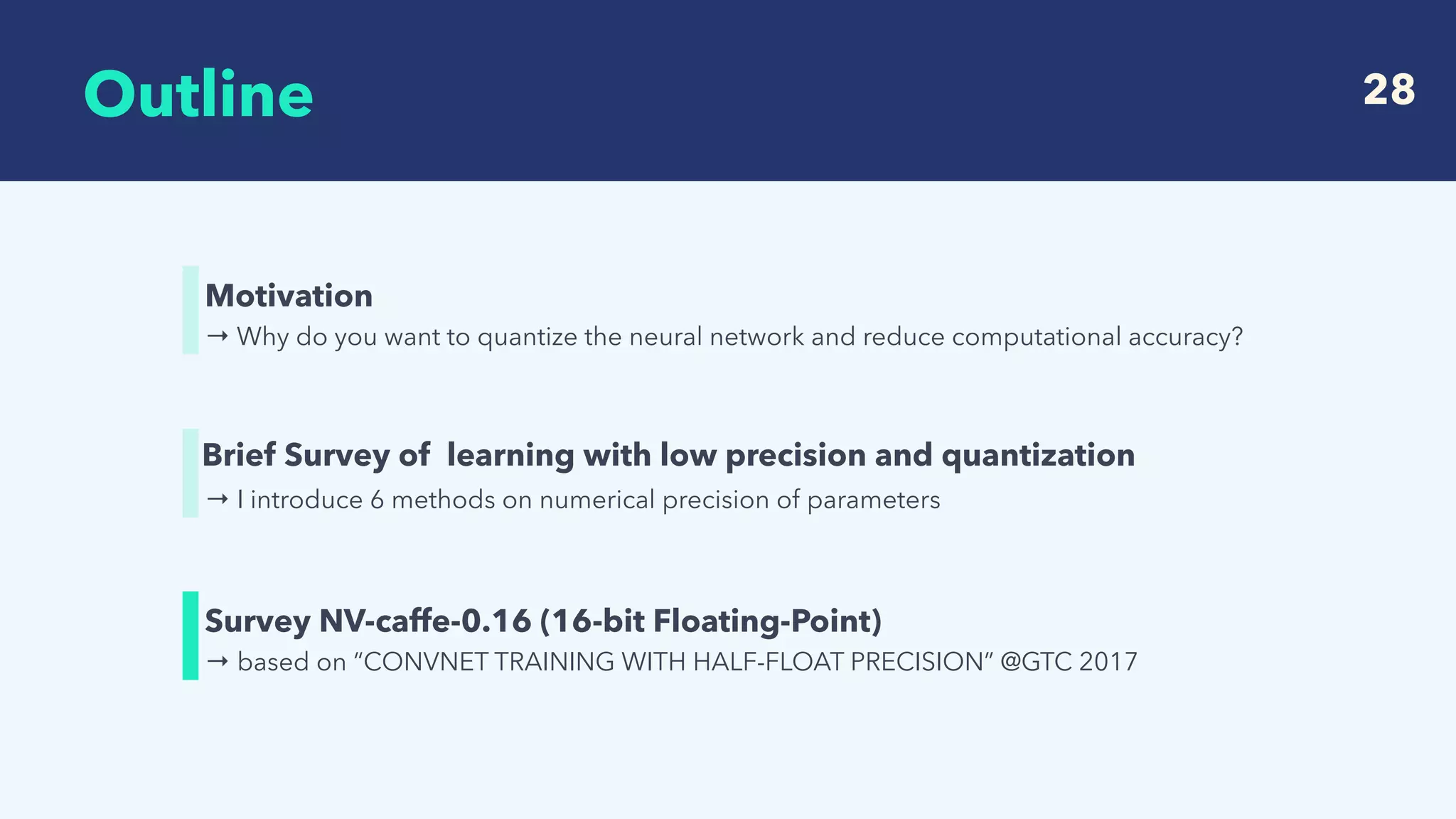

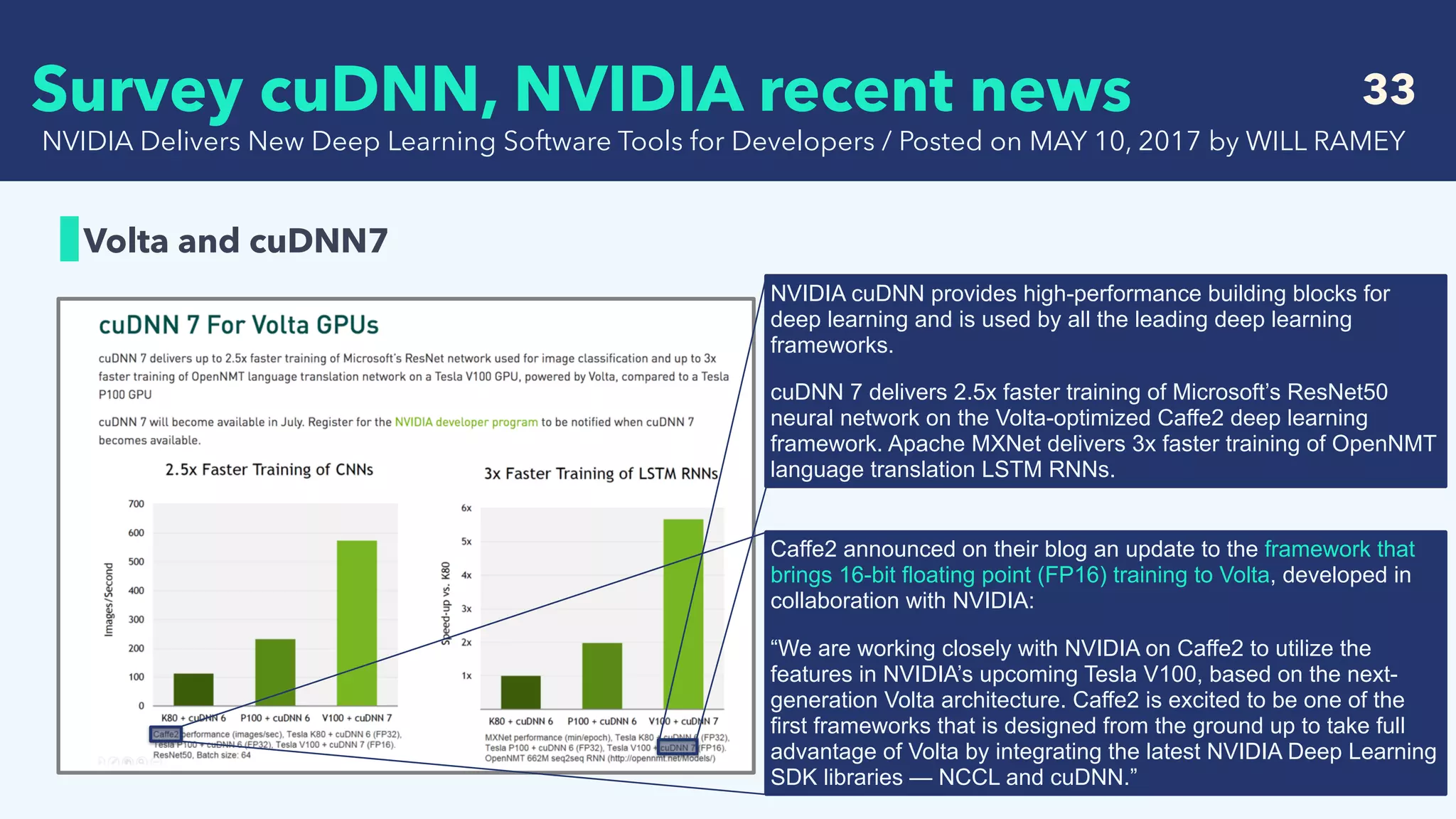

![50

NVIDIA integrated FLOAT16 training into nvcaffe-0.16

calculate in FP16

calculate in FP32 (for weight update)

[1] G16(t+1) = m * G16(t) + Δw16(t)

[2] W32(t+1) = half2float(W16(t)) - λ * half2float(G16(t+1))

[3] W16(t+1) = float2half(W32(t+1))

Survey NV-caffe-0.16 (16-bit Floating-Point)](https://image.slidesharecdn.com/crestsurveylowprecision-180424085127/75/Survey-of-recent-deep-learning-with-low-precision-50-2048.jpg)
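The momentum variant on this slide can be sketched in the same way. Two assumptions are made here: step [1] is read as the usual momentum recurrence, with G16(t) on the right-hand side, and each FP16 operation is rounded separately. The function name `momentum_step` and the numeric values are hypothetical; rounding is emulated with the standard-library `struct` formats `"e"` and `"f"`, not with nvcaffe or CUDA intrinsics.

```python
import struct


def float2half(x: float) -> float:
    """Round to the nearest IEEE binary16 value (held in a Python float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_float32(x: float) -> float:
    """Round to the nearest IEEE binary32 value (held in a Python float)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def half2float(h: float) -> float:
    """Widening binary16 to binary32 is exact, so the value is unchanged."""
    return to_float32(h)


def momentum_step(w16: float, g16: float, dw16: float, lr: float, m: float):
    # [1] momentum accumulation in FP16, rounding after each operation
    g16_next = float2half(float2half(m * g16) + dw16)
    # [2] weight update in FP32, computed from the widened FP16 operands
    w32_next = to_float32(half2float(w16) - to_float32(lr * half2float(g16_next)))
    # [3] the new weight is stored back in FP16
    w16_next = float2half(w32_next)
    return w16_next, g16_next


w16, g16 = 1.0, 0.0
for dw16 in (0.25, 0.25):
    w16, g16 = momentum_step(w16, g16, dw16, lr=0.5, m=0.5)

print(w16, g16)   # 0.6875 0.375
```

Unlike the previous scheme, no FP32 weight persists between steps in this sketch: W32 is rebuilt from W16 on every update, so only the subtraction itself benefits from the wider format.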