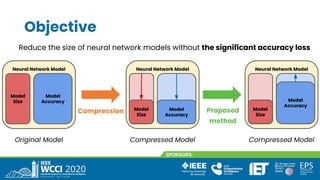

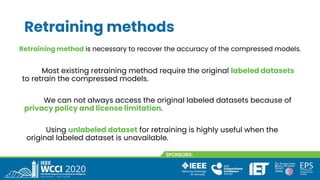

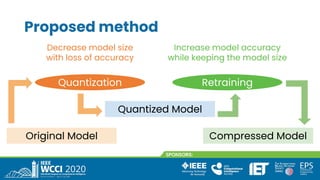

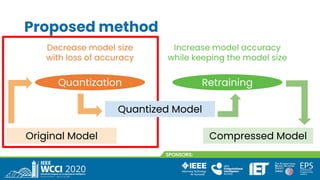

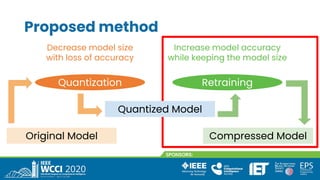

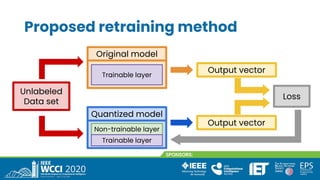

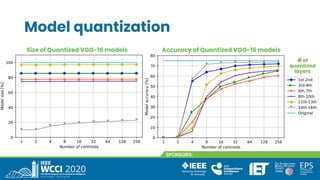

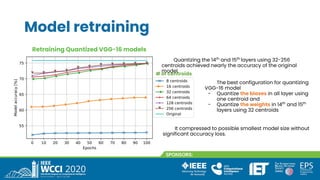

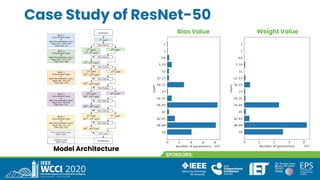

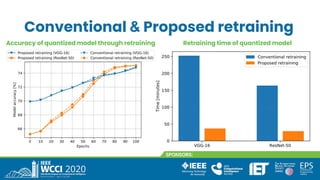

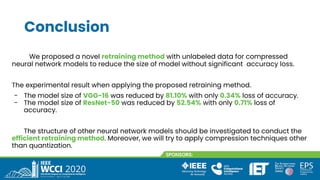

The slides present a retraining method for quantized neural network models that uses unlabeled data, so that model size can be reduced without significant accuracy loss. In the reported experiments, the VGG-16 model's size was reduced by 81.10% with a 0.34% accuracy loss, and the ResNet-50 model's size was reduced by 52.54% with a 0.71% accuracy loss. The study emphasizes that such retraining methods matter when the original labeled dataset is unavailable, and it suggests further exploration of compression techniques.
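To put the reported size reductions in more familiar terms, the short sketch below converts each percentage into the fraction of the original size that remains and the equivalent compression ratio. The function names are illustrative and do not come from the slides; only the two reduction percentages are taken from the summary above.

```python
def remaining_fraction(reduction_percent: float) -> float:
    """Fraction of the original model size left after the given reduction."""
    return 1.0 - reduction_percent / 100.0


def compression_ratio(reduction_percent: float) -> float:
    """How many times smaller the compressed model is than the original."""
    return 1.0 / remaining_fraction(reduction_percent)


# Size reductions reported in the summary (percent of the original size removed).
reported = {"VGG-16": 81.10, "ResNet-50": 52.54}

for name, reduction in reported.items():
    print(
        f"{name}: {remaining_fraction(reduction):.2%} of the original size, "
        f"about {compression_ratio(reduction):.2f}x smaller"
    )
```

Under this reading, the quantized VGG-16 keeps roughly 19% of its original size (about a 5.3x reduction), while the quantized ResNet-50 keeps roughly 47% (about a 2.1x reduction).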

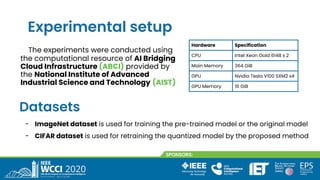

![Experimental setup

Hardware Specification

CPU Intel Xeon Gold 6148 x 2

Main Memory 364 GiB

GPU Nvidia Tesla V100 SXM2 x4

GPU Memory 16 GiB

Datasets

- ImageNet dataset is used for training the pre-trained model or the original model

- CIFAR dataset is used for retraining the quantized model by the proposed method

Hardware specification[*]

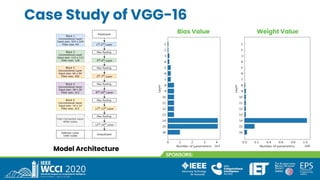

Targeted models

1. VGG-16 model

2. ResNet-50 model

[*] The hardware specification of a compute node in AI Bridging Cloud Infrastructure (ABCI)

provided by National Institute of Advanced Industrial Science and Technology (AIST)](https://image.slidesharecdn.com/retrainingquantizedneuralnetworkmodelswithunlabeleddata-220502100920/85/Retraining-Quantized-Neural-Network-Models-with-Unlabeled-Data-pdf-20-320.jpg)