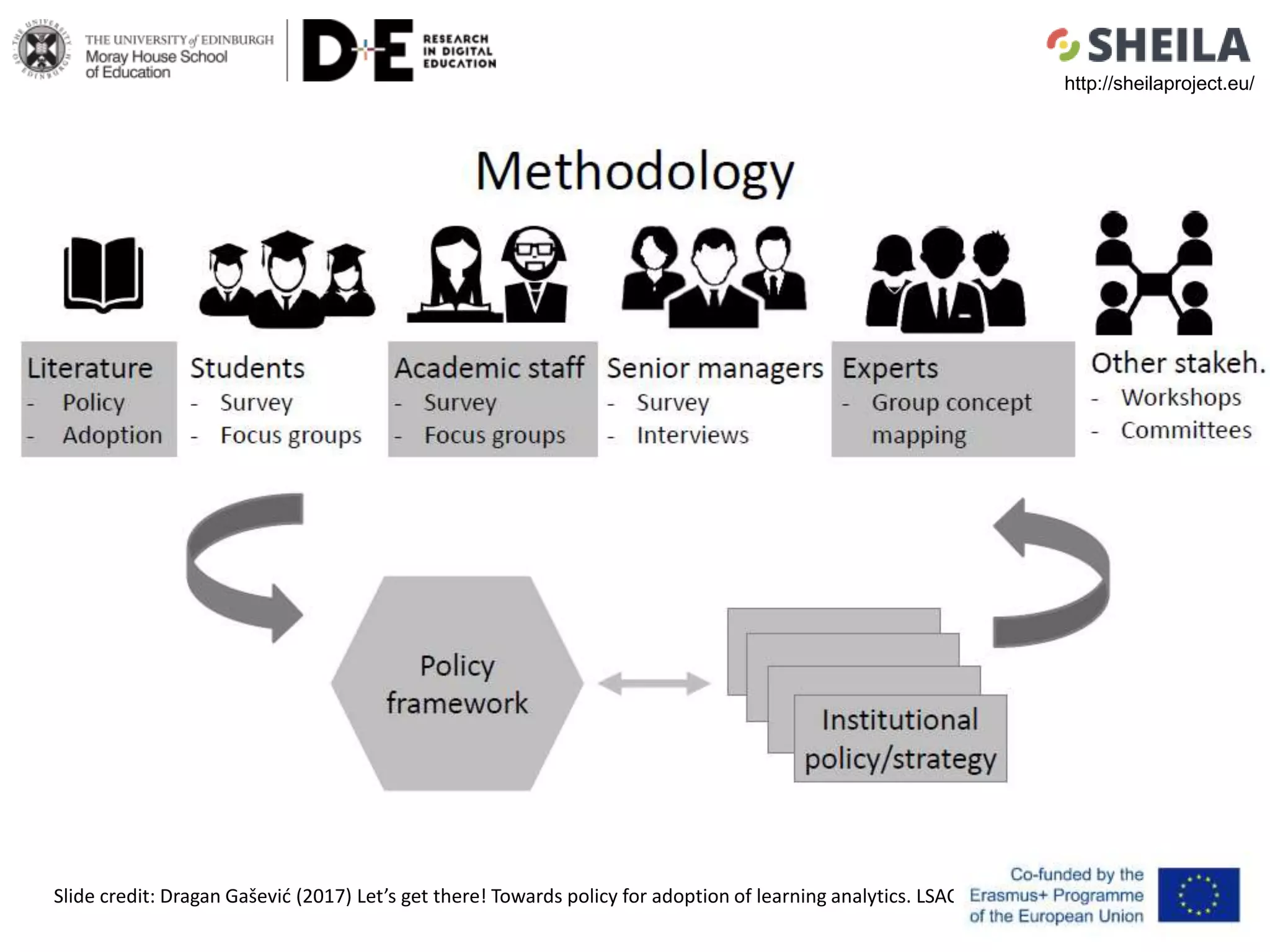

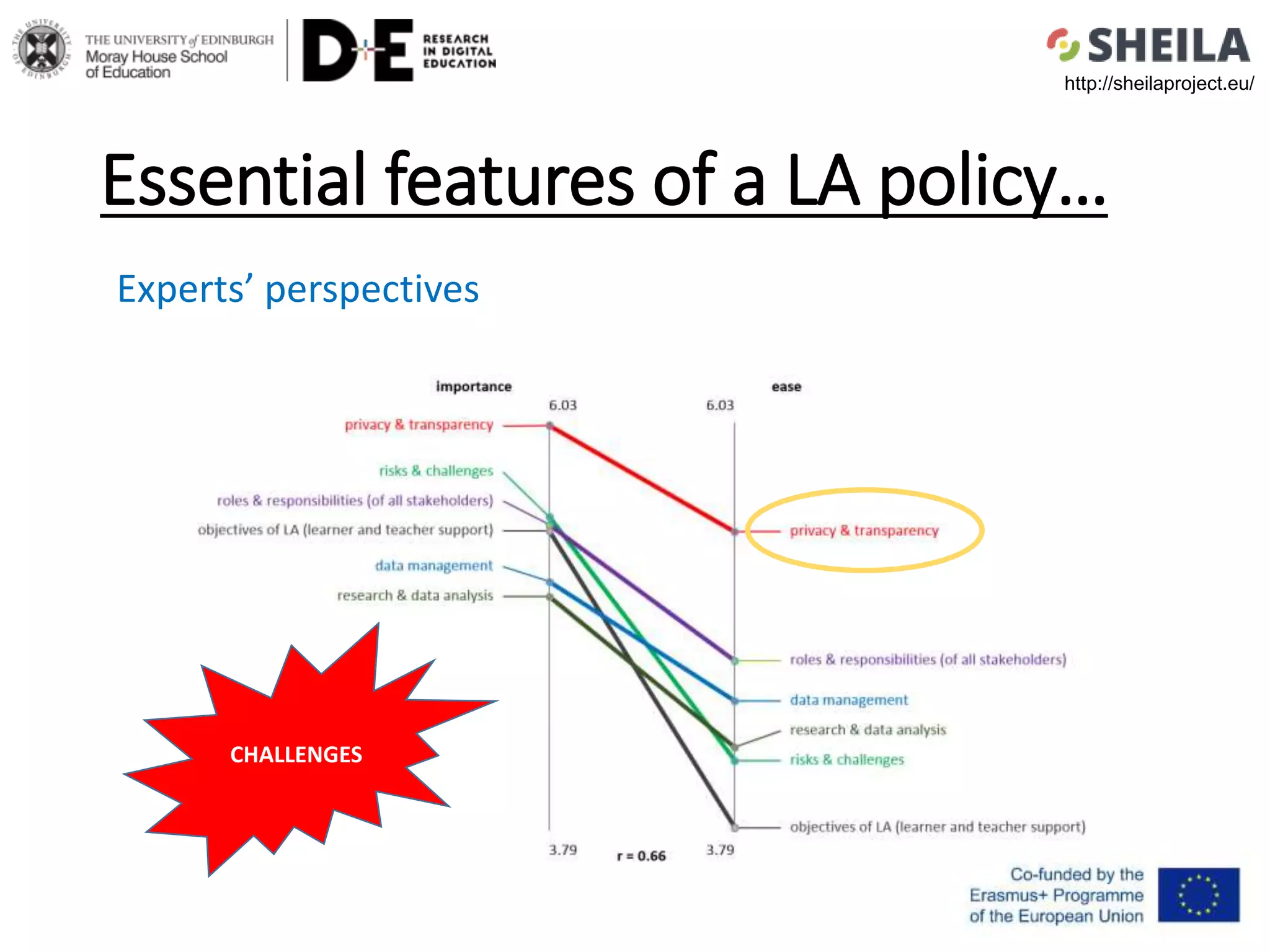

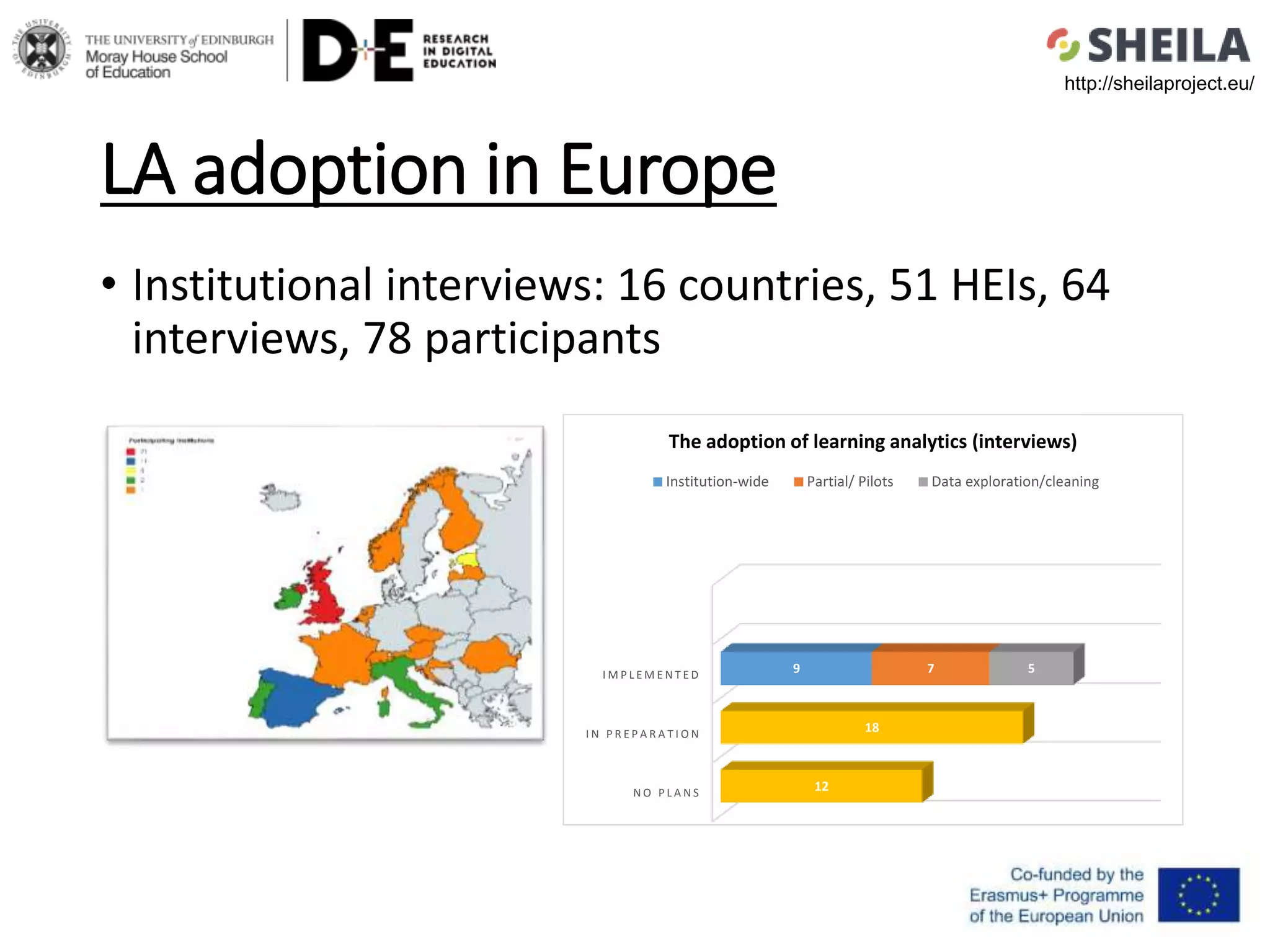

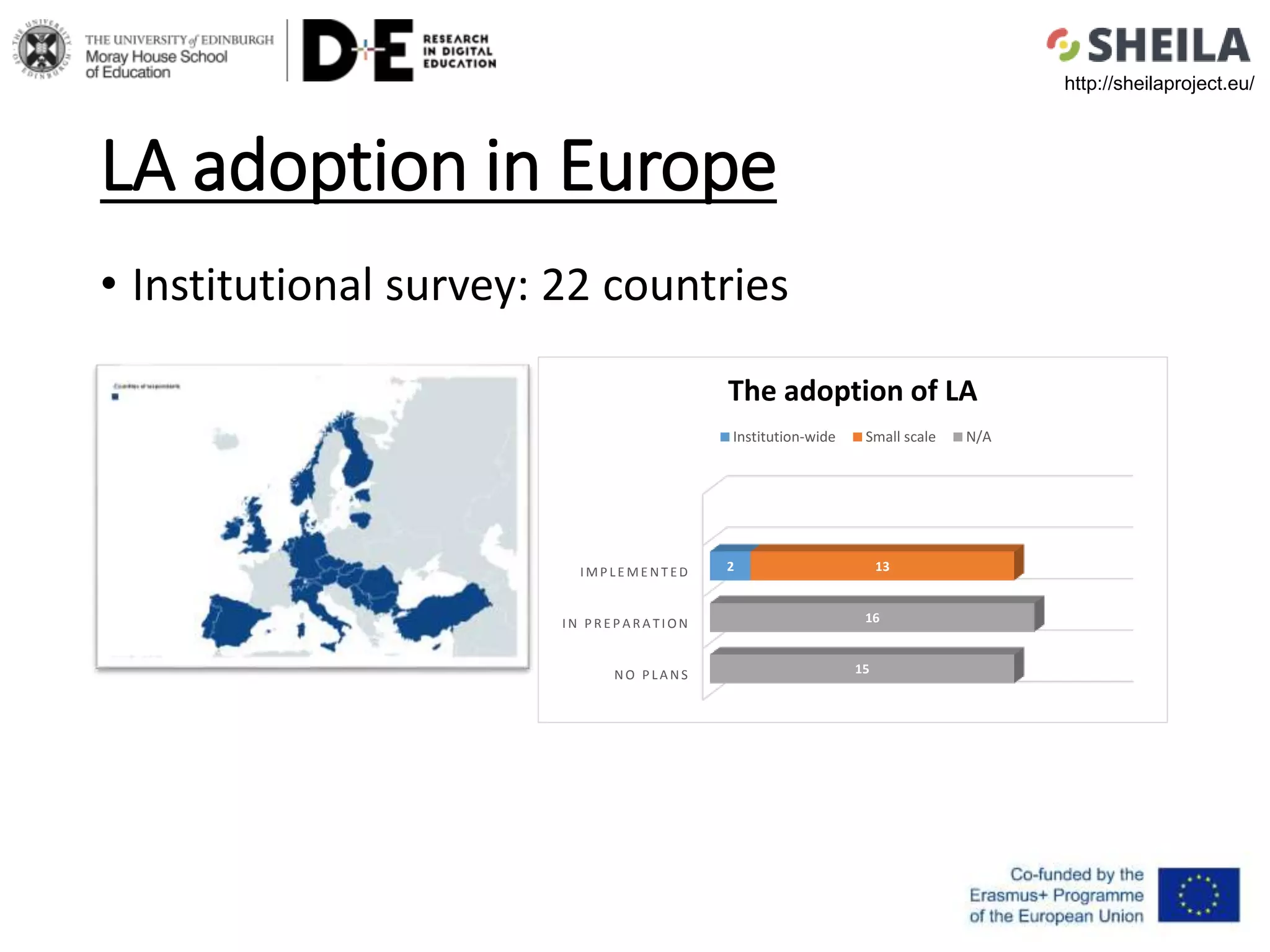

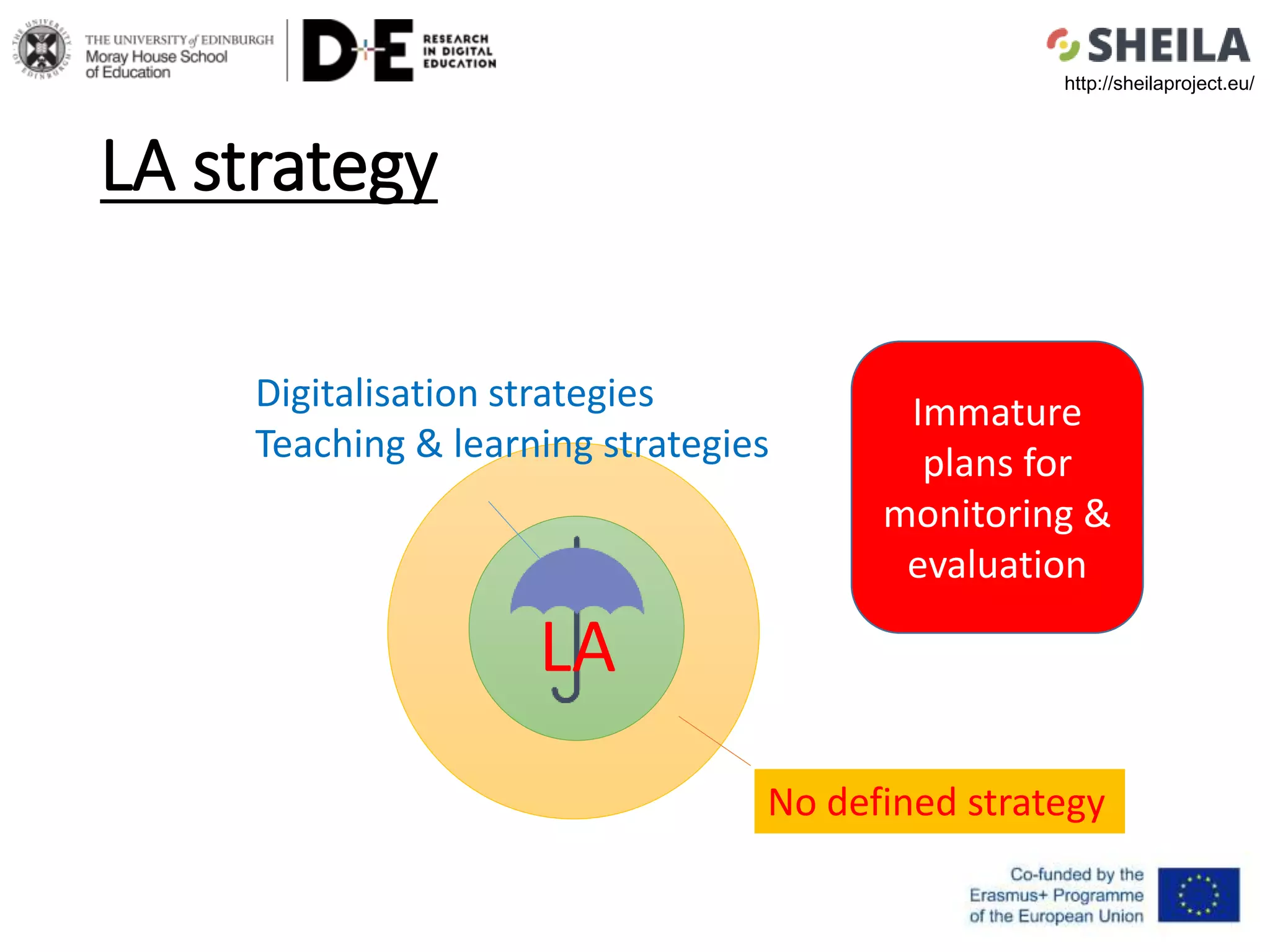

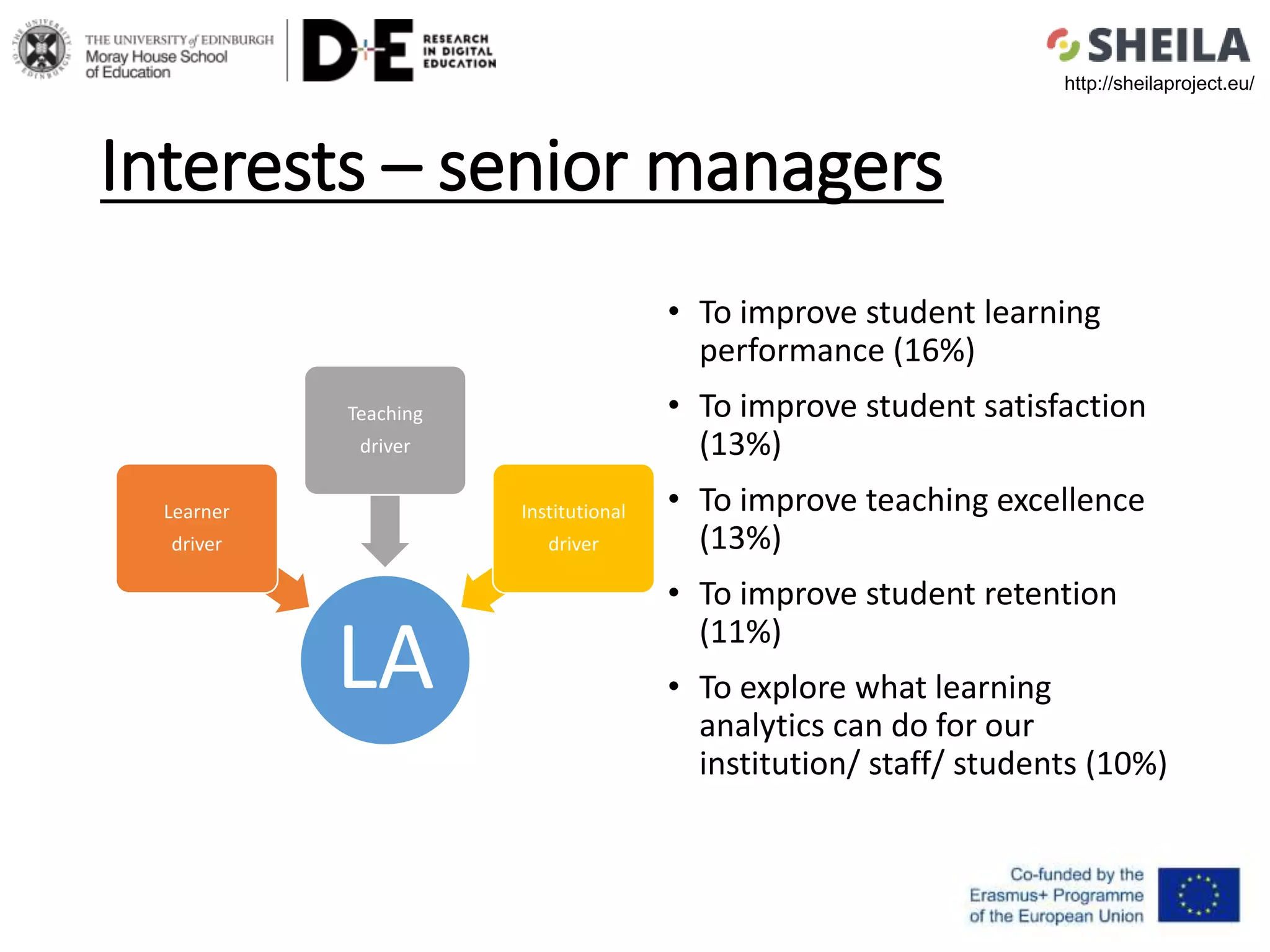

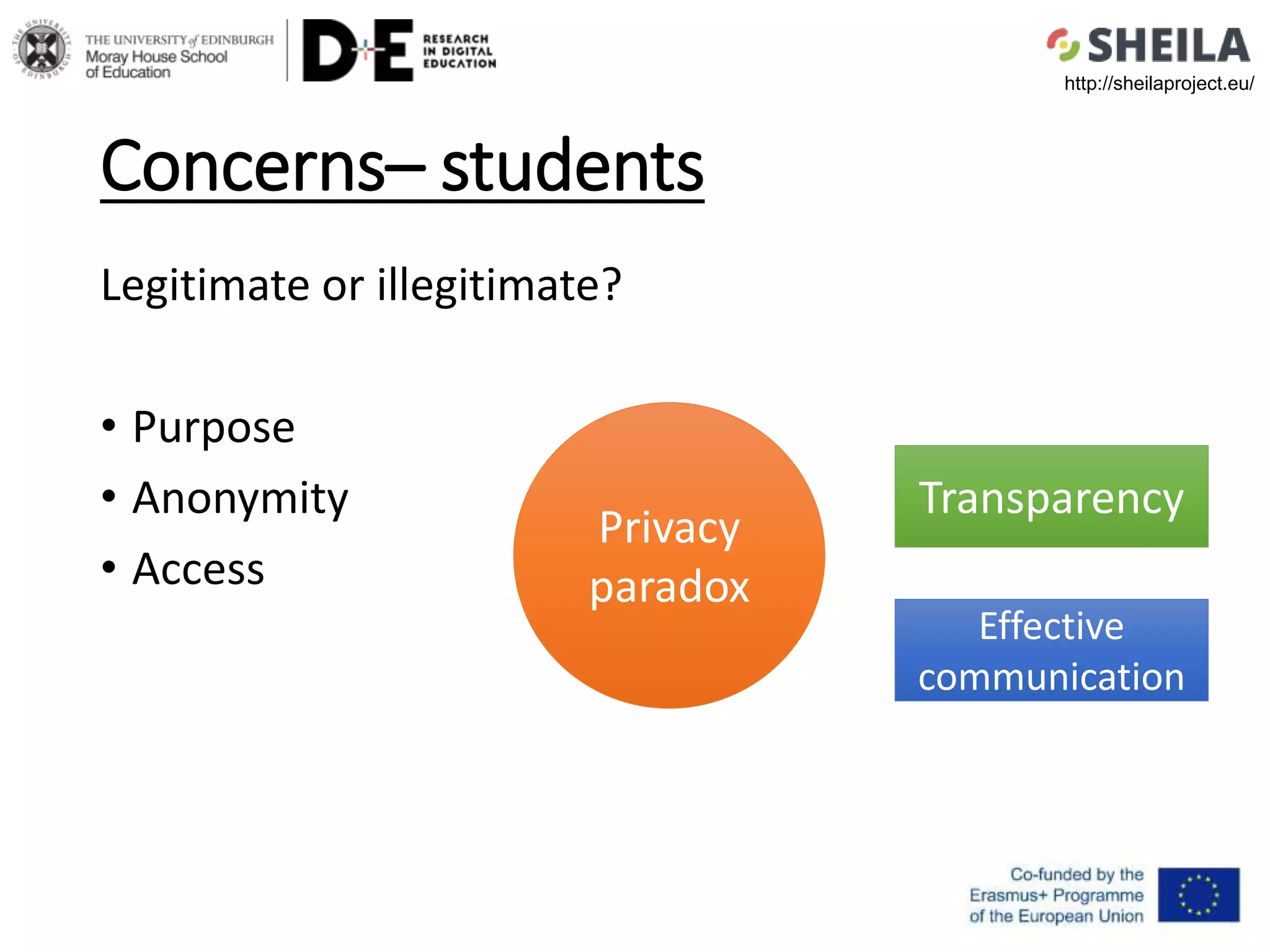

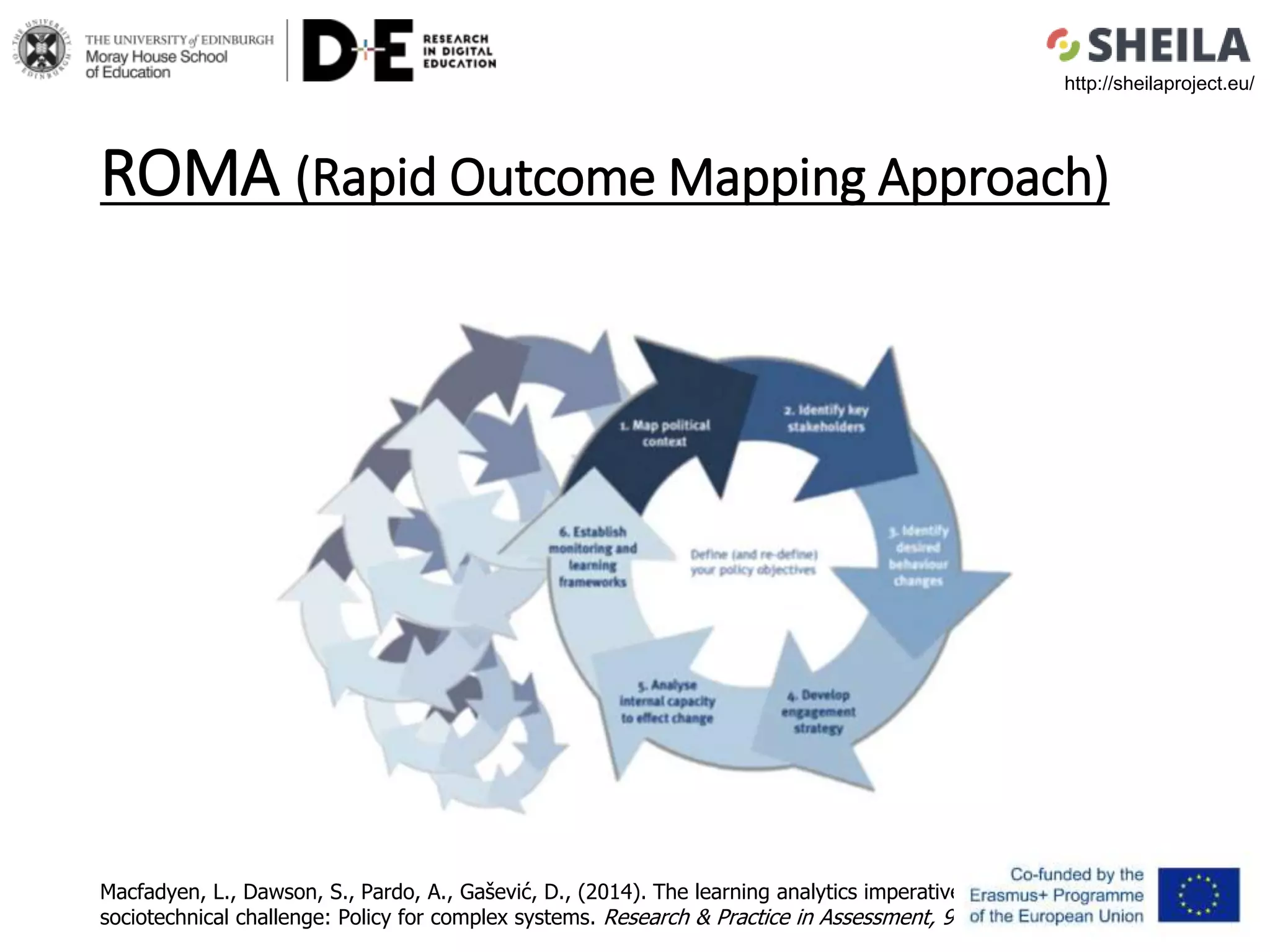

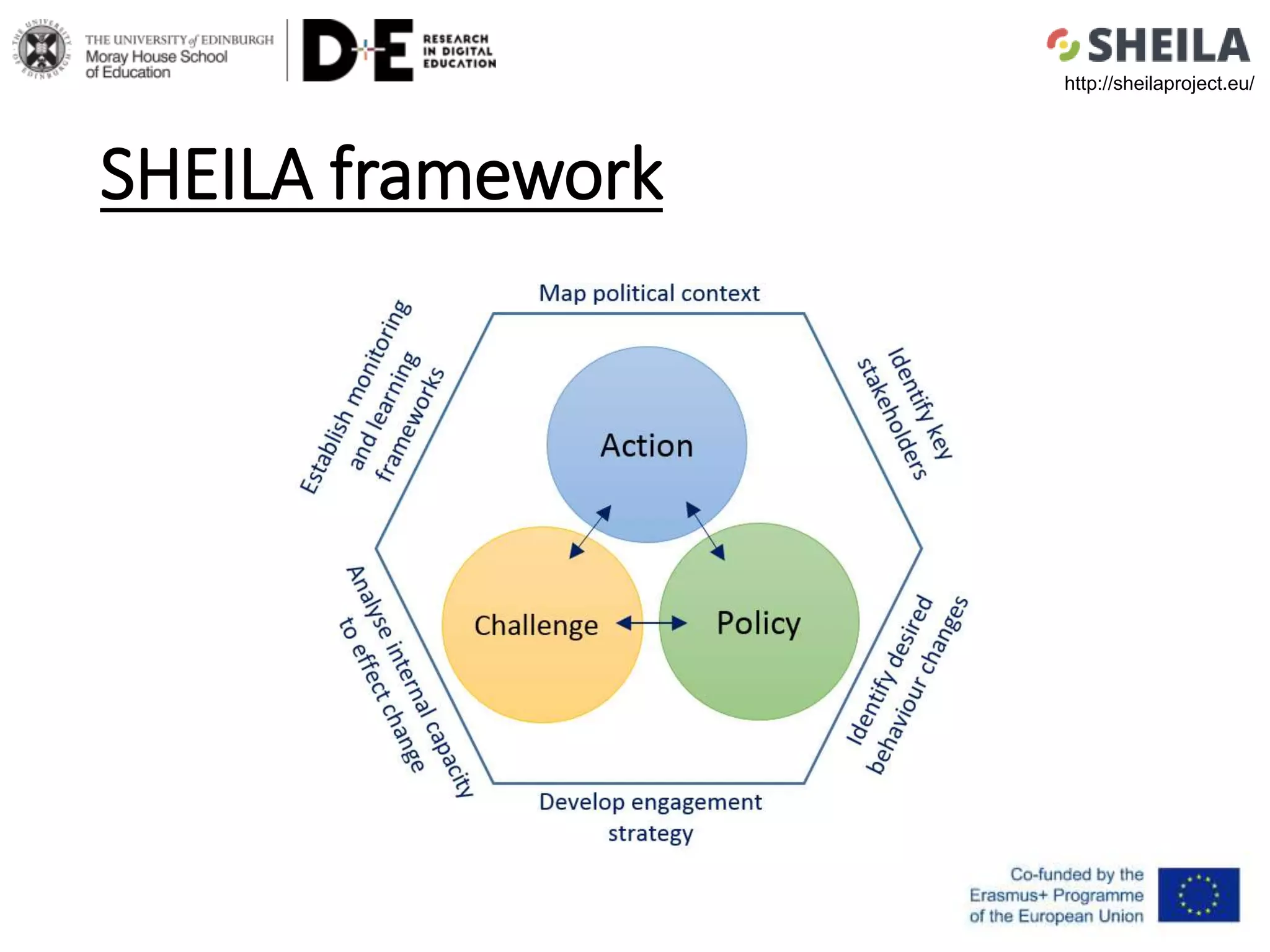

The document discusses the integration of learning analytics into higher education, outlining the challenges of adoption and the policy frameworks that support it. Key obstacles include securing leadership engagement, involving stakeholders, providing data literacy training, and addressing privacy and ethical concerns. It draws on interviews conducted at institutions across Europe, which illustrate the diverse interests and concerns that different stakeholders bring to the implementation of learning analytics.