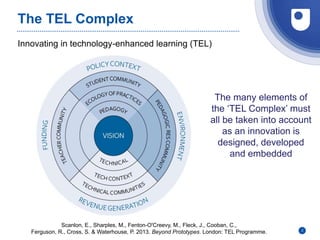

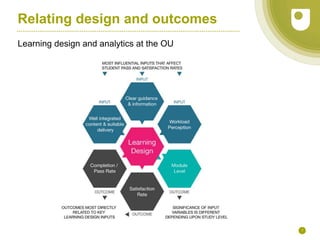

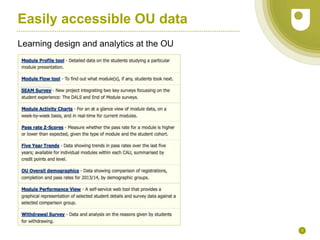

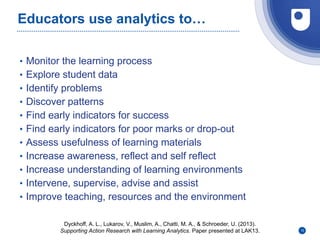

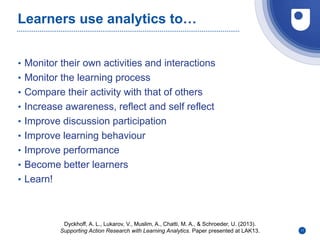

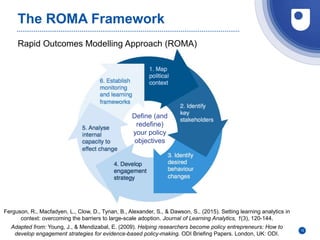

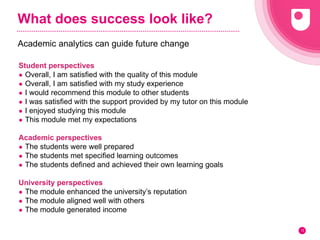

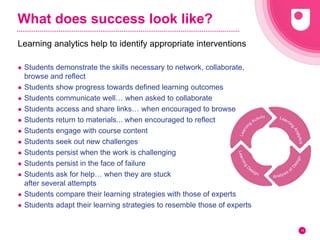

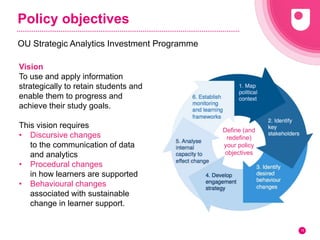

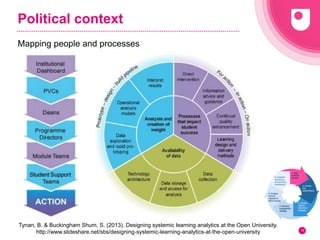

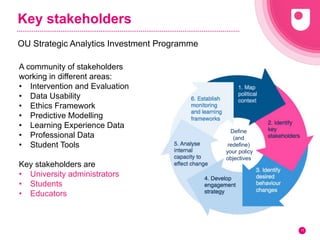

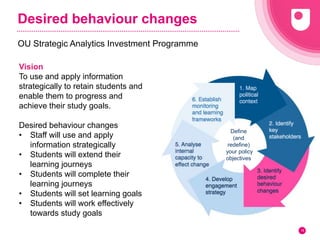

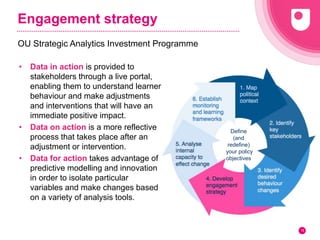

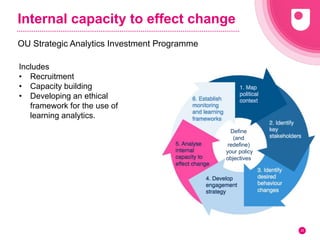

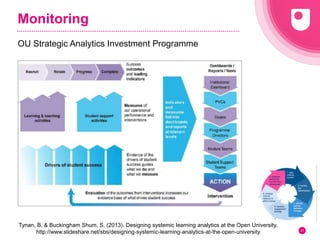

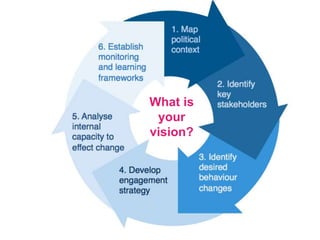

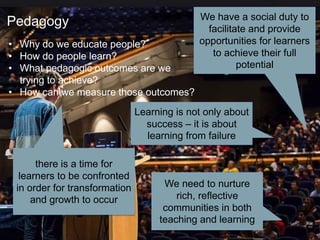

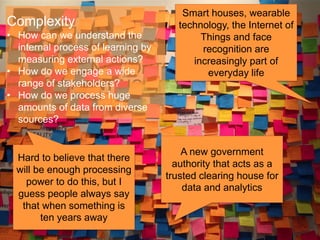

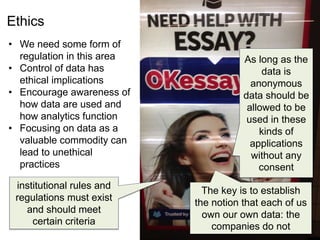

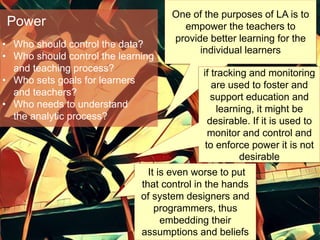

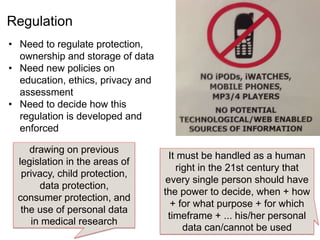

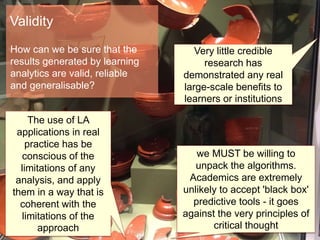

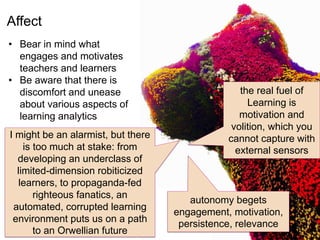

The document discusses the development of learning analytics within educational institutions, particularly at The Open University. It focuses on analytics capabilities that support teaching and learning and improve student retention. It highlights the need for strategic investment in data science, attention to the ethical use of student data, and engagement with stakeholders to foster effective learning environments. It also emphasizes the potential impact of analytics on educational policy and the need for a clear vision to guide future innovation in technology-enhanced learning.