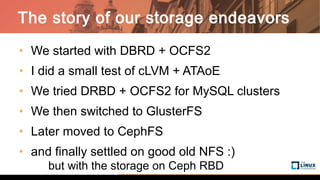

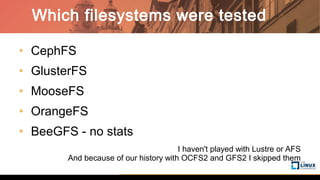

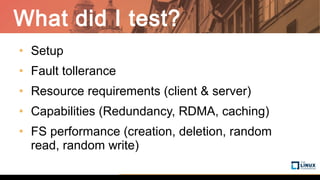

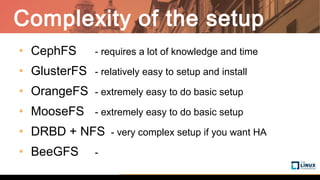

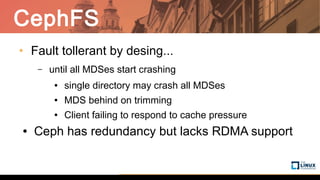

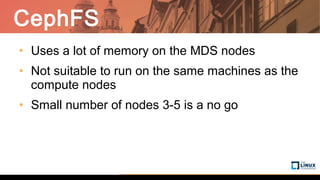

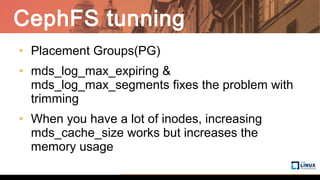

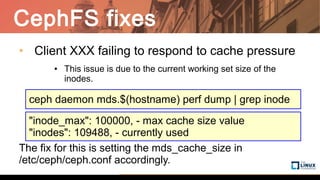

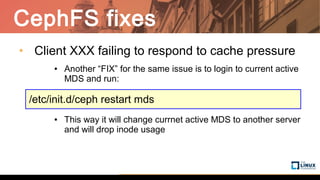

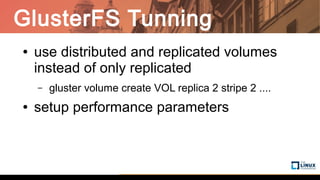

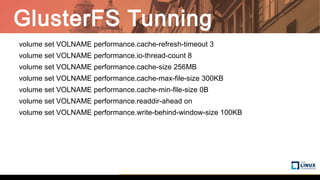

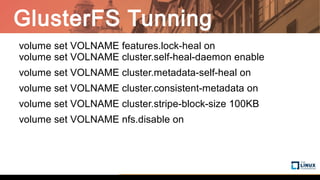

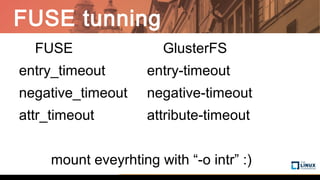

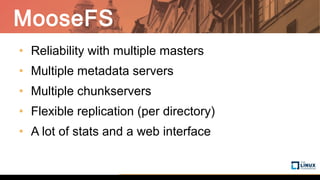

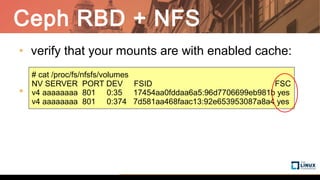

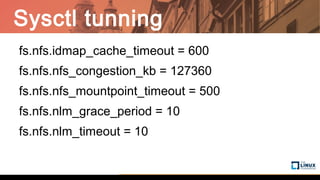

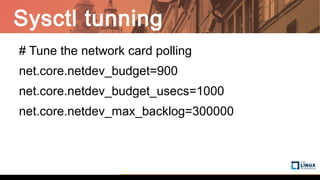

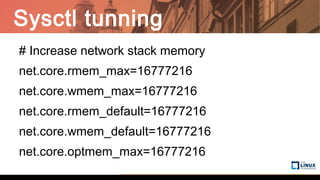

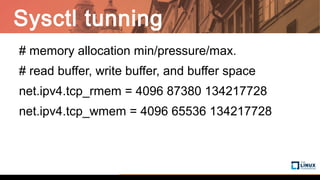

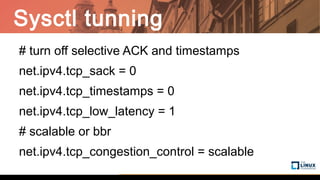

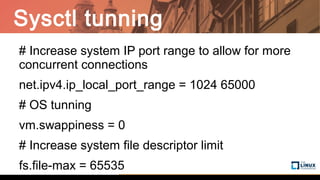

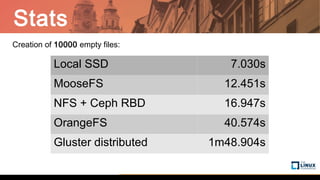

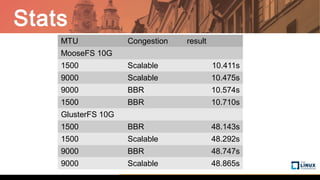

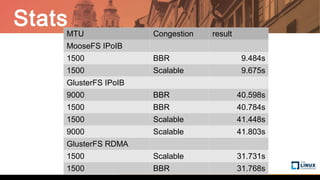

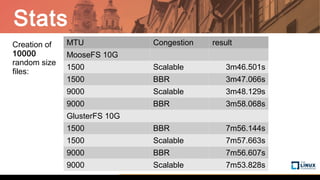

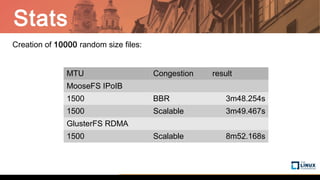

This document summarizes Marian Marinov's testing of, and experience with, distributed filesystems at his company, SiteGround. He tested CephFS, GlusterFS, MooseFS, OrangeFS, and BeeGFS. CephFS required a lot of resources but lacked redundancy. GlusterFS was relatively easy to set up but had high CPU usage. MooseFS and OrangeFS were also easy to set up. Ultimately, SiteGround settled on Ceph RBD with NFS and caching, for performance and simplicity. In file-creation performance tests, MooseFS and NFS + Ceph RBD outperformed OrangeFS and GlusterFS. Tuning settings such as MTU, TCP congestion control, and caching helped optimize performance.
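
The summary does not say how the file-creation tests were run. As a minimal sketch of what such a test might look like, the Python snippet below times the creation of many empty files in a directory. The function name `time_file_creation`, the file-name pattern, and the default count are assumptions made for illustration and are not taken from the talk.

```python
import os
import tempfile
import time


def time_file_creation(directory: str, count: int = 1000) -> float:
    """Create `count` empty files in `directory`; return elapsed seconds.

    Hypothetical helper: the talk's actual benchmark method is not known.
    """
    start = time.perf_counter()
    for i in range(count):
        path = os.path.join(directory, f"bench_{i}.tmp")
        # Open and close immediately, so the loop mostly exercises
        # metadata operations (create/close) rather than data throughput.
        with open(path, "w"):
            pass
    return time.perf_counter() - start


if __name__ == "__main__":
    # A temporary local directory is used here; to compare filesystems,
    # point `directory` at a mount of each one instead.
    with tempfile.TemporaryDirectory() as workdir:
        seconds = time_file_creation(workdir, 1000)
        print(f"created 1000 files in {seconds:.3f}s")
```

Running the same function against a mount point of each filesystem gives a rough relative comparison. Results will vary with client-side caching and network settings, so several runs per mount are advisable.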