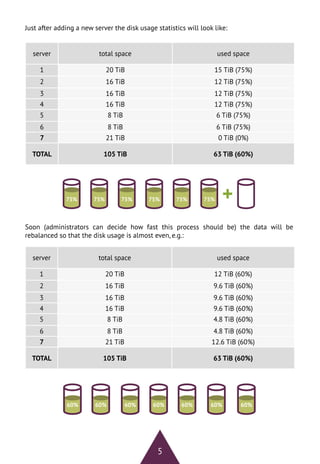

LizardFS v4.0 was recently released. It is a distributed, scalable, fault-tolerant, and highly available file system that combines disk space from many servers into a single namespace. Key features include snapshots, QoS, data replication with standard and XOR replicas, geo-replication, metadata replication, LTO library support, quotas, POSIX compliance, trash functionality, and monitoring tools. Its architecture separates metadata servers from chunk servers, and it scales out by adding new servers without downtime. Because it provides enterprise-class features on commodity hardware, it suits workloads such as archives, virtual machine storage, and backups.
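To make the idea behind XOR replicas more concrete, here is a minimal Python sketch of XOR parity in general: data is split into N parts, one extra parity part is computed as the XOR of all of them, and any single lost part can be rebuilt from the survivors. This illustrates the general technique only, not LizardFS's actual implementation; the function names (`make_xor_parts`, `recover_part`), the zero-padding scheme, and the sample payload are all assumptions made for the example.

```python
from functools import reduce
from typing import List, Optional, Tuple


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_xor_parts(data: bytes, n: int) -> Tuple[List[bytes], bytes]:
    """Split data into n equal-size parts (zero-padded) plus one parity part.

    Hypothetical helper for illustration; not a LizardFS API.
    """
    part_len = -(-len(data) // n)  # ceiling division
    padded = data.ljust(part_len * n, b"\x00")
    parts = [padded[i * part_len:(i + 1) * part_len] for i in range(n)]
    parity = reduce(xor_bytes, parts)
    return parts, parity


def recover_part(parts: List[Optional[bytes]], parity: bytes) -> bytes:
    """Rebuild the single missing part (marked None) from survivors and parity."""
    survivors = [p for p in parts if p is not None]
    if len(survivors) != len(parts) - 1:
        raise ValueError("exactly one part must be missing")
    # XOR-ing the parity with every surviving part cancels them out,
    # leaving only the missing part.
    return reduce(xor_bytes, survivors, parity)


if __name__ == "__main__":
    parts, parity = make_xor_parts(b"LizardFS chunk payload", 3)
    damaged = [parts[0], None, parts[2]]  # simulate losing one server's part
    rebuilt = recover_part(damaged, parity)
    print(rebuilt == parts[1])
```

With N data parts and one parity part, the scheme stores (N+1)/N times the original data, compared with 2x for keeping two full copies, at the cost of tolerating only one lost part per group.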