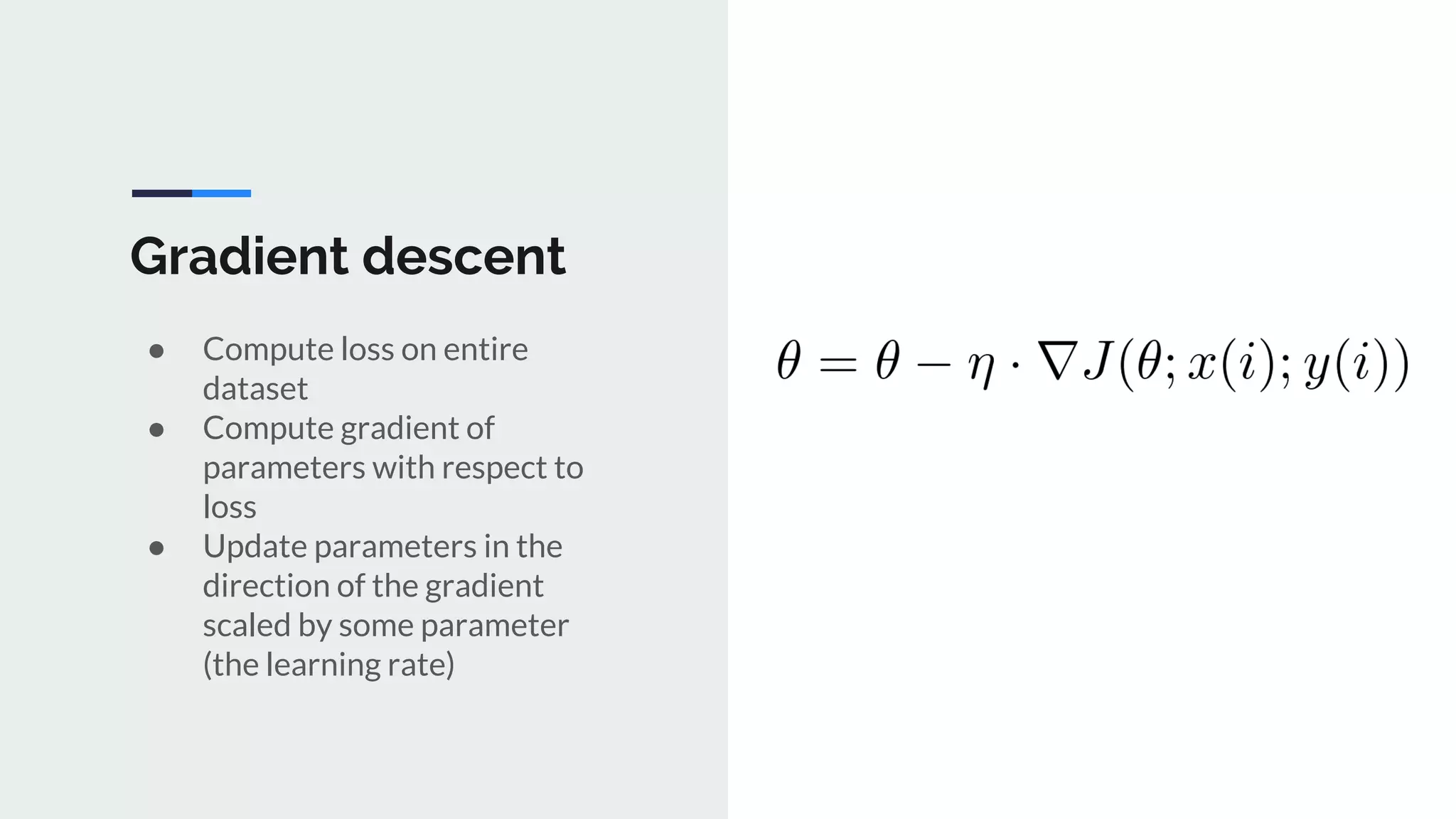

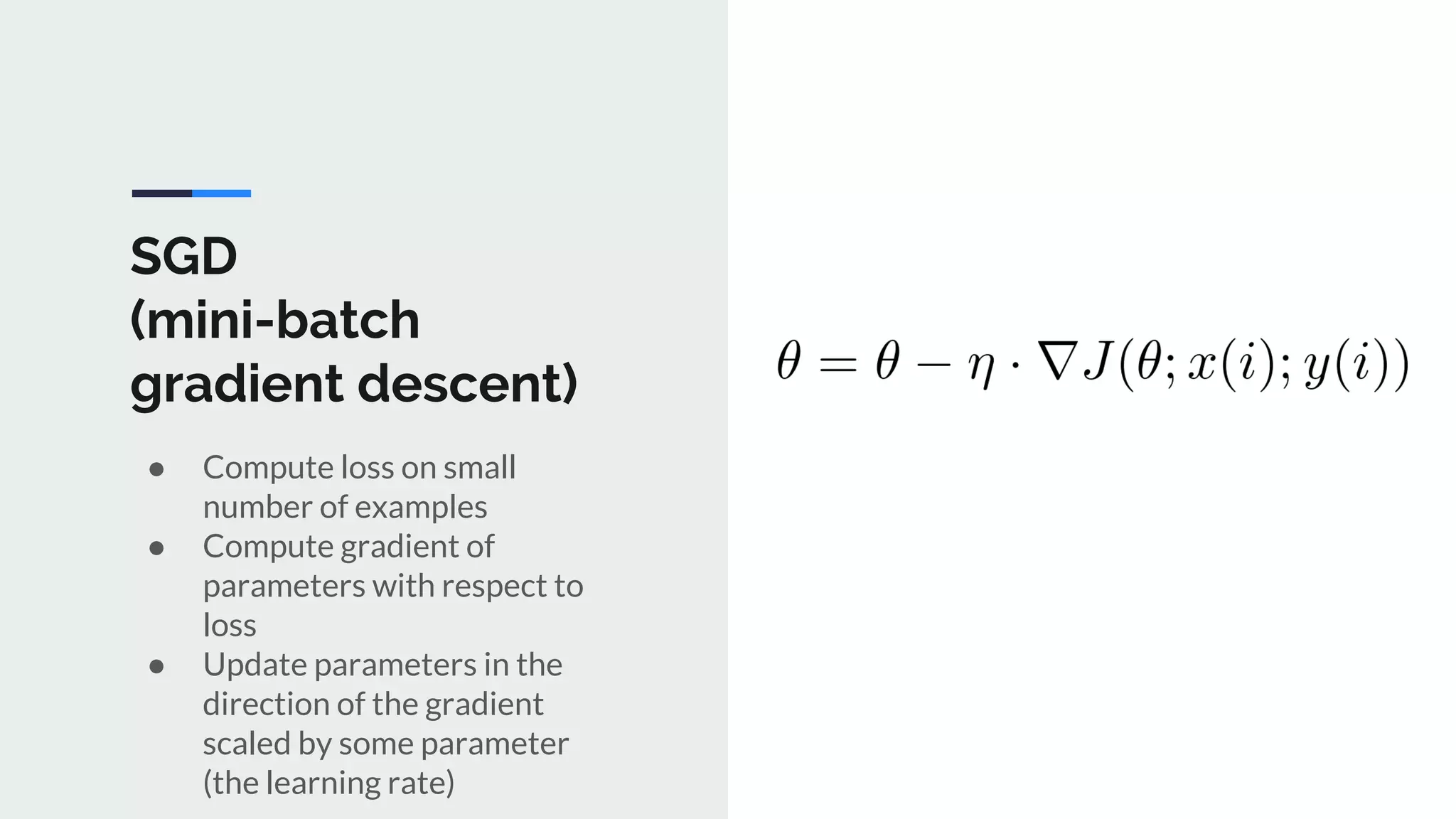

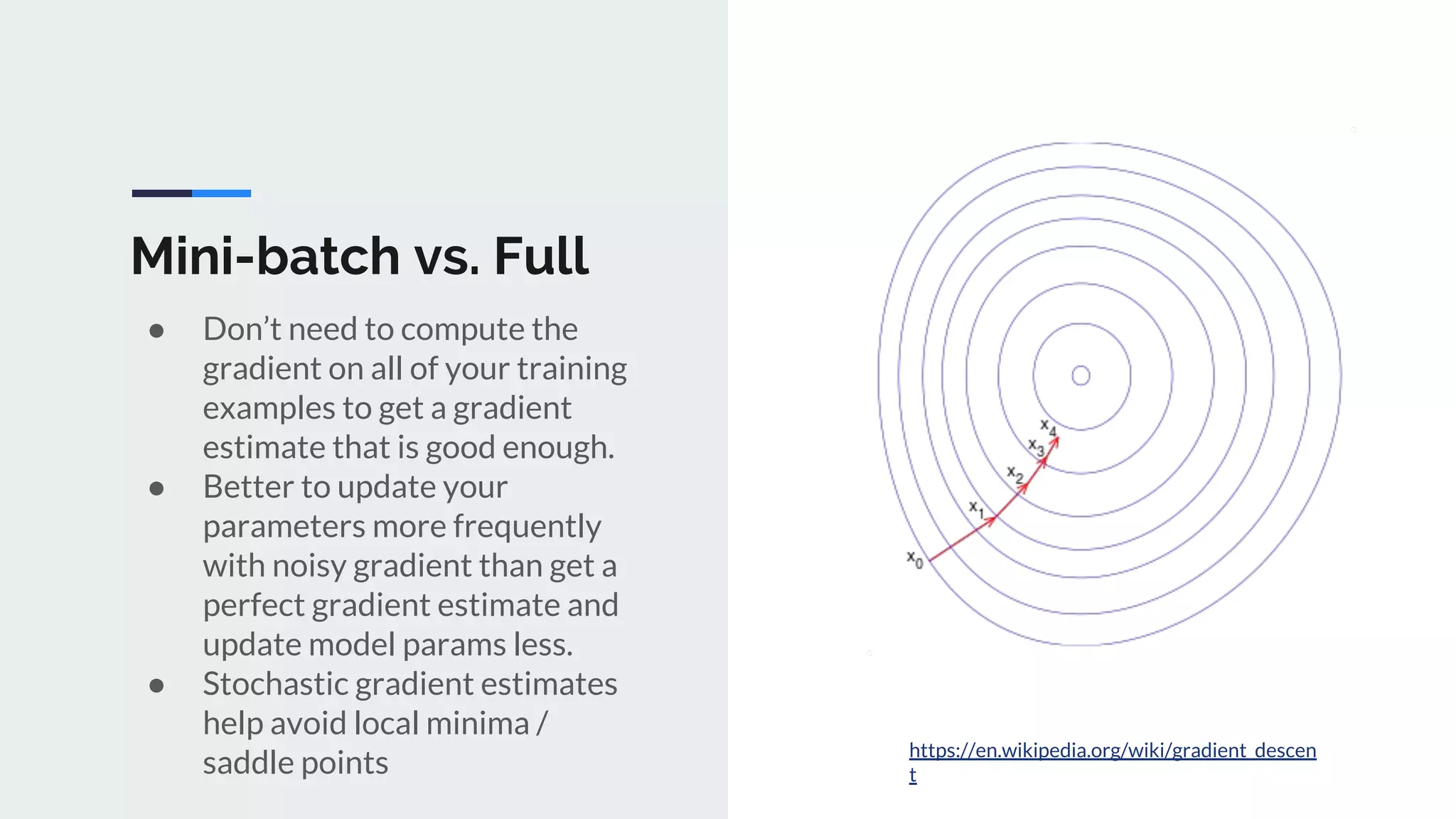

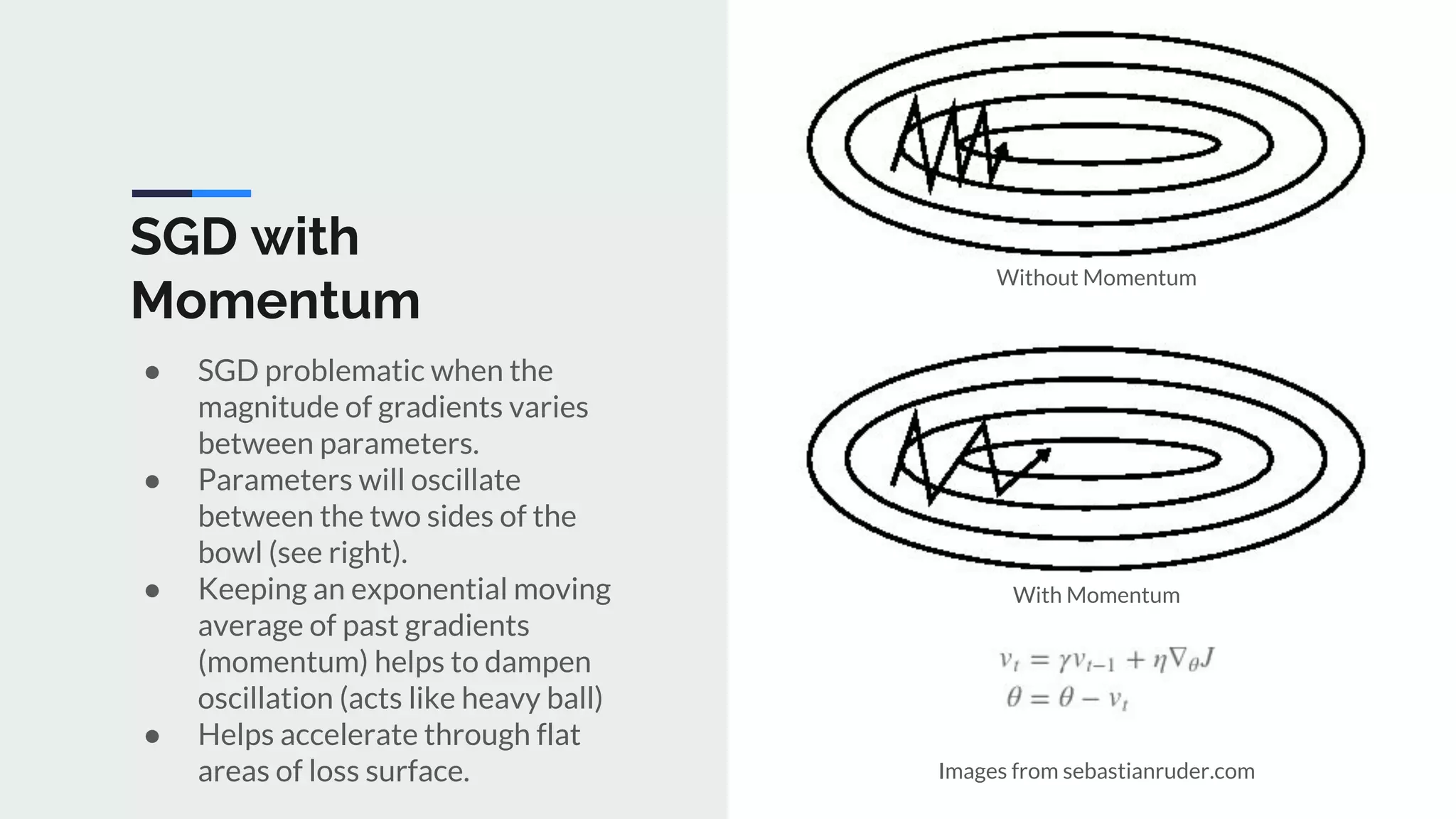

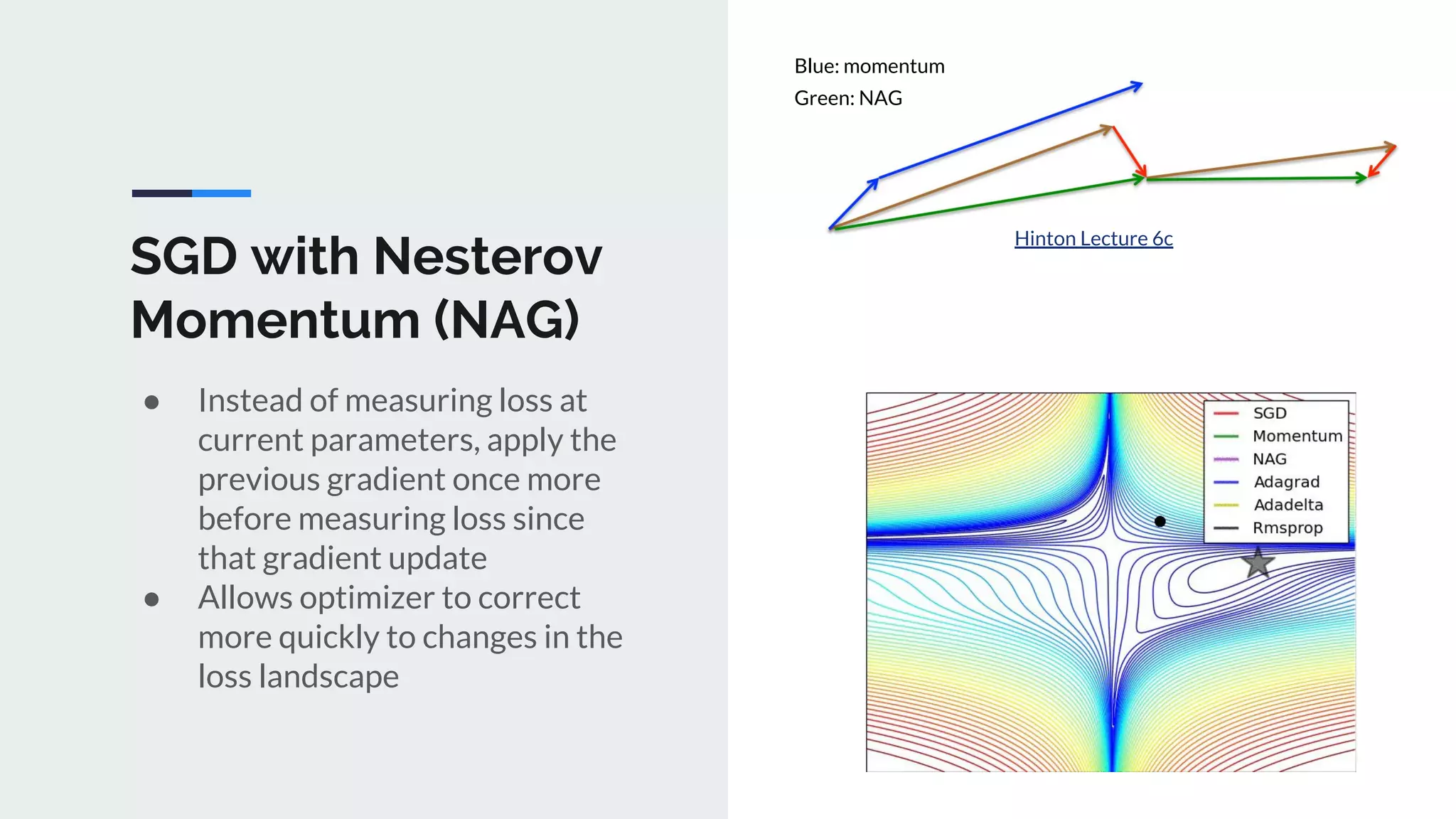

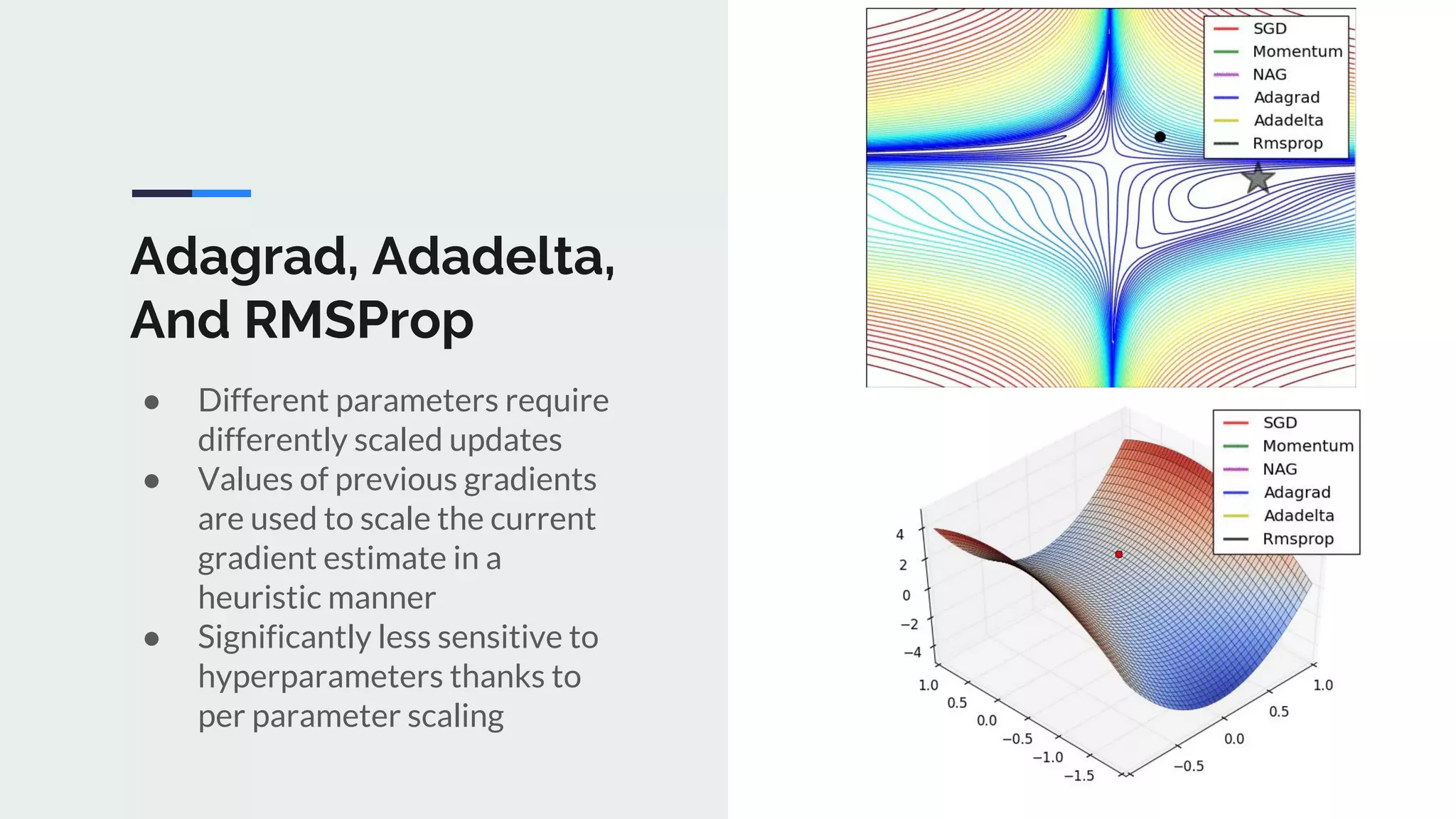

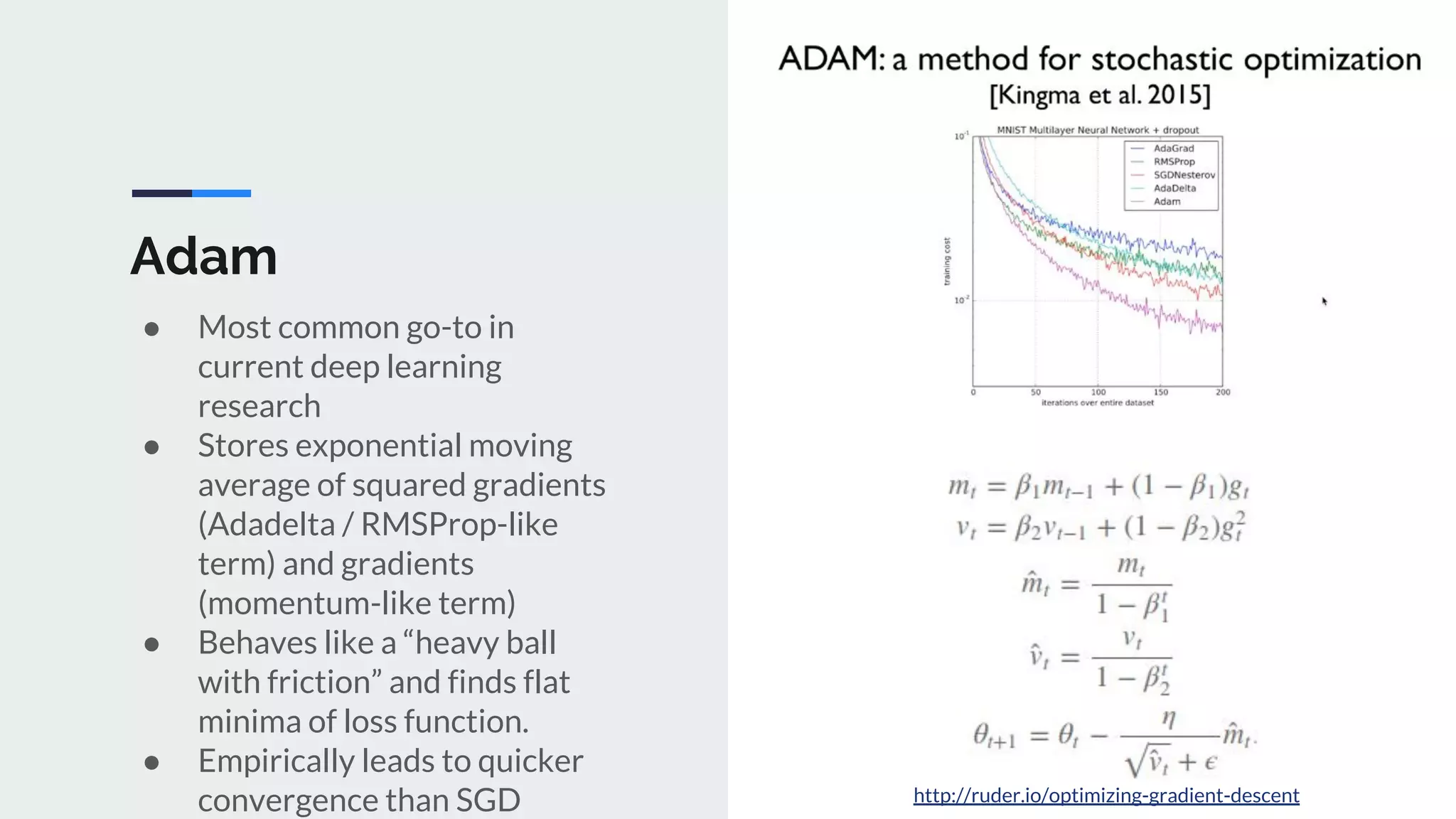

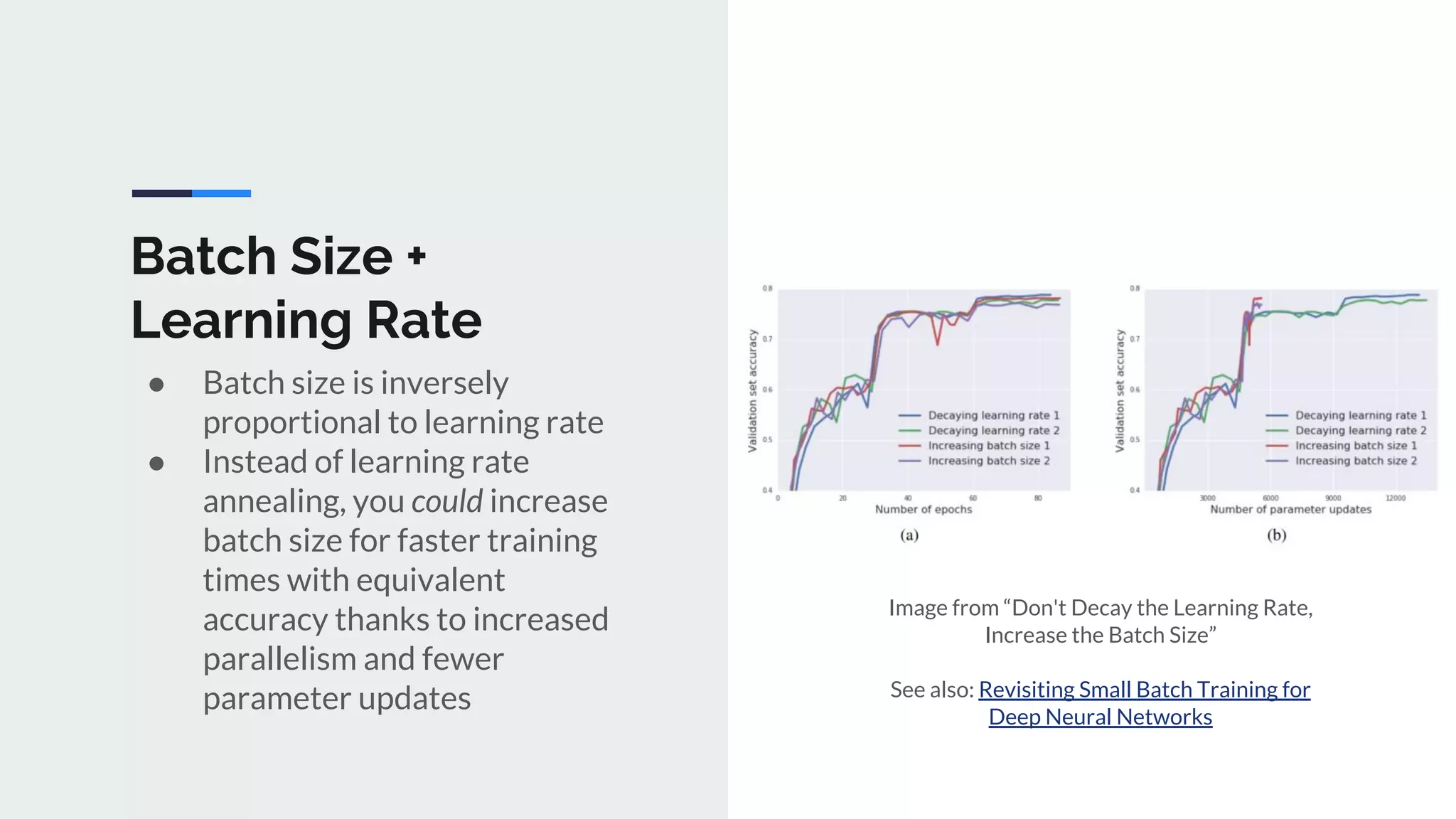

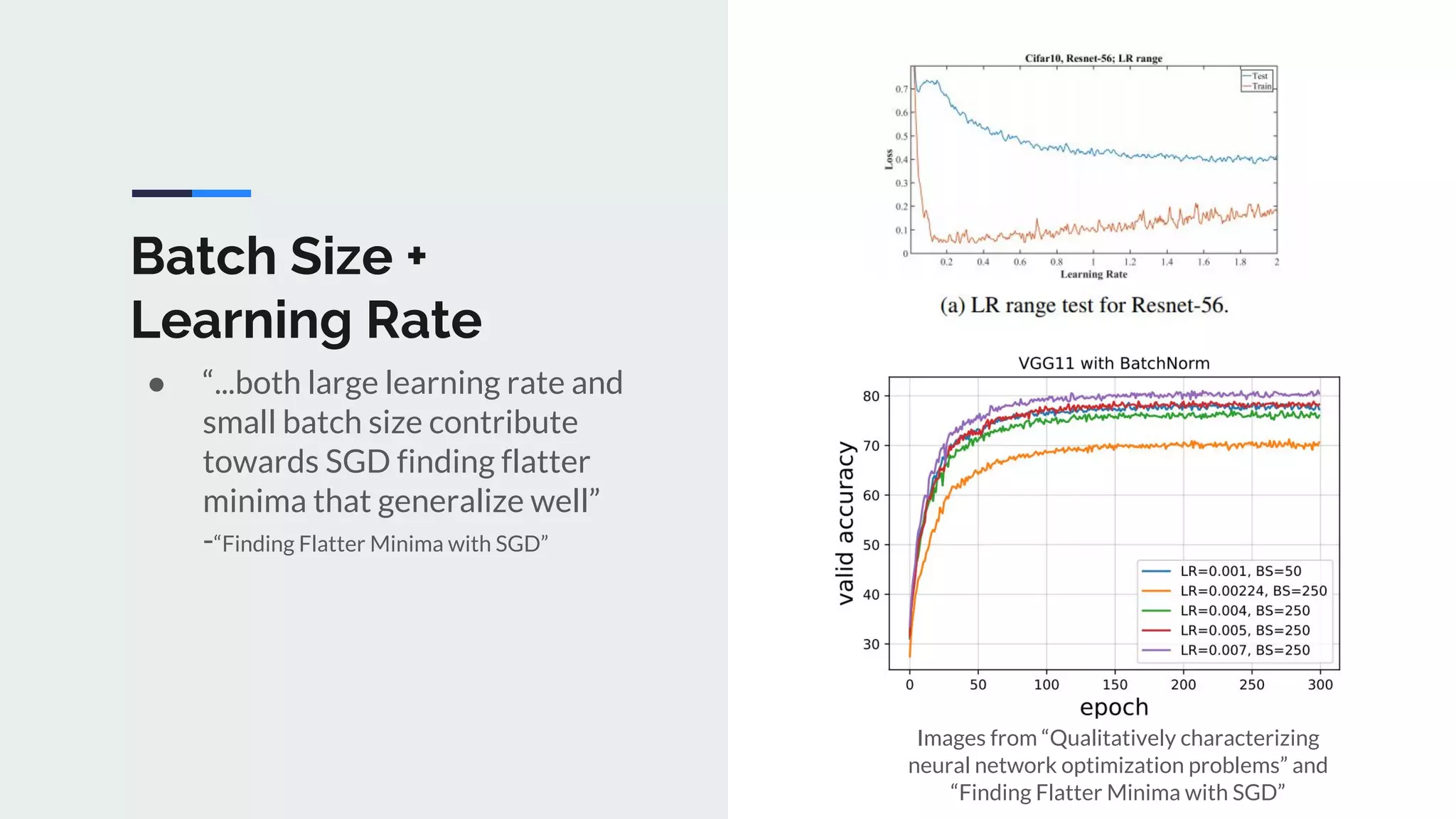

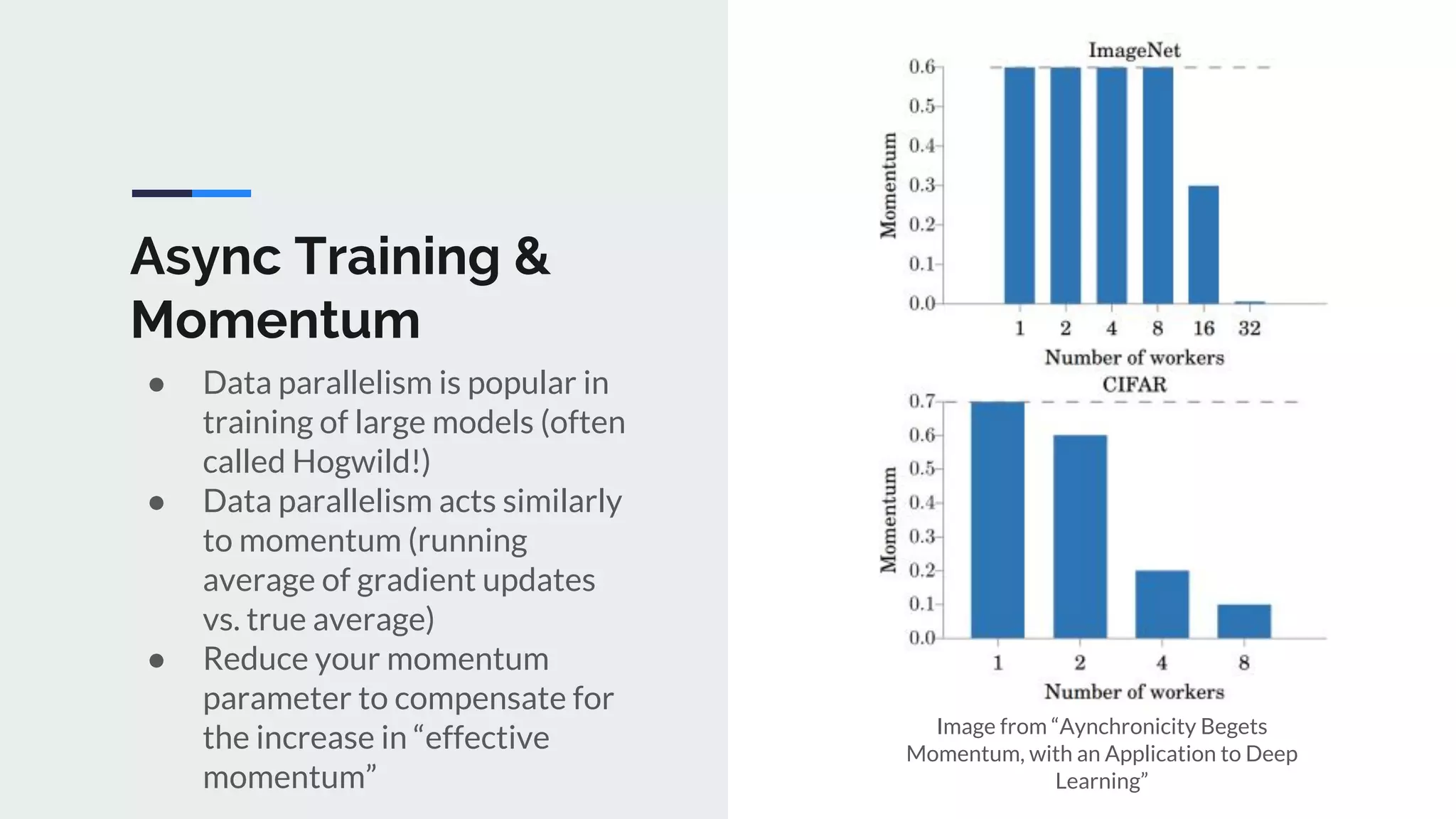

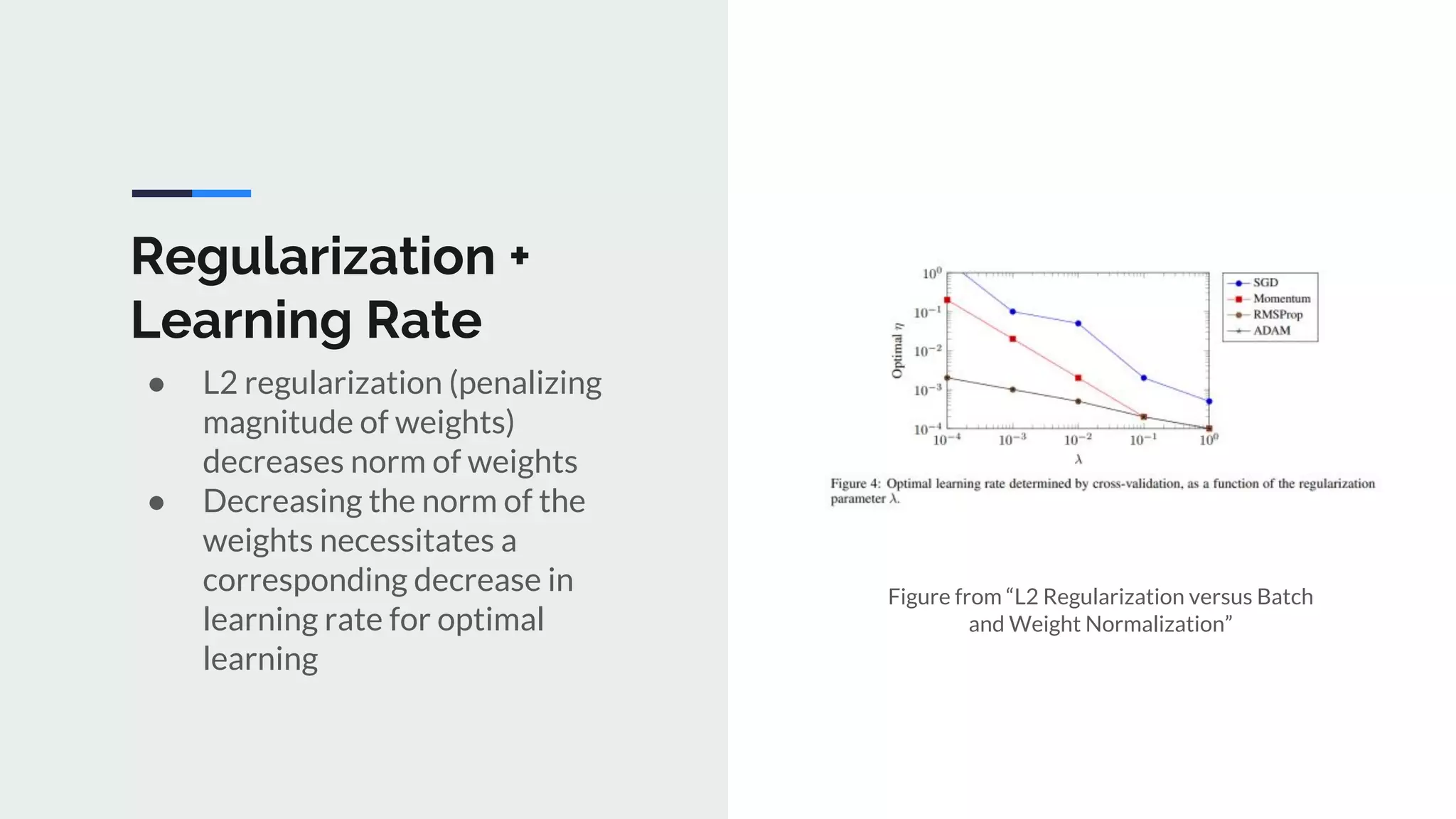

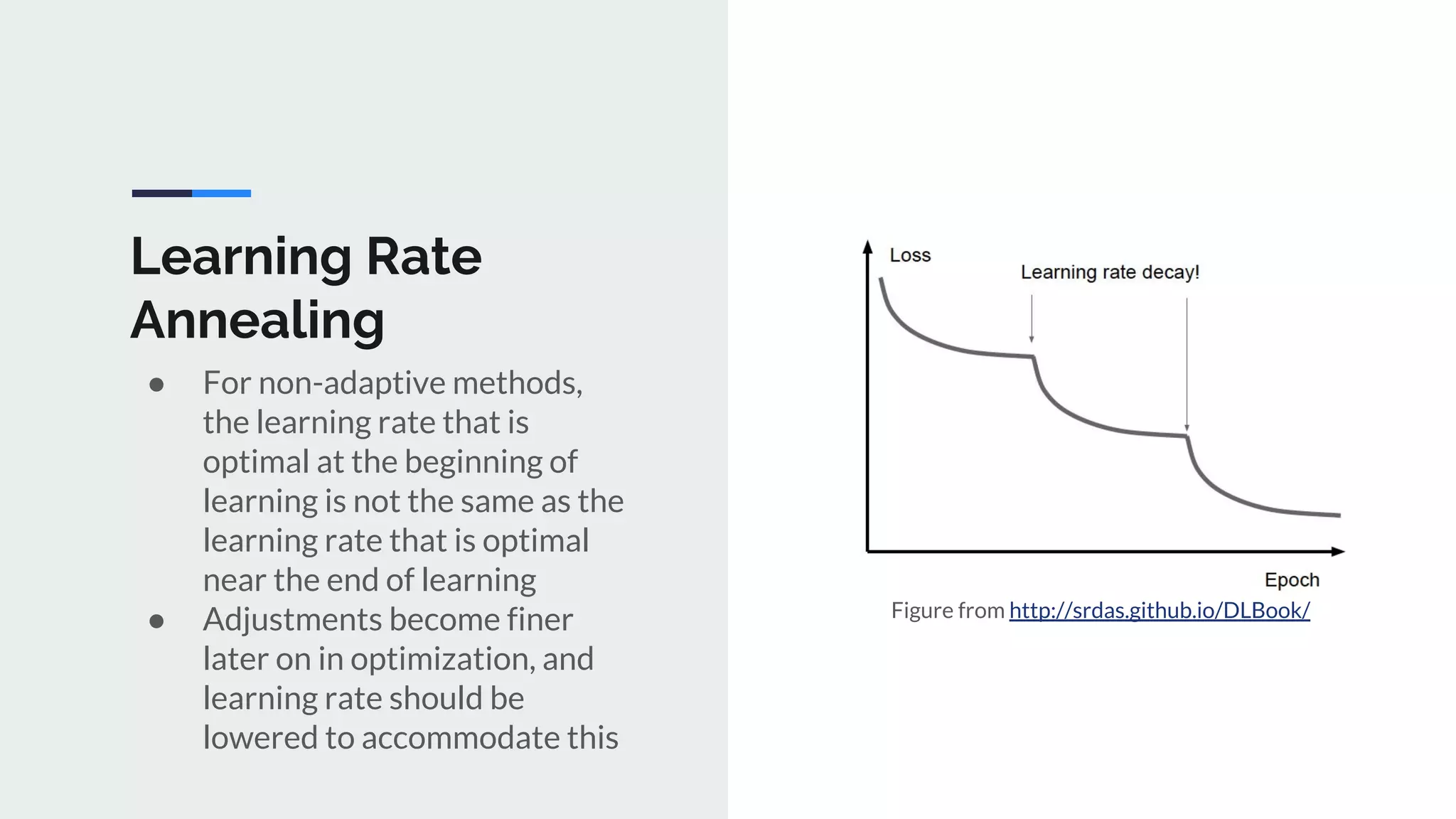

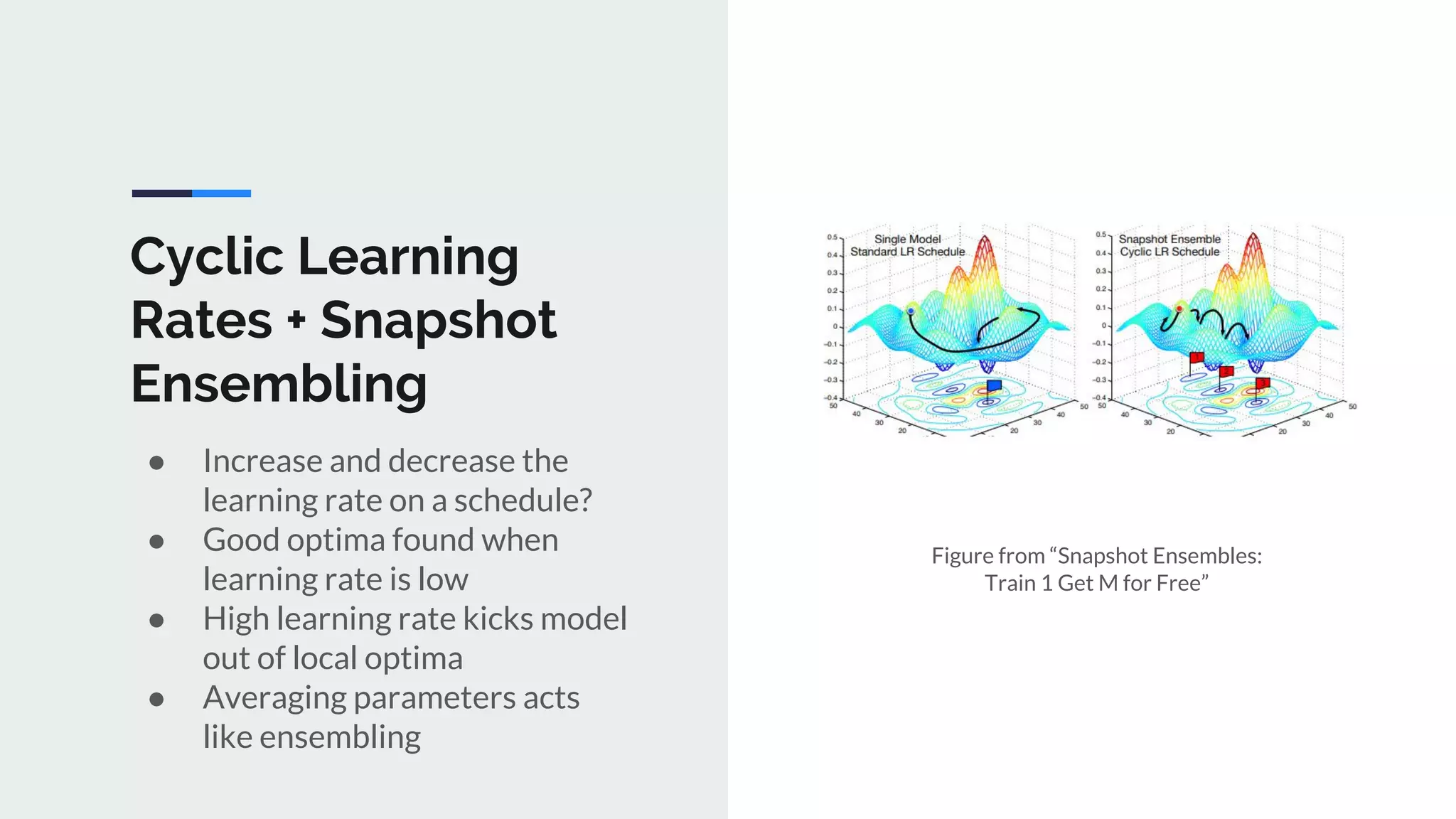

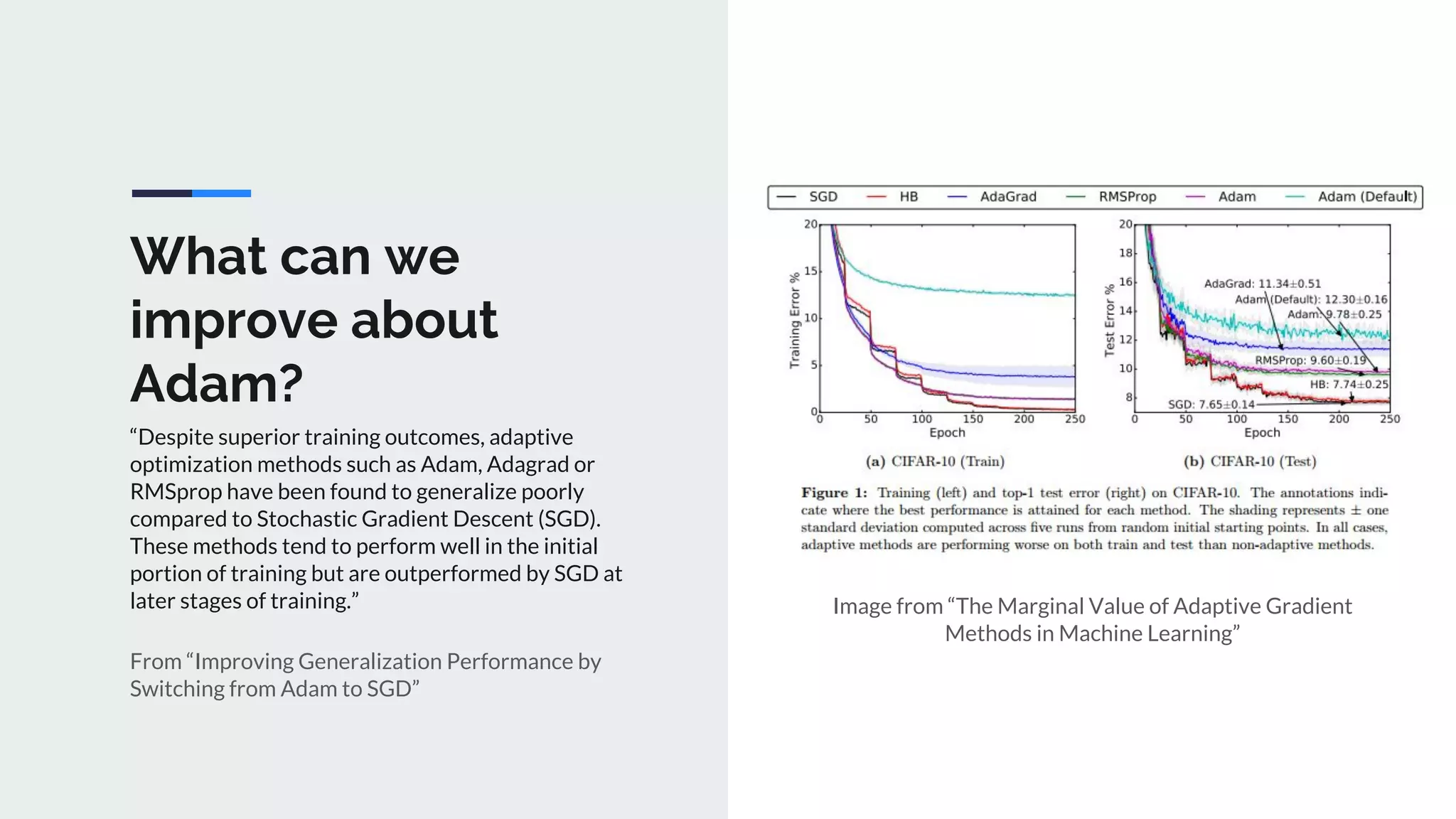

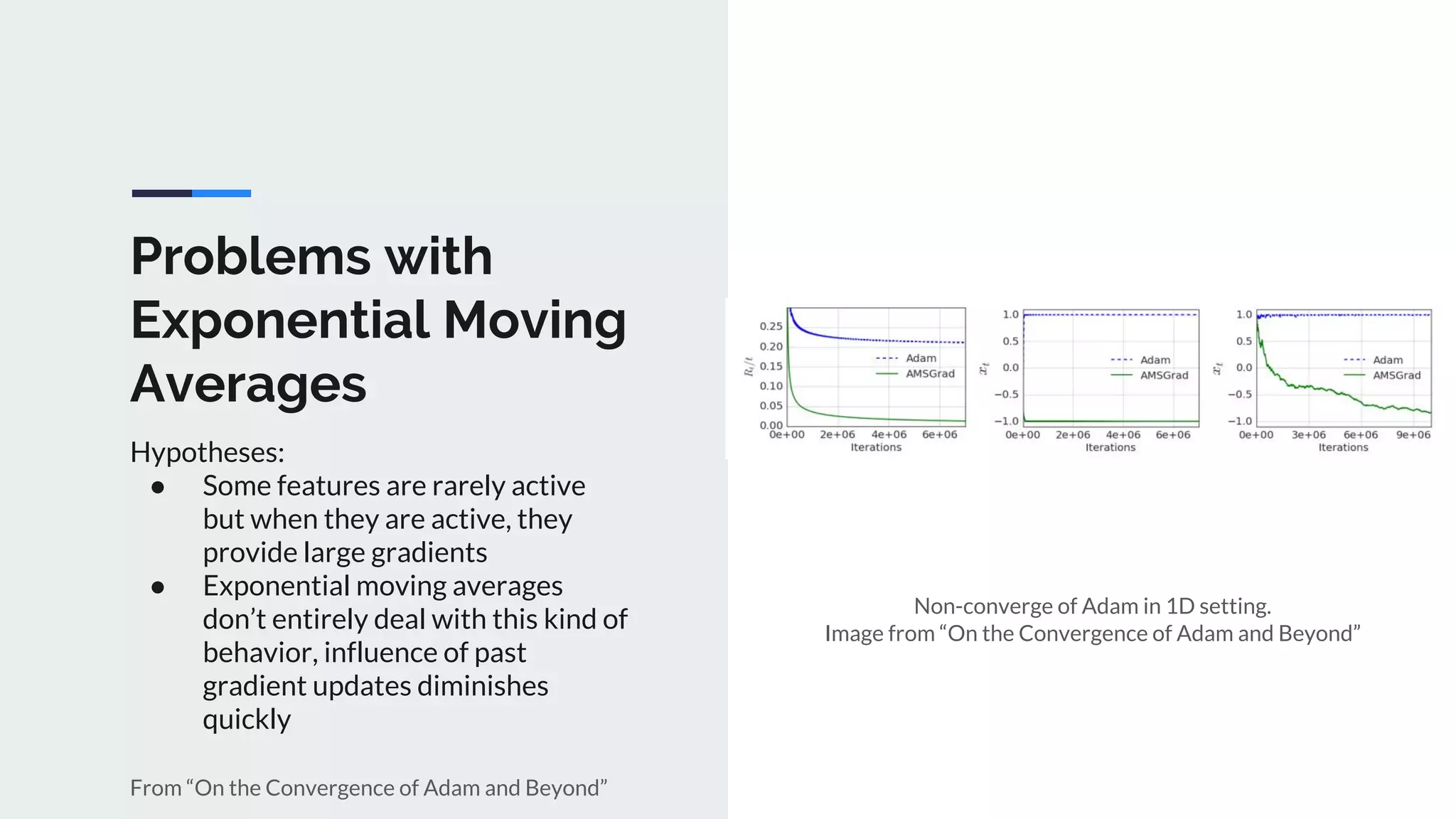

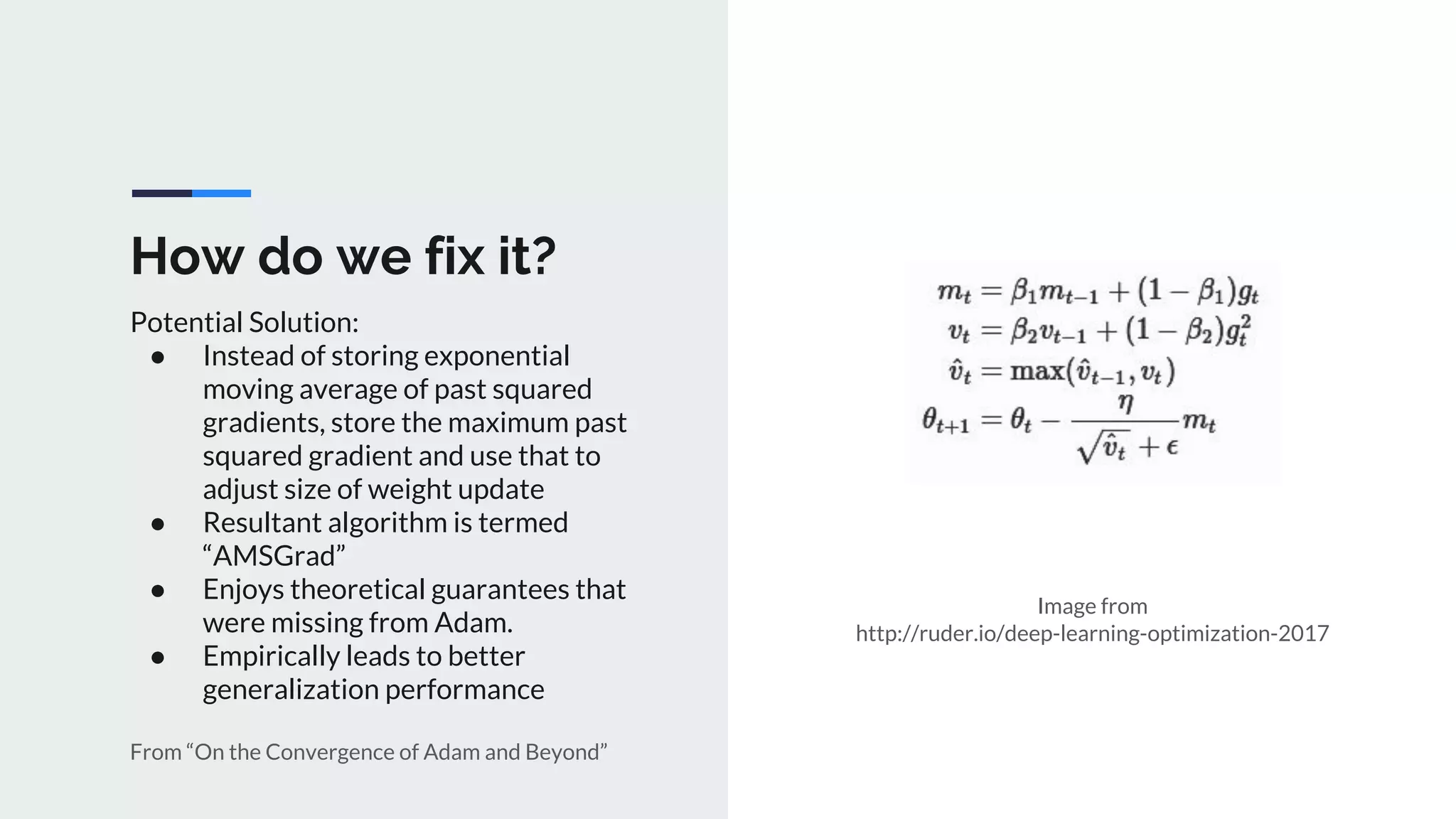

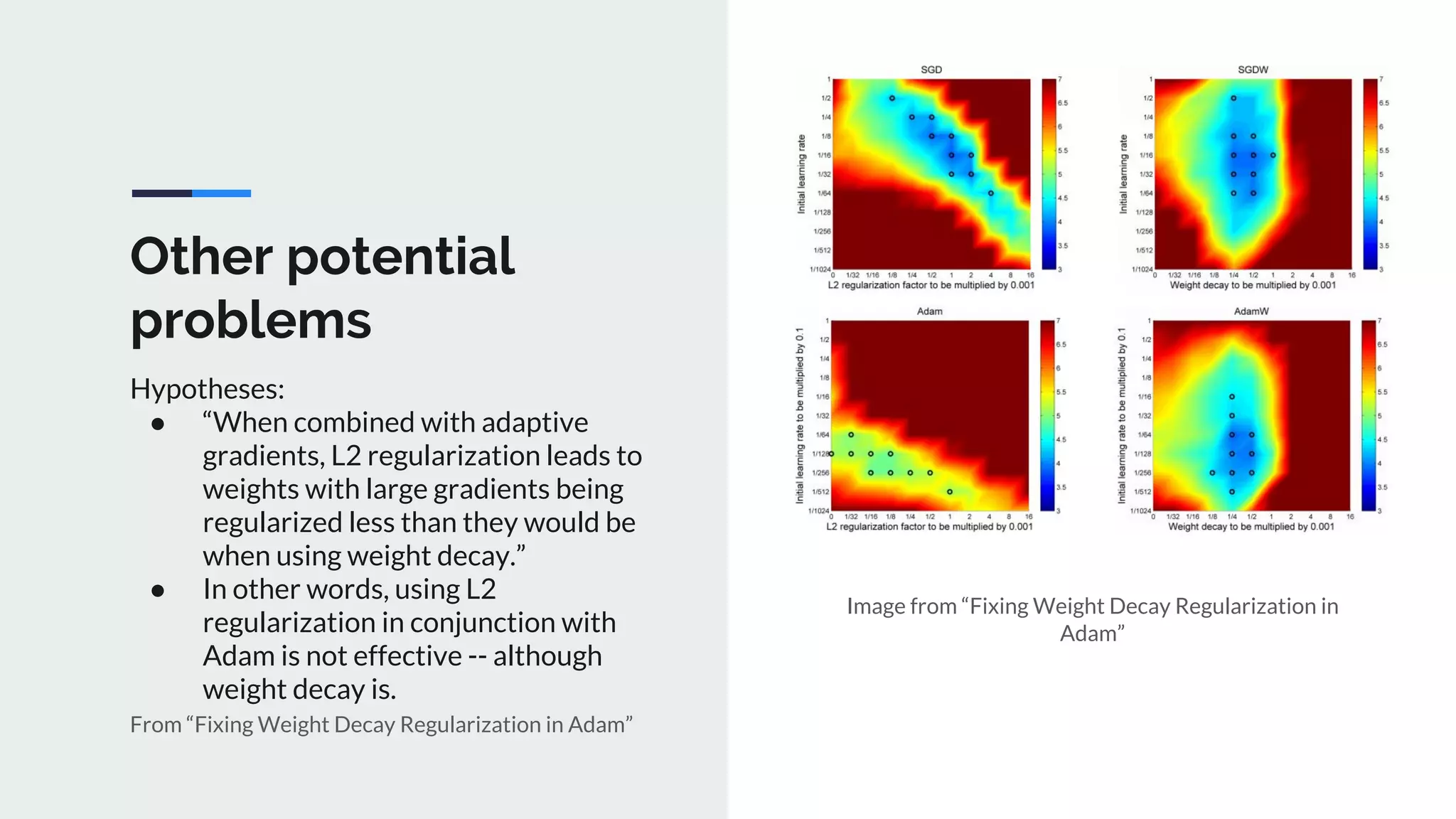

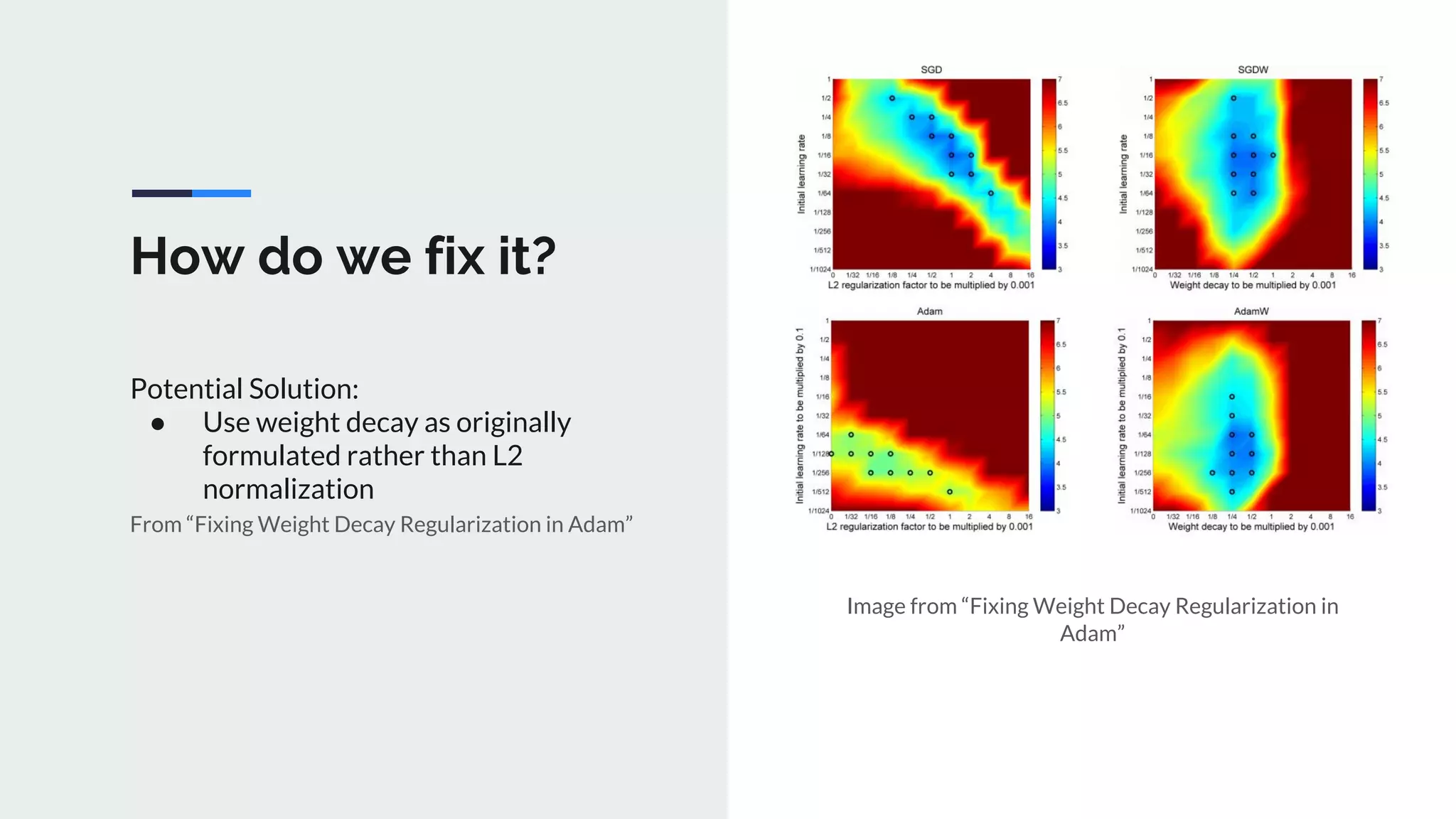

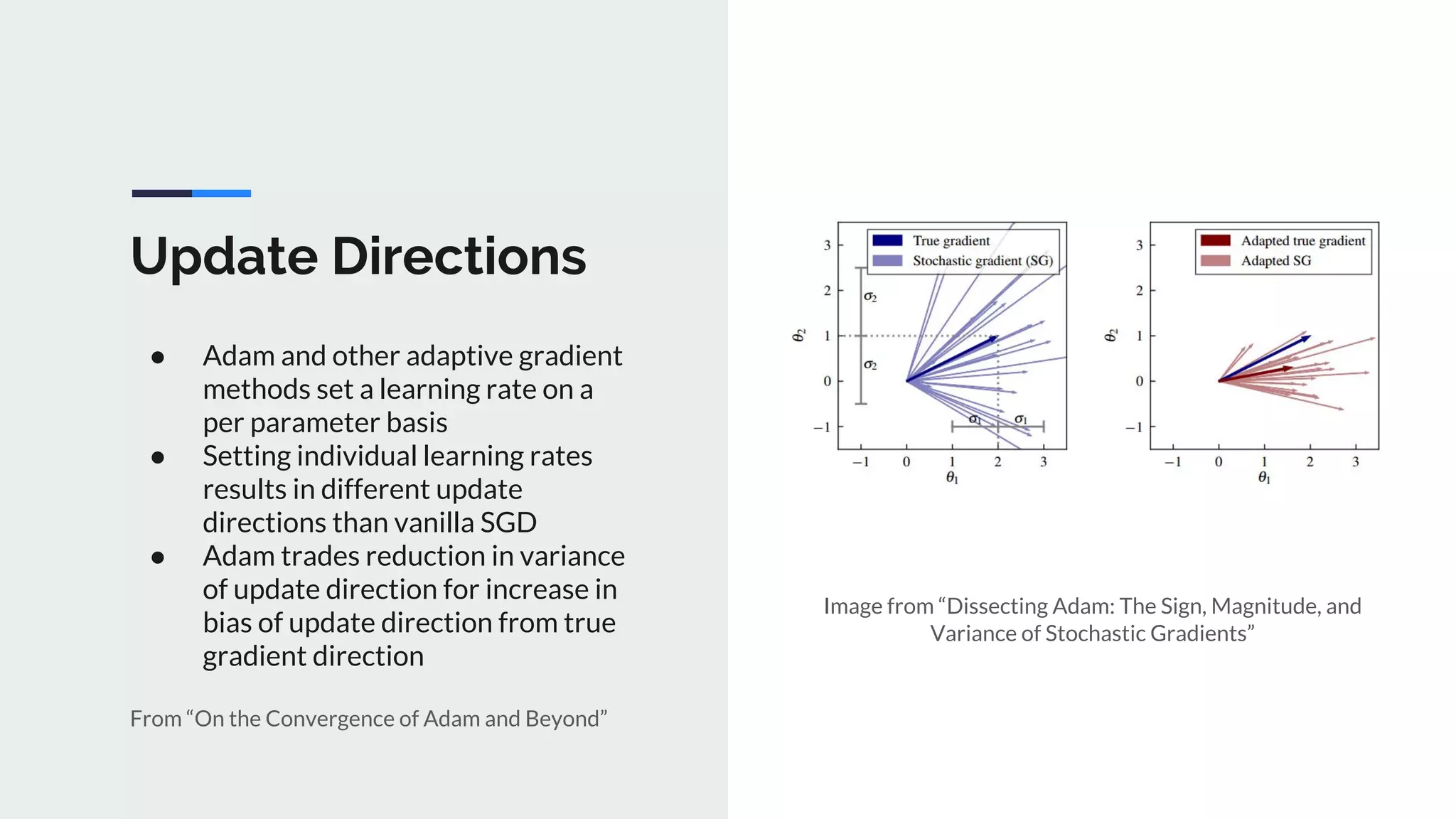

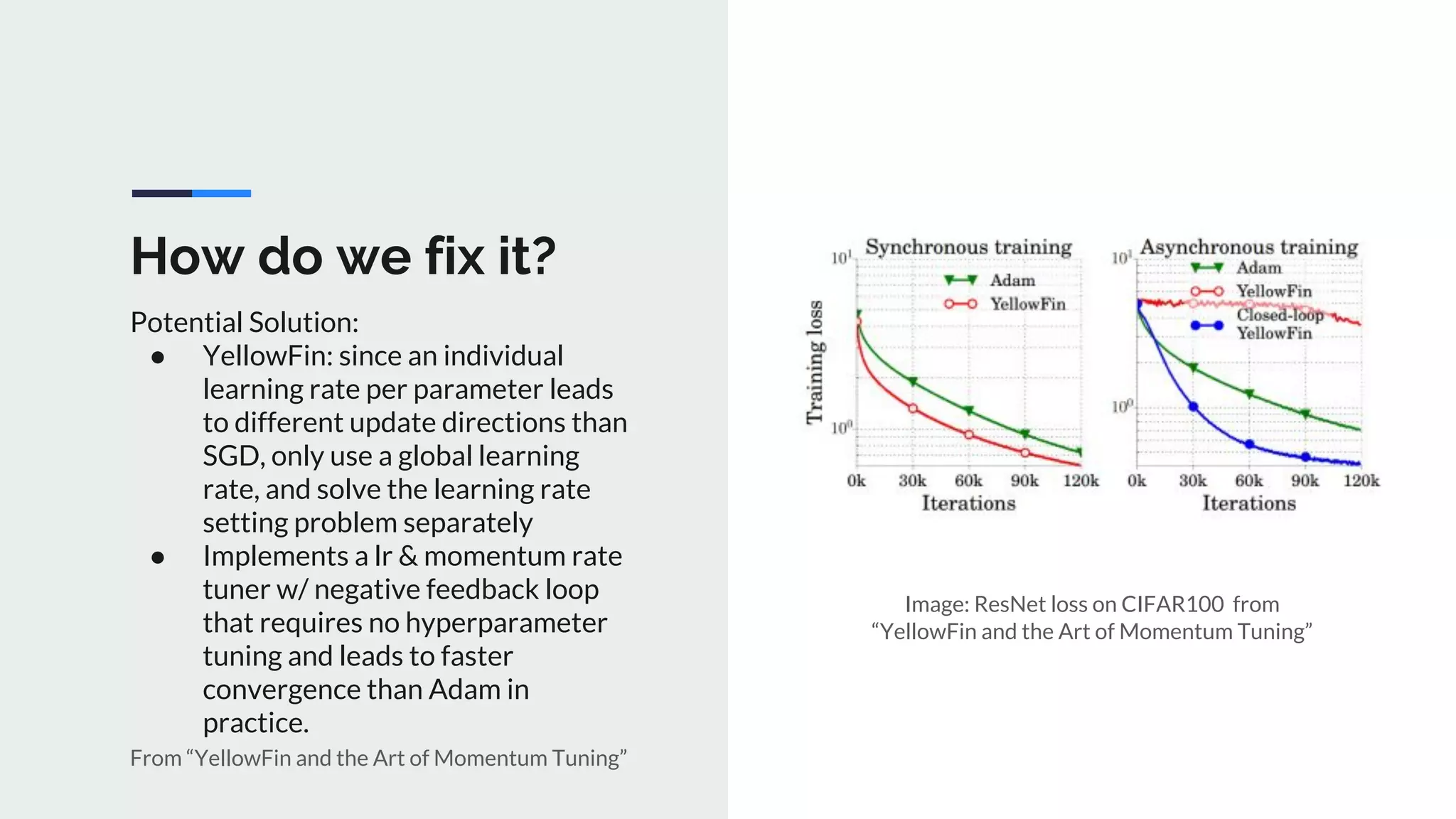

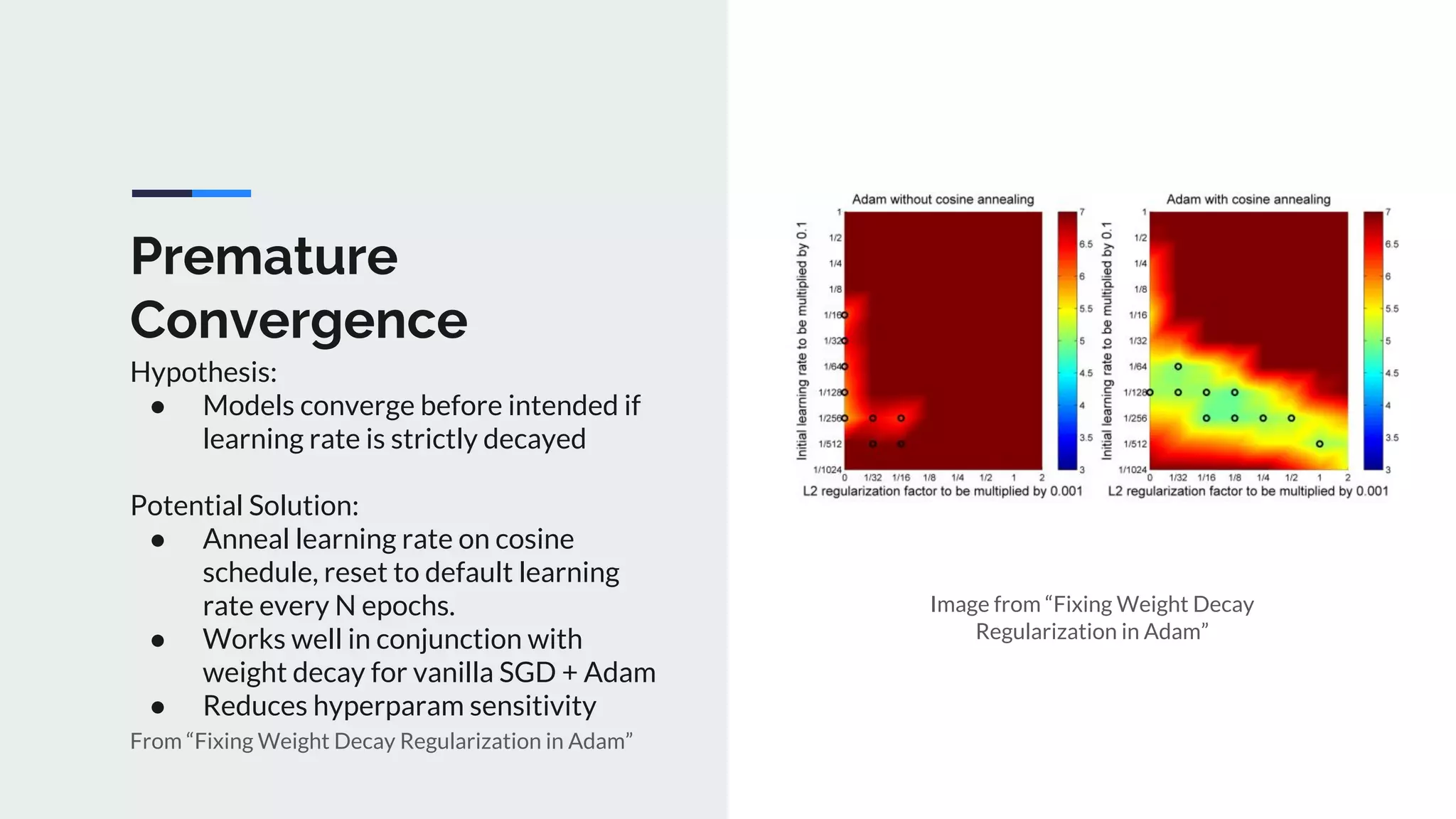

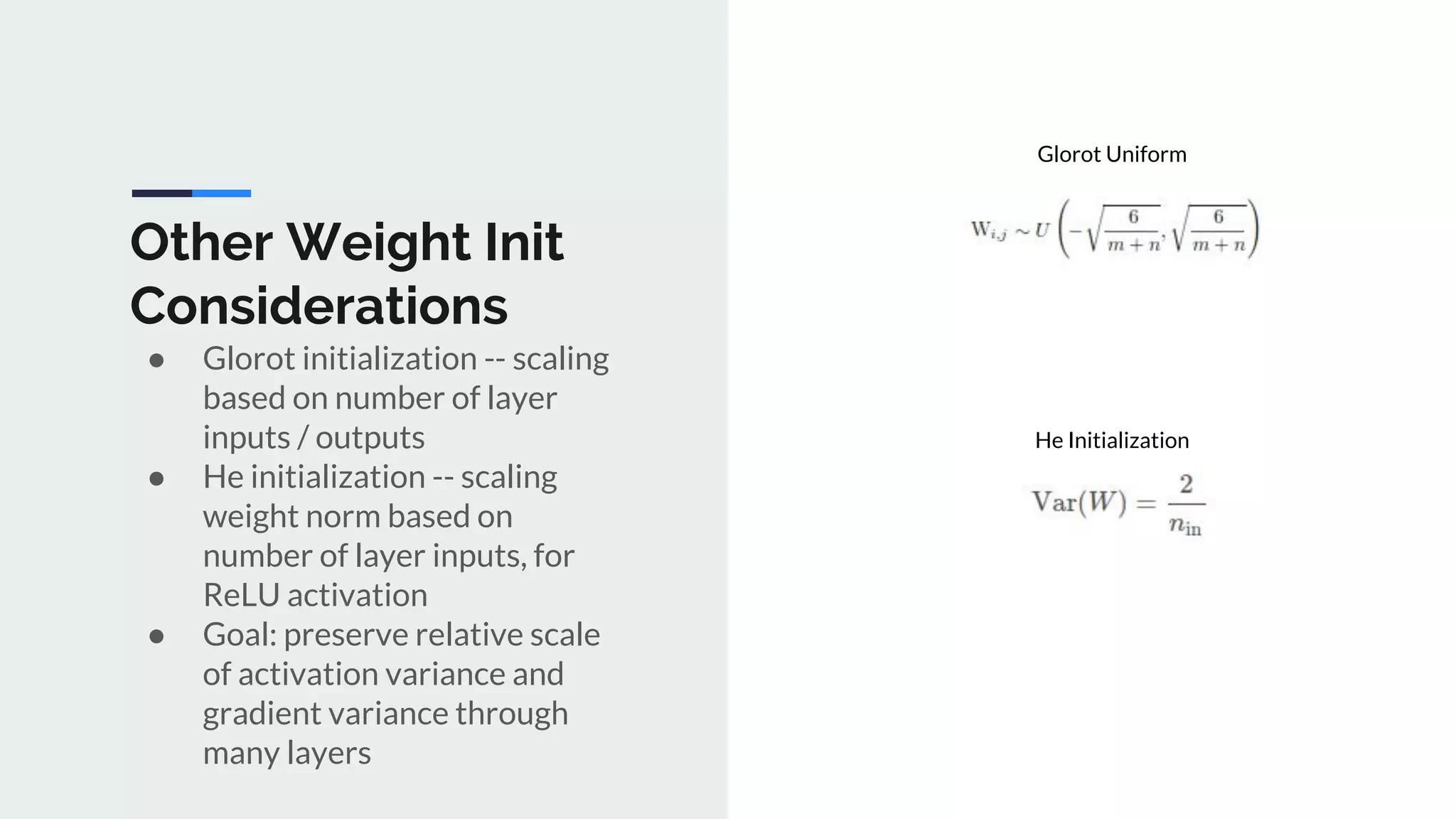

This document surveys optimization techniques in machine learning, focusing on gradient descent methods such as SGD, momentum, and Adam. It covers the benefits of learning rate scheduling and of varying the batch size, the known issues with adaptive methods, and strategies for addressing them. Key takeaways are the importance of parameter initialization, the need to choose hyperparameters carefully, and the fact that optimization remains an active area of development.
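To make the three methods named in the summary concrete, here is a minimal sketch of their update rules in plain Python. The formulas are the common textbook forms and are not taken from the slides. The function names, the toy quadratic objective, the starting point, and every hyperparameter value are assumptions chosen purely for illustration.

```python
import math

# Toy objective (an assumption for illustration): f(w) = 0.5 * (w0^2 + 10 * w1^2)
def loss(w):
    return 0.5 * (w[0] ** 2 + 10.0 * w[1] ** 2)

def grad(w):
    return [w[0], 10.0 * w[1]]

def sgd_step(w, g, lr=0.05):
    # Plain gradient step: w <- w - lr * g
    return [wi - lr * gi for wi, gi in zip(w, g)]

def momentum_step(w, g, v, lr=0.05, beta=0.9):
    # Heavy-ball form: v <- beta * v + g, then w <- w - lr * v
    v = [beta * vi + gi for vi, gi in zip(v, g)]
    w = [wi - lr * vi for wi, vi in zip(w, v)]
    return w, v

def adam_step(w, g, m, s, t, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8):
    # Exponential moving averages of the gradient and the squared gradient
    m = [beta1 * mi + (1.0 - beta1) * gi for mi, gi in zip(m, g)]
    s = [beta2 * si + (1.0 - beta2) * gi * gi for si, gi in zip(s, g)]
    # Bias correction for the zero initialization (t counts steps from 1)
    m_hat = [mi / (1.0 - beta1 ** t) for mi in m]
    s_hat = [si / (1.0 - beta2 ** t) for si in s]
    w = [wi - lr * mh / (math.sqrt(sh) + eps)
         for wi, mh, sh in zip(w, m_hat, s_hat)]
    return w, m, s

def minimize(method, steps=200, lr=0.05):
    # Runs one optimizer from a fixed (hypothetical) start and returns the final loss
    w = [1.0, 1.0]
    v, m, s = [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]
    for t in range(1, steps + 1):
        g = grad(w)
        if method == "sgd":
            w = sgd_step(w, g, lr)
        elif method == "momentum":
            w, v = momentum_step(w, g, v, lr)
        elif method == "adam":
            w, m, s = adam_step(w, g, m, s, t, lr)
        else:
            raise ValueError(f"unknown method: {method}")
    return loss(w)

for name in ("sgd", "momentum", "adam"):
    print(name, minimize(name))
```

The gradient here is exact, so the "stochastic" part of SGD is not modeled. In practice `grad` would be evaluated on a minibatch, which is where the batch size and learning rate schedule discussed in the slides come into play.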