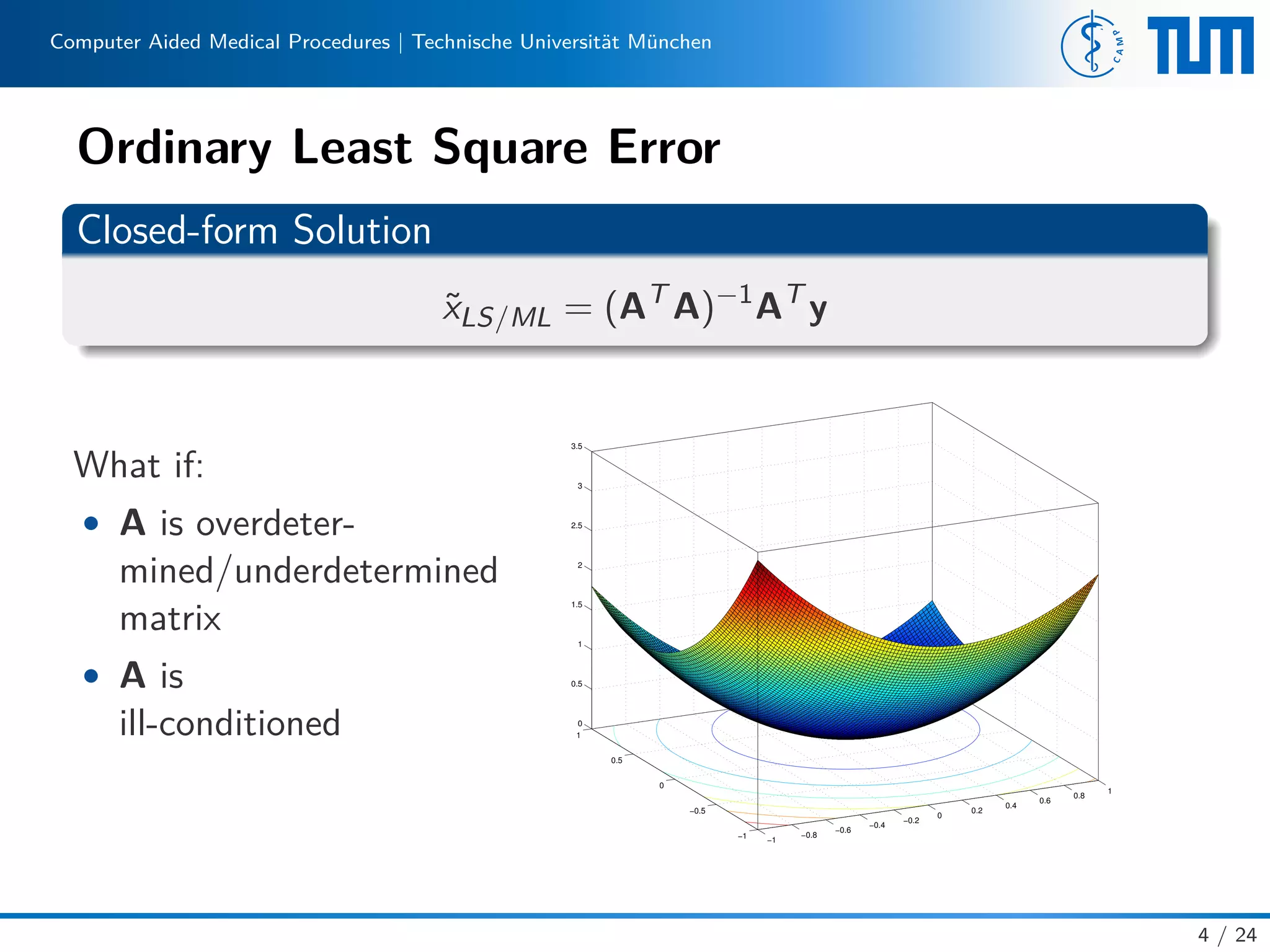

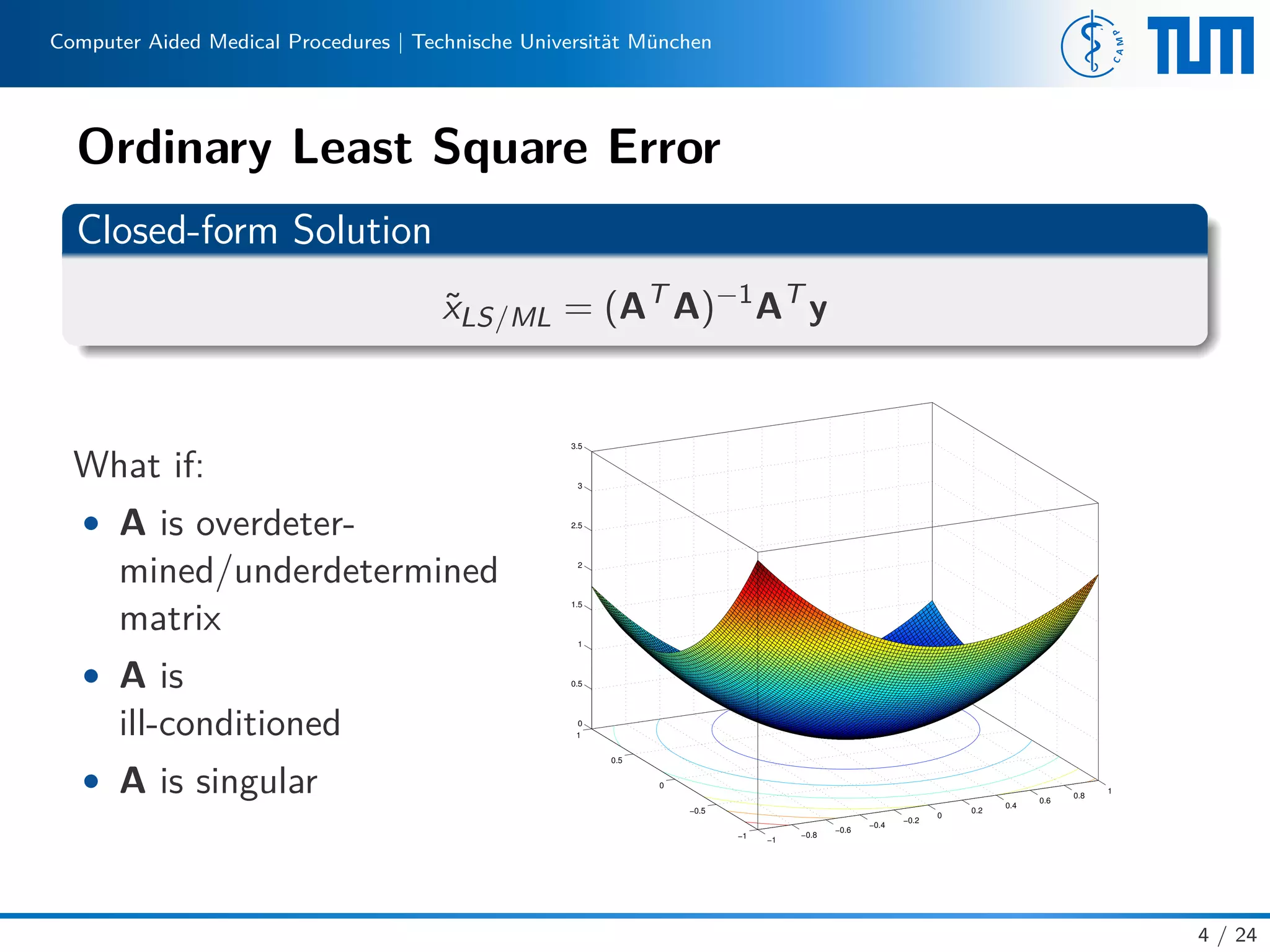

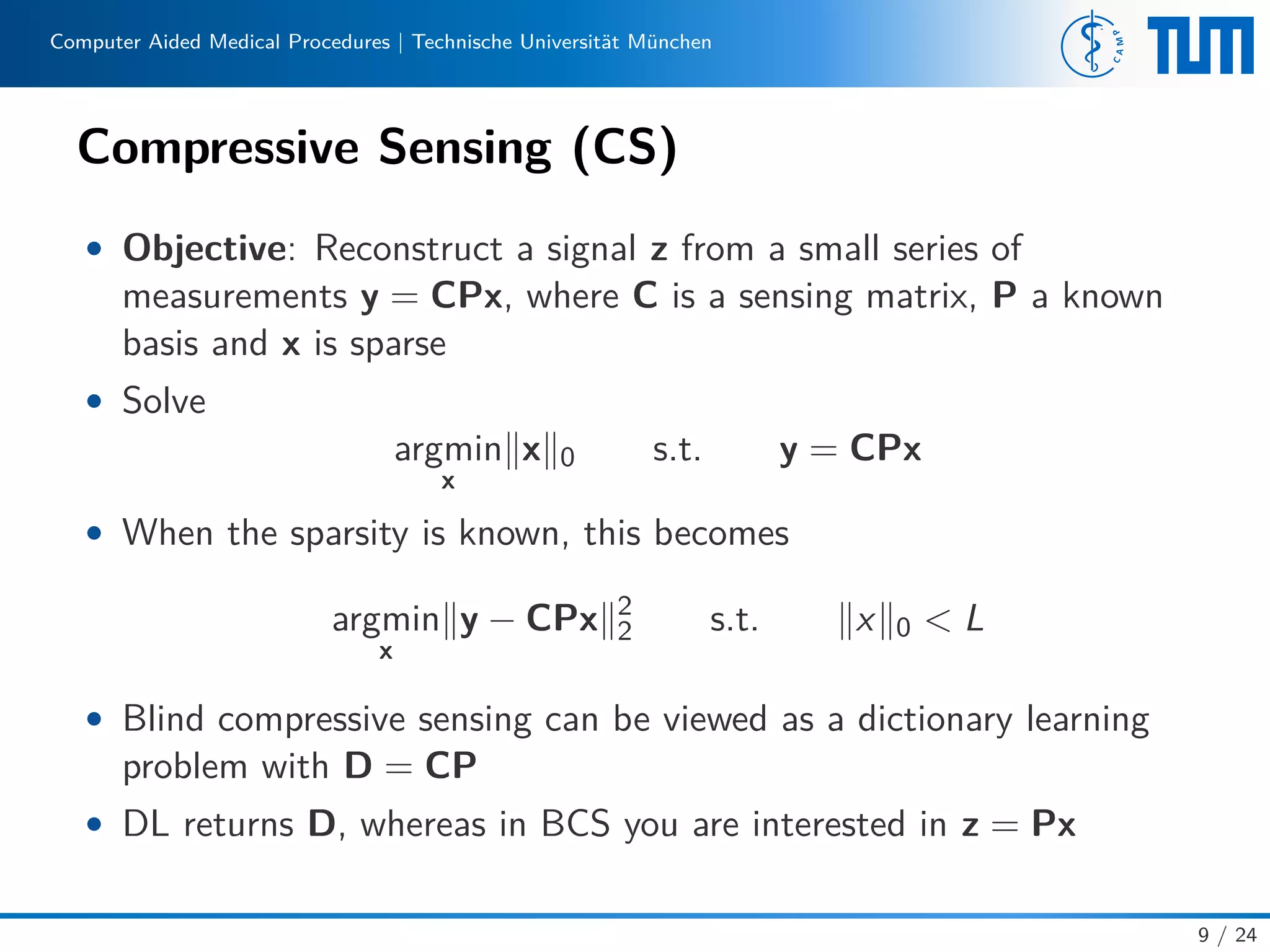

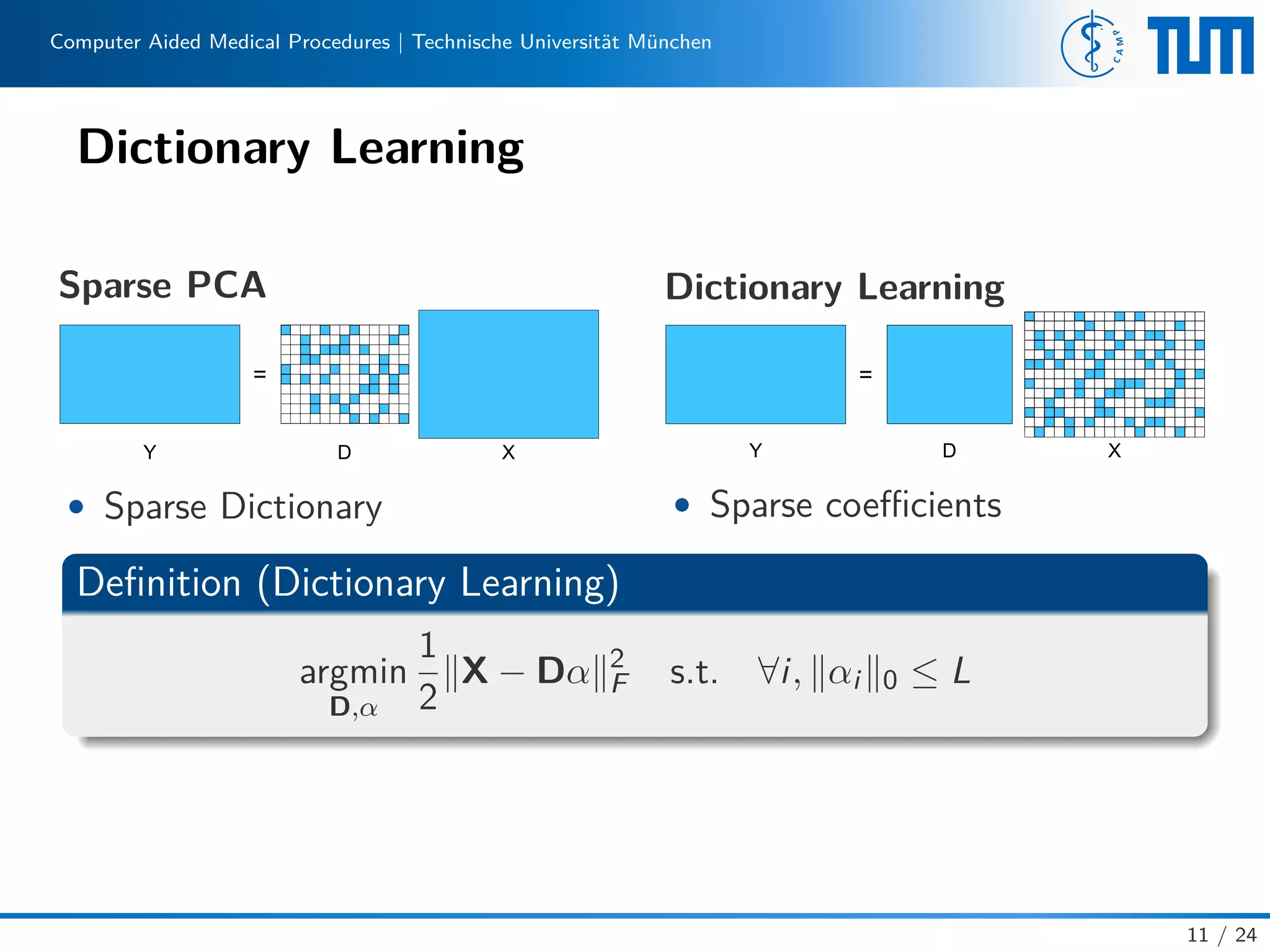

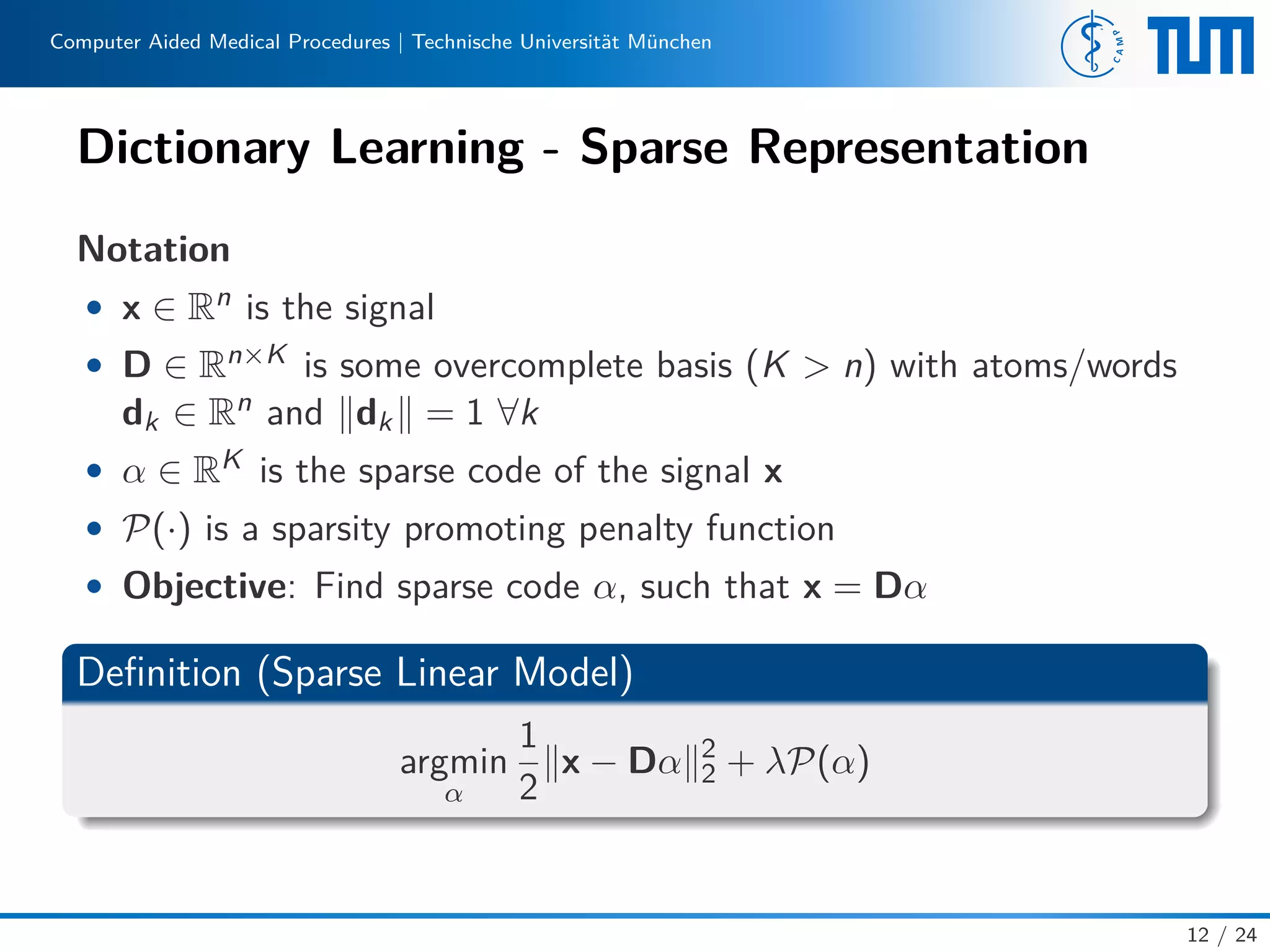

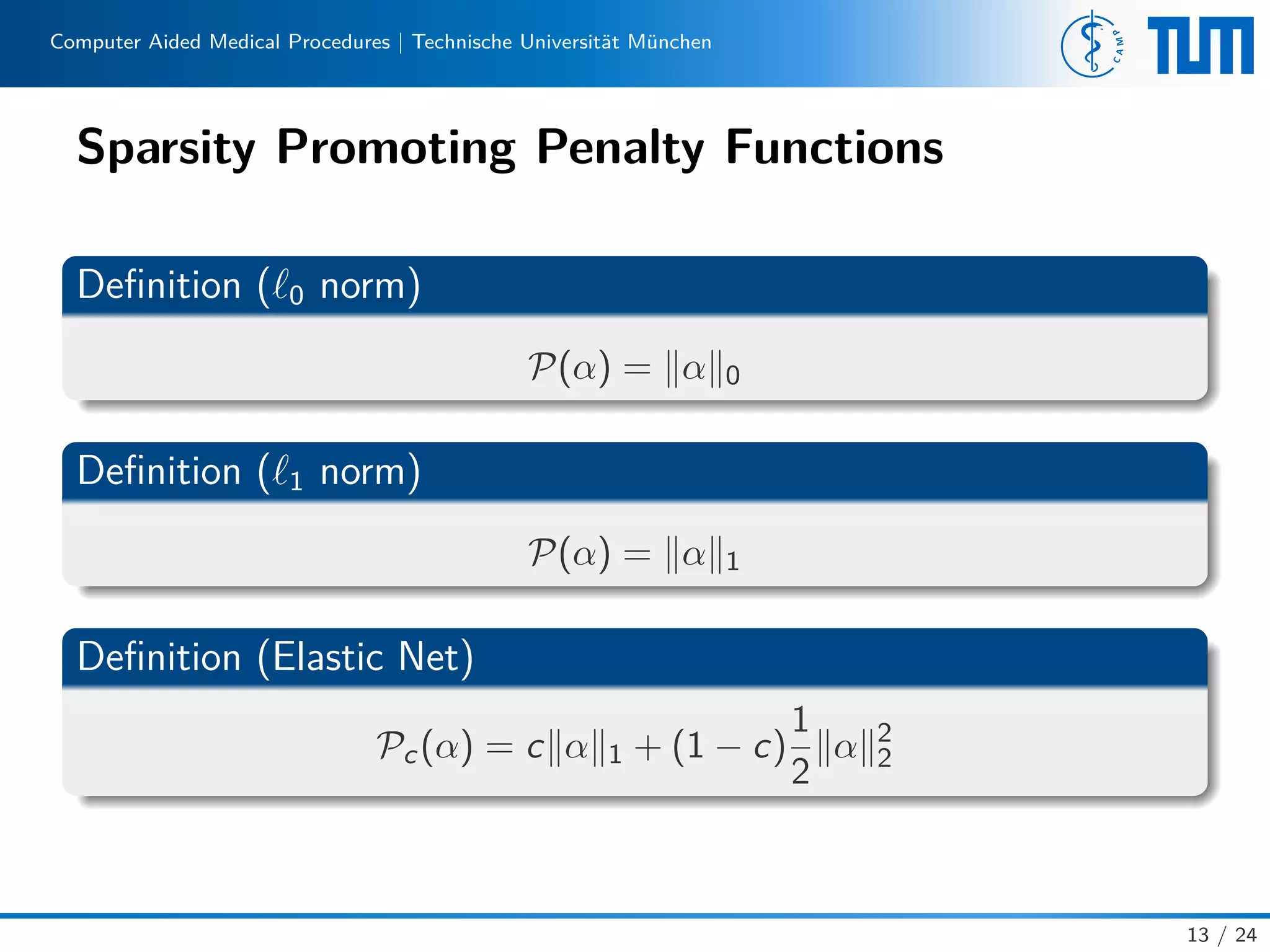

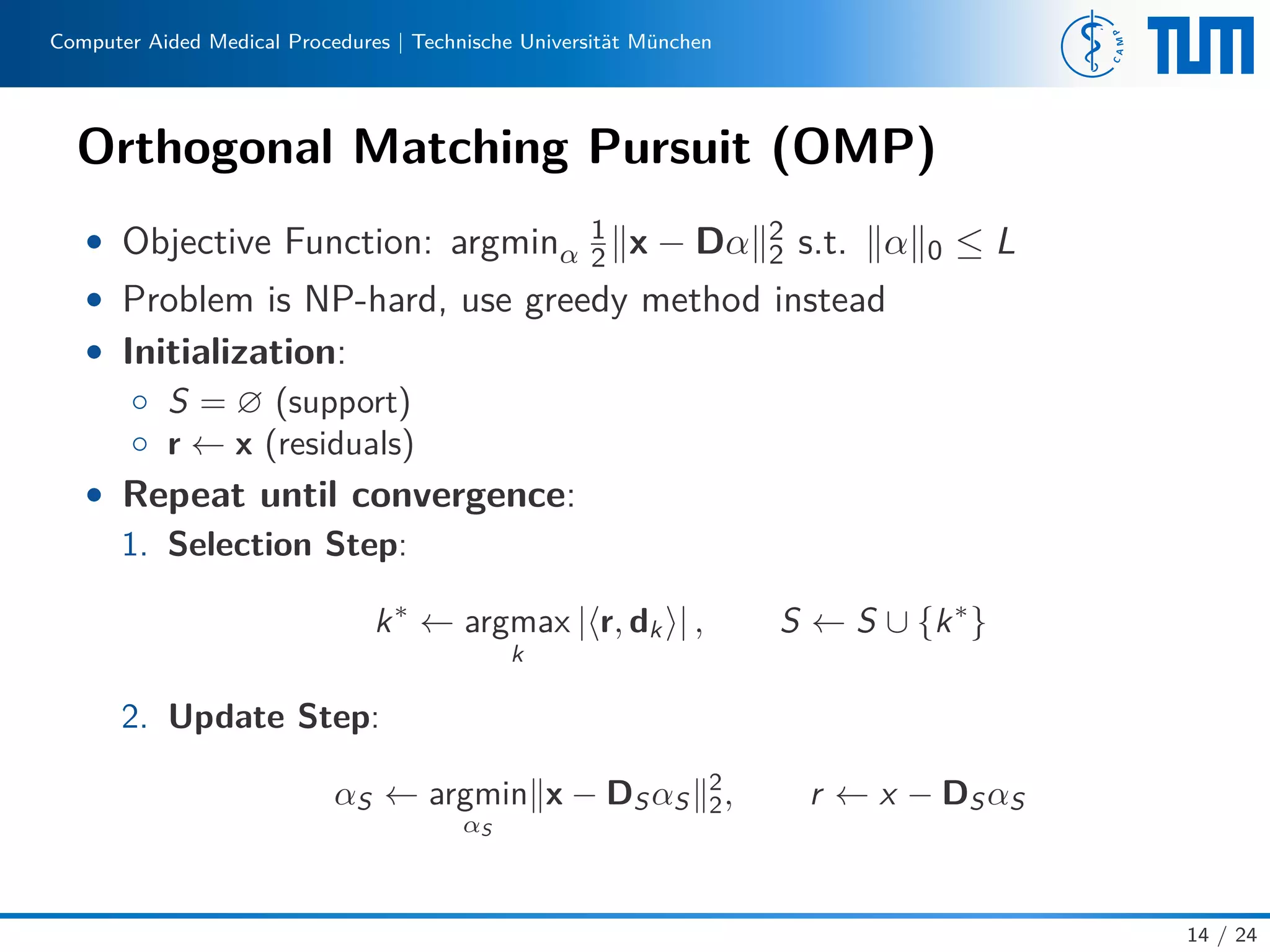

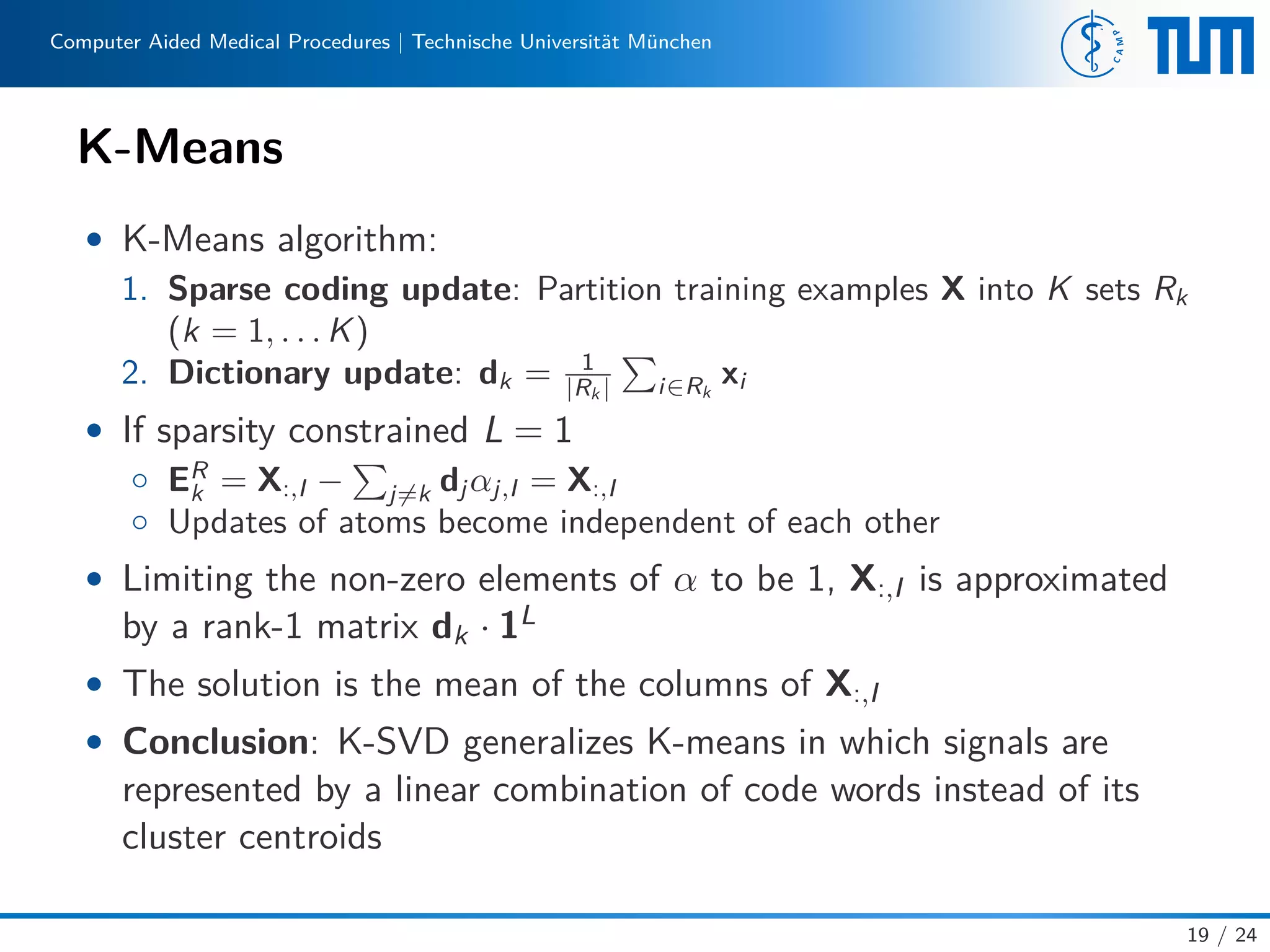

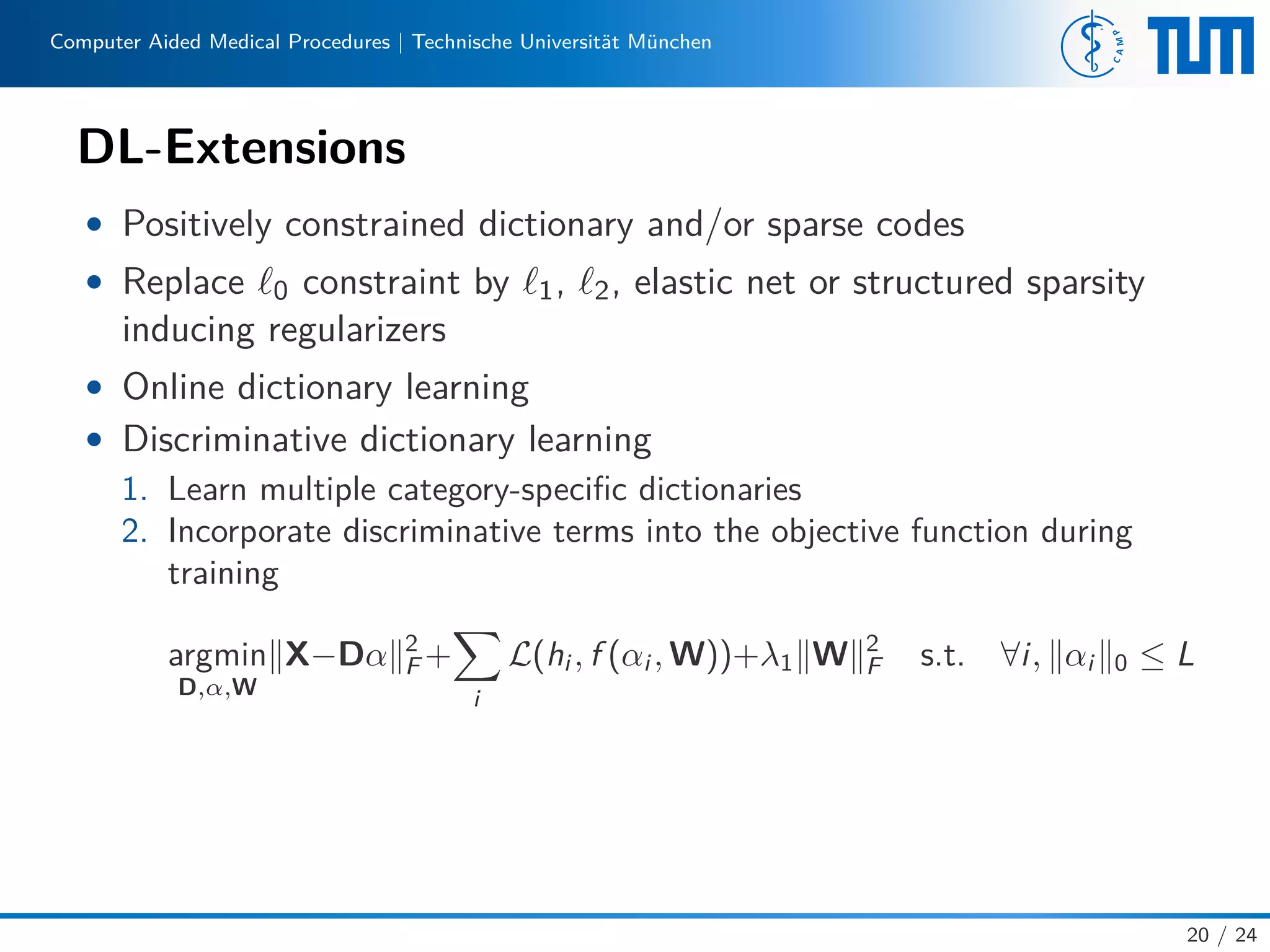

This document provides an introduction to sparse methods, including regularization techniques and compressive sensing. It discusses how regularization can address ill-posed problems by adding constraints that encourage simple or sparse solutions. Compressive sensing aims to reconstruct sparse signals from few measurements. Dictionary learning seeks an overcomplete basis to sparsely represent signals via linear combinations of atoms. Algorithms like orthogonal matching pursuit and K-SVD are described for solving dictionary learning problems. The document outlines extensions of these sparse methods.

![Computer Aided Medical Procedures | Technische Universität München

Posedness

Definition (Well-Posed Problem)

According to Hadamard [1], a problem is well-posed if:

1. It has a solution.

2. The solution is unique.

3. The solution depends continuously on the data and parameters.

Define the following, and explain their impacts:

• ill-posed problem

• well-conditioned

• ill-conditioned

5 / 24](https://image.slidesharecdn.com/lwcwf7xqmypqi2cubpv5-signature-49459baad3b4f2df83805f7a4a101ee788648bf968f26a182df1810d50ca8bec-poli-150529201109-lva1-app6891/75/Introduction-to-Sparse-Methods-7-2048.jpg)
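
The difference between a well-conditioned and an ill-conditioned problem can be illustrated numerically. The sketch below is not taken from the slides: the two matrices, the right-hand side, and the helper name `relative_error_amplification` are illustrative assumptions. It compares how strongly a small perturbation of the data is amplified in the solution of a linear system, and relates that amplification to the condition number reported by `np.linalg.cond`.

```python
import numpy as np

def relative_error_amplification(A, b, delta_b):
    """Ratio of relative change in the solution to relative change in the data."""
    x = np.linalg.solve(A, b)
    x_perturbed = np.linalg.solve(A, b + delta_b)
    rel_in = np.linalg.norm(delta_b) / np.linalg.norm(b)
    rel_out = np.linalg.norm(x_perturbed - x) / np.linalg.norm(x)
    return rel_out / rel_in

# Illustrative systems (assumptions, not from the slides).
well = np.array([[2.0, 0.0], [0.0, 1.0]])        # clearly independent rows
ill = np.array([[1.0, 1.0], [1.0, 1.0001]])      # nearly dependent rows
b = np.array([2.0, 2.0001])
delta_b = np.array([0.0, 1e-4])                  # small perturbation of the data

cond_well = np.linalg.cond(well)
cond_ill = np.linalg.cond(ill)
amp_well = relative_error_amplification(well, b, delta_b)
amp_ill = relative_error_amplification(ill, b, delta_b)

print(f"well-conditioned: cond={cond_well:.3g}, amplification={amp_well:.3g}")
print(f"ill-conditioned:  cond={cond_ill:.3g}, amplification={amp_ill:.3g}")
```

In both cases the amplification is bounded by the condition number. For the nearly singular matrix that bound is large, so the third Hadamard condition holds only in a numerically fragile sense.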

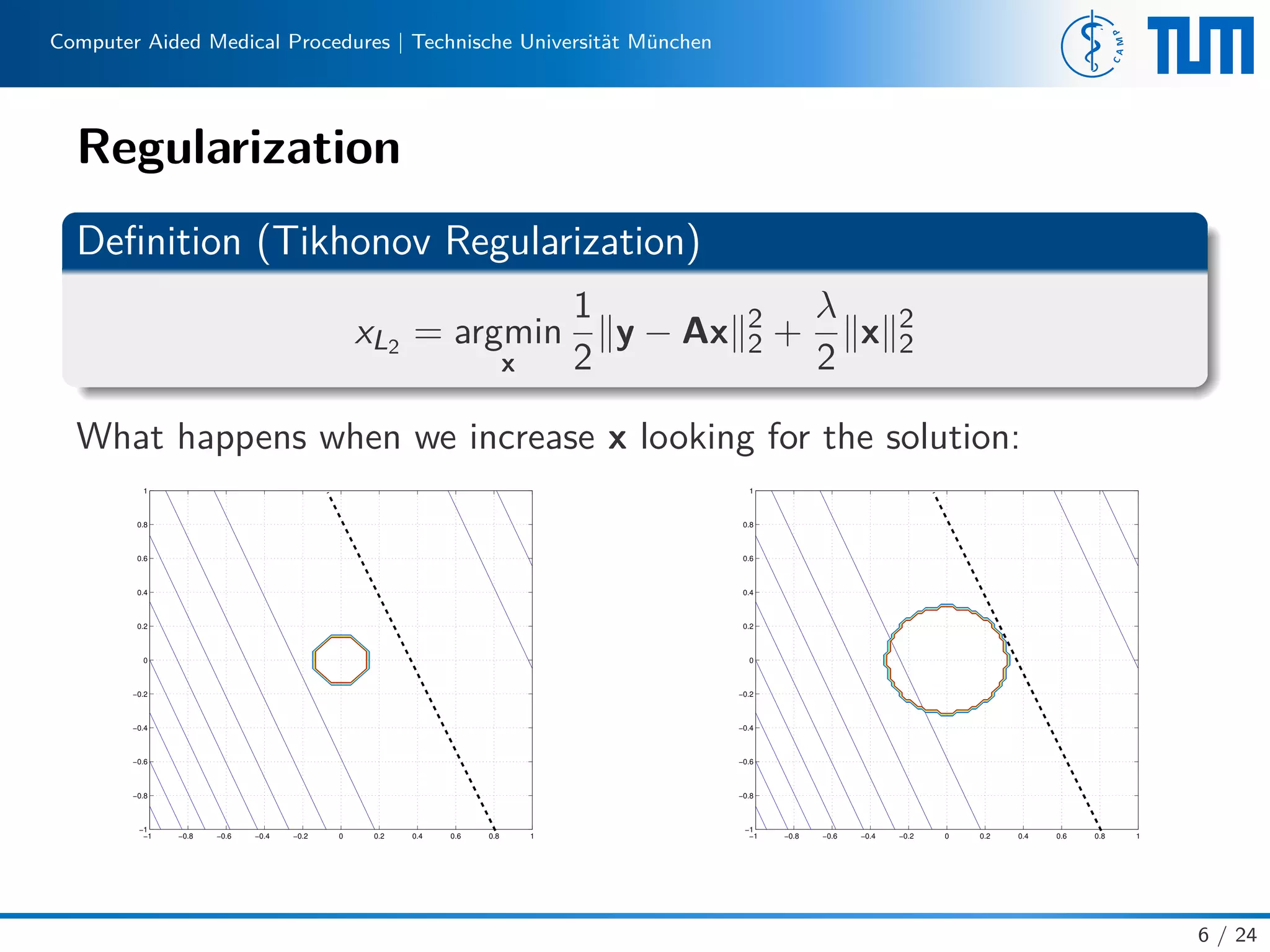

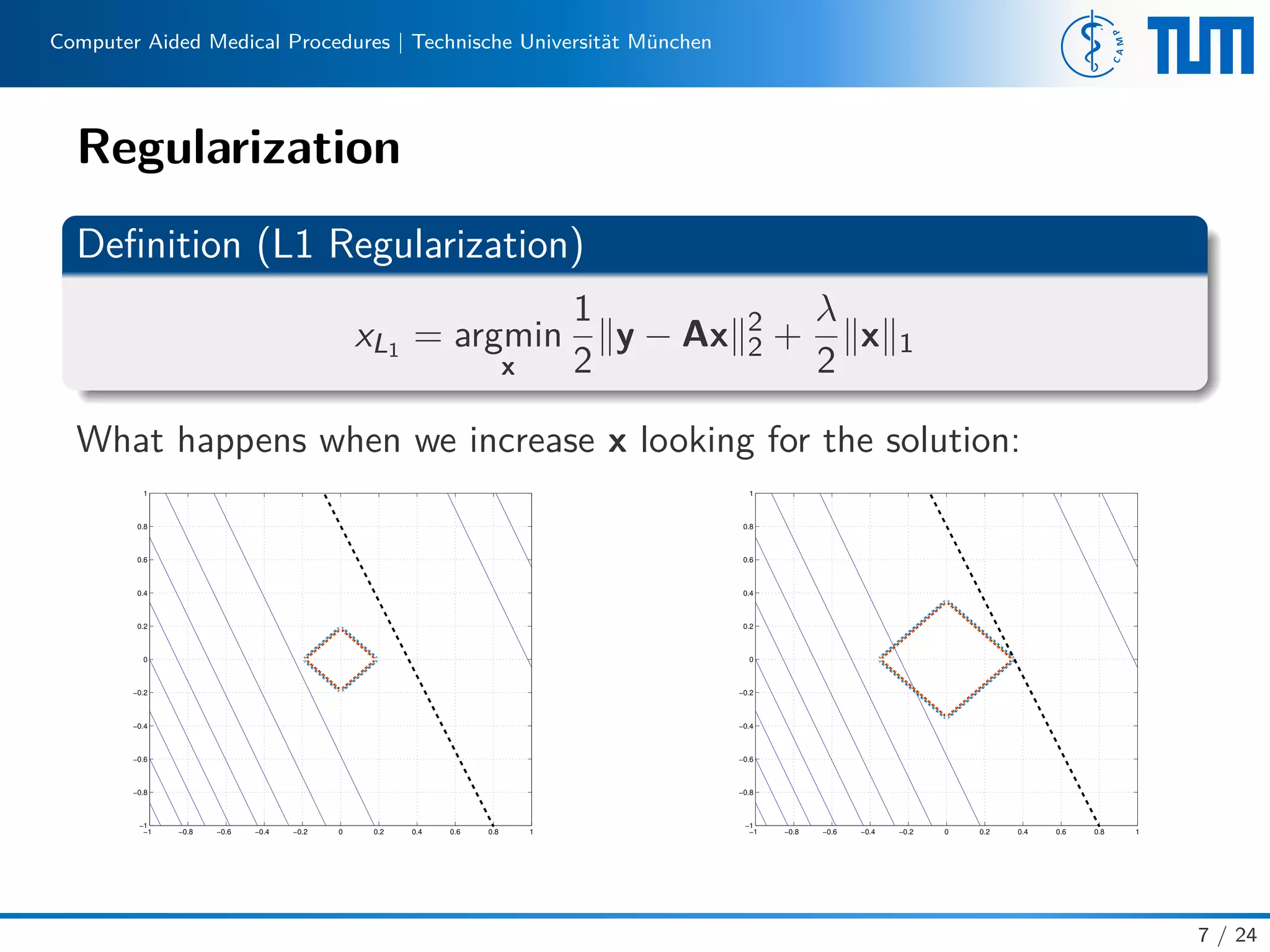

![Computer Aided Medical Procedures | Technische Universität München

Regularization-Extensions

Definition (General Regularization)

$$x_{\mathrm{RLS/MAP}} = \arg\min_{x} \frac{1}{2}\|y - Ax\|_2^2 + \lambda P(x)$$

• Incorporate different regularization terms into the objective function, such as the p-norm $\|x\|_p$ [2] [3]

(Figure panels: $\|x\|_0$, $\|x\|_1$, $\|x\|_2$, $\|x\|_4$)

• Use other RKHS functions

• Incorporate compressive sensing (CS) for sparse prior assumptions

8 / 24](https://image.slidesharecdn.com/lwcwf7xqmypqi2cubpv5-signature-49459baad3b4f2df83805f7a4a101ee788648bf968f26a182df1810d50ca8bec-poli-150529201109-lva1-app6891/75/Introduction-to-Sparse-Methods-12-2048.jpg)
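
As a rough illustration of how the choice of $P(x)$ changes the solution of the general objective above, the sketch below solves it for two penalties. These choices are assumptions made for the example, not prescribed by the slides: $P(x) = \frac{1}{2}\|x\|_2^2$, which has a closed-form (ridge) solution, and $P(x) = \|x\|_1$, handled here with plain iterative soft-thresholding (ISTA). The function names and the iteration count are hypothetical.

```python
import numpy as np

def ridge(A, y, lam):
    """Minimize 0.5*||y - A x||_2^2 + lam * 0.5*||x||_2^2 in closed form."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ y)

def soft_threshold(v, t):
    """Proximal operator of t*||.||_1: shrink every entry towards zero by t."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def ista(A, y, lam, n_iter=500):
    """Minimize 0.5*||y - A x||_2^2 + lam*||x||_1 by proximal gradient steps."""
    lipschitz = np.linalg.norm(A, 2) ** 2   # largest eigenvalue of A^T A
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)            # gradient of the data term
        x = soft_threshold(x - grad / lipschitz, lam / lipschitz)
    return x

# With A = I the l1 solution is the soft-thresholded data, which makes the
# sparsifying effect of the l1 penalty easy to see next to the ridge solution.
A = np.eye(4)
y = np.array([3.0, -0.5, 0.2, -2.0])
x_l1 = ista(A, y, lam=1.0)
x_l2 = ridge(A, y, lam=1.0)
print("l1:", x_l1)
print("l2:", x_l2)
```

The $\ell_1$ penalty sets small entries exactly to zero, whereas the squared $\ell_2$ penalty only scales all entries down.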

![Computer Aided Medical Procedures | Technische Universität München

OMP – Update Step

• Again, it can be solved by the closed-form least-squares (LSE) solution $\alpha_S = (D_S^T D_S)^{-1} D_S^T y$. However, the update step is expensive.

• $D_S^T D_S$ is symmetric positive-definite and is updated by appending a single row and column

• Its Cholesky factorization requires only the computation of its last row

• For a large set of signals, Batch-OMP can be used [4]

15 / 24](https://image.slidesharecdn.com/lwcwf7xqmypqi2cubpv5-signature-49459baad3b4f2df83805f7a4a101ee788648bf968f26a182df1810d50ca8bec-poli-150529201109-lva1-app6891/75/Introduction-to-Sparse-Methods-19-2048.jpg)
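
The update step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from [4]: the function names `cholesky_append` and `omp`, the stopping tolerance, and the use of `np.linalg.solve` on the triangular factor (a dedicated triangular solver would be cheaper) are choices made for this example. It also assumes the selected atoms are linearly independent.

```python
import numpy as np

def cholesky_append(L, g_cross, g_new):
    """Extend lower-triangular L (L @ L.T = G) to the Cholesky factor of
    [[G, g_cross], [g_cross.T, g_new]] by computing only the new last row."""
    k = L.shape[0]
    if k == 0:
        return np.array([[np.sqrt(g_new)]])
    w = np.linalg.solve(L, g_cross)         # solves L w = g_cross
    L_new = np.zeros((k + 1, k + 1))
    L_new[:k, :k] = L
    L_new[k, :k] = w
    L_new[k, k] = np.sqrt(g_new - w @ w)    # positive for independent atoms
    return L_new

def omp(D, y, n_nonzero, tol=1e-10):
    """Orthogonal matching pursuit with an incrementally updated Cholesky factor."""
    support = []
    L = np.zeros((0, 0))
    alpha_S = np.zeros(0)
    residual = y.astype(float)
    for _ in range(n_nonzero):
        if np.linalg.norm(residual) < tol:
            break
        corr = np.abs(D.T @ residual)
        if support:
            corr[support] = 0.0             # never pick the same atom twice
        j = int(np.argmax(corr))
        d = D[:, j]
        g_cross = D[:, support].T @ d if support else np.zeros(0)
        L = cholesky_append(L, g_cross, d @ d)
        support.append(j)
        # Solve (D_S^T D_S) alpha_S = D_S^T y using the factor L.
        rhs = D[:, support].T @ y
        alpha_S = np.linalg.solve(L.T, np.linalg.solve(L, rhs))
        residual = y - D[:, support] @ alpha_S
    alpha = np.zeros(D.shape[1])
    alpha[support] = alpha_S
    return alpha

# Usage on a toy orthonormal dictionary, where exact recovery is expected.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((8, 8)))
alpha_true = np.zeros(8)
alpha_true[[1, 5]] = [2.0, -3.0]
alpha_hat = omp(Q, Q @ alpha_true, n_nonzero=2)
```

Each iteration reuses the previous factor and adds one row, instead of refactorizing $D_S^T D_S$ from scratch.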

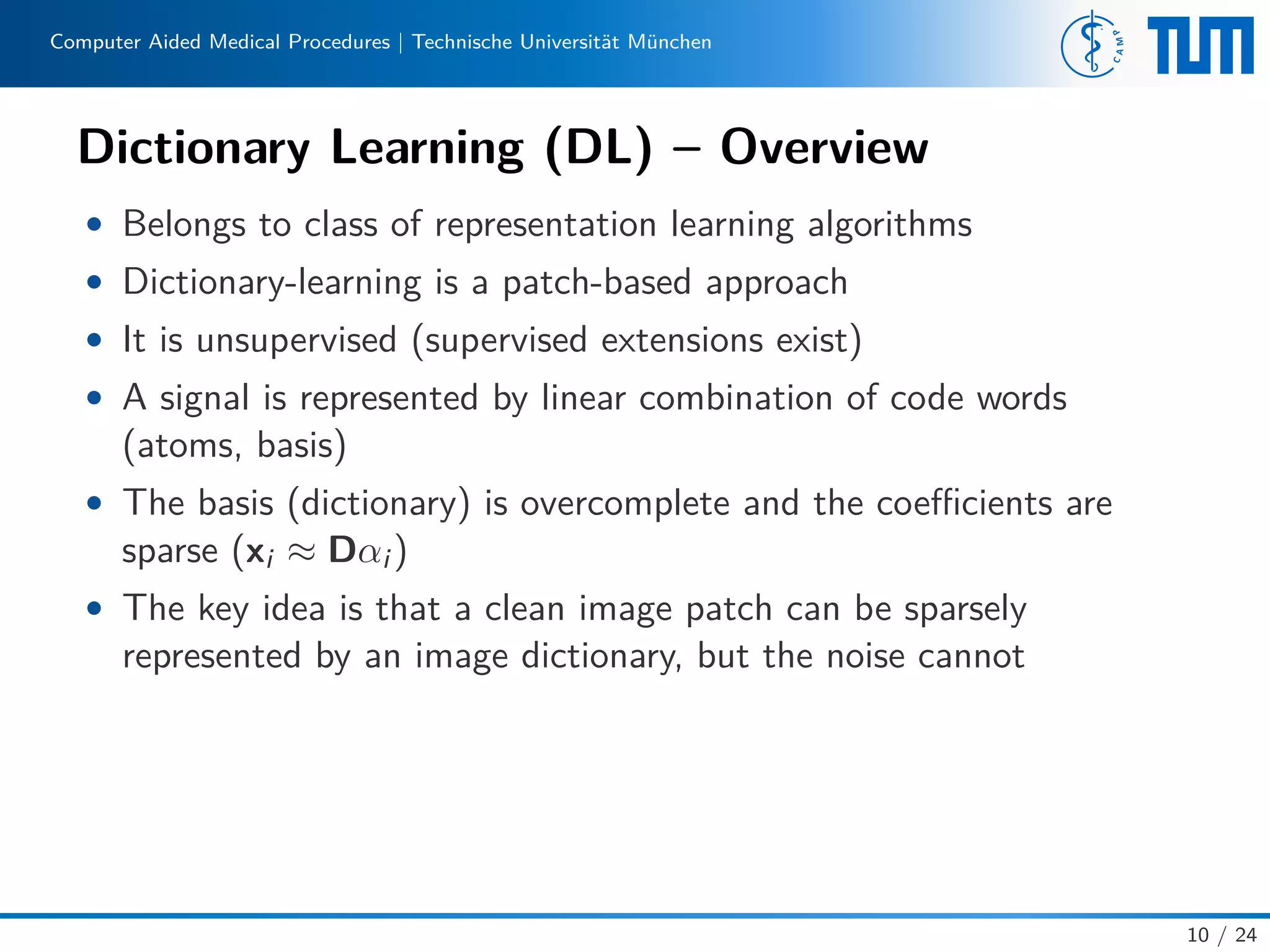

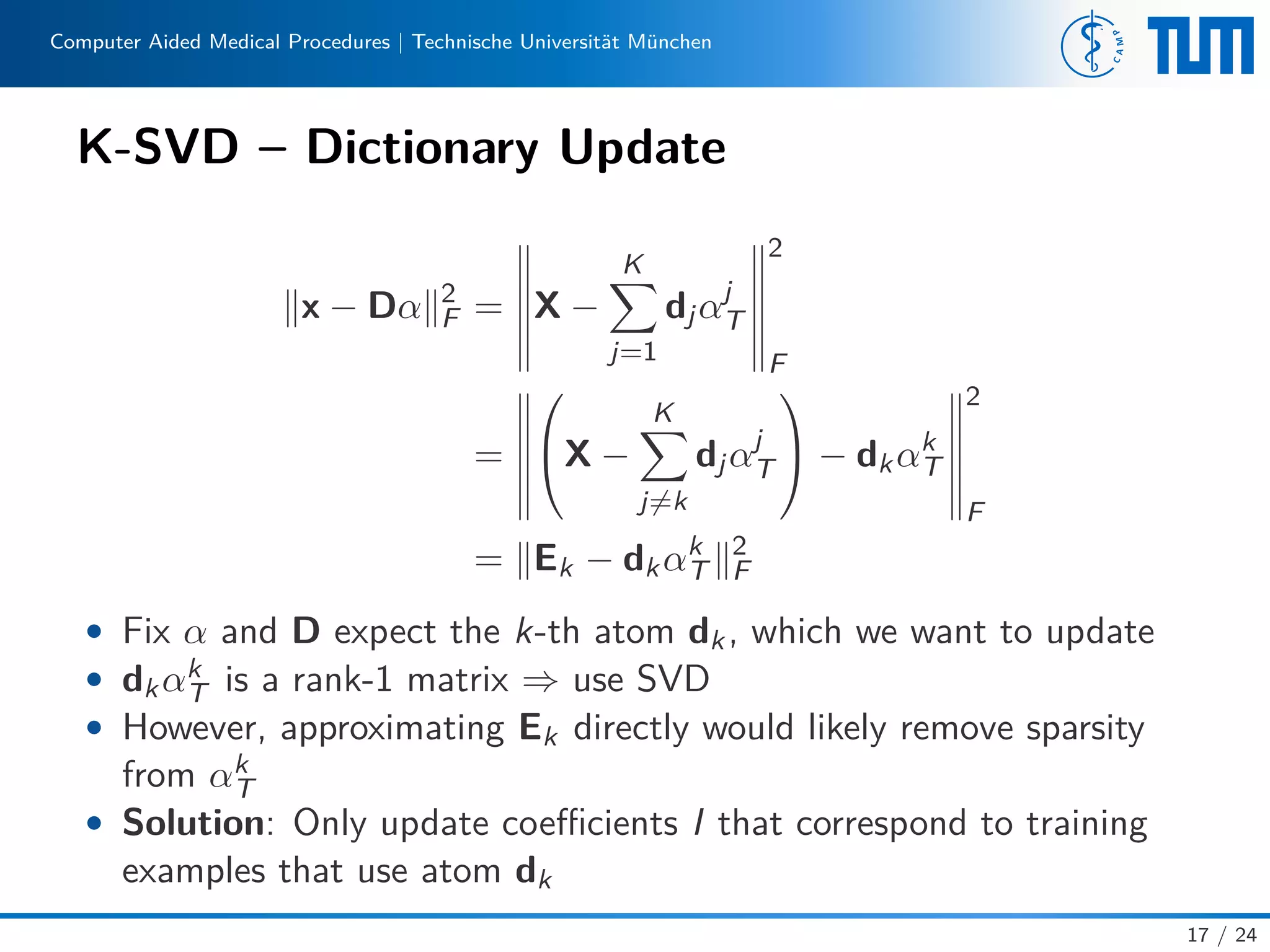

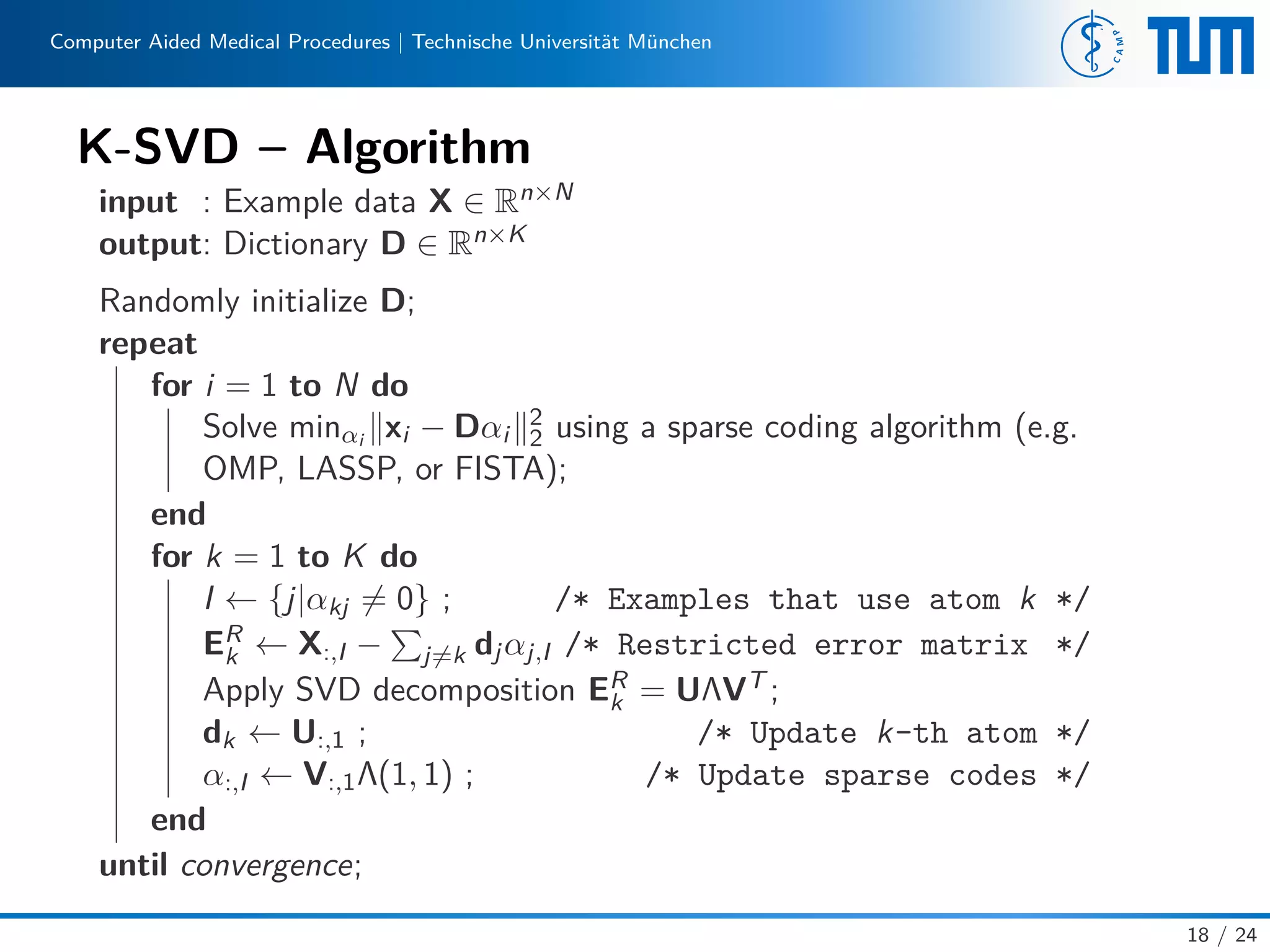

![Computer Aided Medical Procedures | Technische Universität München

K-SVD [5]

• The dictionary learning problem is both non-convex and non-smooth

• Minimize the objective function iteratively by

1. fixing D and finding the best sparse codes α

2. updating one atom $d_k$ at a time while keeping all other atoms fixed, and changing its non-zero coefficients at the same time (the support does not change)

• Pruning step:

◦ Eliminate atoms that are too close to each other

◦ Eliminate atoms that are used by fewer than b training examples

◦ Replace them with the least-explained samples

16 / 24](https://image.slidesharecdn.com/lwcwf7xqmypqi2cubpv5-signature-49459baad3b4f2df83805f7a4a101ee788648bf968f26a182df1810d50ca8bec-poli-150529201109-lva1-app6891/75/Introduction-to-Sparse-Methods-20-2048.jpg)
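
The atom update in step 2 can be sketched with a rank-one SVD of the residual restricted to the signals that use the atom. This is a minimal sketch rather than the reference implementation from [5]. The layout (signals as columns of `X`, atoms as columns of `D`, codes as columns of `A`) and the function name `ksvd_atom_update` are assumptions made for the example, and the pruning step is not shown.

```python
import numpy as np

def ksvd_atom_update(X, D, A, k):
    """Update atom k of D and its non-zero coefficients in row k of A.

    X: (n, N) signals, D: (n, K) dictionary, A: (K, N) sparse codes.
    Returns updated copies; the support of row k of A is not enlarged.
    """
    users = np.flatnonzero(A[k, :])         # signals whose code uses atom k
    if users.size == 0:
        return D.copy(), A.copy()           # unused atom: left to the pruning step
    D, A = D.copy(), A.copy()
    # Residual of those signals with the contribution of atom k added back.
    E = X[:, users] - D @ A[:, users] + np.outer(D[:, k], A[k, users])
    # The best rank-one approximation of E gives the new atom and coefficients.
    U, s, Vt = np.linalg.svd(E, full_matrices=False)
    D[:, k] = U[:, 0]                       # unit-norm atom
    A[k, users] = s[0] * Vt[0, :]
    return D, A

# Usage on random data: the reconstruction error should not increase.
rng = np.random.default_rng(0)
X = rng.standard_normal((6, 20))
D0 = rng.standard_normal((6, 4))
D0 /= np.linalg.norm(D0, axis=0)
A0 = rng.standard_normal((4, 20)) * (rng.random((4, 20)) < 0.5)
err_before = np.linalg.norm(X - D0 @ A0)
D1, A1 = ksvd_atom_update(X, D0, A0, k=1)
err_after = np.linalg.norm(X - D1 @ A1)
```

Sweeping `k` over all atoms and alternating with a sparse coding step (for example OMP) gives one way to organize the iteration described on the slide.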

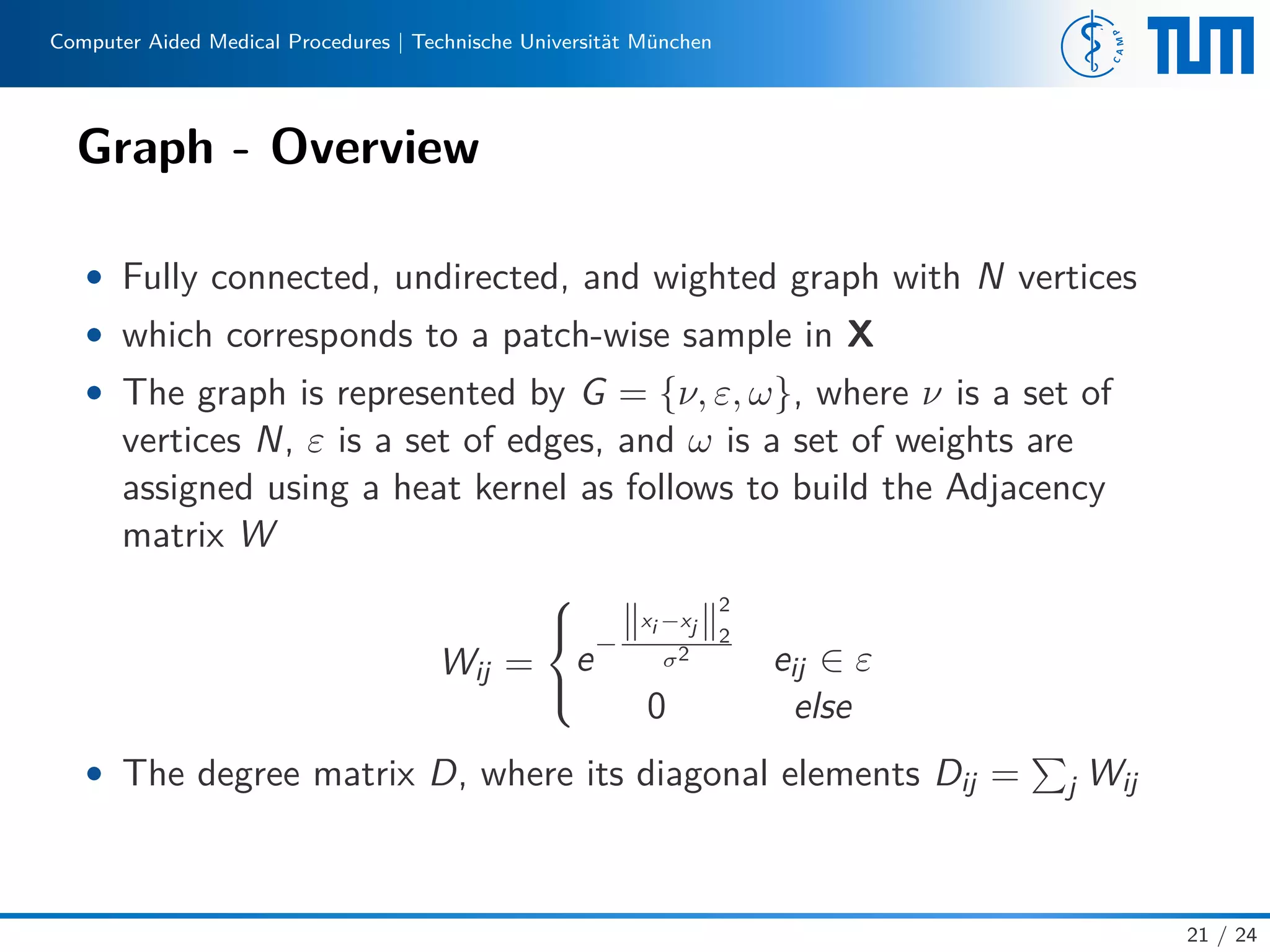

![Computer Aided Medical Procedures | Technische Universität München

Graph Sparse Coding (GraphSC) [6]

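• Build the normalized Laplacian matrix $\tilde{L}$ from the transition matrix $L_t = D^{-1}W$ (in this expression, $D$ is the degree matrix of the graph and $W$ its weight matrix)

Definition (GraphSC)

$$\arg\min_{D,\alpha} \frac{1}{2}\|x - D\alpha\|_2^2 + \lambda\|\alpha\|_0 + \operatorname{Tr}(\alpha^T \tilde{L} \alpha)$$

GraphSC-Extension

• Incorporate semi-supervised discriminative classification [7]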

22 / 24](https://image.slidesharecdn.com/lwcwf7xqmypqi2cubpv5-signature-49459baad3b4f2df83805f7a4a101ee788648bf968f26a182df1810d50ca8bec-poli-150529201109-lva1-app6891/75/Introduction-to-Sparse-Methods-26-2048.jpg)
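
The graph term of the GraphSC objective can be evaluated as in the sketch below. Several points are assumptions, since the slide does not fix them: $\tilde{L}$ is taken to be the symmetric normalized Laplacian $I - D^{-1/2} W D^{-1/2}$, which equals $D^{1/2}(I - L_t)D^{-1/2}$; the weight matrix `W` is symmetric, non-negative, and has no isolated nodes; and the codes are stored with one row per sample so that $\alpha^T \tilde{L} \alpha$ is well defined. All function names are hypothetical.

```python
import numpy as np

def transition_matrix(W):
    """Random-walk transition matrix L_t = D^{-1} W (each row sums to one)."""
    return W / W.sum(axis=1, keepdims=True)

def normalized_laplacian(W):
    """Symmetric normalized Laplacian, built as D^{1/2} (I - L_t) D^{-1/2}."""
    sqrt_deg = np.sqrt(W.sum(axis=1))
    identity = np.eye(W.shape[0])
    return sqrt_deg[:, None] * (identity - transition_matrix(W)) / sqrt_deg[None, :]

def graphsc_objective(X, dictionary, codes, L_norm, lam):
    """Value of 0.5*||X - D alpha^T||_F^2 + lam*||alpha||_0 + Tr(alpha^T L alpha).

    X: (n, N) signals as columns, dictionary: (n, K), codes: (N, K).
    """
    residual = X - dictionary @ codes.T
    data_term = 0.5 * np.sum(residual ** 2)
    sparsity = lam * np.count_nonzero(codes)
    smoothness = np.trace(codes.T @ L_norm @ codes)
    return data_term + sparsity + smoothness

# Toy graph on five samples with random symmetric weights.
rng = np.random.default_rng(0)
B = rng.random((5, 5))
W = B + B.T
np.fill_diagonal(W, 0.0)
L_norm = normalized_laplacian(W)
codes = rng.standard_normal((5, 3))
X = rng.standard_normal((4, 5))
dictionary = rng.standard_normal((4, 3))
value = graphsc_objective(X, dictionary, codes, L_norm, lam=0.1)
```

With this choice of Laplacian, the trace term penalizes differences between the degree-scaled codes of samples that are strongly connected in the graph.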