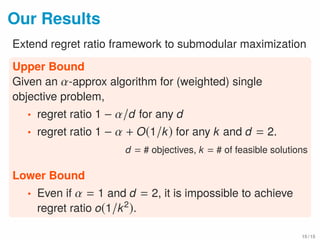

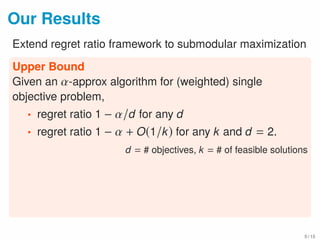

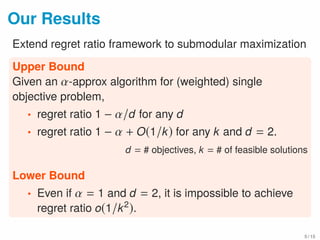

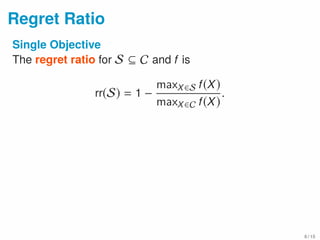

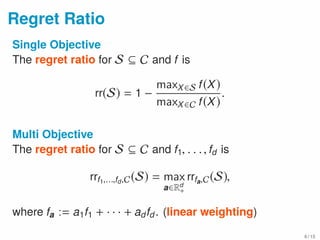

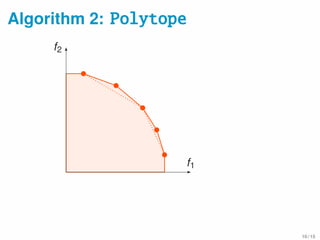

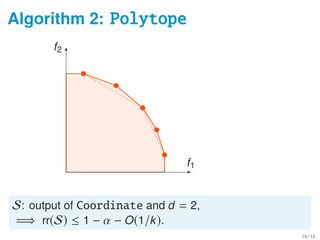

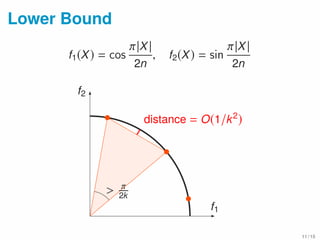

This document presents algorithms for minimizing the regret ratio in multi-objective submodular function maximization. It introduces the regret ratio as a measure of how well a small set of solutions serves multiple objectives at once. It then proposes two algorithms, Coordinate and Polytope, that give upper bounds on the regret ratio by using approximation algorithms for the single-objective problem as subroutines. Experimental results on a movie recommendation dataset show that the proposed algorithms achieve significantly lower regret ratios than a random baseline.
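To make the regret ratio concrete, here is a minimal brute-force sketch for two objectives. It assumes one common formalization that the summary above does not spell out: for each nonnegative weight `a`, compare the best scalarized value within the solution set against the best value over all subsets, and report the worst relative gap. The names `powerset` and `regret_ratio`, the weight grid, and the toy objectives are all illustrative assumptions, and the exhaustive search is only feasible for tiny ground sets.

```python
from itertools import combinations


def powerset(ground):
    """All subsets of a small ground set, as frozensets."""
    items = list(ground)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def regret_ratio(f1, f2, solutions, ground, steps=10):
    """Estimate the regret ratio of `solutions` for two objectives.

    Assumed definition: the maximum over weights (a, 1 - a) of
    1 - (best scalarized value in `solutions`) / (best value over all subsets).
    The weights are sampled on a uniform grid, so this is only an estimate.
    """
    all_sets = powerset(ground)
    worst = 0.0
    for t in range(steps + 1):
        a = t / steps

        def scalarized(X, a=a):
            return a * f1(X) + (1 - a) * f2(X)

        best = max(scalarized(X) for X in all_sets)
        if best <= 0:
            continue  # ratio undefined for this weight; skip it
        achieved = max(scalarized(X) for X in solutions)
        worst = max(worst, 1 - achieved / best)
    return worst


# Toy, conflicting objectives on E = {0, 1, 2} (hypothetical example).
E = {0, 1, 2}
size = lambda X: float(len(X))             # prefers large sets
left_out = lambda X: float(len(E - X))     # prefers small sets

both_extremes = [frozenset(), frozenset(E)]
single = [frozenset({0})]
```

In this toy instance, offering both extreme solutions should leave no regret for any weight, while offering only `{0}` leaves a gap whenever one objective dominates the weighting.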

![Multi-objective Optimization?

× Exponentially many Pareto solutions!

“Good” subsets of Pareto solutions:

• k-representative skyline queries [Lin et al. 07, Tao et al. 09]

• top-k dominating queries [Yiu and Mamoulis 09]

• regret minimizing database [Nanongkai et al. 10]

Issue: These assume data points are explicitly given...](https://image.slidesharecdn.com/aaai-170209064605/85/Regret-Minimization-in-Multi-objective-Submodular-Function-Maximization-9-320.jpg)

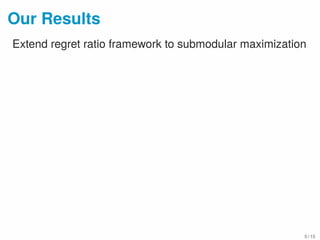

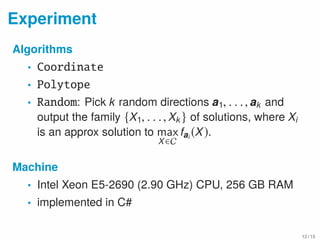

![Data Summarization

Dataset: MovieLens

$E$: set of movies, $s_{i,j}$: similarity of movies $i$ and $j$

Coverage: $f_1(X) = \sum_{i \in E} \sum_{j \in X} s_{i,j}$

Diversity: $f_2(X) = \lambda \sum_{i \in E} \sum_{j \in E} s_{i,j} - \lambda \sum_{i \in X} \sum_{j \in X} s_{i,j}$

$C = 2^E$ (unconstrained), $1 \le k \le 20$, $\lambda > 0$

Single-objective algorithm: double greedy (1/2-approx) [Buchbinder et al. 12]](https://image.slidesharecdn.com/aaai-170209064605/85/Regret-Minimization-in-Multi-objective-Submodular-Function-Maximization-27-320.jpg)
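The coverage and diversity objectives on this slide, together with the double greedy subroutine it names, can be sketched as follows. This is an illustrative sketch, not the authors' experimental code: the similarity matrix `S` is a made-up toy example rather than MovieLens data, the function names are assumptions, and `double_greedy` follows the randomized variant of Buchbinder et al., whose 1/2 guarantee holds in expectation for nonnegative submodular functions.

```python
import random


def coverage(X, S):
    """f1(X): total similarity between every item in E and every item in X."""
    return sum(S[i][j] for i in range(len(S)) for j in X)


def diversity(X, S, lam):
    """f2(X): lam * (total similarity over E x E) - lam * (similarity within X)."""
    n = len(S)
    total = sum(S[i][j] for i in range(n) for j in range(n))
    within = sum(S[i][j] for i in X for j in X)
    return lam * total - lam * within


def double_greedy(f, ground, rng):
    """Randomized double greedy for unconstrained submodular maximization.

    X grows from the empty set and Y shrinks from the full ground set;
    each element is kept or dropped with probability proportional to the
    (clipped) marginal gain of each choice.
    """
    X, Y = set(), set(ground)
    for u in ground:
        gain_add = max(f(X | {u}) - f(X), 0.0)
        gain_remove = max(f(Y - {u}) - f(Y), 0.0)
        total = gain_add + gain_remove
        p_add = 1.0 if total == 0 else gain_add / total
        if rng.random() < p_add:
            X.add(u)
        else:
            Y.discard(u)
    return X  # X == Y once every element has been processed


# Toy symmetric similarity matrix (hypothetical values).
S = [[1.0, 0.5, 0.2],
     [0.5, 1.0, 0.3],
     [0.2, 0.3, 1.0]]
ground = [0, 1, 2]
lam = 0.5
rng = random.Random(0)

# Scalarize the two objectives with an example weight and run the subroutine.
weight = 0.5
scalarized = lambda X: weight * coverage(X, S) + (1 - weight) * diversity(X, S, lam)
summary = double_greedy(scalarized, ground, rng)
```

Because coverage alone is monotone when similarities are positive, running `double_greedy` on it simply returns the whole ground set; the trade-off only appears once diversity receives some weight.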