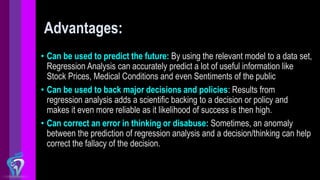

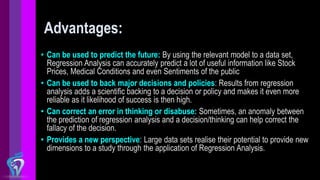

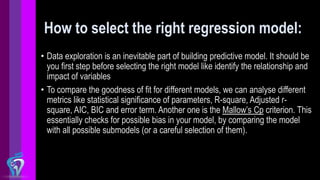

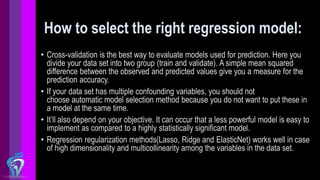

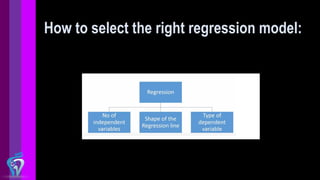

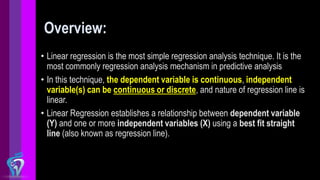

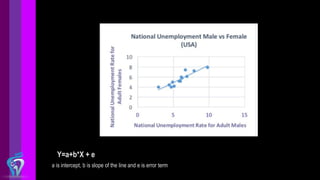

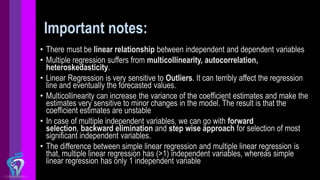

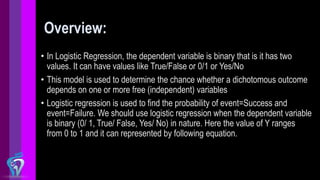

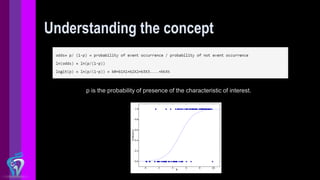

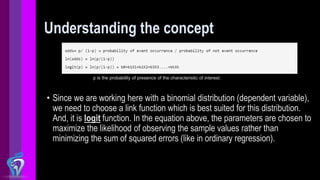

The document provides an overview of regression analysis techniques, including linear regression and logistic regression. It explains that regression analysis models the relationship between a dependent variable and one or more independent variables, and that the fitted model can then be used for prediction. Linear regression applies when the dependent variable is continuous, while logistic regression applies when the dependent variable is binary. The document also discusses how to select the appropriate regression model and highlights important considerations for linear and logistic regression.
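
To make the distinction between the two models concrete, here is a minimal sketch in plain Python, not taken from the document itself. It fits a one-predictor linear regression by closed-form least squares and a one-predictor logistic regression by gradient descent. The data (`hours`, `score`, `passed`), the function names, and the learning-rate and step settings are all hypothetical choices made for illustration.

```python
import math


def fit_linear(xs, ys):
    """Ordinary least squares for one predictor: y ~ intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sigmoid(z):
    """Map a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, steps=5000):
    """One-predictor logistic regression via batch gradient descent on mean log-loss."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(b0 + b1 * x) - y  # predicted probability minus label
            g0 += err
            g1 += err * x
        b0 -= lr * g0 / n
        b1 -= lr * g1 / n
    return b0, b1


# Hypothetical data: hours studied versus two kinds of outcome.
hours = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
score = [52.0, 55.0, 61.0, 64.0, 70.0, 73.0]  # continuous dependent variable
passed = [0, 0, 1, 0, 1, 1]                   # binary dependent variable

intercept, slope = fit_linear(hours, score)
b0, b1 = fit_logistic(hours, passed)

predicted_score_at_5 = intercept + slope * 5.0  # a value on the score scale
prob_pass_at_5 = sigmoid(b0 + b1 * 5.0)         # a probability between 0 and 1
```

The linear model returns a prediction on the same scale as the outcome, while the logistic model returns a probability that would still need a threshold (0.5 is a common but not mandatory choice) to become a yes/no prediction. In practice a statistics library would usually replace both hand-written fitting routines.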