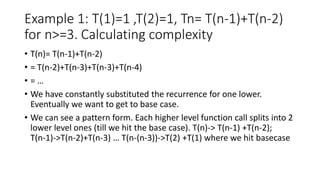

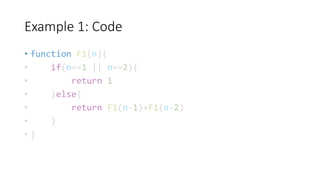

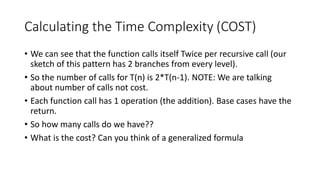

The document discusses recurrence relations for recursive functions, covering how to write them mathematically and how to analyze their asymptotic complexity. It explains how to derive the time complexity of a recursive function by substitution and by sketching the recursion tree, and how to express the result in Big O notation. It emphasizes that understanding these relations matters in software engineering for estimating how many function calls and operations a function performs.
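
As a rough illustration of the substitution approach, consider an assumed example recurrence, T(n) = 2T(n/2) + n with T(1) = 1. It is chosen here for illustration and is not taken from the document. Substituting repeatedly gives T(n) = 4T(n/4) + 2n = 8T(n/8) + 3n, and after k steps T(n) = 2^k T(n/2^k) + kn. For n = 2^k this becomes n log2(n) + n, which is O(n log n). The sketch below evaluates the recurrence directly and compares it with that closed form; the names `T` and `closed_form` are hypothetical.

```python
def T(n: int) -> int:
    """Evaluate the assumed recurrence T(n) = 2*T(n/2) + n, with T(1) = 1.

    n is expected to be a power of two so that n // 2 is exact at every level.
    """
    if n == 1:
        return 1  # base case: constant work
    return 2 * T(n // 2) + n  # two half-size subproblems plus linear work


def closed_form(n: int) -> int:
    """Closed form obtained by substitution: n * log2(n) + n, for n a power of two."""
    k = n.bit_length() - 1  # equals log2(n) when n is a power of two
    return n * k + n


if __name__ == "__main__":
    # Compare the recurrence with the closed form for n = 1, 2, 4, ..., 1024.
    for k in range(11):
        n = 2 ** k
        print(n, T(n), closed_form(n))
```

The same result can be read off a recursion tree: each of the log2(n) levels above the leaves contributes n units of work, and the n leaves contribute one unit each.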