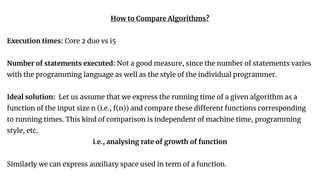

1. Algorithm analysis compares algorithms by how their time and space requirements grow as the input size increases, which identifies the most efficient choice for large inputs.

2. Big-O notation provides an asymptotic upper bound on the growth rate of a function, such as an algorithm's running time, while Omega and Theta notations provide asymptotic lower and tight bounds, respectively.

3. Recurrence relations can be used to analyze the time complexity of recursive algorithms: the running time T(n) is expressed in terms of the running time on smaller inputs.
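
To make the last point concrete, here is a minimal sketch that evaluates one recurrence numerically and compares it with a closed form. The recurrence T(n) = 2T(n/2) + n with T(1) = 1 is an assumed example (the shape that arises for divide-and-conquer algorithms such as merge sort) and is not taken from the summary above. The function names `t` and `closed_form` are hypothetical.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def t(n: int) -> int:
    """Evaluate the assumed recurrence T(n) = 2*T(n // 2) + n, with T(1) = 1.

    Intended for n that is an exact power of two, so n // 2 is always exact.
    """
    if n <= 1:
        return 1
    return 2 * t(n // 2) + n


def closed_form(n: int) -> int:
    """Closed form n*log2(n) + n, valid when n is an exact power of two."""
    log2_n = n.bit_length() - 1  # exact log2 for powers of two
    return n * log2_n + n


if __name__ == "__main__":
    # Print n, the recurrence value, and the closed form side by side.
    for k in range(11):
        n = 2 ** k
        print(n, t(n), closed_form(n))
```

For powers of two the two columns agree, which is consistent with T(n) being Theta(n log n): the n log n term dominates the linear term as n grows.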