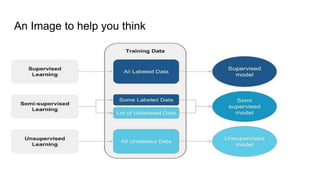

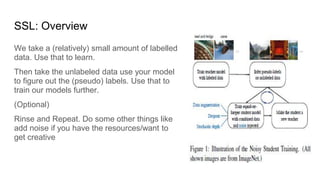

The document discusses semi-supervised learning, which trains a model on labeled and unlabeled data together, typically a small labeled set plus a larger unlabeled pool, so that the model can improve without the expense of labeling every example. It outlines the three key assumptions behind the approach (the continuity, cluster, and manifold assumptions) and compares the method to how humans learn. The aim is to show how the strengths of supervised and unsupervised learning can be combined for better model training.
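To make the continuity and cluster assumptions concrete, here is a minimal sketch of one simple semi-supervised technique: greedy nearest-neighbour label propagation. It is an illustration only, not a method taken from the document. The function name `propagate_labels` and the toy 2D points are hypothetical, and the sketch assumes that points lying close together share a label.

```python
import math


def propagate_labels(labeled, unlabeled):
    """Greedy nearest-neighbour label propagation (illustrative sketch).

    labeled:   list of ((x, y), label) pairs
    unlabeled: list of (x, y) points
    Returns a dict mapping every point to a label.
    """
    known = dict(labeled)
    remaining = list(unlabeled)
    while remaining:
        # Find the unlabeled point closest to any already-labeled point.
        best_point, best_source = min(
            ((u, k) for u in remaining for k in known),
            key=lambda pair: math.dist(pair[0], pair[1]),
        )
        # Continuity/cluster assumption: nearby points share a label.
        known[best_point] = known[best_source]
        remaining.remove(best_point)
    return known


# Hypothetical toy data: two well-separated groups, one labeled point each.
labeled = [((0.0, 0.0), "A"), ((10.0, 10.0), "B")]
unlabeled = [
    (1.0, 0.5), (2.0, 1.0), (3.0, 1.5),
    (9.0, 9.5), (8.0, 9.0), (7.0, 8.5),
]

result = propagate_labels(labeled, unlabeled)
for point, label in sorted(result.items()):
    print(point, label)
```

With only two labeled points, every unlabeled point ends up with the label of the group it sits in, because labels spread outward one nearest neighbour at a time. On real data the assumption can fail where classes overlap, so a practical system would usually add a confidence threshold or use an established library implementation instead of this greedy loop.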