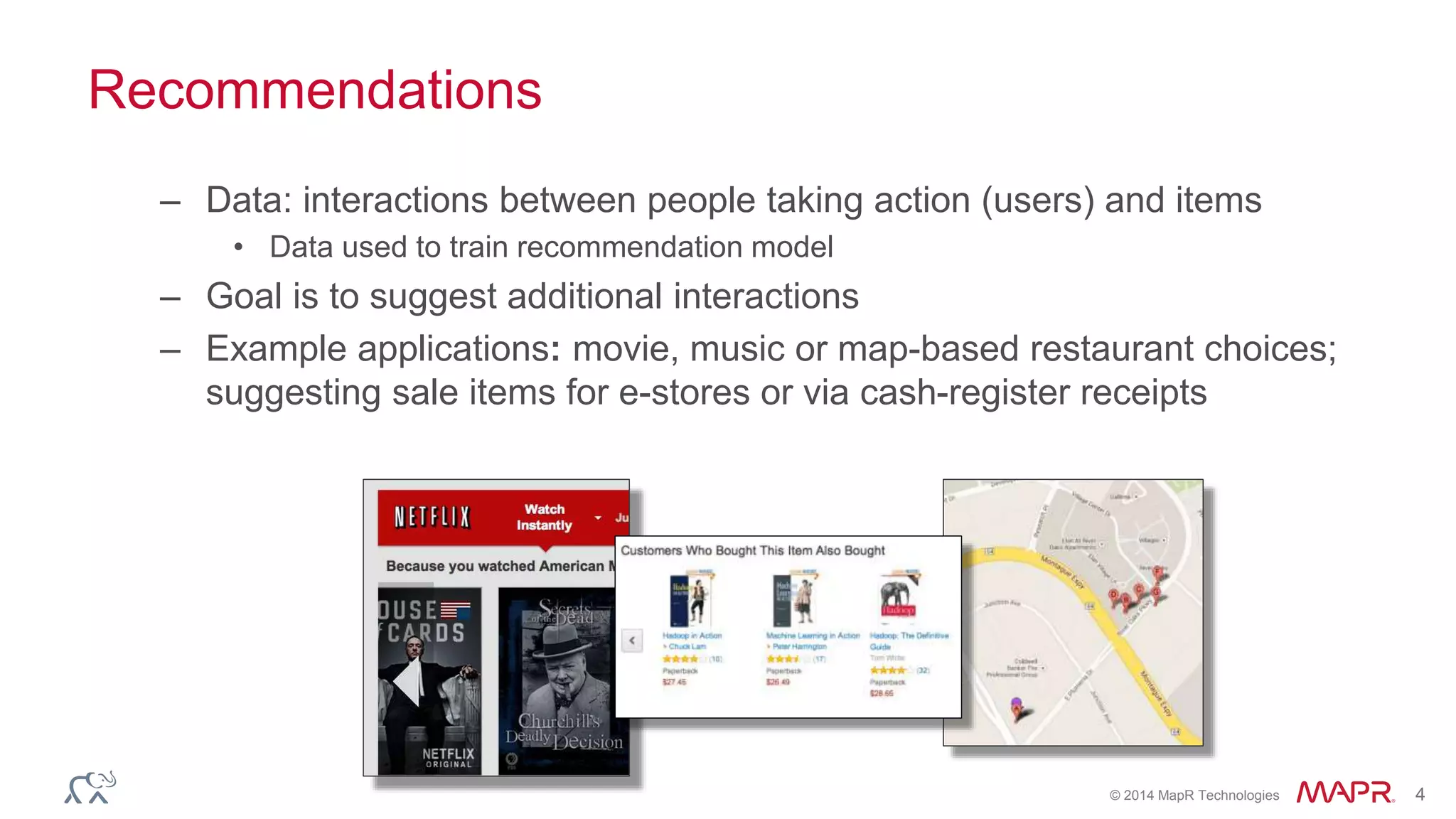

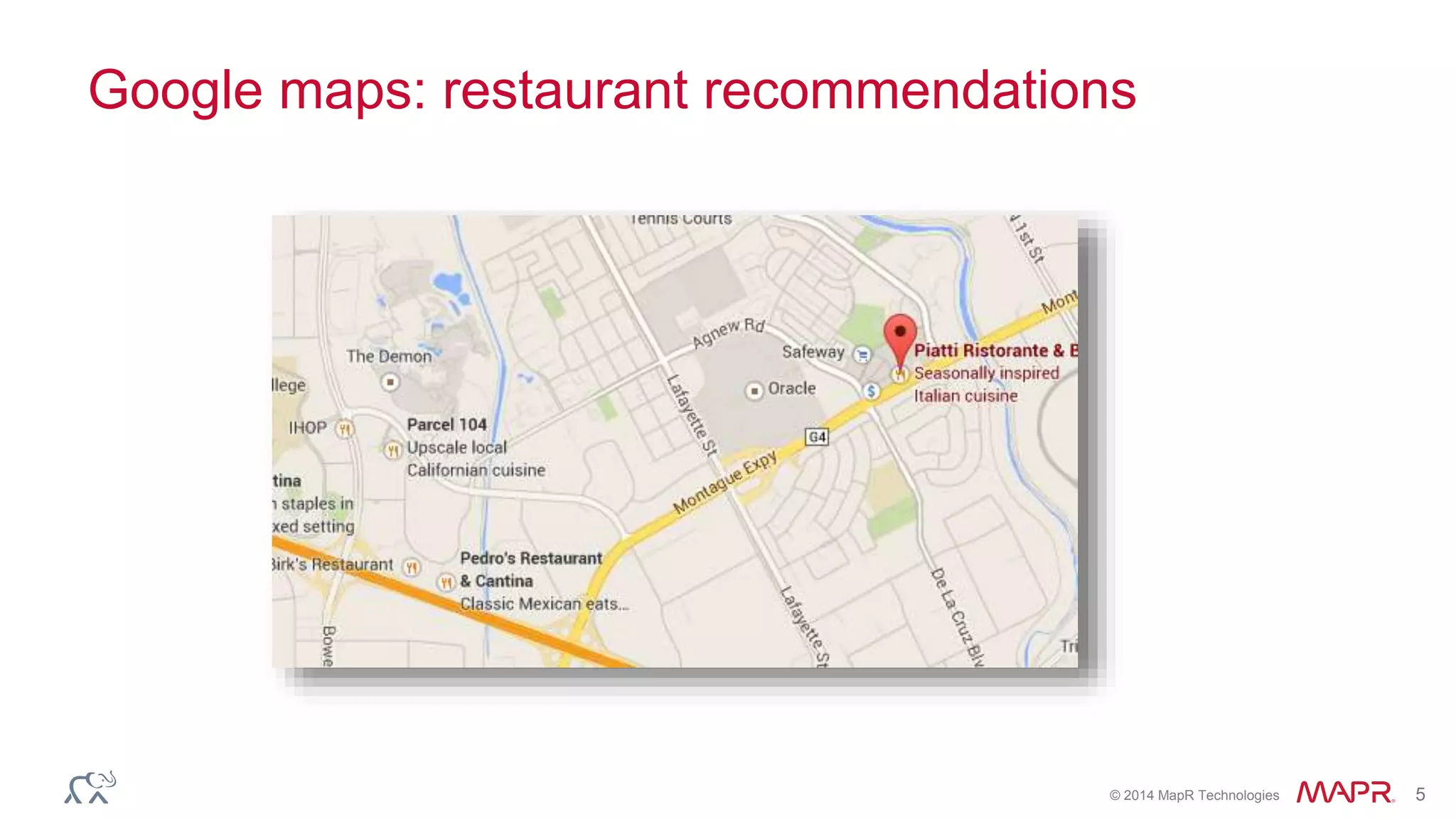

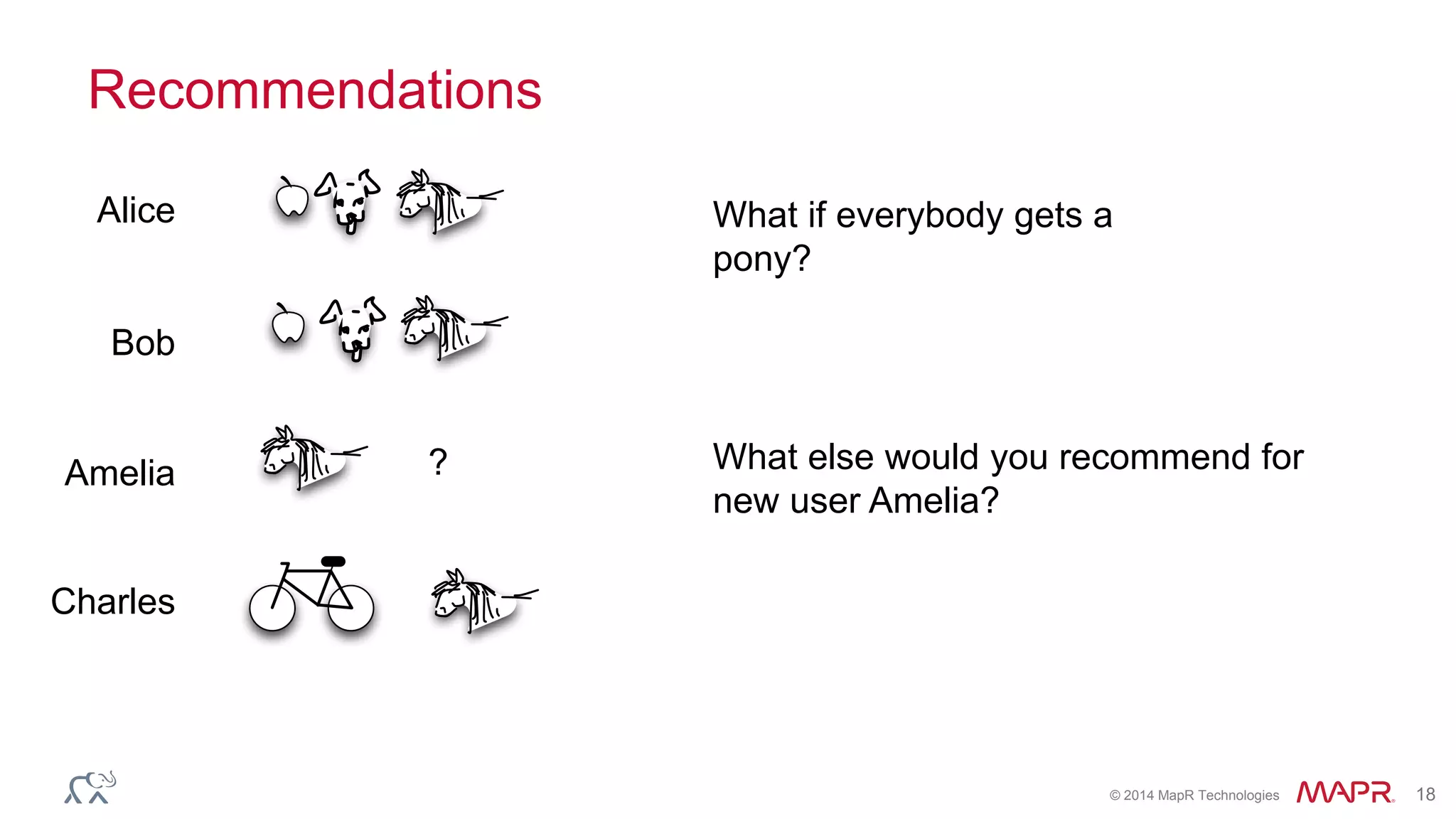

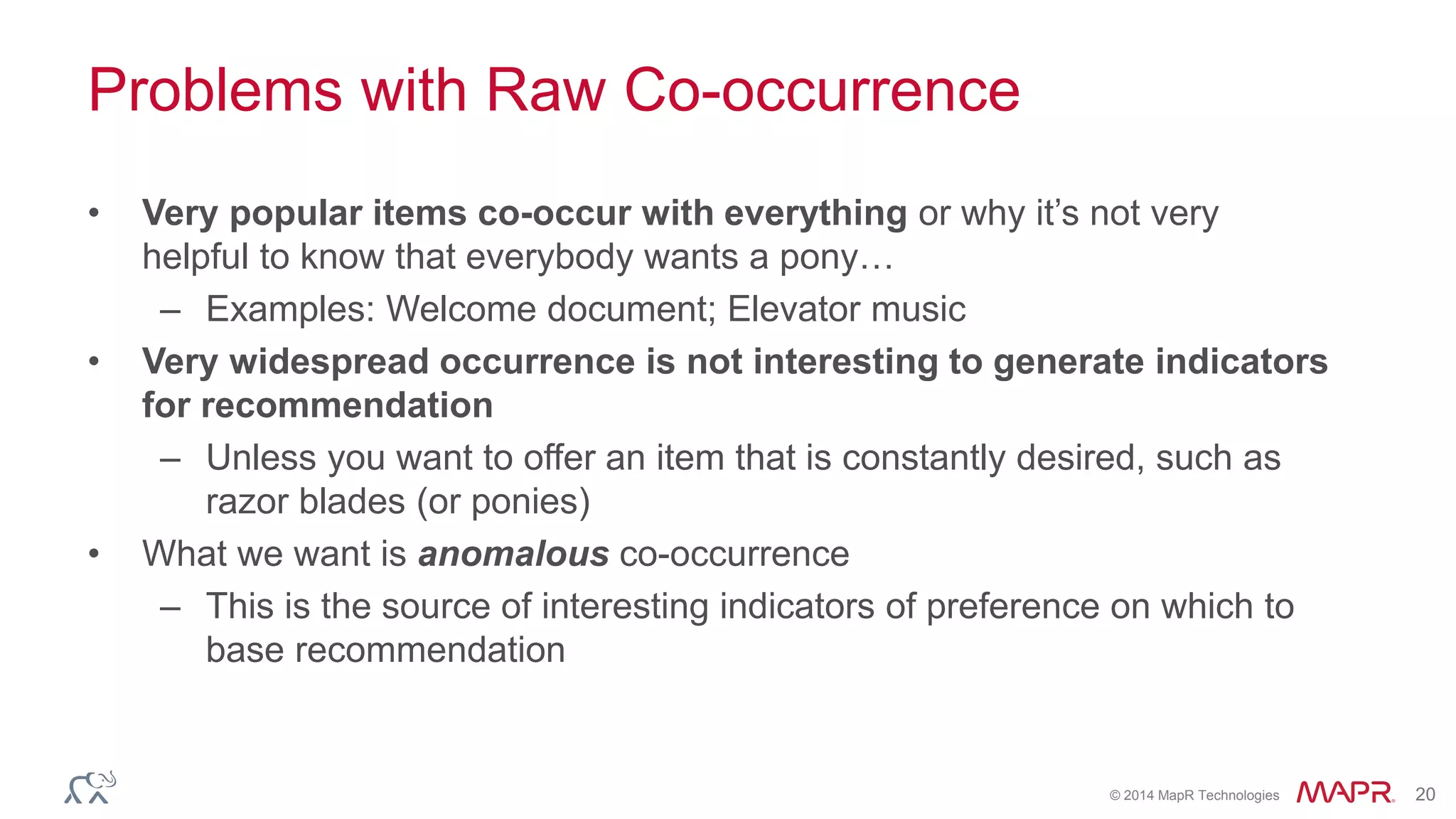

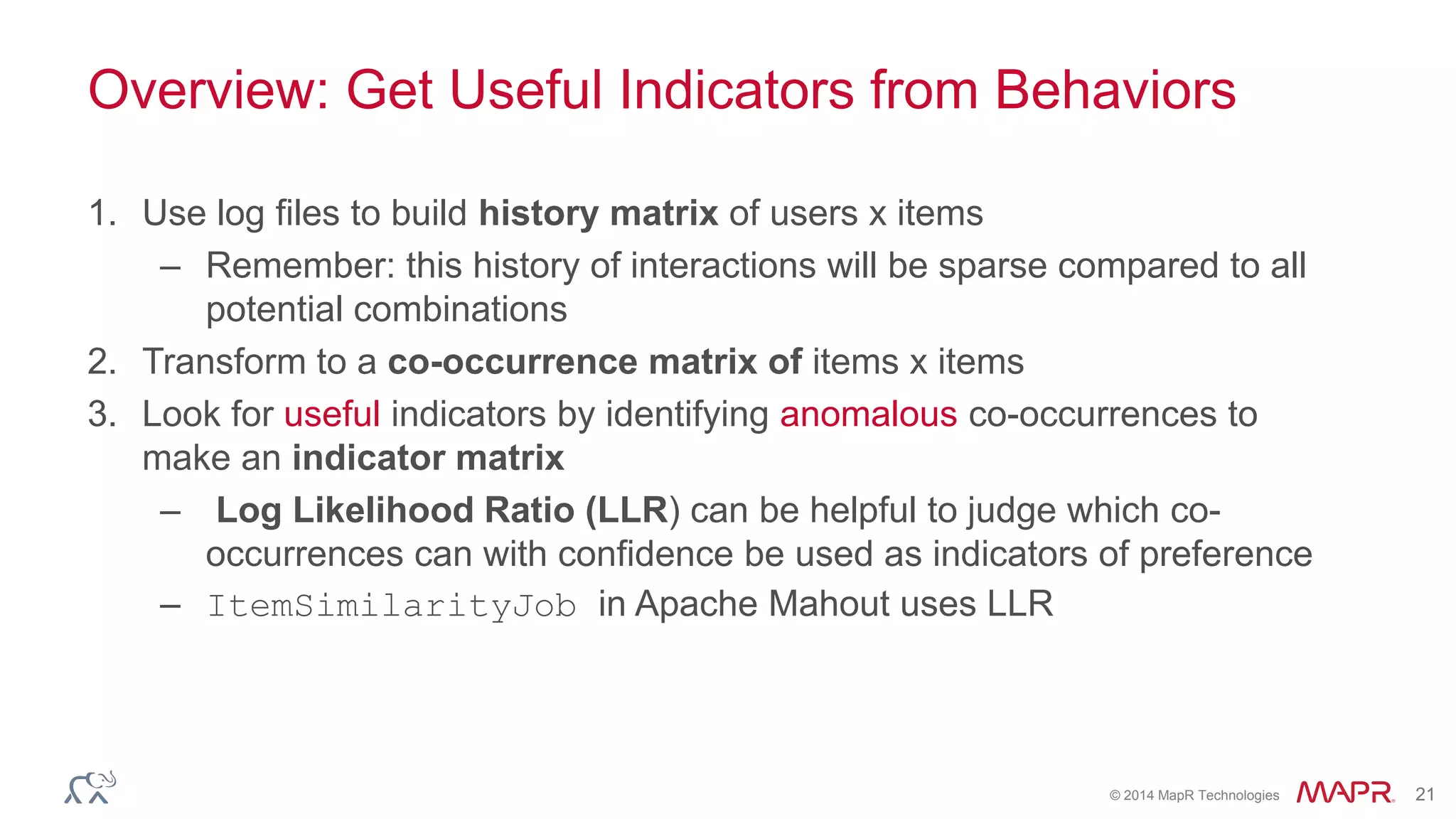

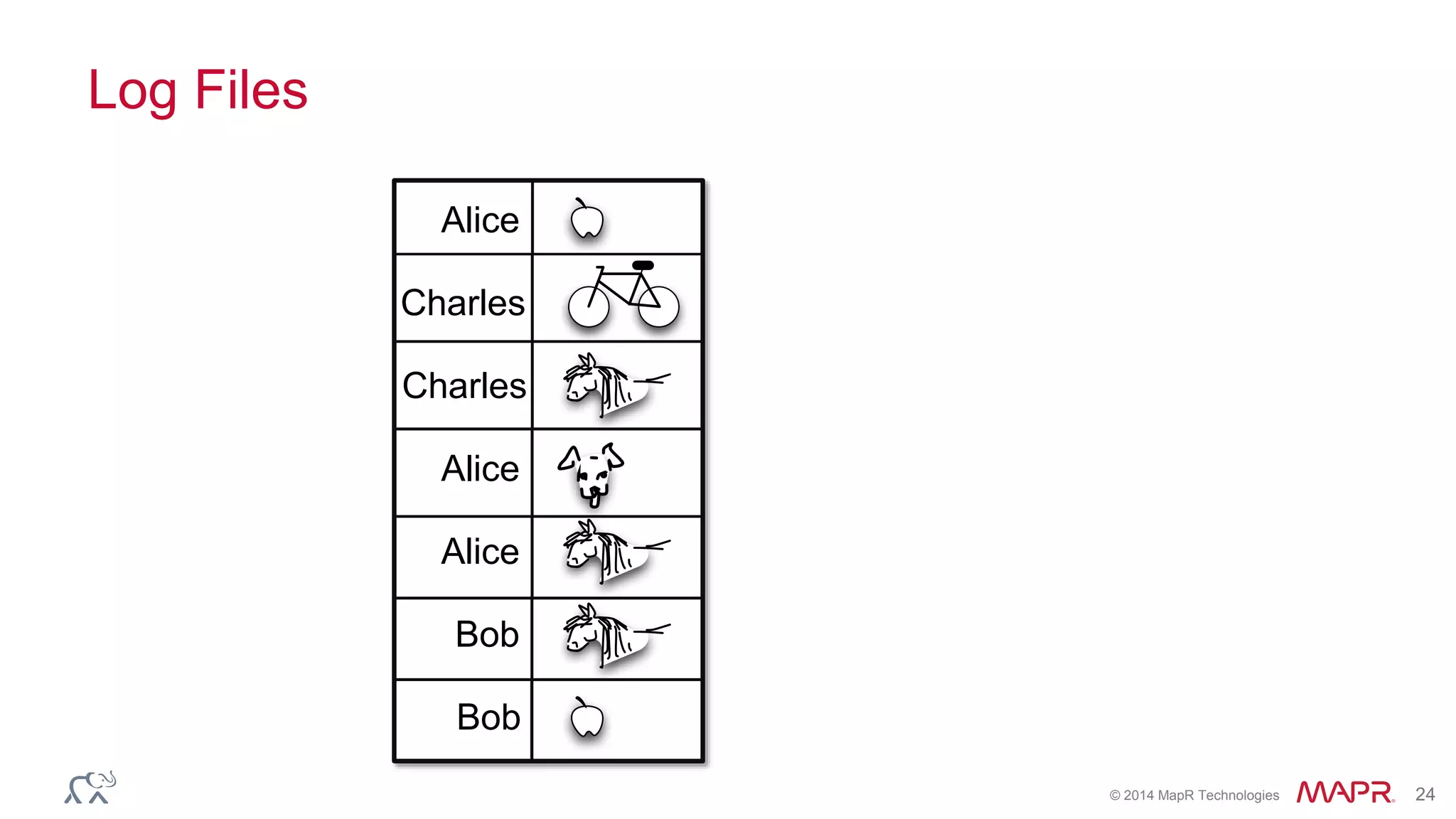

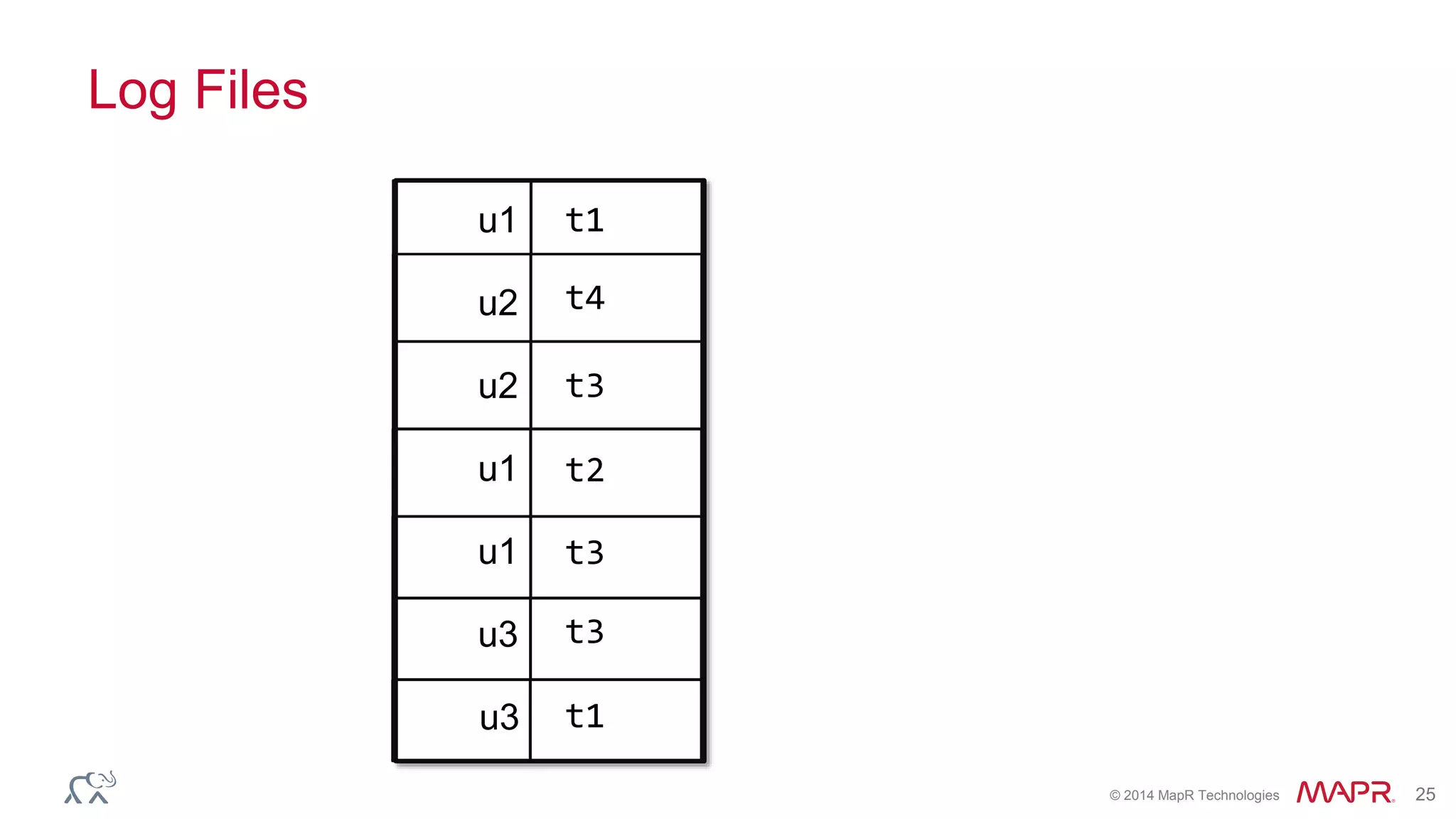

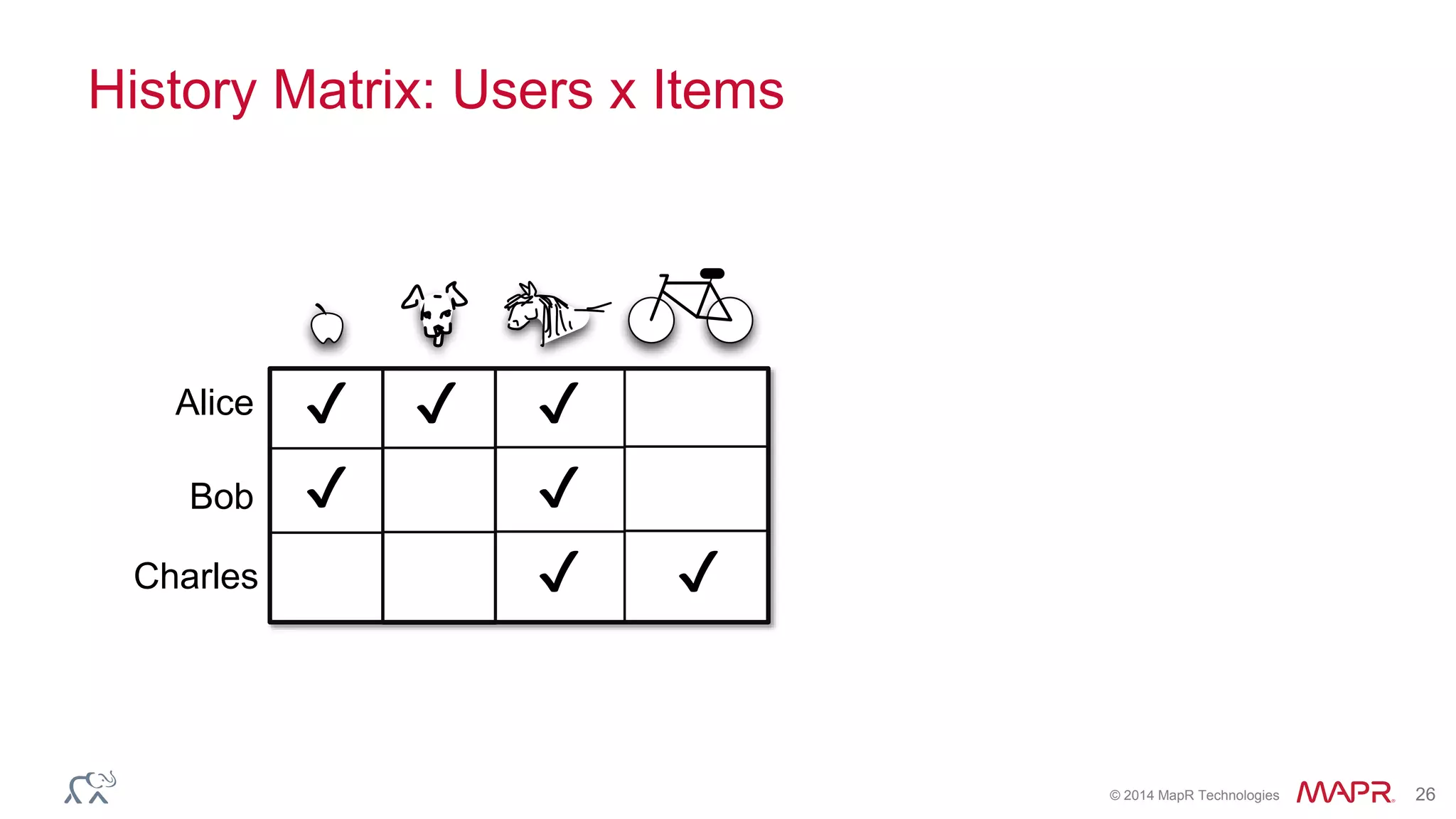

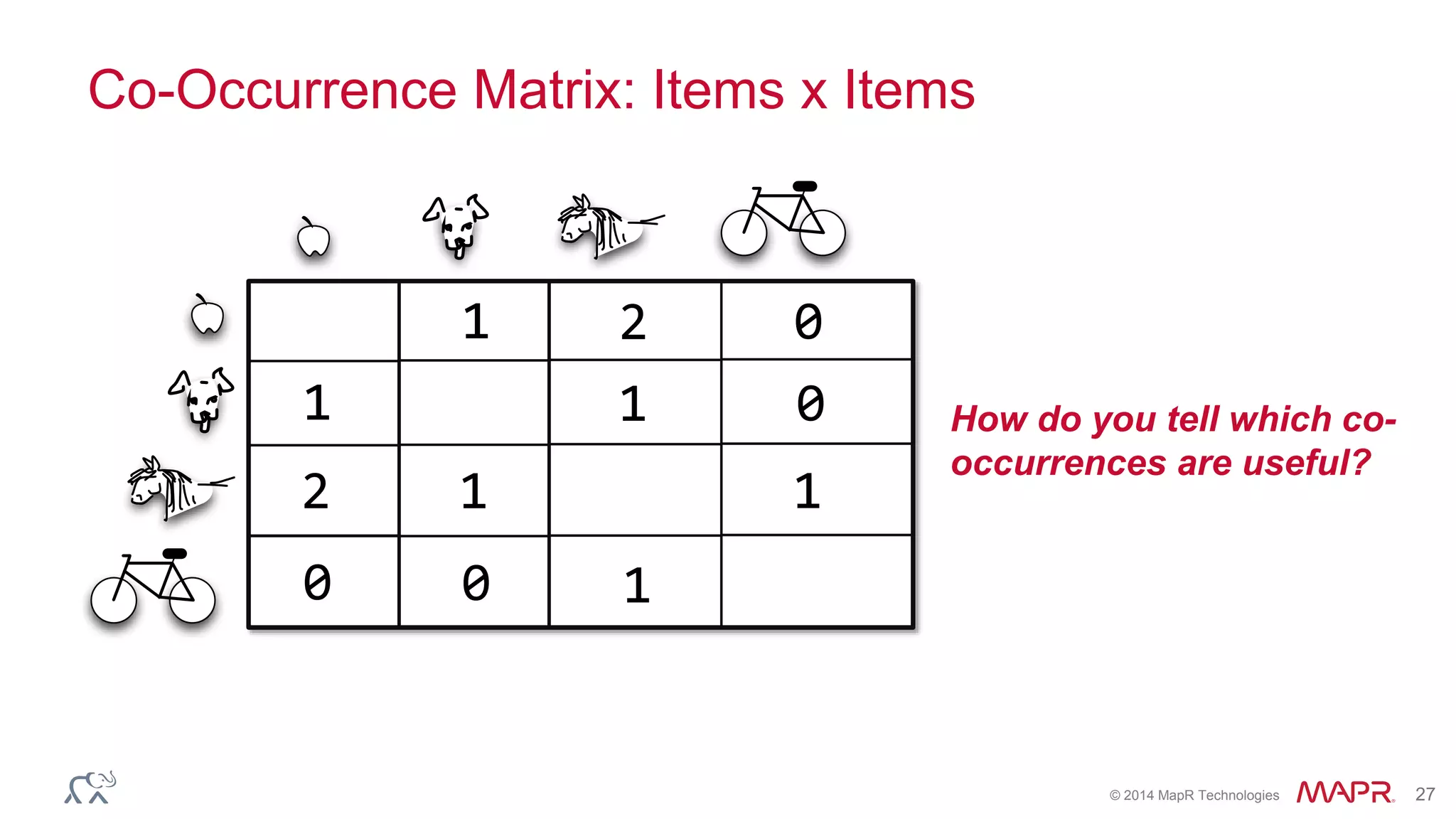

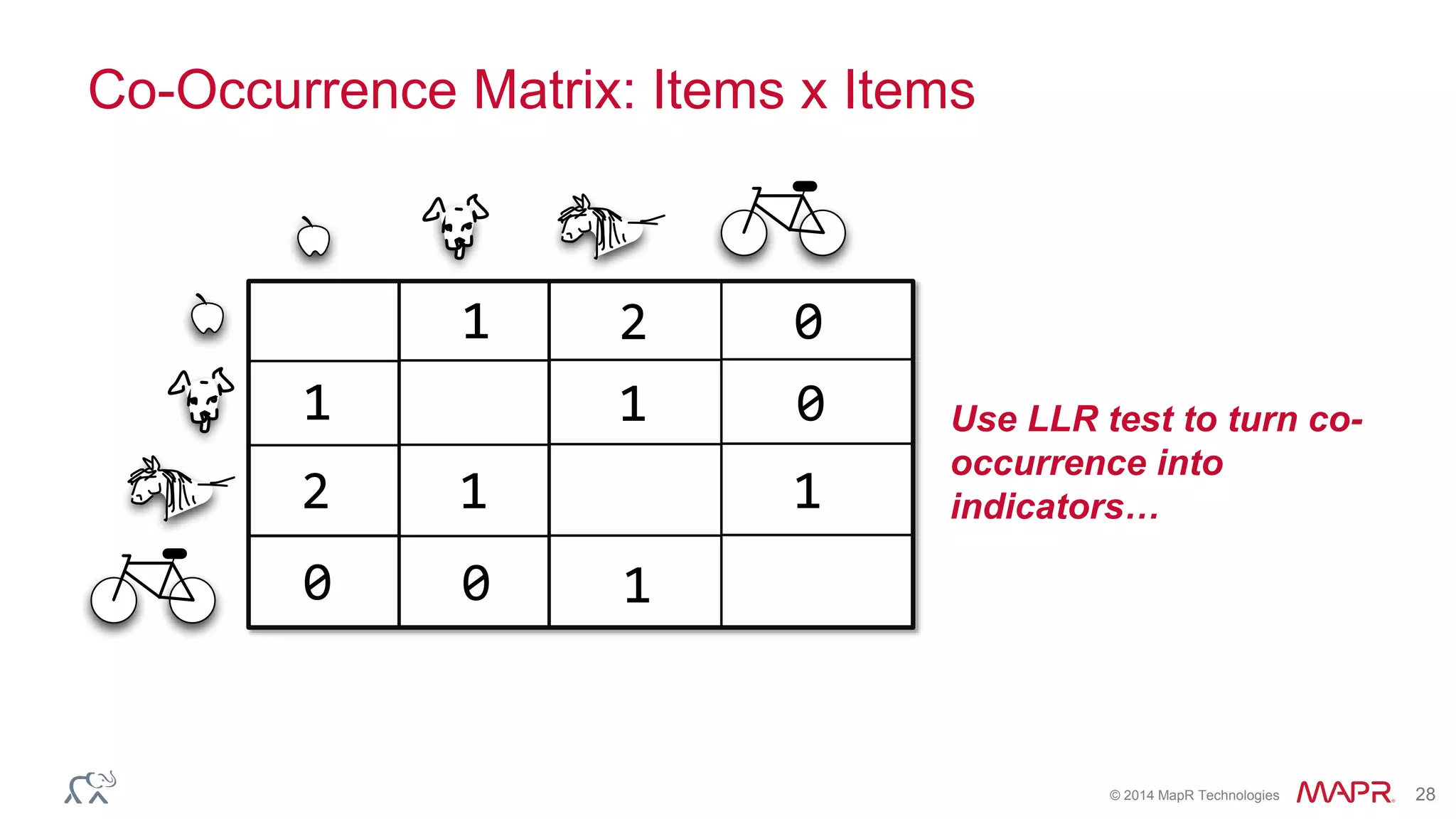

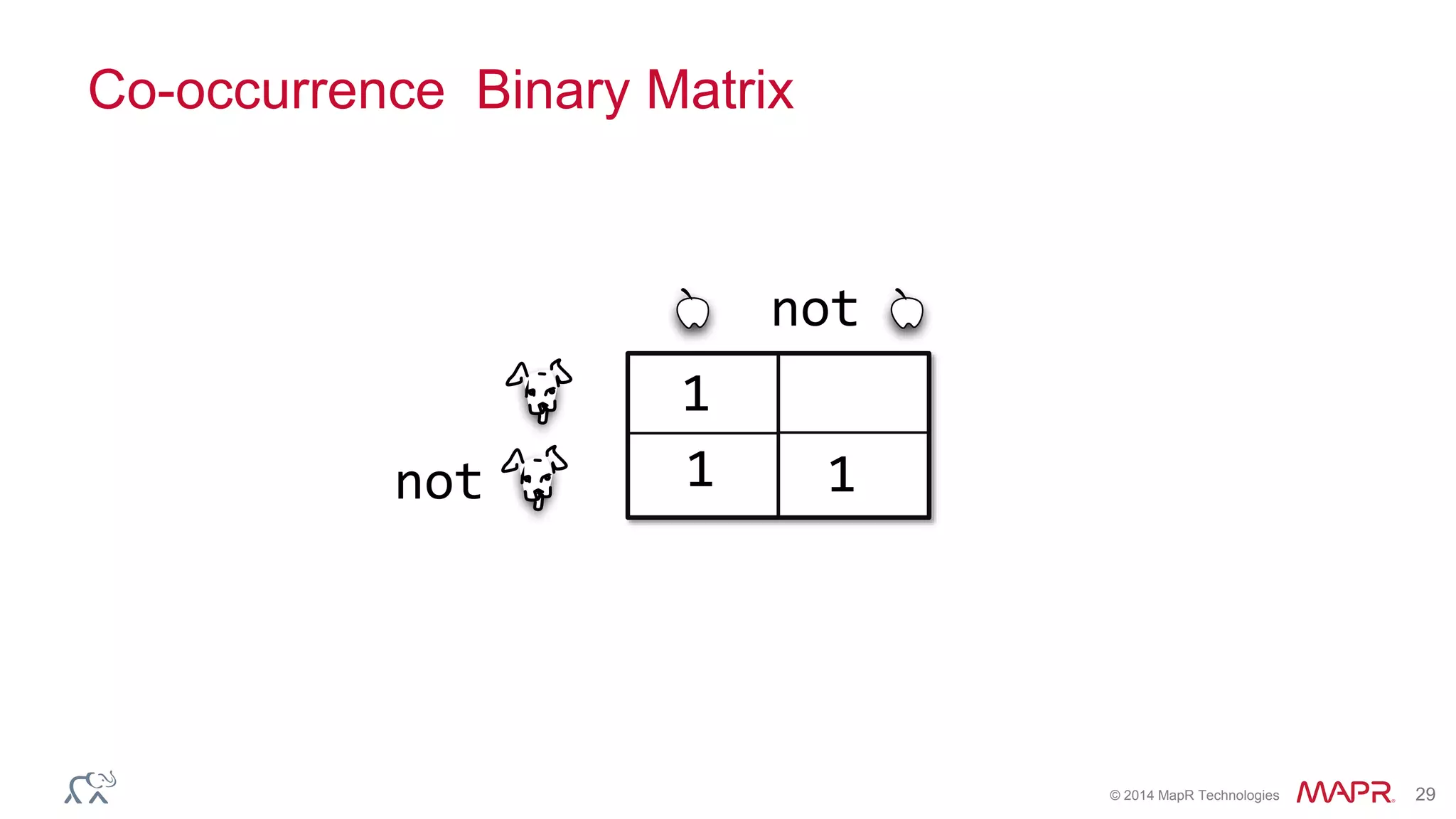

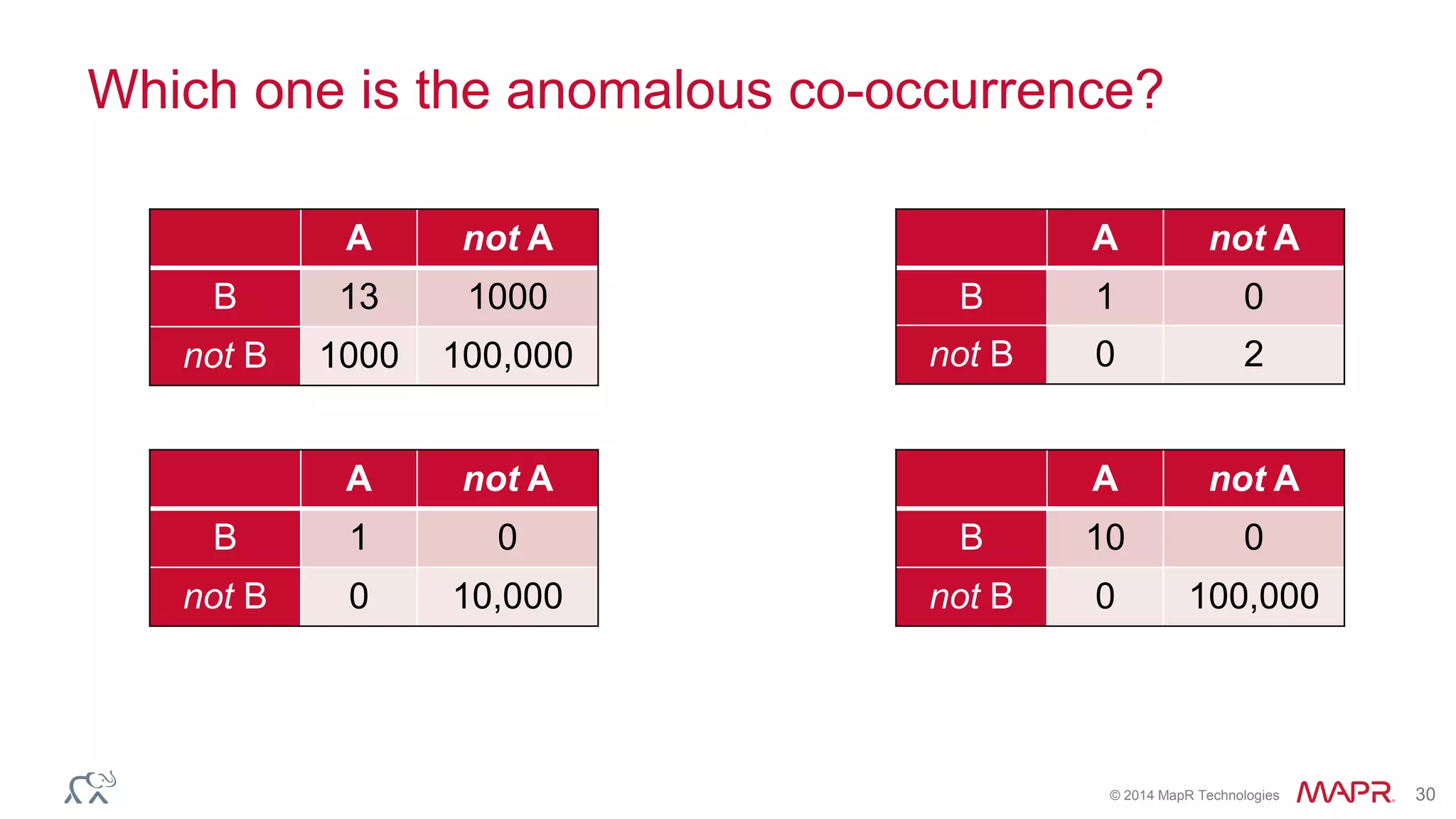

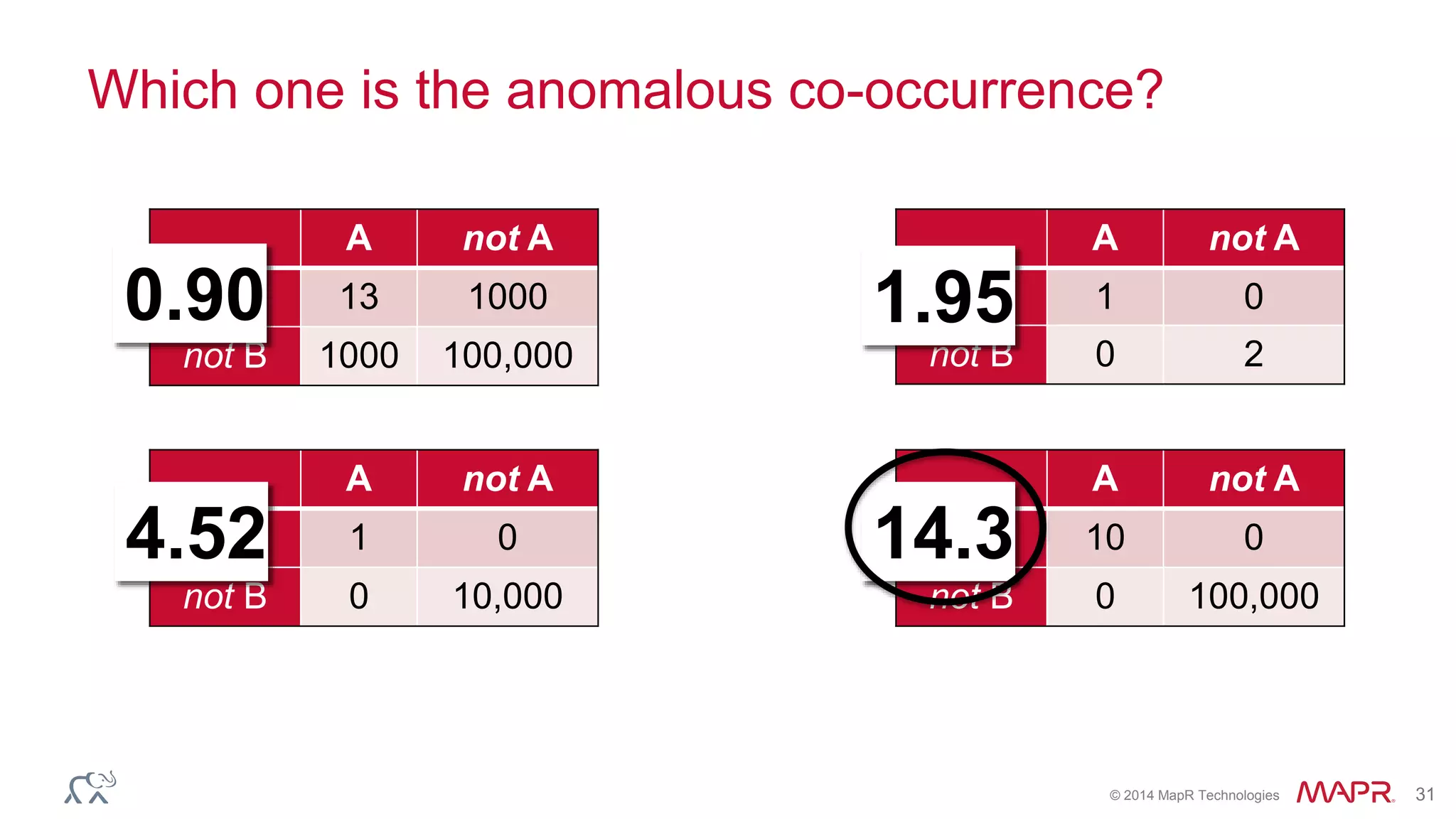

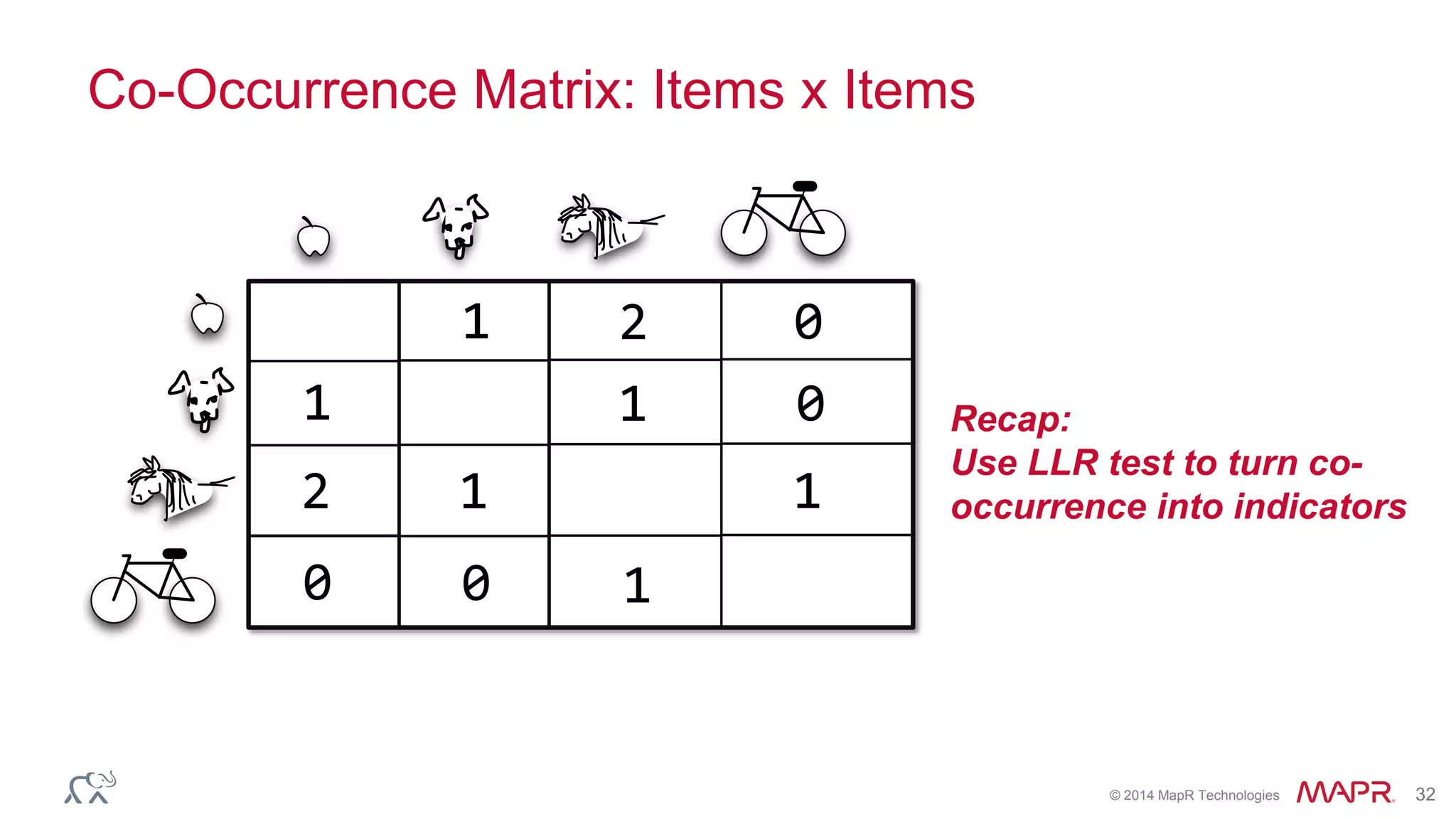

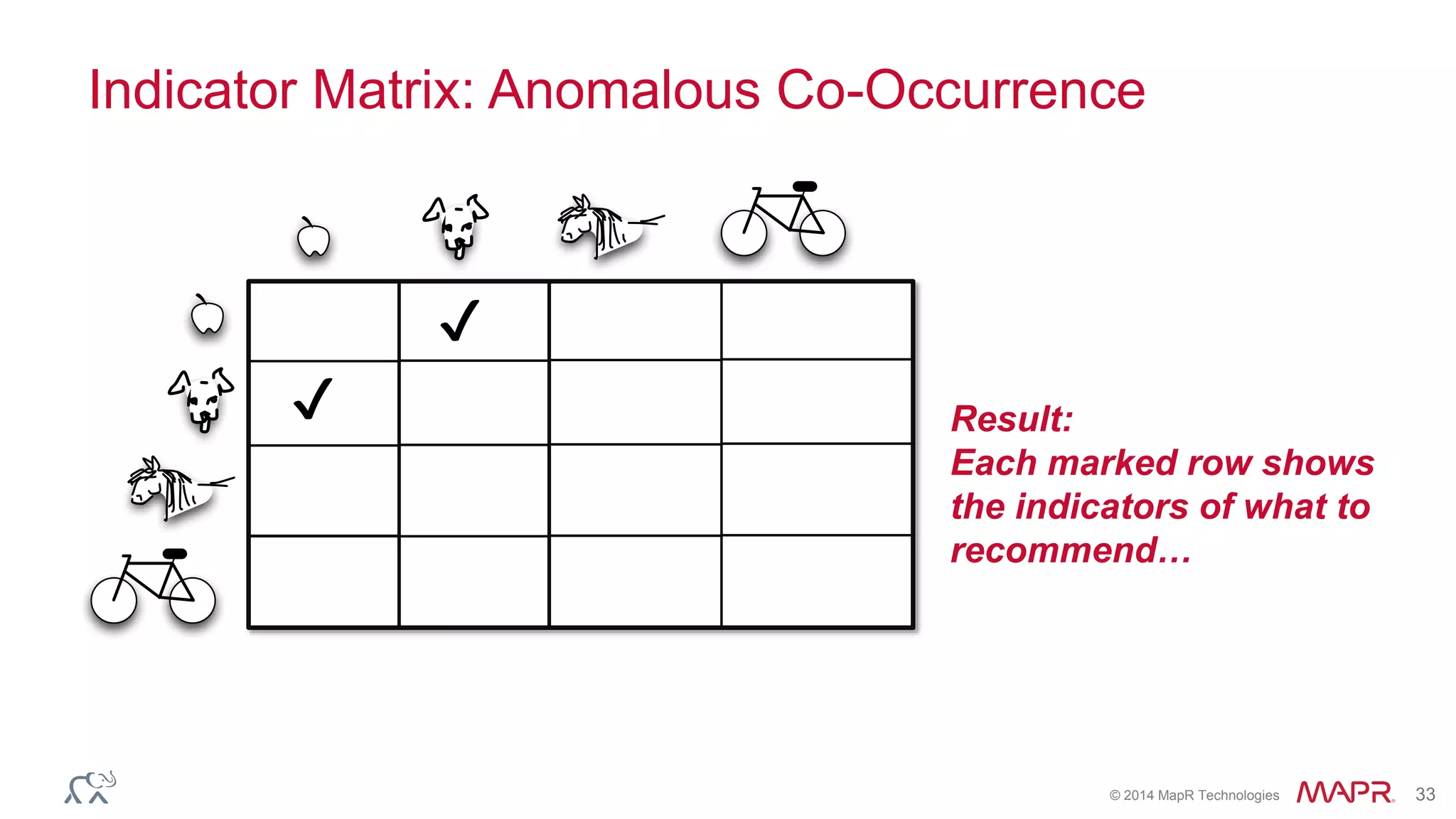

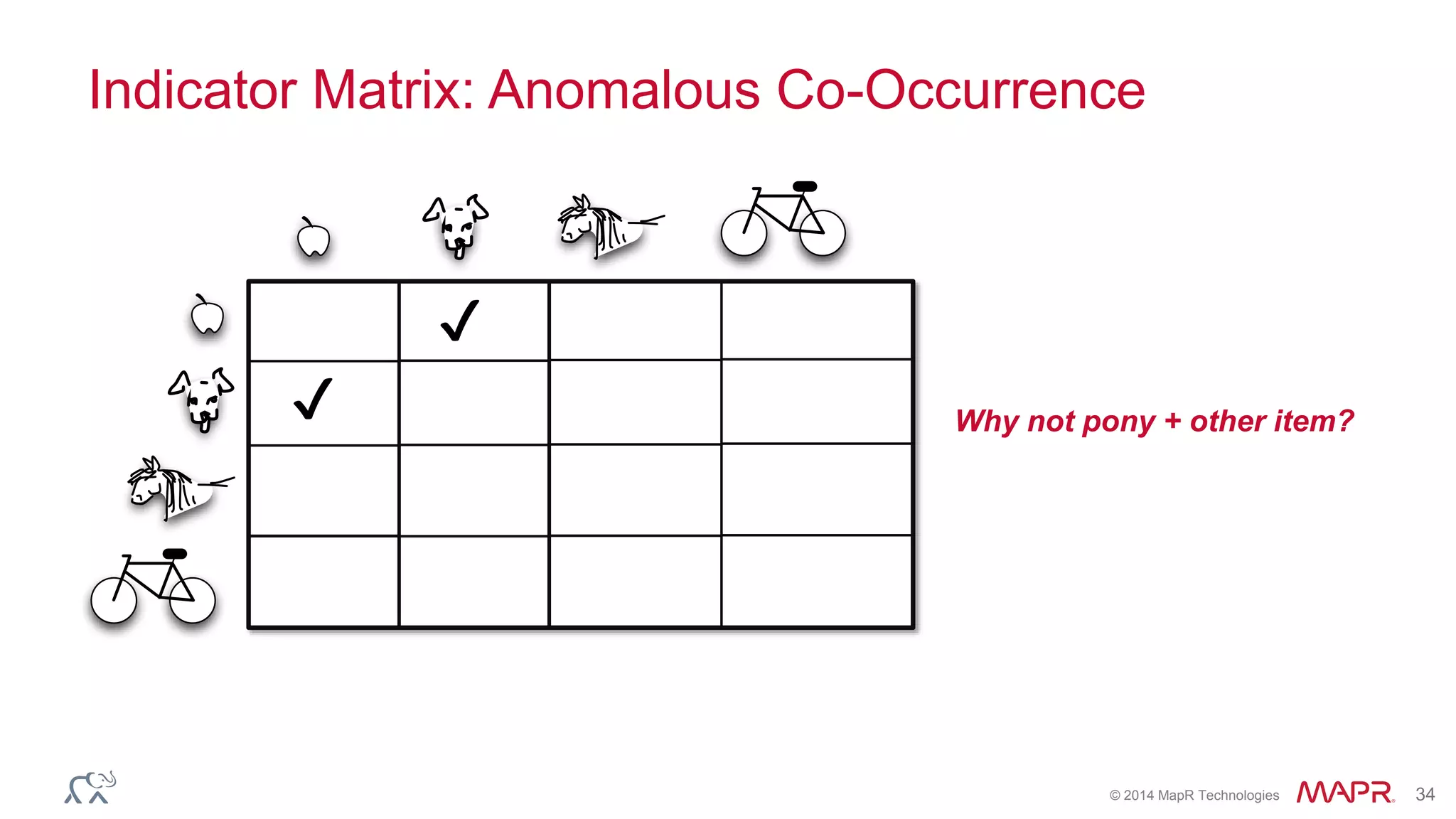

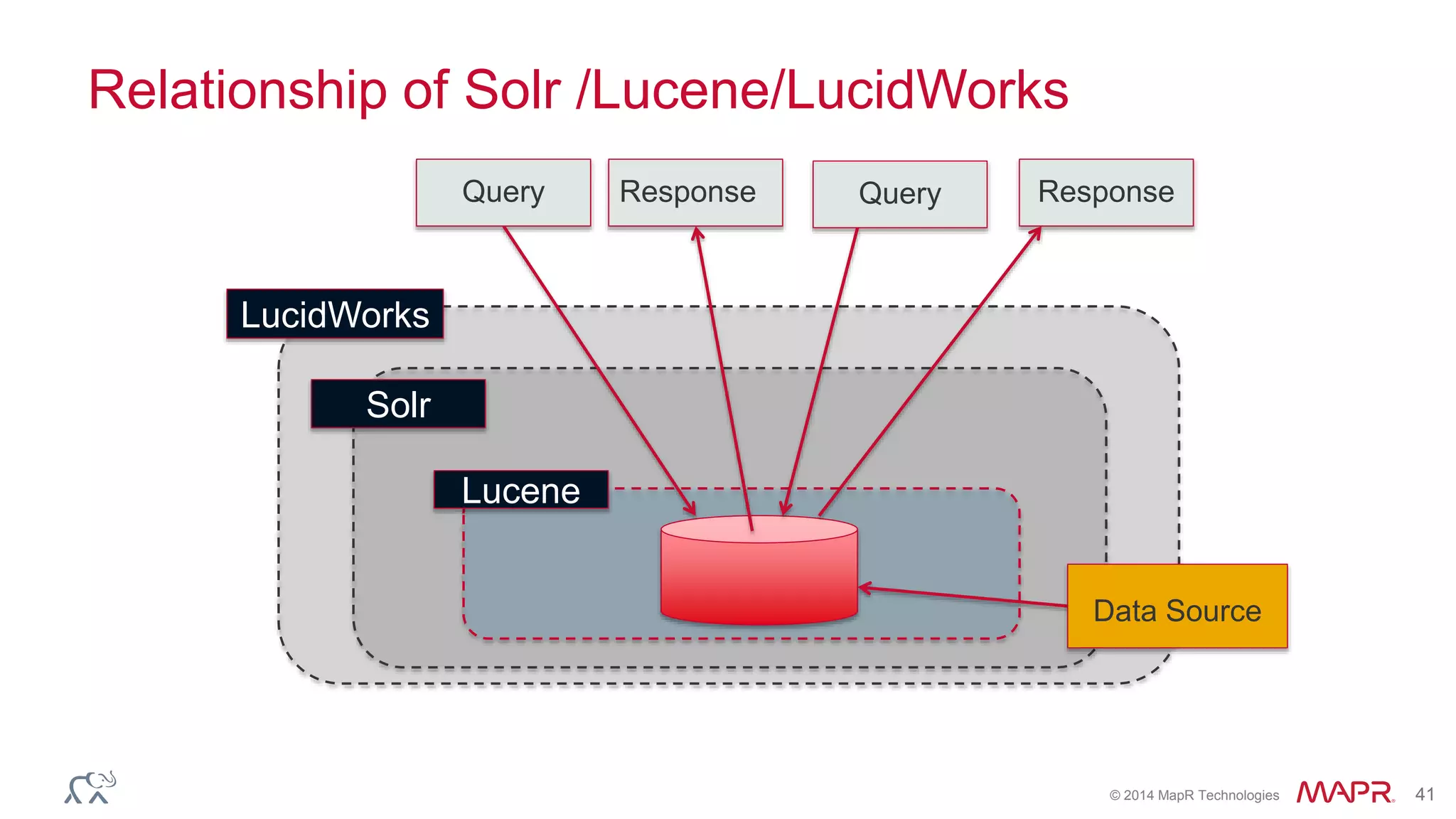

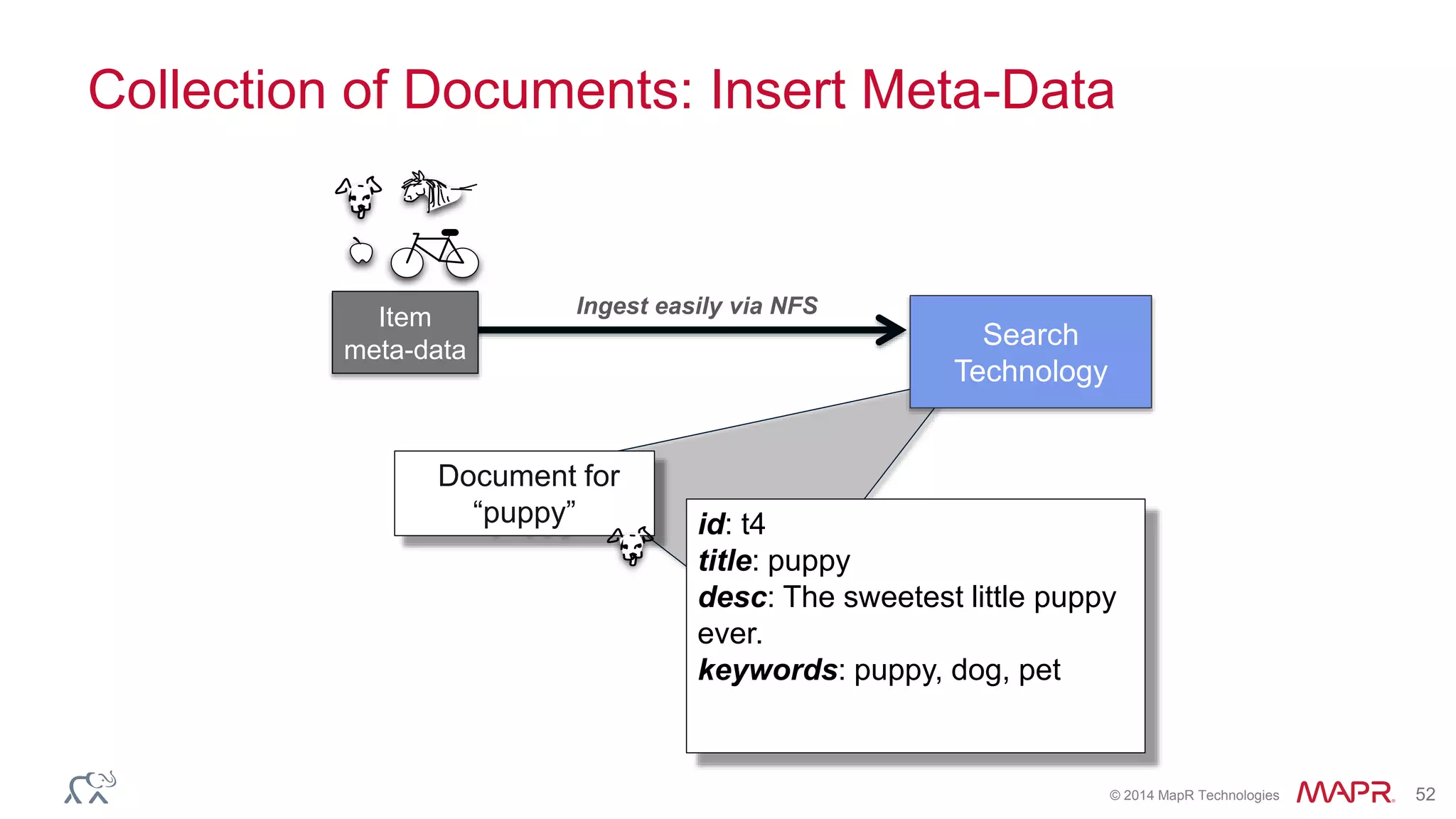

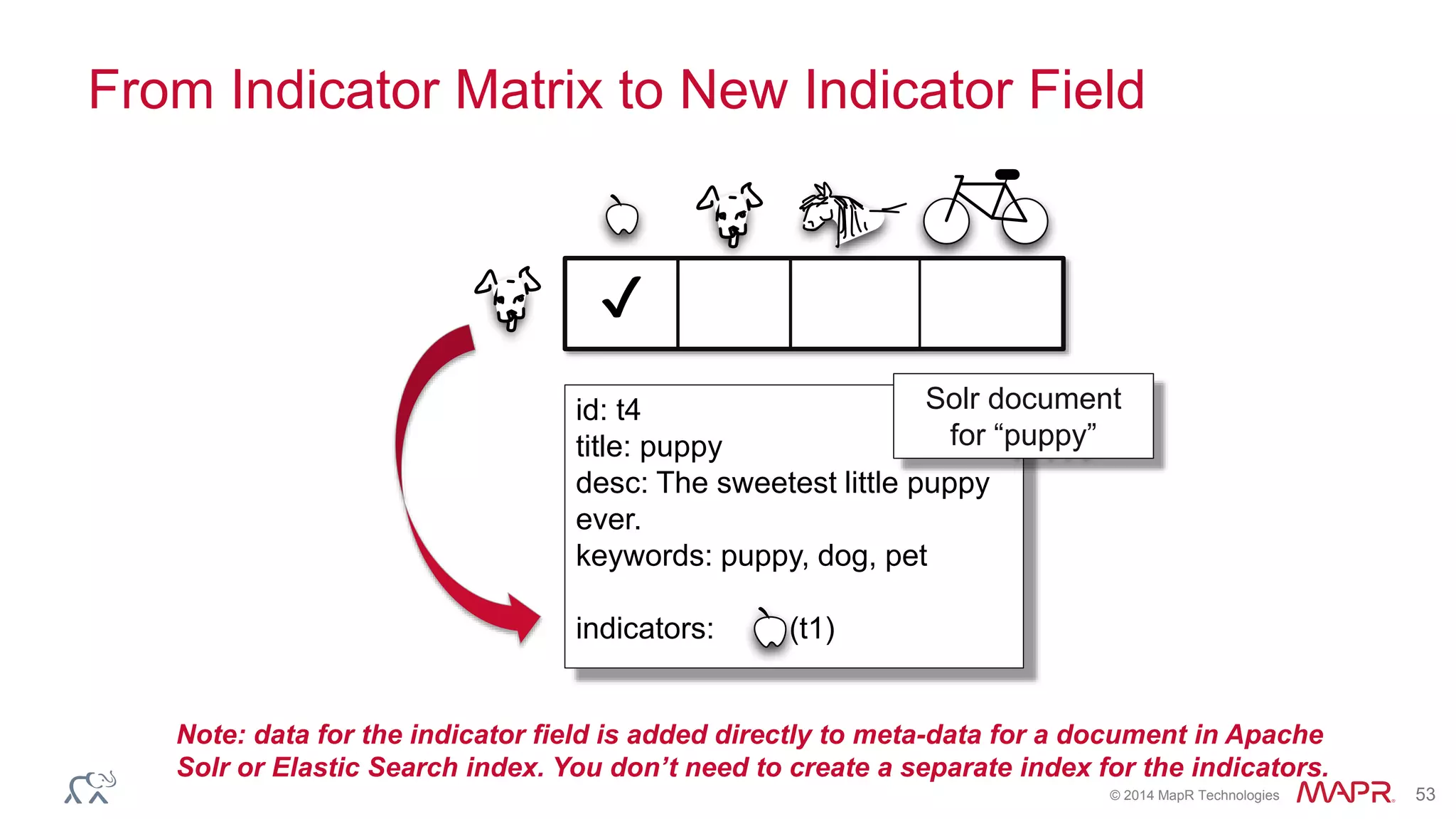

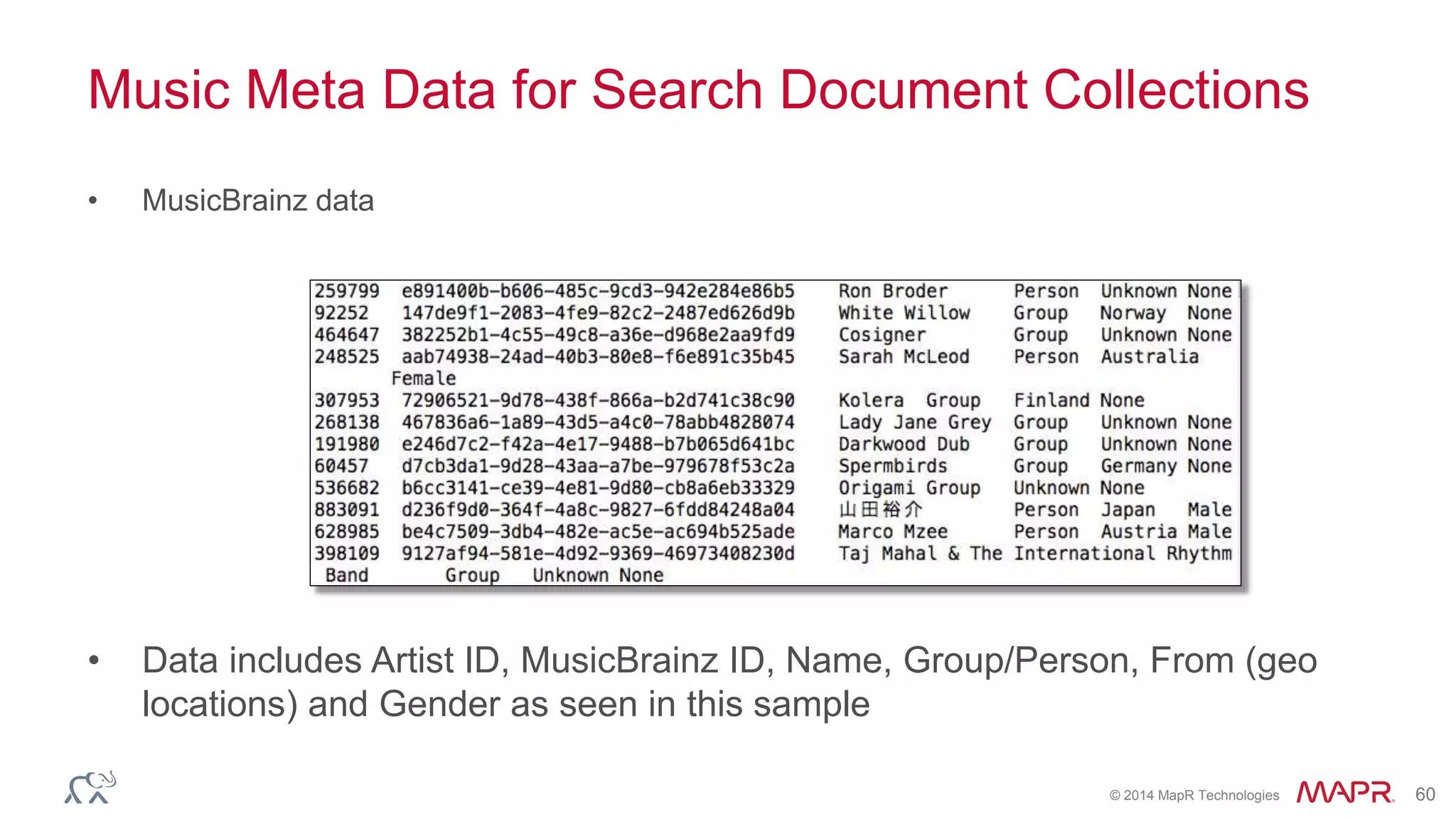

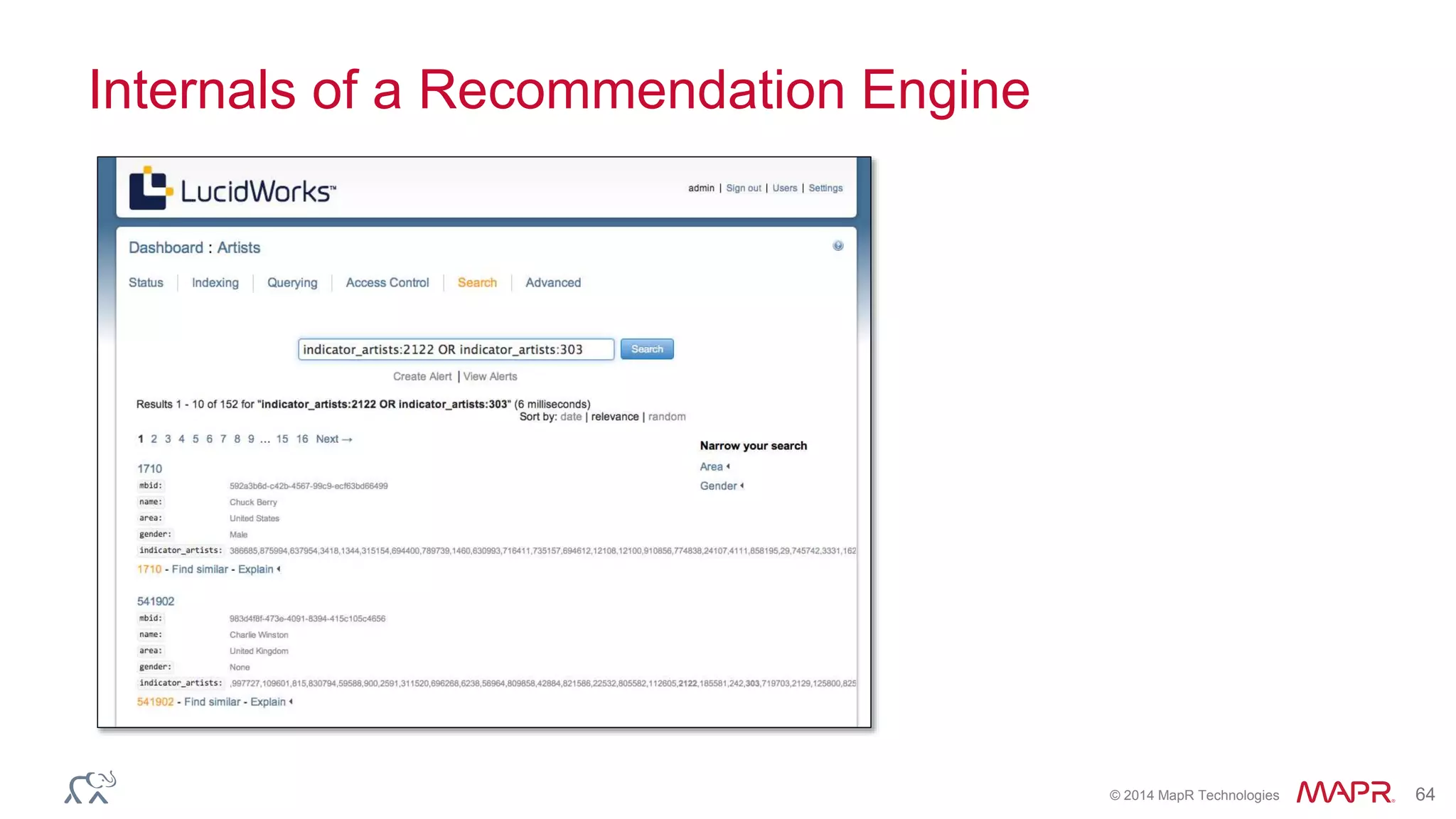

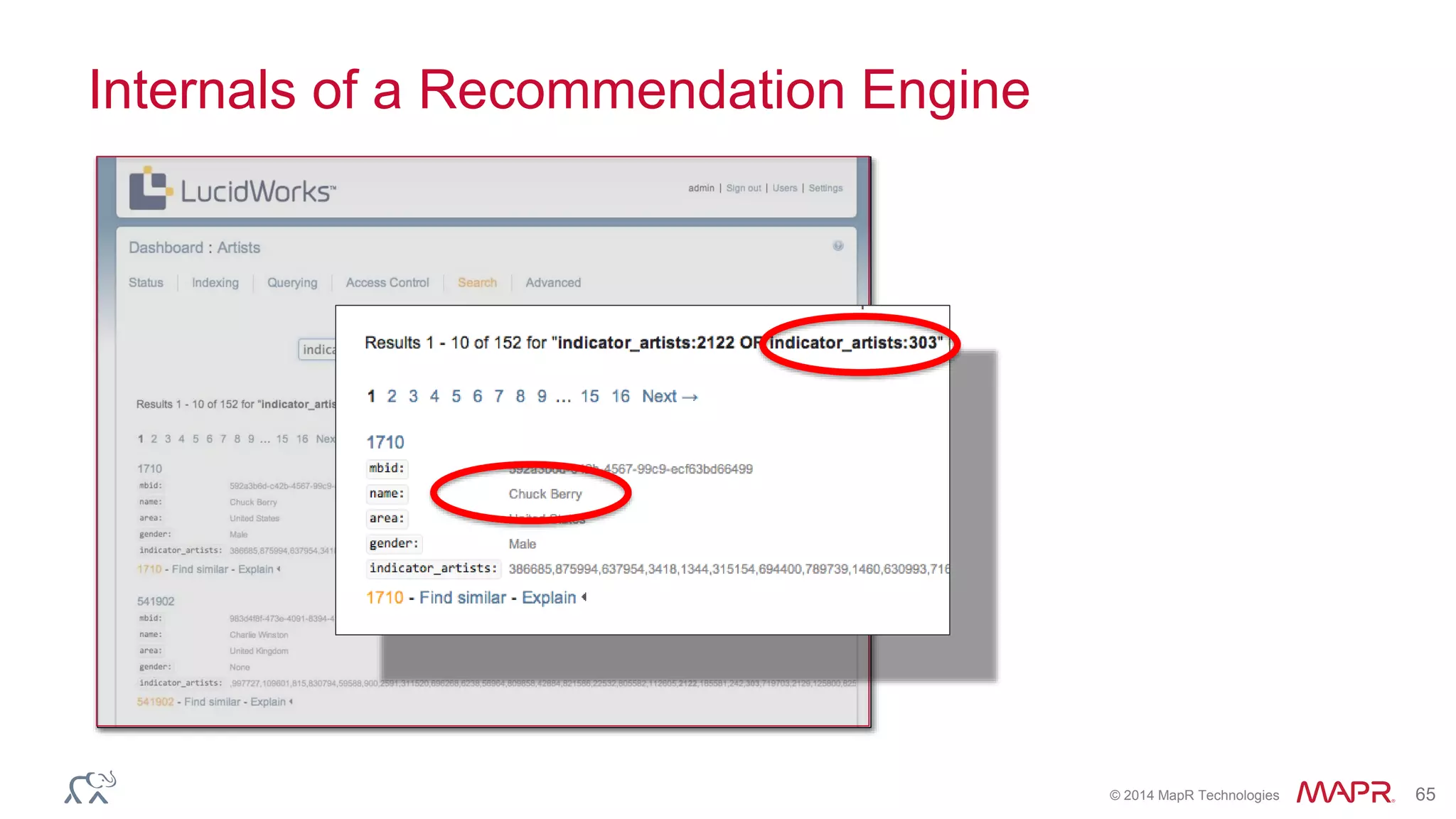

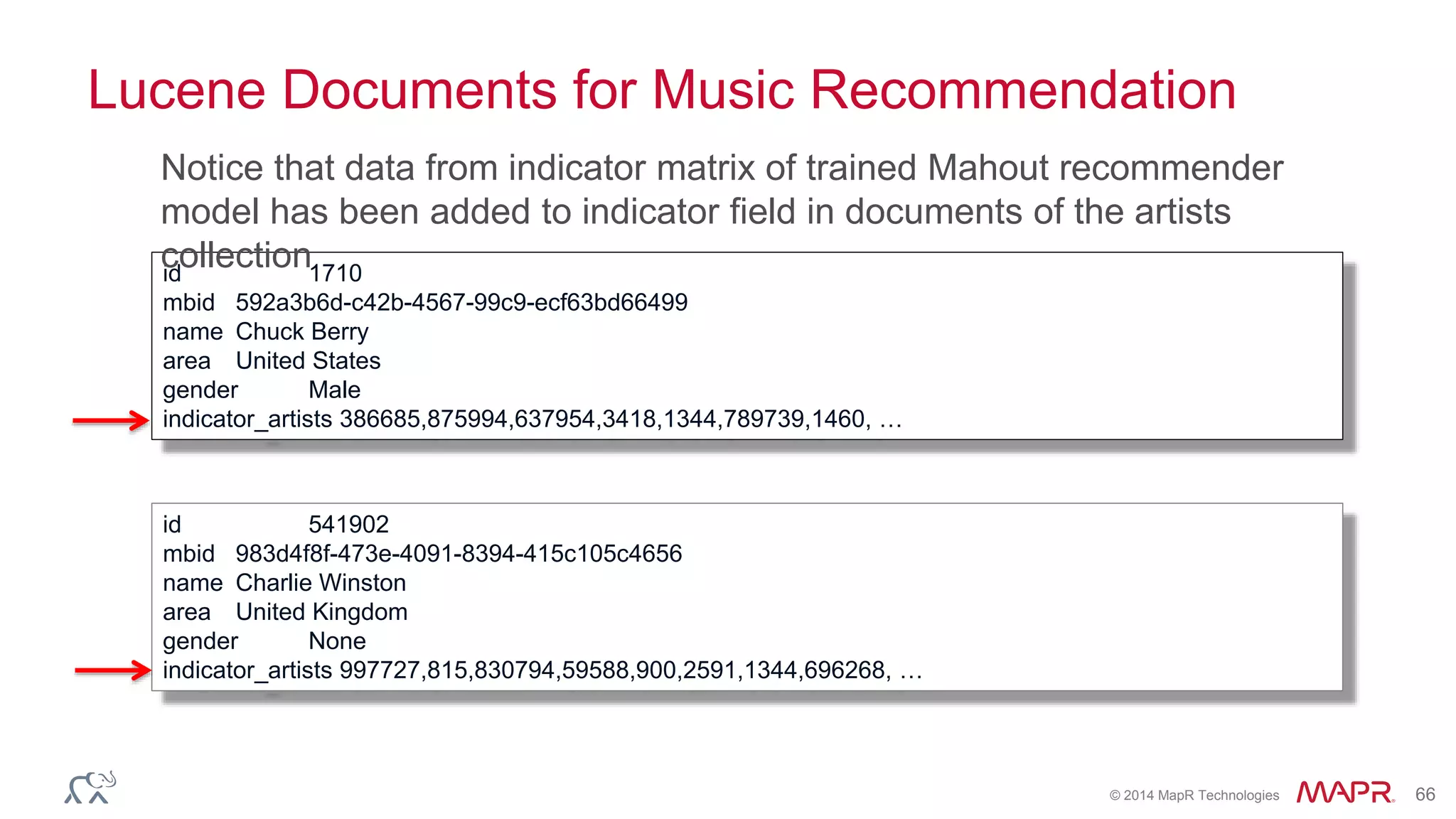

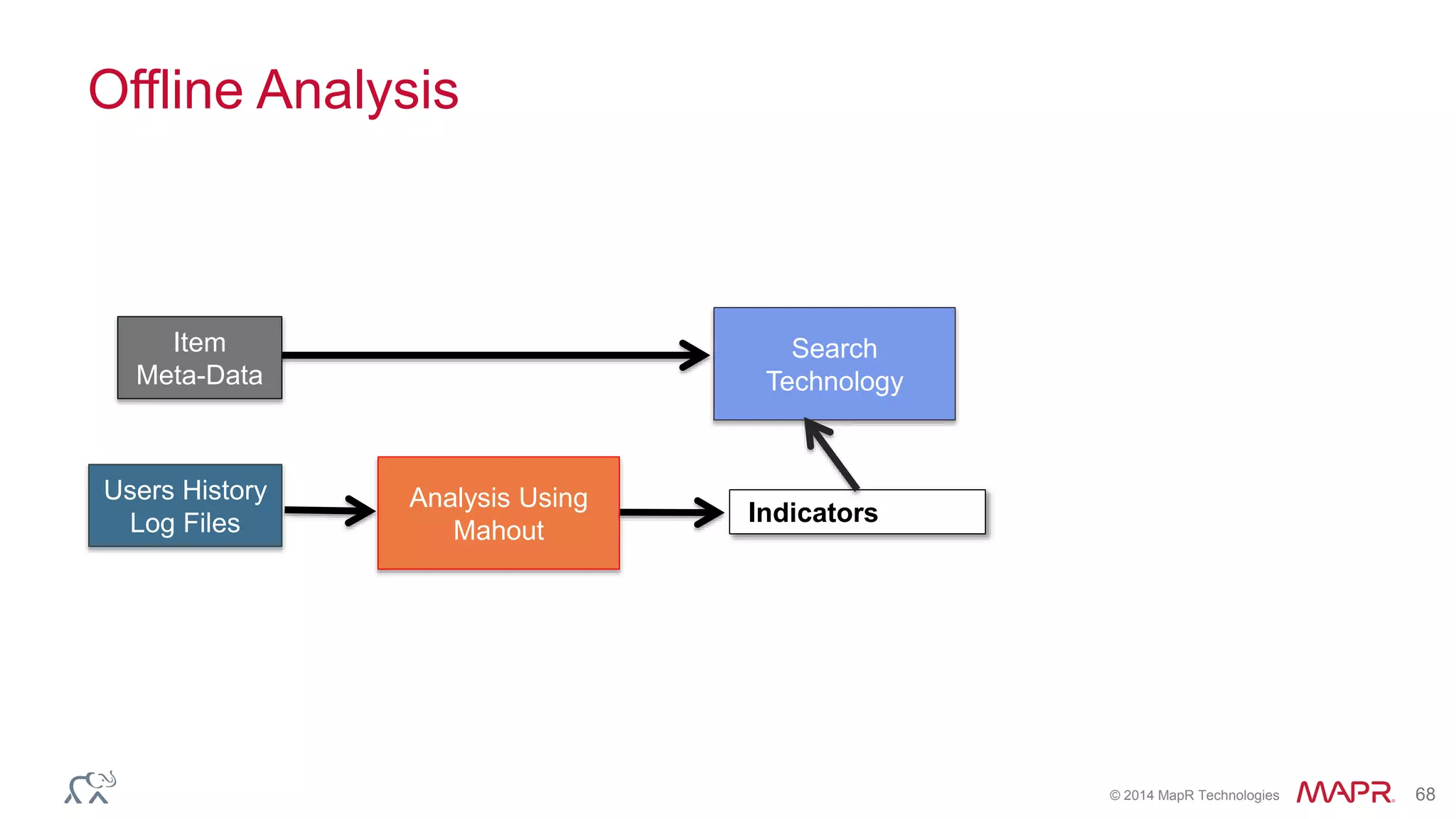

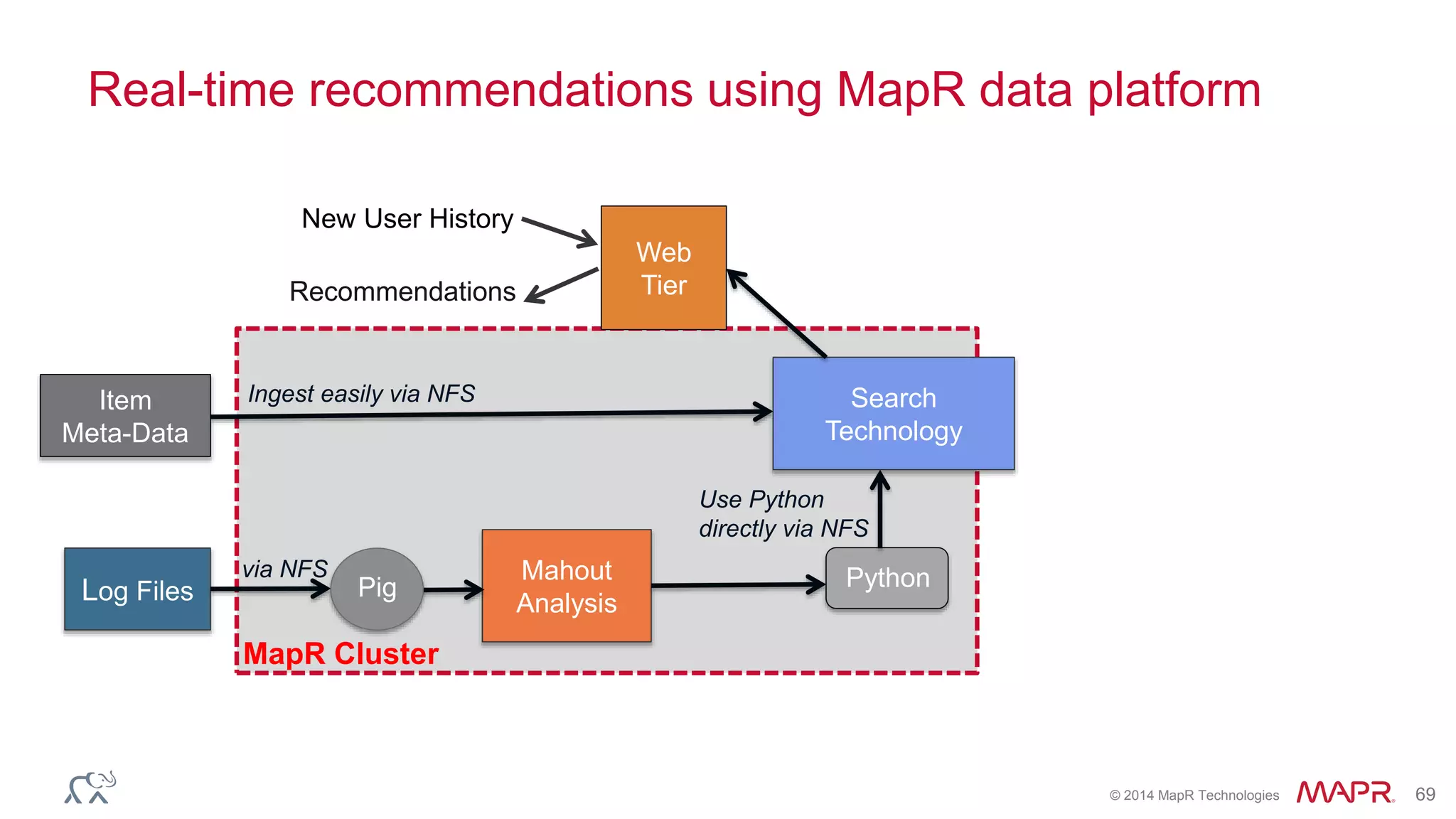

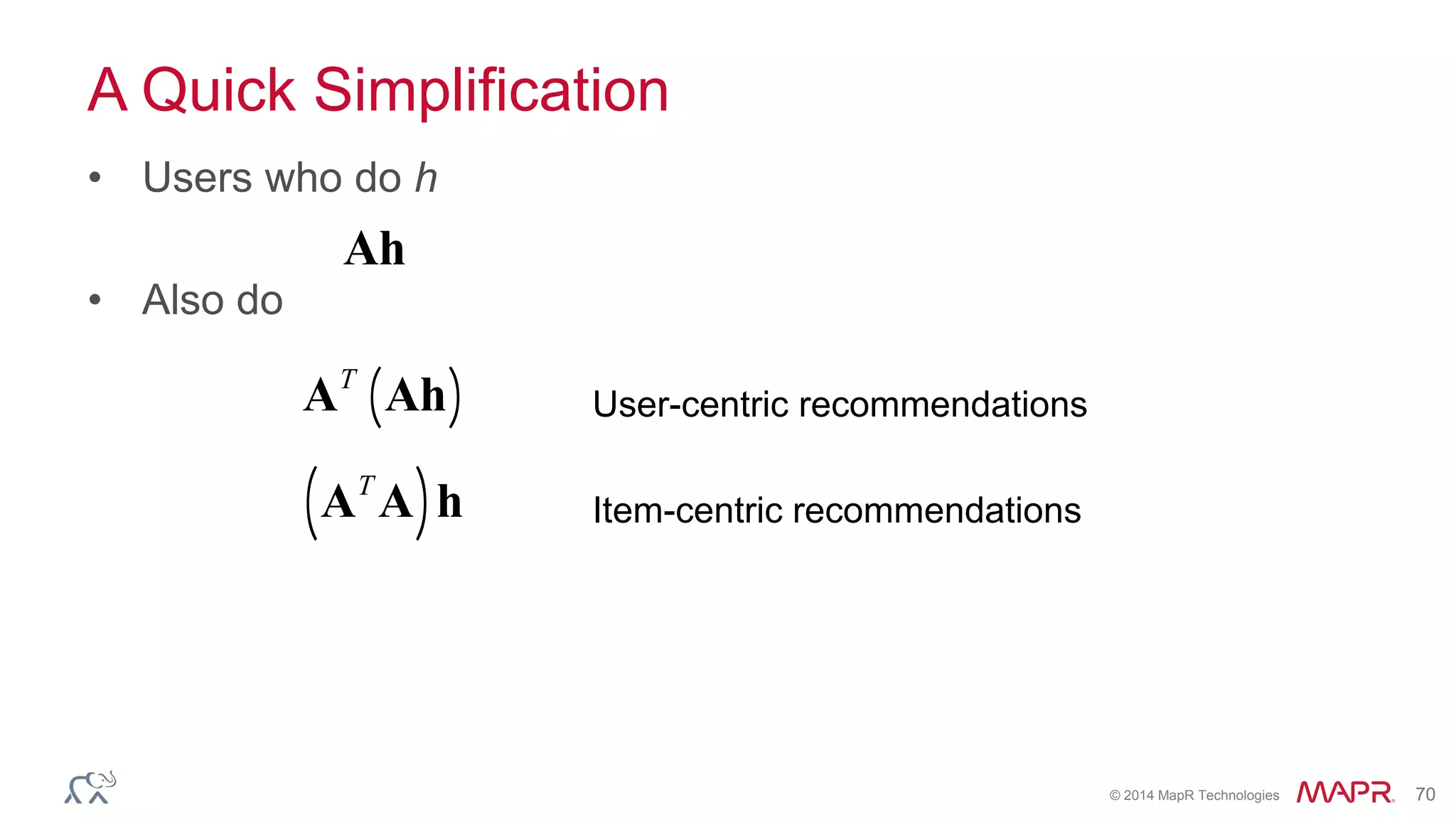

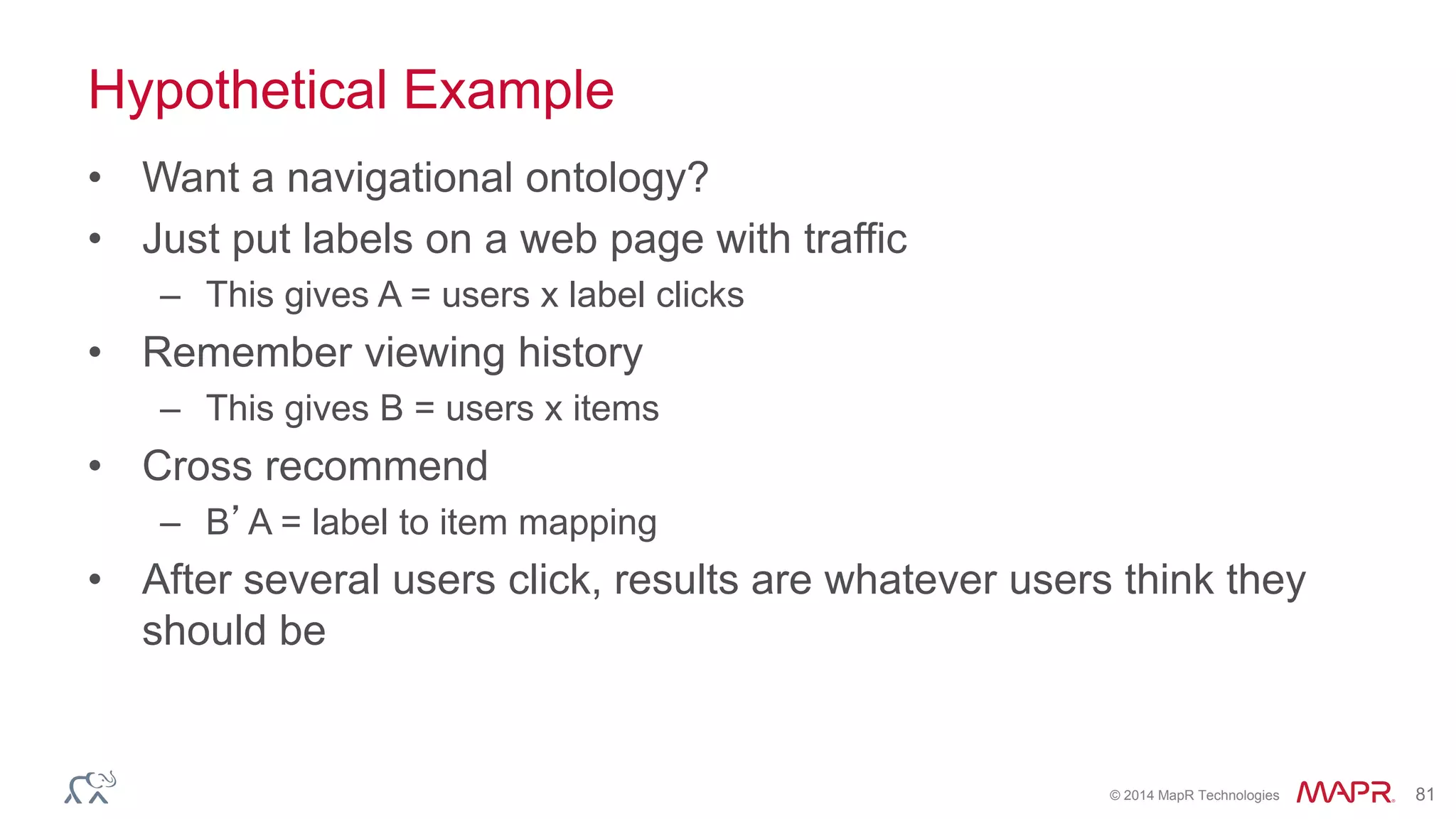

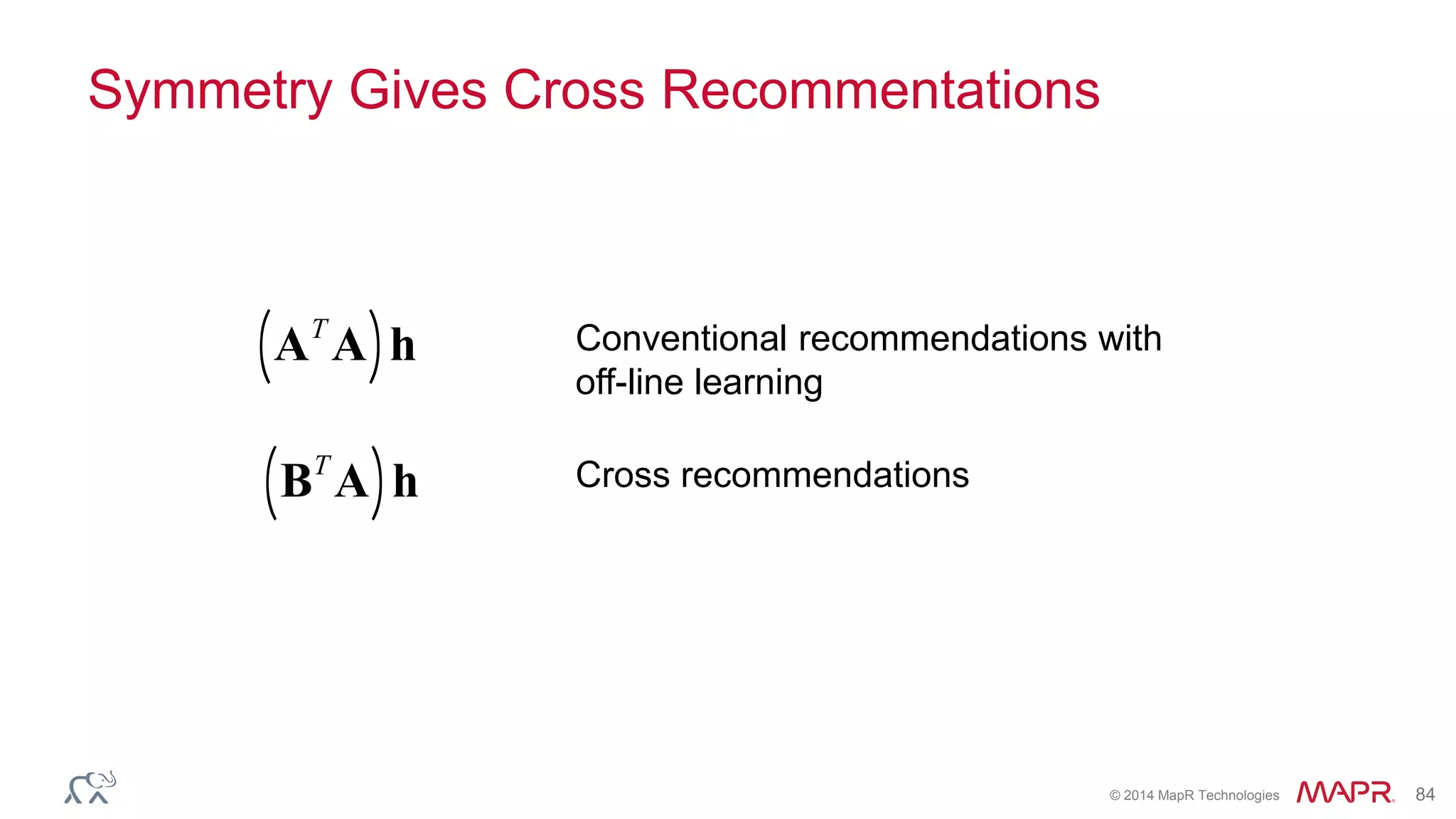

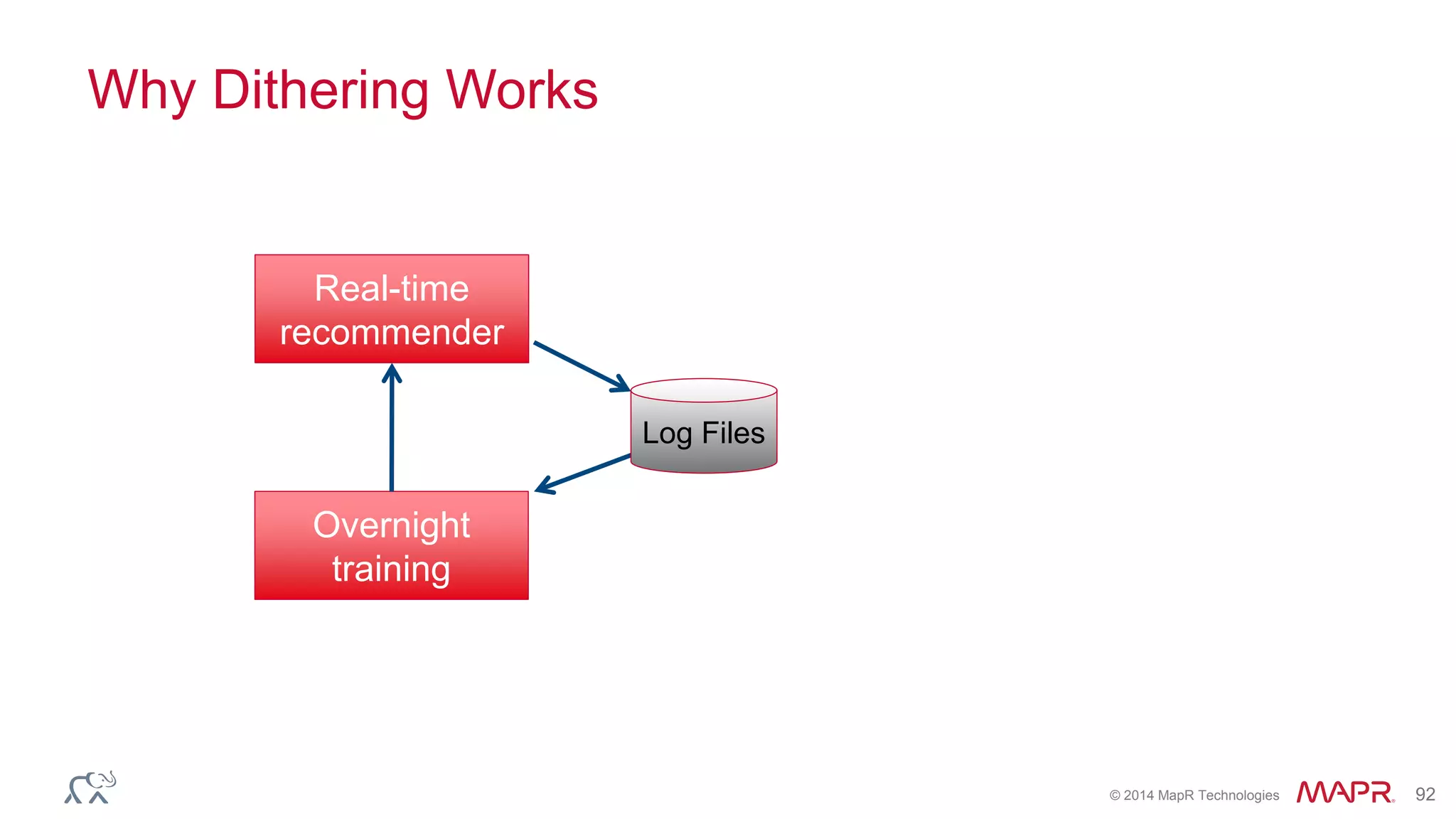

The document discusses using Apache Mahout to build recommendation systems, stressing that accurate suggestions depend on choosing the right data and understanding how users interact with items. It describes how to build recommendation models from user-item interaction data: the interactions are transformed into co-occurrence matrices, and statistical tests are applied to pick out the co-occurrences that are significant. It also covers integrating search technologies such as Apache Solr and Lucene to deliver personalized recommendations in applications such as music and movie suggestions.
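As a rough illustration of the co-occurrence step, here is a minimal sketch in plain Python. It makes several assumptions: the summary does not name the statistical test, so the log-likelihood ratio (LLR) test that Mahout uses for item similarity is assumed here; the function names (`cooccurrence`, `llr`, `significant_pairs`), the input layout (a dict of user to item list), and the `threshold` default are hypothetical and chosen only for this example.

```python
import math
from collections import Counter
from itertools import combinations


def x_log_x(x):
    """x * ln(x), with the convention 0 * ln(0) = 0."""
    return x * math.log(x) if x > 0 else 0.0


def entropy(*counts):
    """Unnormalized entropy of a set of counts."""
    return x_log_x(sum(counts)) - sum(x_log_x(c) for c in counts)


def llr(k11, k12, k21, k22):
    """Log-likelihood ratio score for a 2x2 contingency table.

    k11: users with both items, k12: first item only,
    k21: second item only, k22: neither item.
    """
    row = entropy(k11 + k12, k21 + k22)
    col = entropy(k11 + k21, k12 + k22)
    mat = entropy(k11, k12, k21, k22)
    return max(0.0, 2.0 * (row + col - mat))  # clamp rounding noise


def cooccurrence(histories):
    """Count users per item and users per unordered item pair."""
    item_counts, pair_counts = Counter(), Counter()
    for items in histories.values():
        unique = sorted(set(items))
        item_counts.update(unique)
        pair_counts.update(combinations(unique, 2))
    return item_counts, pair_counts


def significant_pairs(histories, threshold=5.0):
    """Keep item pairs whose LLR score reaches the (arbitrary) threshold."""
    n_users = len(histories)
    item_counts, pair_counts = cooccurrence(histories)
    scores = {}
    for (a, b), k11 in pair_counts.items():
        k12 = item_counts[a] - k11
        k21 = item_counts[b] - k11
        k22 = n_users - k11 - k12 - k21
        score = llr(k11, k12, k21, k22)
        if score >= threshold:
            scores[(a, b)] = score
    return scores


histories = {"u1": ["a", "b"], "u2": ["a", "b"], "u3": ["c"], "u4": ["c"]}
pairs = significant_pairs(histories)
```

In a search-based deployment, the surviving pairs would typically be stored as indicator fields on each item's document, so that a user's recent history can be used as the query. The cutoff value is something to tune per dataset rather than a fixed constant.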

![© 2014 MapR Technologies 94

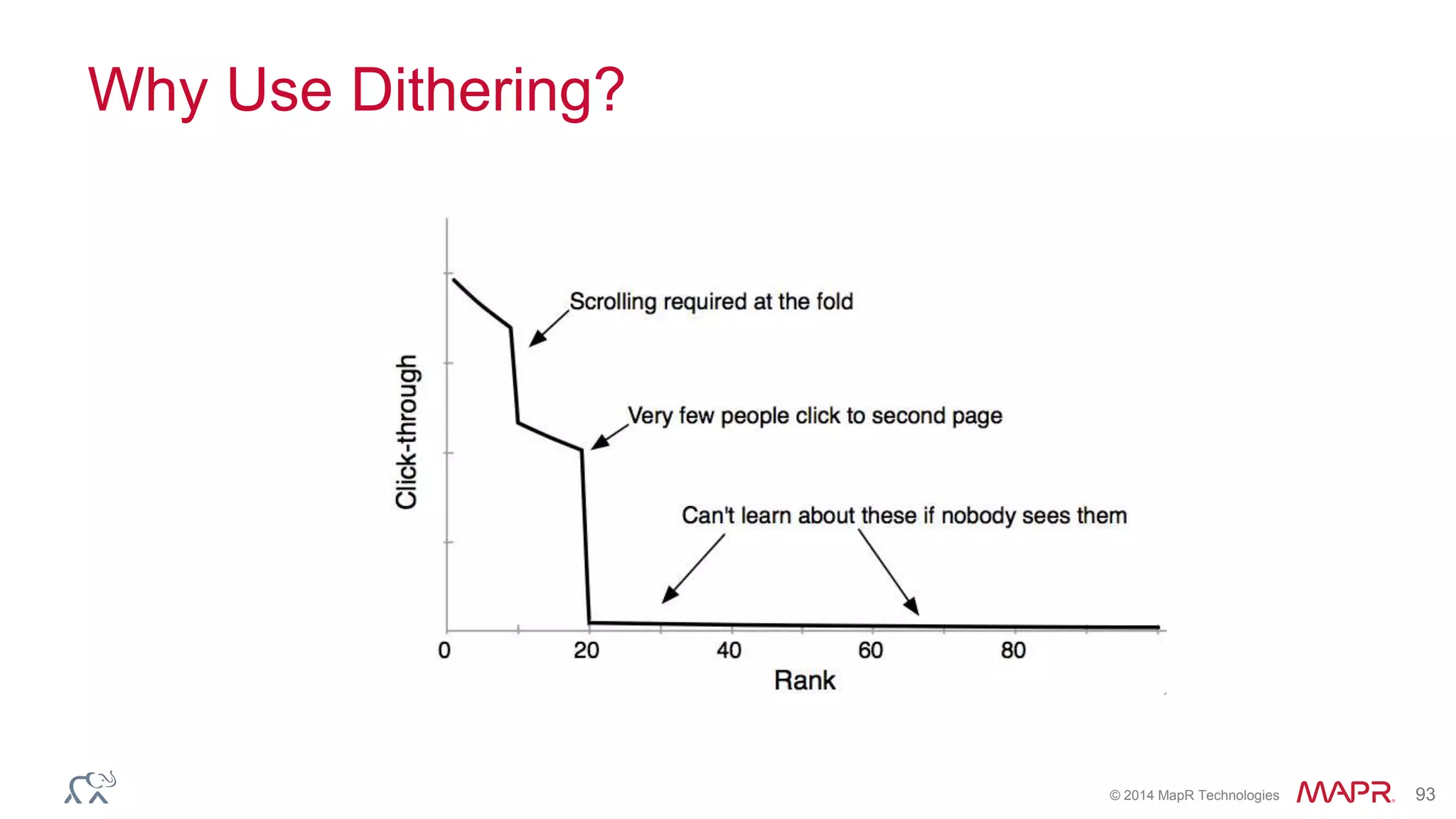

Simple Dithering Algorithm

• Synthetic score from log rank plus Gaussian

• Pick noise scale to provide desired level of mixing

• Typically

• Also… use floor(t/T) as seed

s = logr + N(0,loge)

Dr

r

µe

e Î 1.5,3[ ]](https://image.slidesharecdn.com/recommendation-workshop-bigdataeverywhere-140623140350-phpapp02/75/Practical-Machine-Learning-Innovations-in-Recommendation-Workshop-83-2048.jpg)
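The dithering scheme on this slide can be sketched in a few lines of Python. This is a hedged illustration, not the presenter's implementation: it assumes natural logarithms, treats log ε as the standard deviation of the Gaussian (the slide does not say whether it is a standard deviation or a variance), and uses hypothetical names (`dither`, `epsilon`, `period`) that do not come from the slides.

```python
import math
import random


def dither(ranked_items, epsilon=2.0, t=0.0, period=600.0):
    """Reorder a ranked list using a noisy synthetic score.

    Each item at 1-based rank r gets the score
        s = log(r) + N(0, log(epsilon))
    and the list is re-sorted by s (smaller is better). The random
    generator is seeded with floor(t / period), so every call within
    the same time window returns the same ordering.
    """
    if epsilon <= 1.0:
        # log(epsilon) <= 0 means there is no noise to add.
        return list(ranked_items)

    rng = random.Random(math.floor(t / period))
    sigma = math.log(epsilon)  # assumption: used as the standard deviation

    scored = [
        (math.log(rank) + rng.gauss(0.0, sigma), item)
        for rank, item in enumerate(ranked_items, start=1)
    ]
    scored.sort(key=lambda pair: pair[0])
    return [item for _, item in scored]


items = [f"item-{i}" for i in range(1, 21)]
page_a = dither(items, epsilon=2.0, t=10.0, period=600.0)
page_b = dither(items, epsilon=2.0, t=599.0, period=600.0)
```

Because the noise is added to the log of the rank, the top few positions are disturbed only slightly while deeper results get shuffled more, which lets items from further down the list occasionally surface. Seeding from the time window keeps the page stable when a user reloads within `period` seconds.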