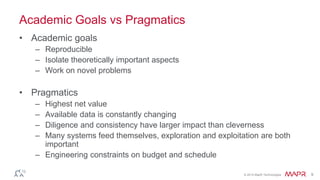

Ted Dunning presents on which algorithms really matter when deploying machine learning systems. The most important advances are often not the algorithms themselves but how they are implemented: whether they are deployable, robust, and transparent, and whether they fit the skill sets of the people who run them. Clever prototypes don't matter if they can't be standardized. Sketches that produce many weighted centroids can enable online clustering at scale. Recursive search and recommendations, where one implements the other, can also be important.

![© 2014 MapR Technologies 16

Simple Dithering Algorithm

• Generate synthetic score from log rank plus Gaussian: $s = \log r + N(0, \epsilon)$

• Pick noise scale to provide desired level of mixing: $\Delta r \propto r \exp \epsilon$

• Typically $\epsilon \in [0.4, 0.8]$

• Oh… use floor(t/T) as seed](https://image.slidesharecdn.com/th-220p-210a-dunning-140617135935-phpapp02/85/How-to-Determine-which-Algorithms-Really-Matter-15-320.jpg)
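The dithering slide can be sketched in a few lines of Python. This is a minimal illustration rather than the presenter's implementation: the function name `dither`, the parameter names, and the default `period` of 600 seconds are assumptions, and the noise scale ε is treated here as the standard deviation of the Gaussian, which the slide does not specify.

```python
import math
import random


def dither(ranked_items, epsilon=0.5, t=0.0, period=600.0):
    """Reorder a ranked list by sorting on log(rank) + Gaussian noise.

    ranked_items: items in their original order, best first.
    epsilon: noise scale (assumed to be a standard deviation).
    t, period: current time and window length T; floor(t / T) is the seed,
               so the ordering is stable within one window.
    """
    rng = random.Random(math.floor(t / period))
    scored = []
    for rank, item in enumerate(ranked_items, start=1):
        # Synthetic score: s = log r + N(0, epsilon)
        score = math.log(rank) + rng.gauss(0.0, epsilon)
        # Keep the rank as a tie-breaker so items themselves are never compared.
        scored.append((score, rank, item))
    scored.sort()
    return [item for _, _, item in scored]


if __name__ == "__main__":
    results = ["doc%d" % i for i in range(1, 21)]
    print(dither(results, epsilon=0.5, t=1234.0))
```

Because the noise is added to the log of the rank, items near the top move only a few positions while items deep in the list can move much further, which matches the slide's proportionality between rank shift and rank. Seeding from the time window keeps the order fixed for a user who reloads the page within the same window.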

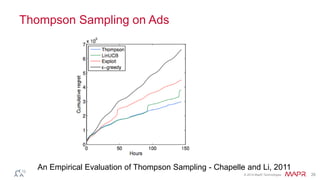

![Thompson Sampling

• Select each shell according to the probability that it is the best

• Probability that it is the best can be computed using posterior: $P(i \text{ is best}) = \int I\left[ E[r_i \mid \theta] = \max_j E[r_j \mid \theta] \right] P(\theta \mid D) \; d\theta$

• But I promised a simple answer](https://image.slidesharecdn.com/th-220p-210a-dunning-140617135935-phpapp02/85/How-to-Determine-which-Algorithms-Really-Matter-22-320.jpg)
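The integral on this slide can be approximated by Monte Carlo: draw θ from the posterior many times and count how often each option has the highest expected reward. The sketch below assumes Bernoulli rewards with independent Beta(1, 1) priors, so that the expected reward of option j given θ is simply θ_j. That model, and the name `prob_best`, are assumptions chosen for illustration and do not come from the slides.

```python
import random


def prob_best(successes, failures, draws=10000, seed=0):
    """Estimate P(i is best) for each option by sampling the posterior.

    successes[j], failures[j]: observed counts for option j.
    Assumes Bernoulli rewards and a Beta(1, 1) prior on each rate.
    """
    rng = random.Random(seed)
    wins = [0] * len(successes)
    for _ in range(draws):
        # One joint posterior sample theta ~ P(theta | D).
        theta = [rng.betavariate(s + 1, f + 1)
                 for s, f in zip(successes, failures)]
        # Indicator term: which option has the largest expected reward?
        wins[theta.index(max(theta))] += 1
    return [w / draws for w in wins]


if __name__ == "__main__":
    print(prob_best(successes=[12, 20, 3], failures=[30, 25, 4]))
```

The returned fractions estimate the selection probabilities the slide describes. Computing them explicitly like this is the expensive route.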

![Thompson Sampling – Take 2

• Sample θ: $\theta \sim P(\theta \mid D)$

• Pick i to maximize reward: $i = \arg\max_j E[r_j \mid \theta]$

• Record result from using i](https://image.slidesharecdn.com/th-220p-210a-dunning-140617135935-phpapp02/85/How-to-Determine-which-Algorithms-Really-Matter-23-320.jpg)
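The three steps on this slide translate almost directly into a loop. The following is a minimal sketch under assumptions not stated in the slides: rewards are Bernoulli, each option has a Beta(1, 1) prior, and the environment is simulated from a hypothetical list `true_rates`. The function names `thompson_step` and `run` are illustrative.

```python
import random


def thompson_step(successes, failures, rng):
    """Sample theta from the posterior and return the option that maximizes it."""
    # Step 1: theta ~ P(theta | D), one Beta draw per option.
    theta = [rng.betavariate(s + 1, f + 1)
             for s, f in zip(successes, failures)]
    # Step 2: i = argmax_j E[r_j | theta]; for Bernoulli rewards this is theta_j.
    return theta.index(max(theta))


def run(true_rates, rounds=2000, seed=0):
    """Simulate Thompson sampling against hypothetical Bernoulli options."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(rounds):
        i = thompson_step(successes, failures, rng)
        # Step 3: record the result from using option i.
        if rng.random() < true_rates[i]:
            successes[i] += 1
        else:
            failures[i] += 1
    return successes, failures


if __name__ == "__main__":
    s, f = run([0.1, 0.5, 0.9])
    print("pulls per option:", [a + b for a, b in zip(s, f)])
```

A single posterior draw per round replaces the integral: an option is chosen exactly as often as it comes out on top under the sampled θ, so exploration fades as the posterior sharpens.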