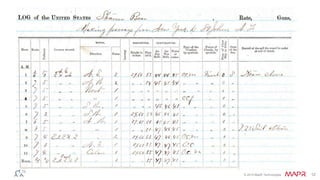

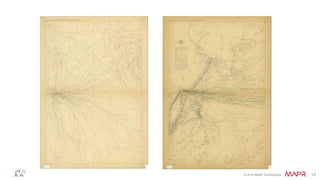

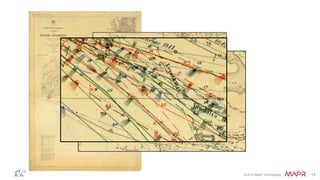

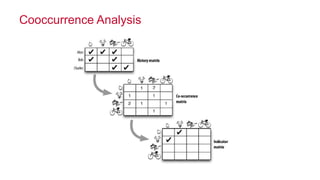

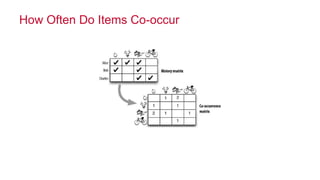

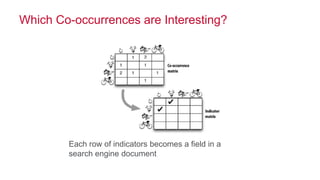

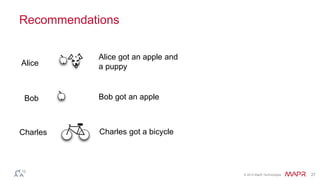

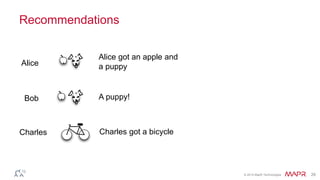

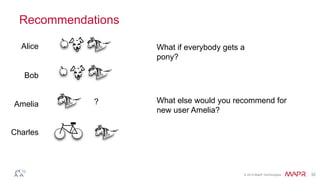

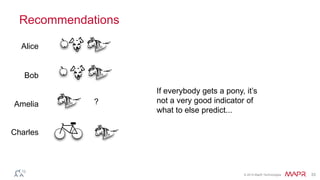

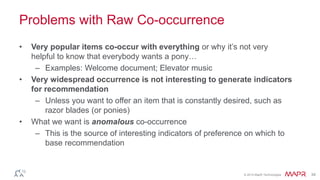

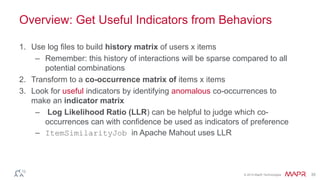

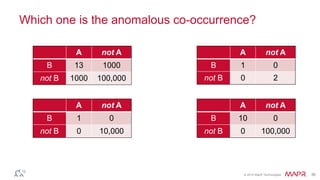

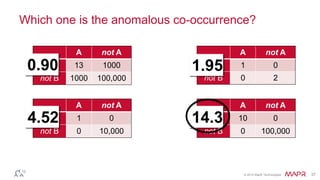

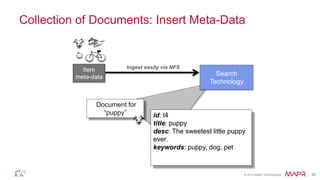

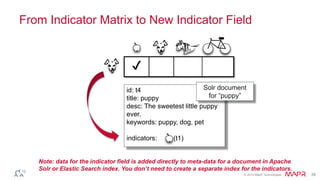

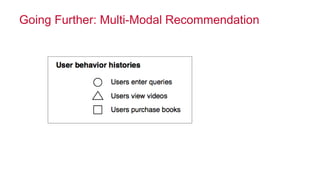

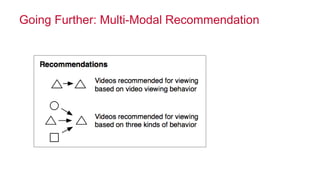

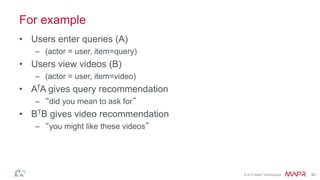

This document presents insights from Ted Dunning, Chief Applications Architect at MapR Technologies, on how big data project practices have evolved, particularly in behavioral analysis and recommendation systems. It critiques common assumptions about how data is used in recommendations and emphasizes the importance of anomalous co-occurrences for generating useful indicators. It also highlights the need to integrate multiple forms of data to improve recommendation systems, drawing on historical examples and contrasting them with current practices.
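To make "anomalous co-occurrence" concrete, here is a minimal sketch of one common way to score it: a log-likelihood ratio (G²) test on a 2x2 contingency table of counts. The summary does not say which test the slides use, so the choice of G² is an assumption, and the function name `llr_2x2` and its argument names are hypothetical.

```python
import math


def llr_2x2(k11, k12, k21, k22):
    """G^2 log-likelihood ratio score for a 2x2 co-occurrence table.

    Assumed meaning of the counts (illustrative, not from the source):
      k11: users who interacted with both item A and item B
      k12: users who interacted with A but not B
      k21: users who interacted with B but not A
      k22: users who interacted with neither
    """
    n = k11 + k12 + k21 + k22
    if n == 0:
        return 0.0
    row_totals = (k11 + k12, k21 + k22)
    col_totals = (k11 + k21, k12 + k22)
    cells = (
        (k11, row_totals[0], col_totals[0]),
        (k12, row_totals[0], col_totals[1]),
        (k21, row_totals[1], col_totals[0]),
        (k22, row_totals[1], col_totals[1]),
    )
    total = 0.0
    for observed, row, col in cells:
        # A zero cell contributes nothing (the limit of k * ln(k) as k -> 0 is 0).
        if observed > 0:
            # Compare the observed count with the count expected if A and B
            # were independent: row * col / n.
            total += observed * math.log(observed * n / (row * col))
    return 2.0 * total
```

A score near zero means the pair co-occurs about as often as chance predicts; a large score marks the co-occurrence as anomalous. The score is also large when a pair co-occurs far less often than expected, so a sketch like this would typically be paired with a check that `k11` exceeds its expected count before treating item B as an indicator for item A.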