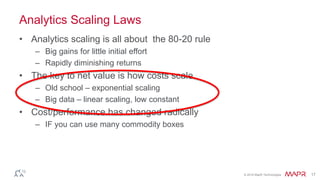

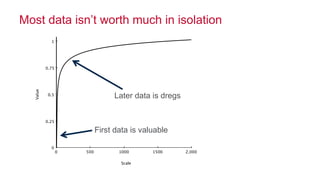

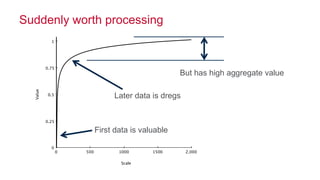

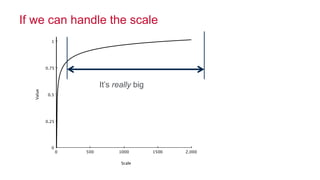

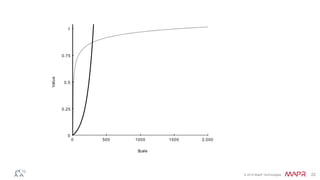

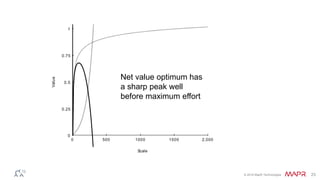

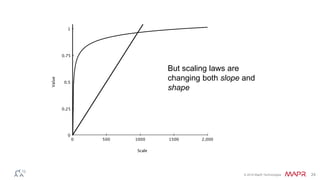

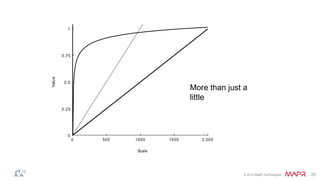

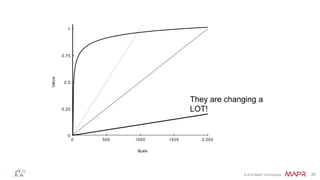

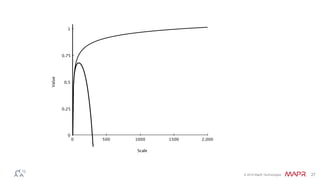

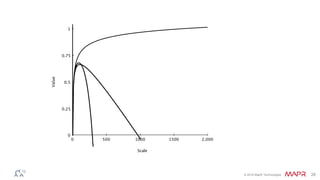

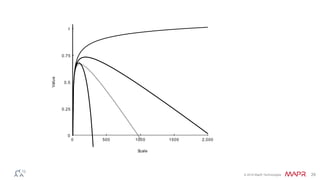

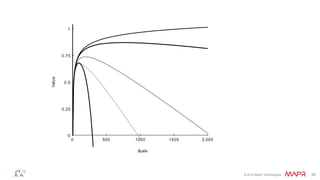

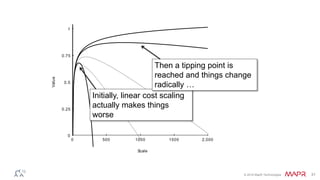

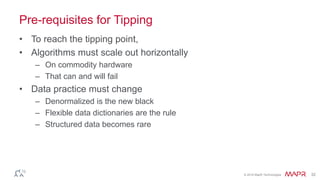

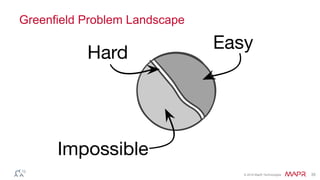

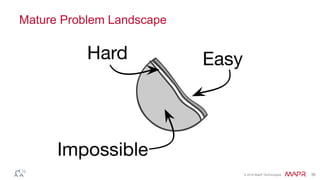

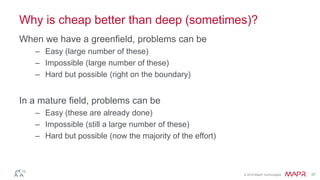

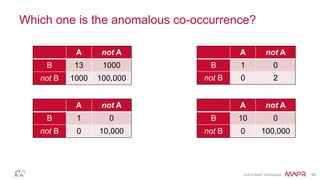

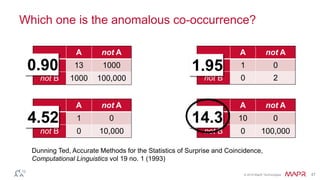

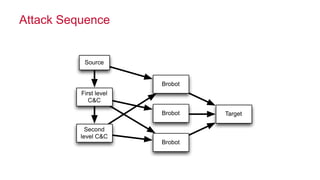

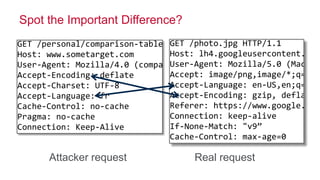

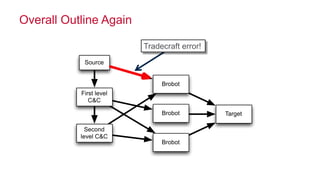

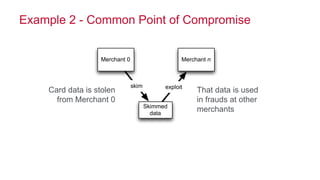

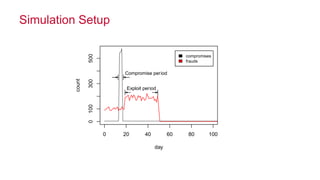

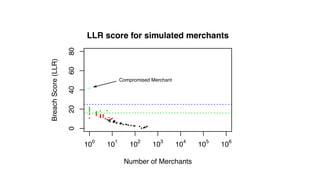

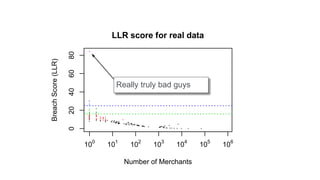

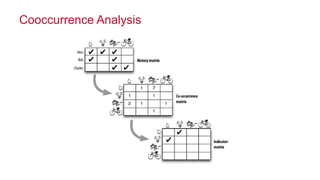

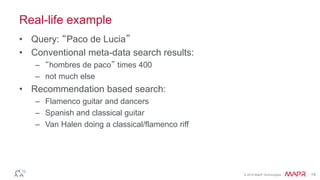

The document discusses how big data has created new opportunities by changing scaling laws and, with them, the landscape of solvable problems. Because costs now scale linearly with data size, processing very large data sets has become feasible, which opens up many problems that were previously impossible or too difficult. The result is a set of "green field" opportunities where simple approaches can solve important problems. Two examples are discussed: analyzing logs to detect security threats, and using transaction histories to find the common point of compromise behind a data breach.
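
To make the second example concrete, here is a minimal sketch of one simple way to look for a common point of compromise. It is not taken from the document: the function name `rank_merchants`, the `(card_id, merchant_id)` record layout, the sample data, and the scoring rule (share of compromised cards seen at a merchant minus share of uncompromised cards seen there) are all assumptions chosen for illustration. The work is a single pass over the transactions followed by one pass over the merchants, in keeping with the summary's point that simple approaches can suffice.

```python
from collections import defaultdict


def rank_merchants(transactions, compromised_cards):
    """Rank merchants by how over-represented compromised cards are.

    transactions: iterable of (card_id, merchant_id) pairs (hypothetical layout).
    compromised_cards: card ids known to have been used fraudulently.
    Returns a list of (merchant_id, score) pairs, highest score first.
    """
    compromised_cards = set(compromised_cards)
    cards_by_merchant = defaultdict(set)
    all_cards = set()

    # Single pass over the history: record which cards visited each merchant.
    for card_id, merchant_id in transactions:
        cards_by_merchant[merchant_id].add(card_id)
        all_cards.add(card_id)

    n_bad = len(compromised_cards & all_cards)
    n_good = len(all_cards - compromised_cards)

    scores = []
    for merchant_id, cards in cards_by_merchant.items():
        bad = len(cards & compromised_cards)
        good = len(cards) - bad
        # Assumed scoring rule: difference between the two visit rates.
        bad_rate = bad / n_bad if n_bad else 0.0
        good_rate = good / n_good if n_good else 0.0
        scores.append((merchant_id, bad_rate - good_rate))

    scores.sort(key=lambda item: item[1], reverse=True)
    return scores


# Made-up sample data: every compromised card was used at "gas".
transactions = [
    ("c1", "grocer"), ("c1", "gas"),
    ("c2", "gas"), ("c2", "cafe"),
    ("c3", "gas"), ("c3", "grocer"),
    ("c4", "cafe"),
    ("c5", "grocer"), ("c5", "cafe"),
]
compromised = {"c1", "c2", "c3"}

for merchant, score in rank_merchants(transactions, compromised):
    print(f"{merchant}: {score:+.2f}")
```

A merchant visited by most compromised cards and few others rises to the top of the ranking. A production system would likely need a more careful statistic and a time window, which this sketch leaves out.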