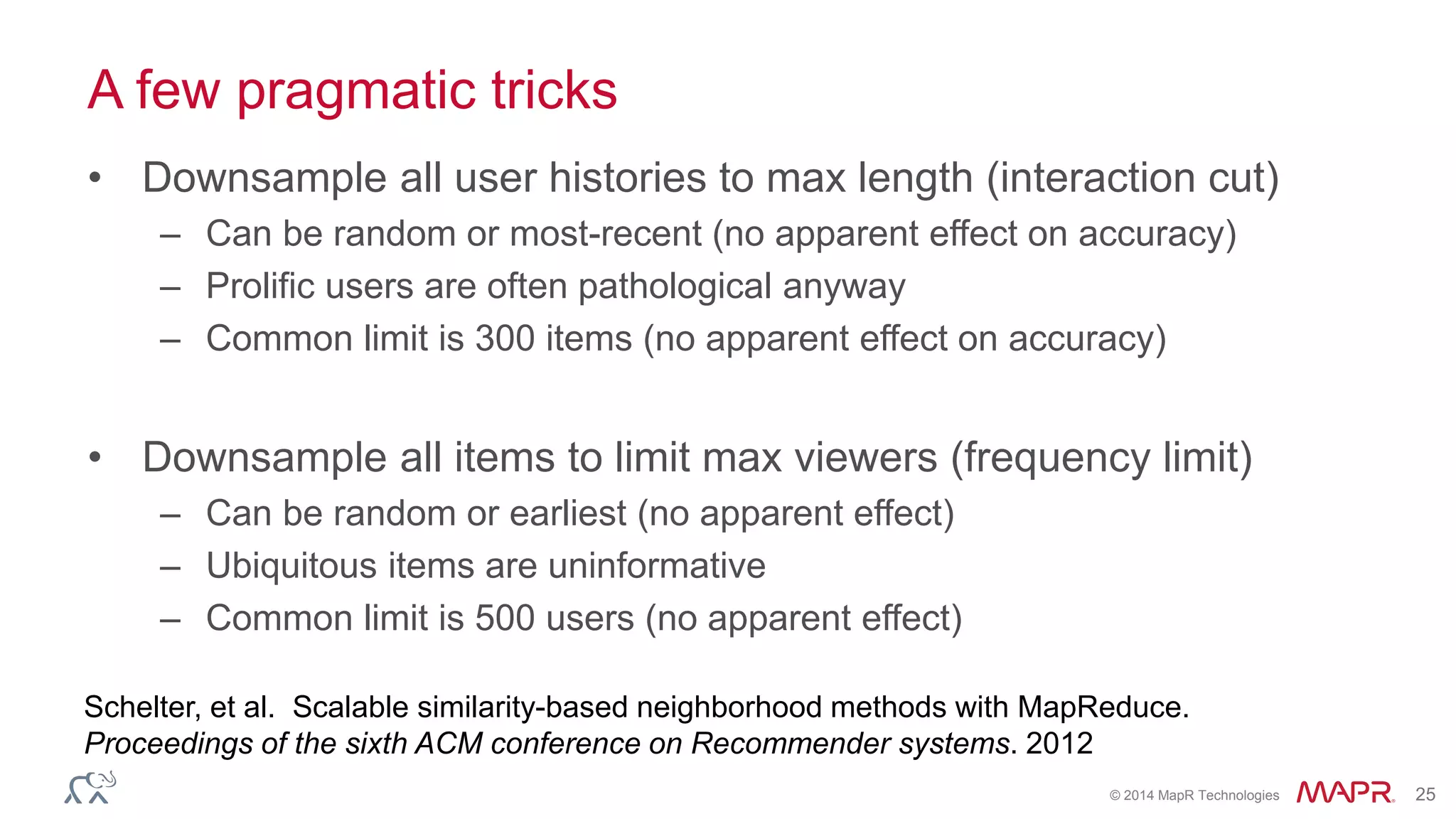

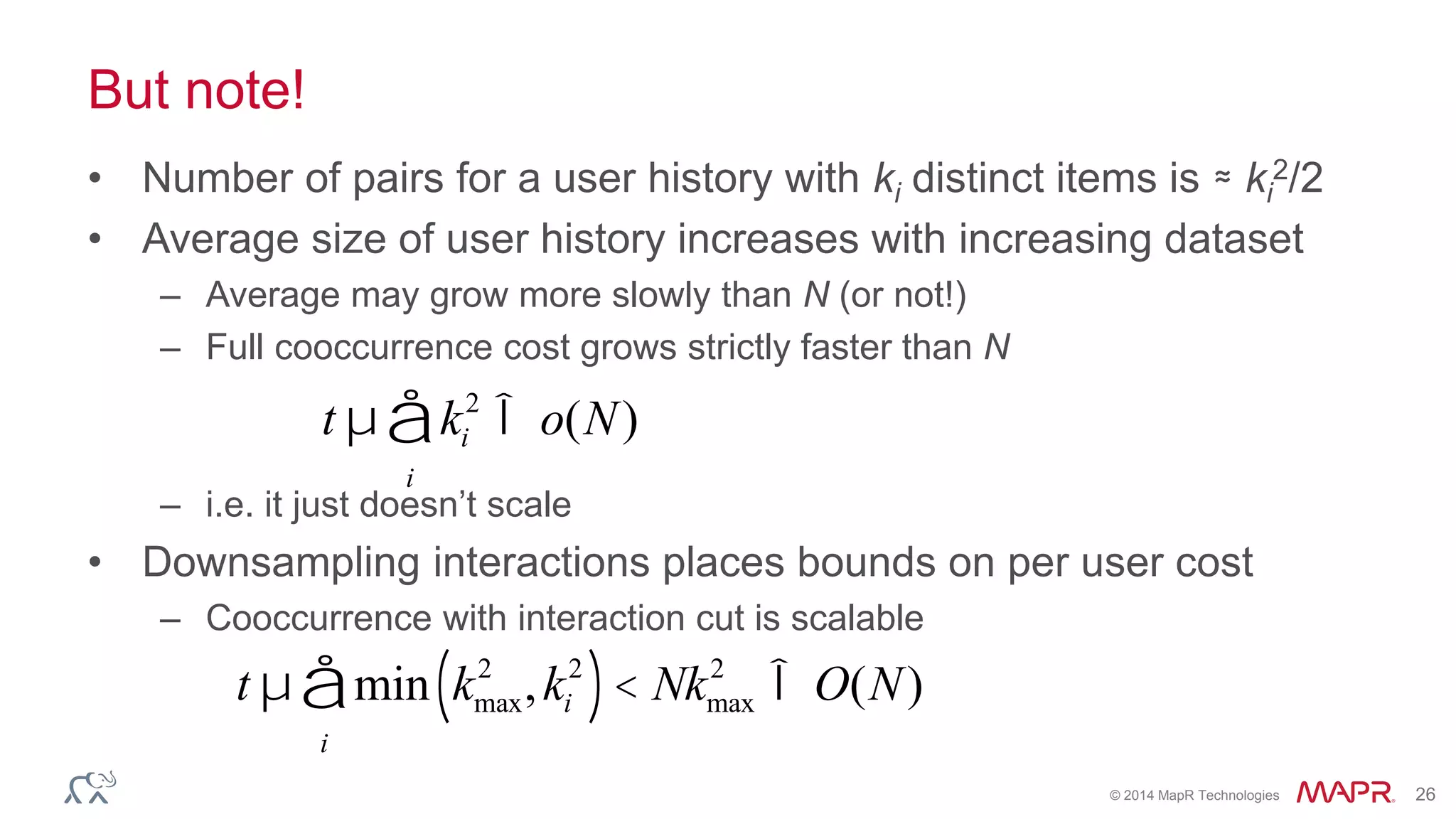

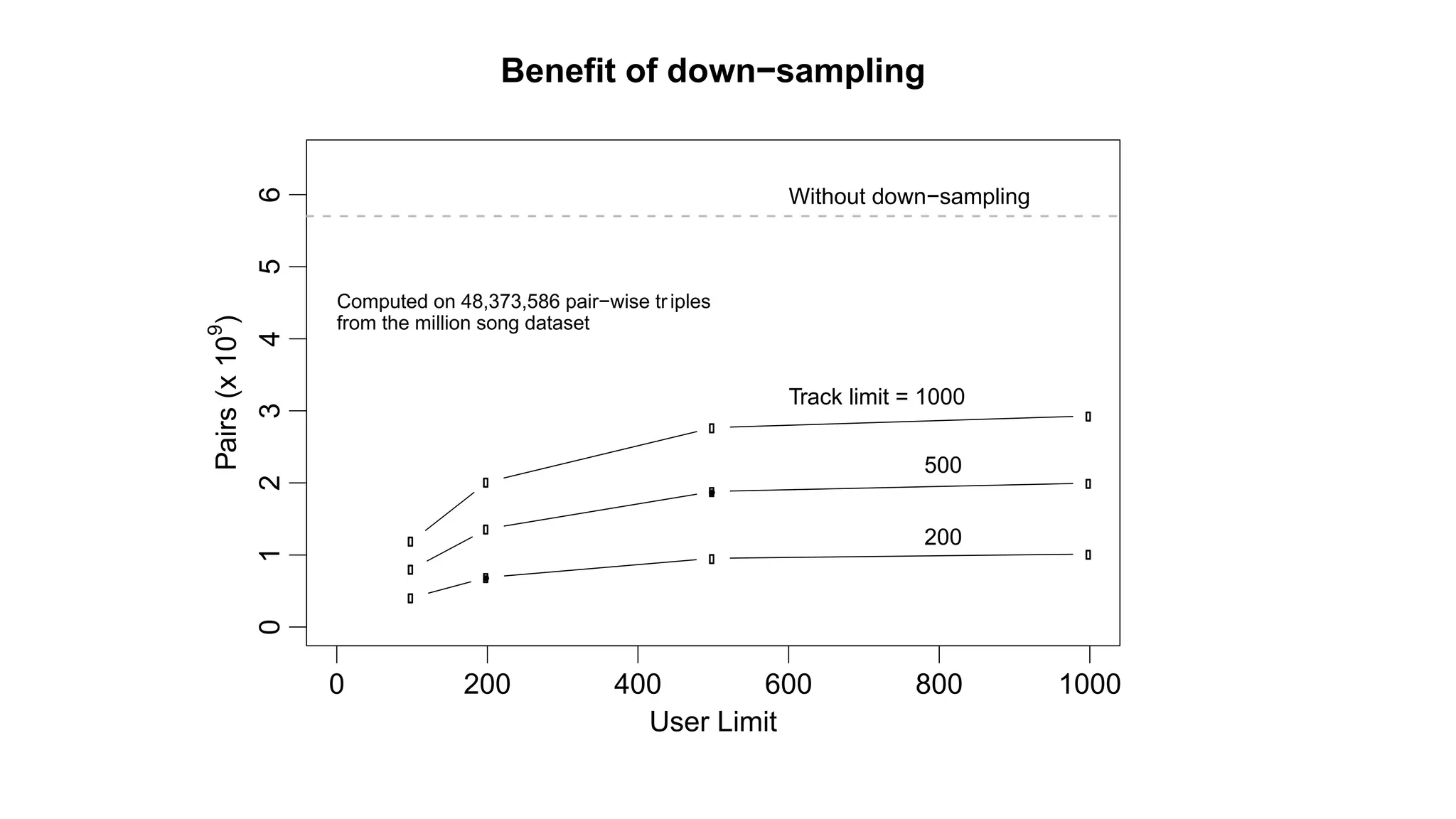

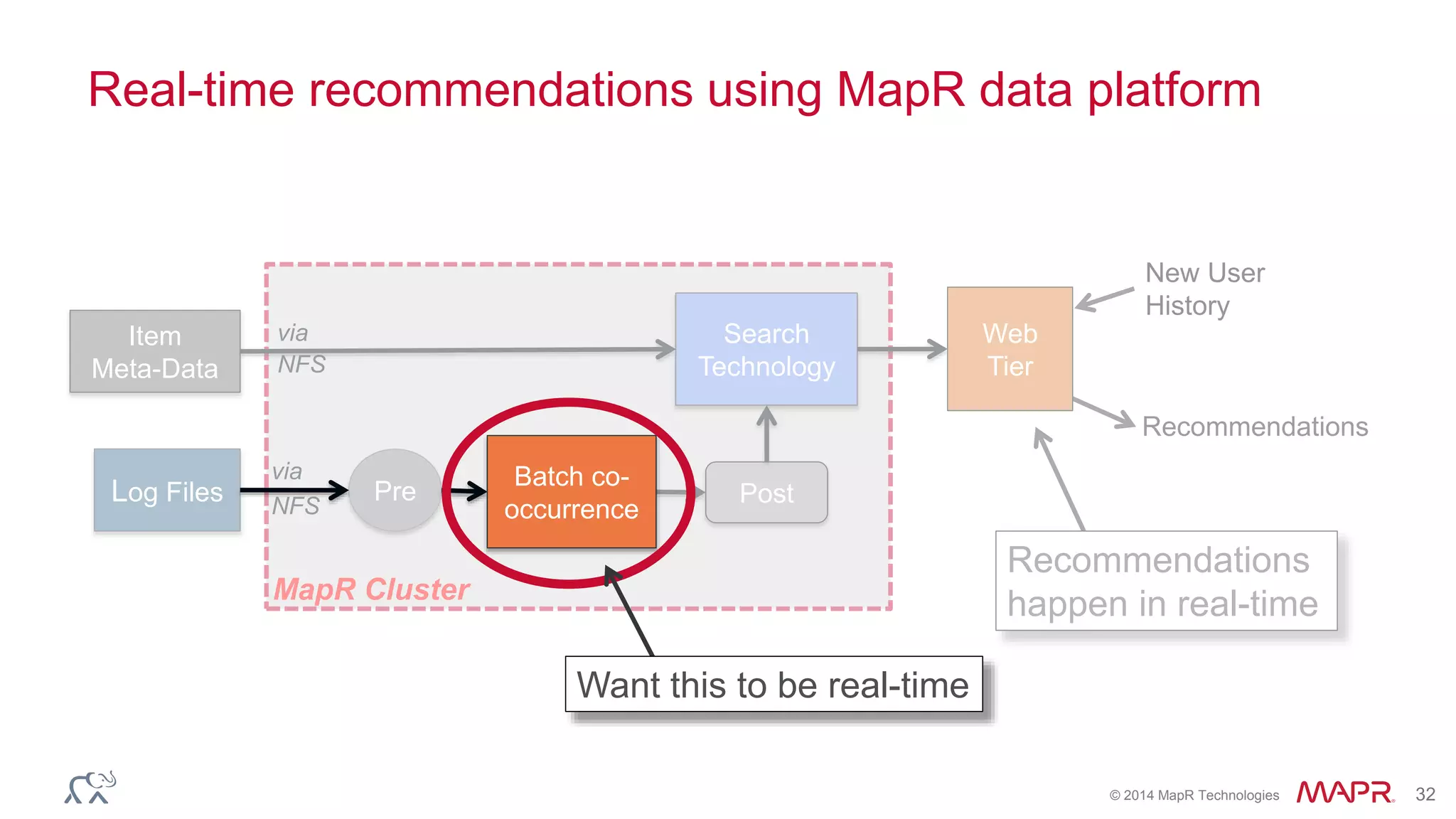

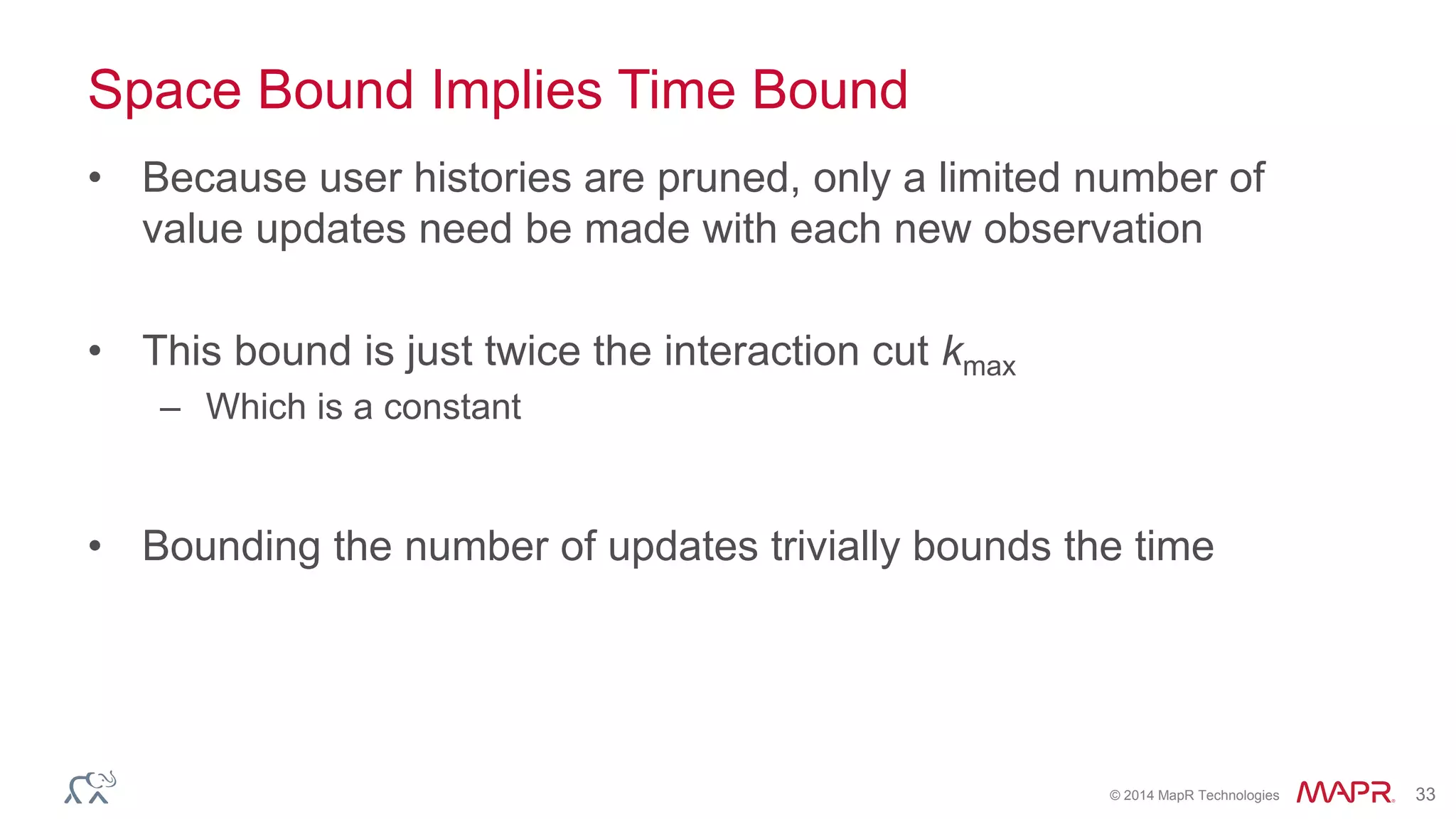

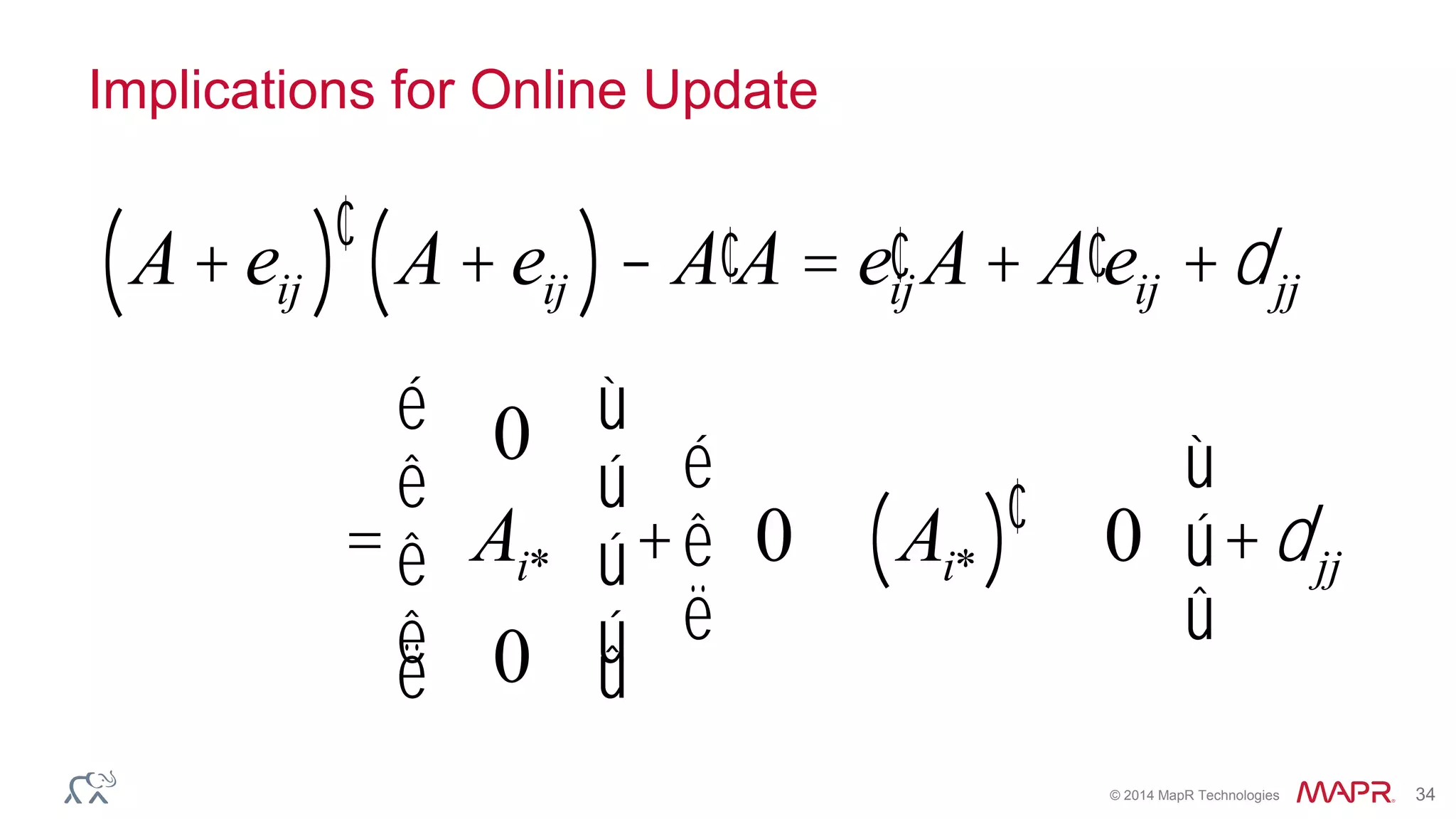

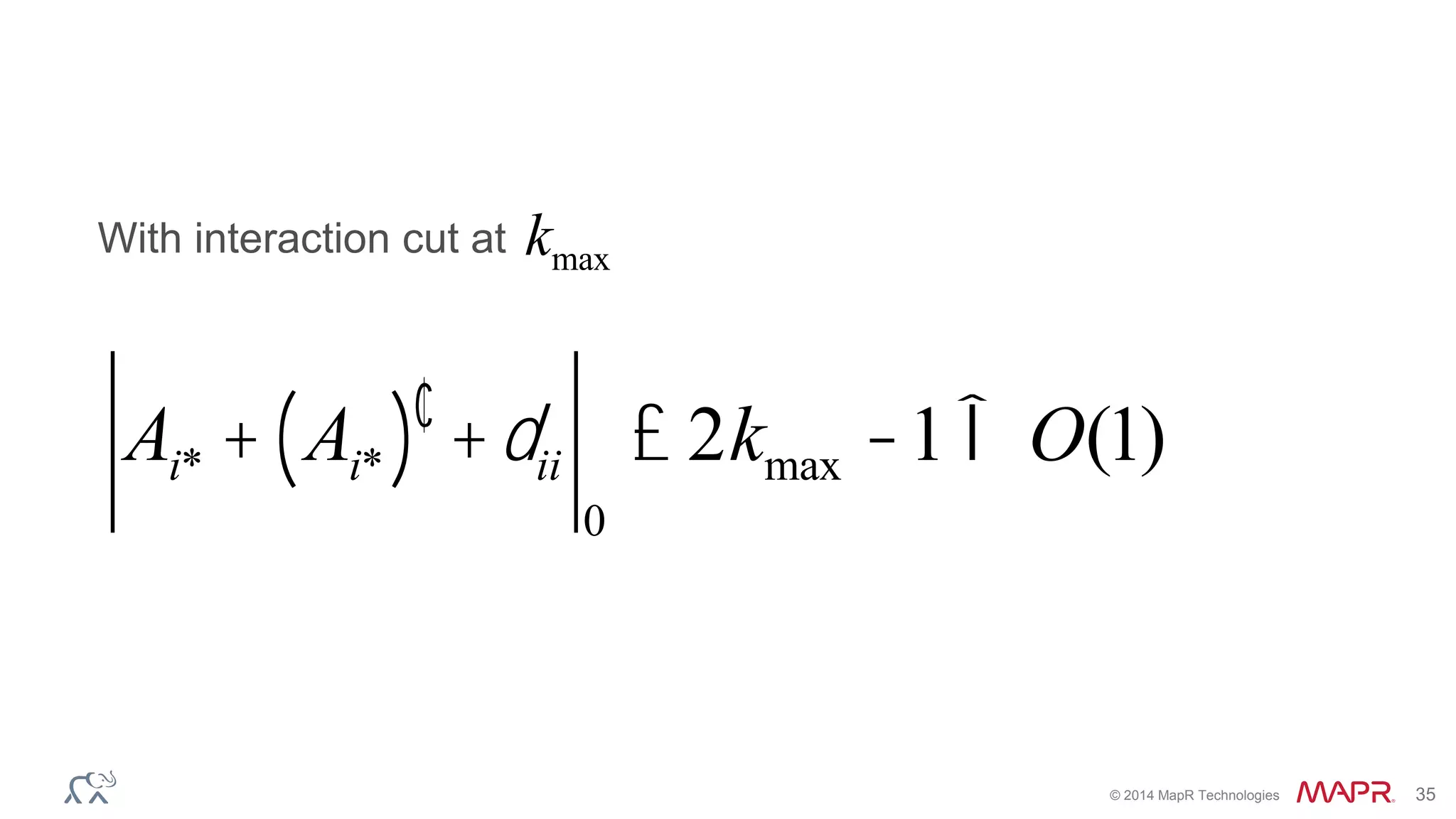

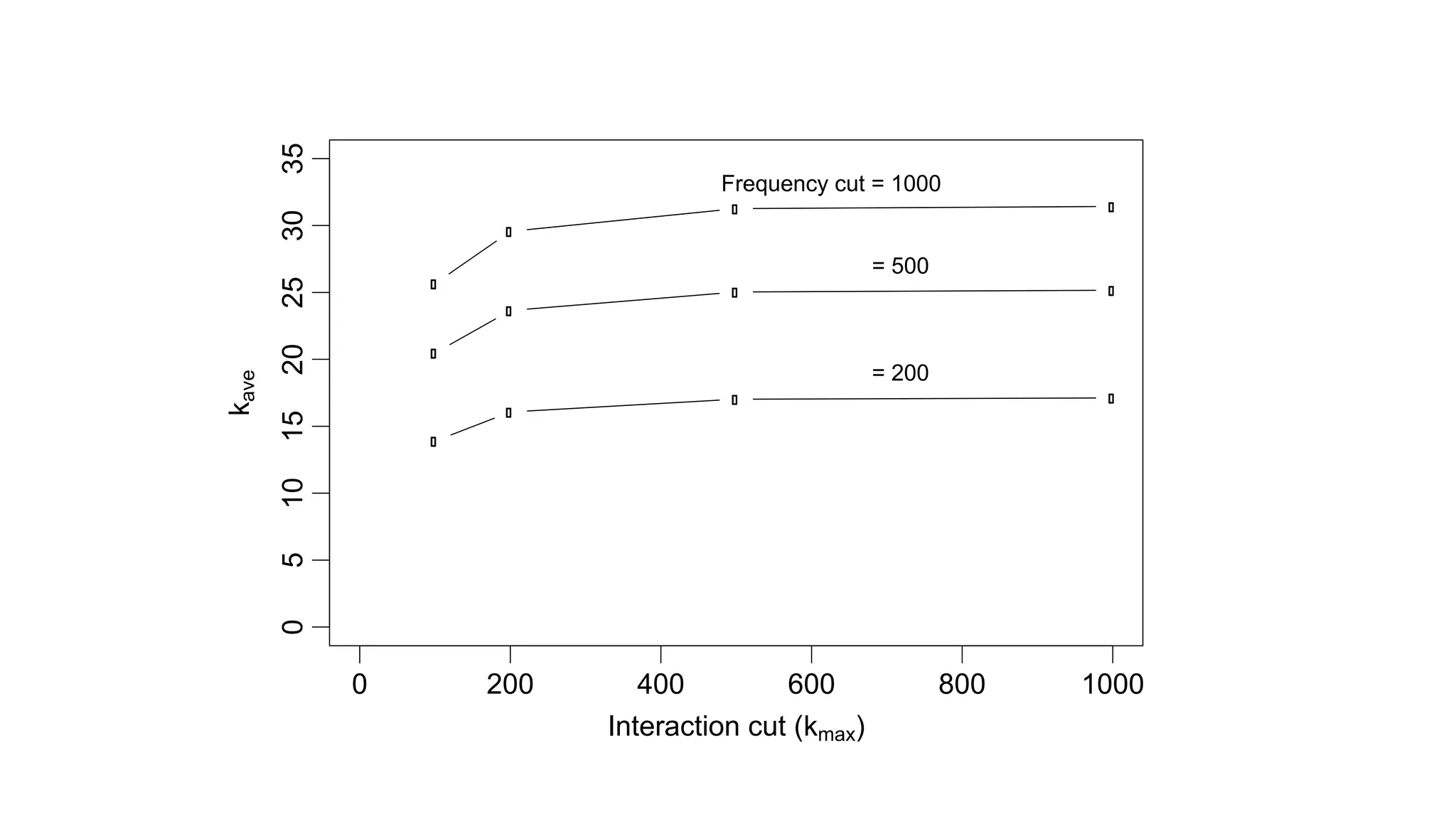

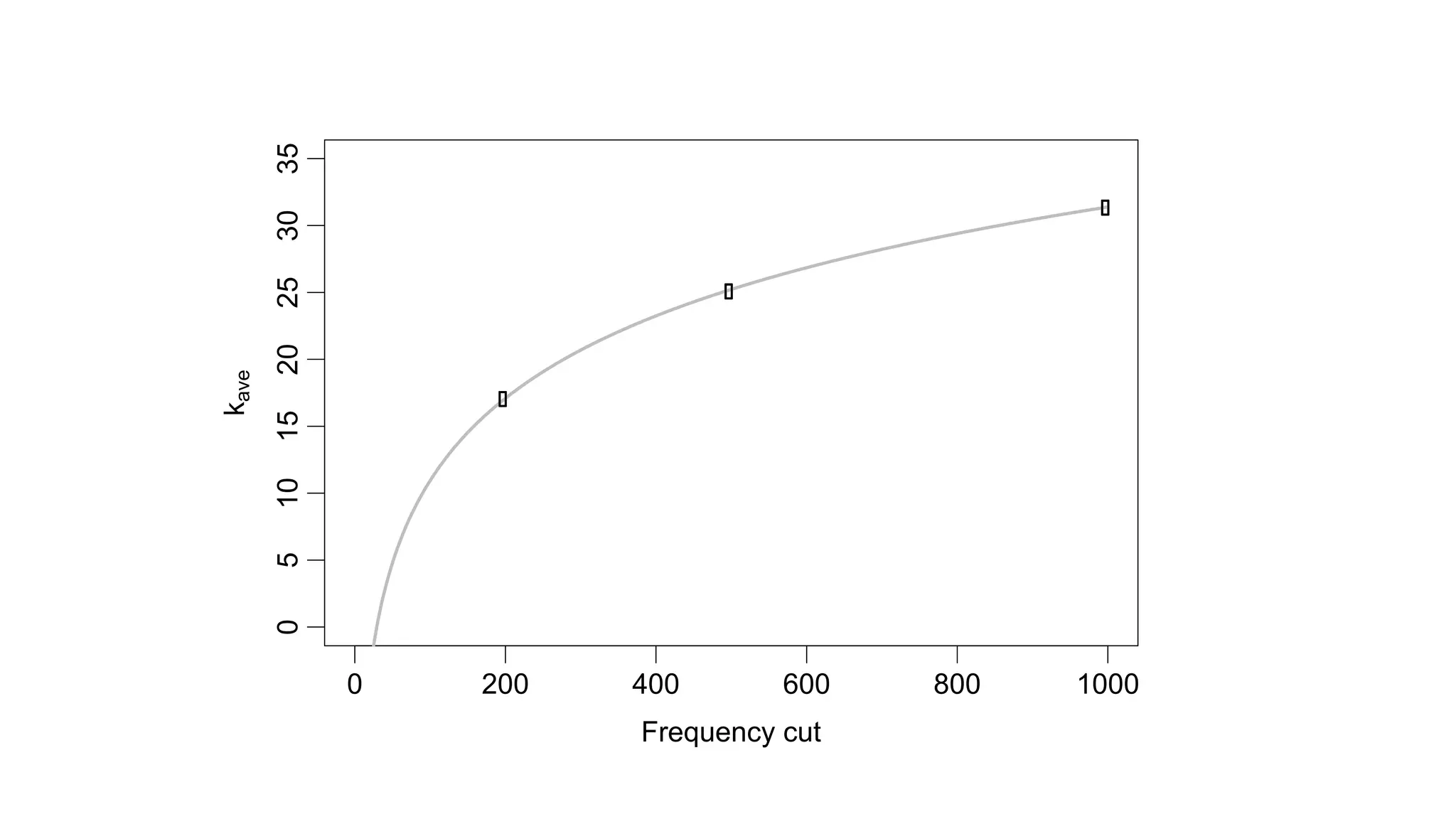

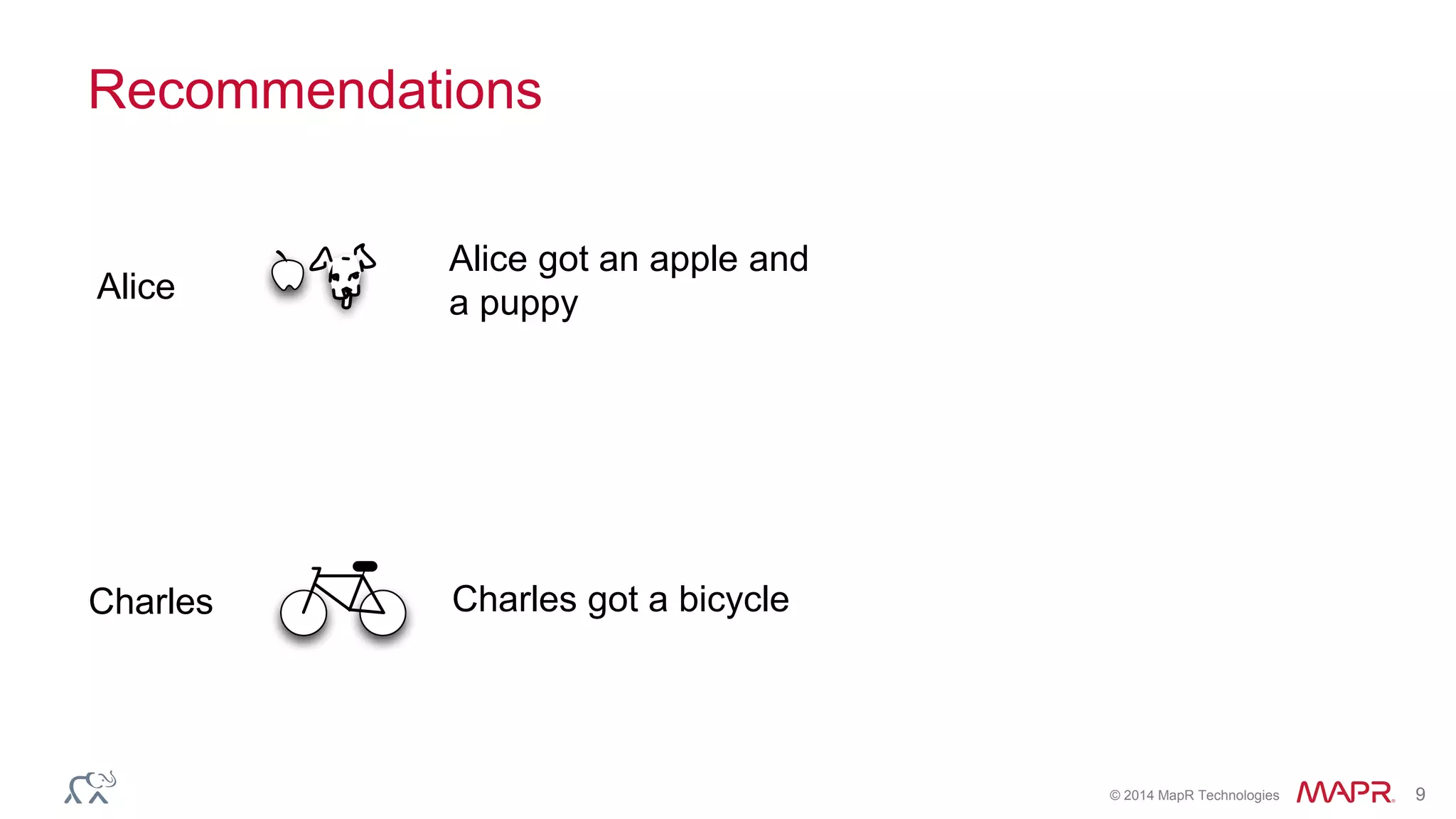

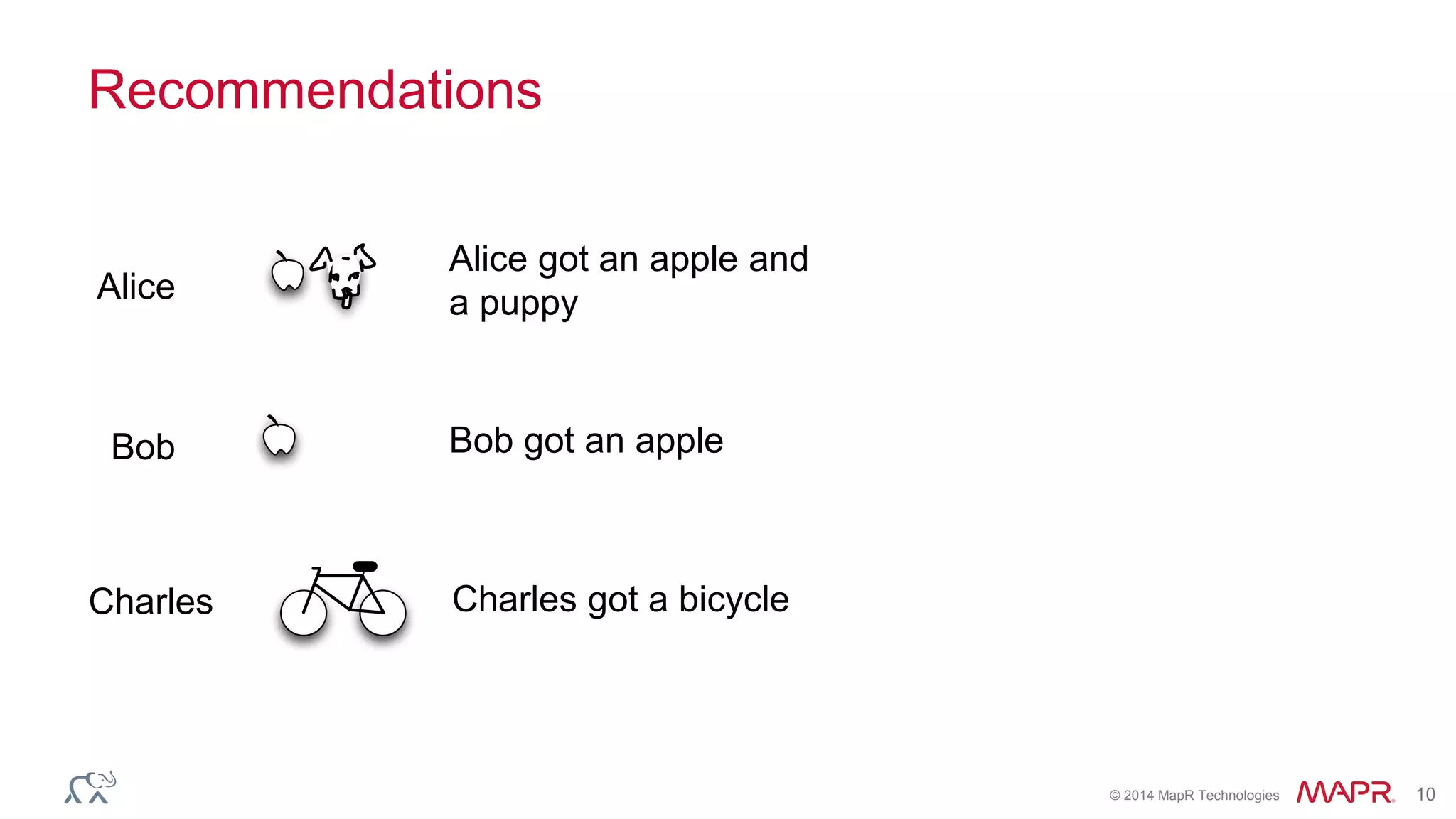

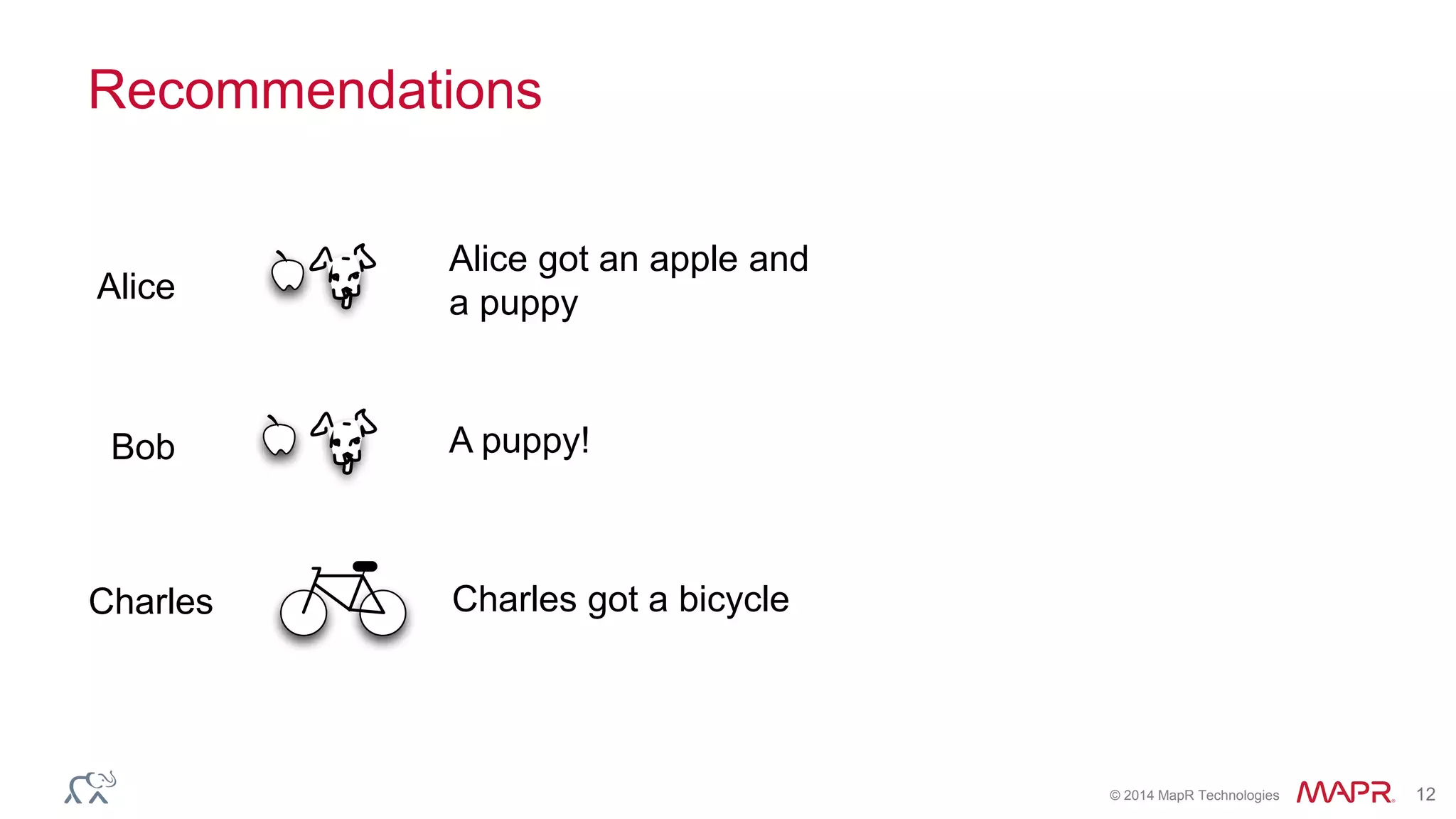

This document discusses techniques for making recommendations in real time using co-occurrence analysis. It describes how two downsampling steps, the interaction cut and the frequency cut, allow batch co-occurrence analysis to scale to large datasets. The same techniques also enable an online approach that updates recommendations in real time with each new user interaction. The key insight is that limiting the length of user histories and the frequency of items bounds the number of updates needed for each new data point, which allows real-time recommendations on MapR's distributed data platform.
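The bounded-update idea can be sketched in a few lines of Python. This is a minimal illustration, not the implementation from the talk: the class name `OnlineCooccurrence`, the parameters `max_history` and `max_item_freq`, and the choice to simply drop interactions past each limit (rather than sample them) are all assumptions made for the example.

```python
from collections import defaultdict


class OnlineCooccurrence:
    """Hypothetical sketch of online co-occurrence counting with two cuts."""

    def __init__(self, max_history=3, max_item_freq=2):
        self.max_history = max_history      # interaction cut: cap on items kept per user
        self.max_item_freq = max_item_freq  # frequency cut: cap on accepted interactions per item
        self.history = defaultdict(list)    # user -> items accepted so far
        self.item_freq = defaultdict(int)   # item -> number of accepted interactions
        self.counts = defaultdict(int)      # (item, other) -> co-occurrence count

    def observe(self, user, item):
        """Process one interaction and return how many pair counts were updated."""
        seen = self.history[user]
        if item in seen:
            return 0  # repeat interaction, nothing new to count
        if len(seen) >= self.max_history:
            return 0  # interaction cut: this user's history is already full
        if self.item_freq[item] >= self.max_item_freq:
            return 0  # frequency cut: this item is already frequent enough
        updates = 0
        for other in seen:
            # Count the pair in both directions to keep the table symmetric.
            self.counts[(item, other)] += 1
            self.counts[(other, item)] += 1
            updates += 2
        seen.append(item)
        self.item_freq[item] += 1
        return updates


model = OnlineCooccurrence(max_history=3, max_item_freq=2)
for user, item in [("u1", "a"), ("u1", "b"), ("u1", "c"), ("u1", "d")]:
    print(user, item, model.observe(user, item))
```

Because a user's history never exceeds `max_history` items, a single new interaction touches at most `2 * (max_history - 1)` pair counts, no matter how large the dataset grows.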

![© 2014 MapR Technologies 23

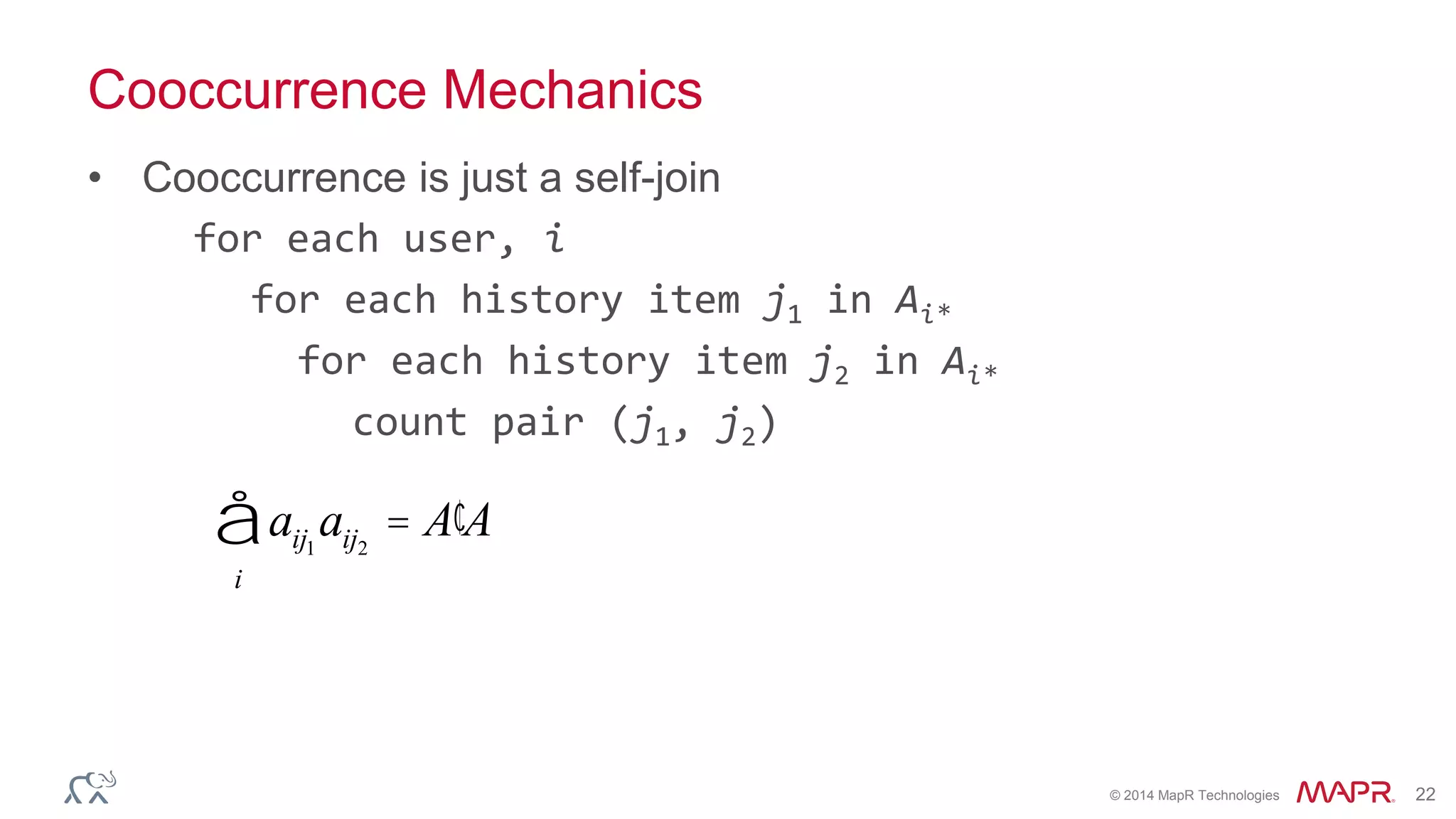

Cross-occurrence Mechanics

• Cross occurrence is just a self-join of adjoined matrices

for each user, i

for each history item j1 in Ai*

for each history item j2 in Bi*

count pair (j1, j2)

aij1

bij2

= ¢A B

i

å A | B[ ]¢ A | B[ ] =

¢A A ¢A B

¢B A ¢B B

é

ë

ê

ù

û

ú](https://image.slidesharecdn.com/dunning-ml-conf-2014-150428210102-conversion-gate01/75/Dunning-ml-conf-2014-22-2048.jpg)
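The slide's loop over users and the product A'B can be checked against each other on a small example. The sketch below assumes dense 0/1 interaction matrices stored as lists of rows, one row per user; the function names and the sample data are hypothetical and chosen only for illustration.

```python
from collections import Counter


def cross_occurrence_counts(A, B):
    """Count pairs (j1, j2) with the nested loop from the slide."""
    counts = Counter()
    for a_row, b_row in zip(A, B):            # for each user i
        for j1, a in enumerate(a_row):        # for each history item j1 in A_i*
            if not a:
                continue
            for j2, b in enumerate(b_row):    # for each history item j2 in B_i*
                if b:
                    counts[(j1, j2)] += 1     # count pair (j1, j2)
    return counts


def transpose_product(A, B):
    """Compute A'B directly: entry (j1, j2) is the sum over users of a[i][j1] * b[i][j2]."""
    n_a, n_b = len(A[0]), len(B[0])
    return [
        [sum(a_row[j1] * b_row[j2] for a_row, b_row in zip(A, B)) for j2 in range(n_b)]
        for j1 in range(n_a)
    ]


# Hypothetical data: 3 users, 3 items of one interaction type (A), 2 of another (B).
A = [[1, 1, 0],
     [0, 1, 1],
     [1, 0, 1]]
B = [[1, 0],
     [1, 1],
     [0, 1]]

counts = cross_occurrence_counts(A, B)
AtB = transpose_product(A, B)

# The loop counts and the matrix product agree entry by entry.
assert all(counts[(j1, j2)] == AtB[j1][j2]
           for j1 in range(len(AtB)) for j2 in range(len(AtB[0])))
print(AtB)
```

Passing the same matrix for both arguments gives the ordinary co-occurrence block A'A, which is the upper-left block of the adjoined product.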