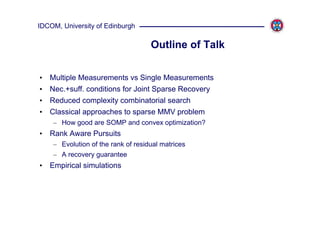

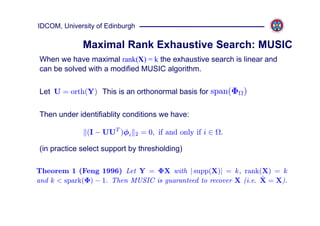

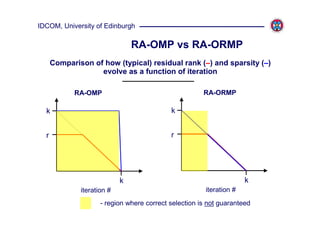

This document discusses rank-aware algorithms for joint sparse recovery from multiple measurement vectors (MMV). It begins by introducing the MMV problem and showing that when the rank of the signal matrix is r, the necessary and sufficient conditions for unique recovery are less restrictive than in the single measurement vector (SMV) case. Classical MMV algorithms such as SOMP and ℓ1/ℓq minimization are not rank-aware. The document then proposes two rank-aware pursuit algorithms:

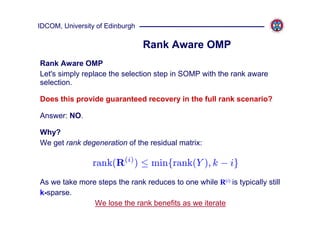

1) Rank-Aware OMP (RA-OMP), which modifies the atom selection step of SOMP but still suffers from rank degeneration over iterations.

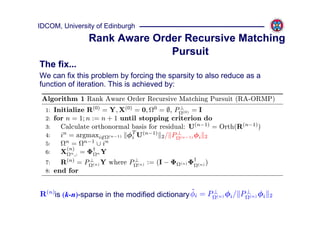

2) Rank-Aware Order Recursive Matching Pursuit (RA-ORMP), which forces the sparsity

![IDCOM, University of Edinburgh

MMV uniqueness

Worst Case

• Uniqueness of the solution for the sparse MMV problem is equivalent to that for the SMV problem: simply replicate the SMV problem,

X = [x, x, . . . , x].

Hence the necessary and sufficient condition to uniquely determine each k-sparse vector x is given by the SMV condition:

$|\mathrm{supp}(X)| = k < \dfrac{\mathrm{spark}(\Phi)}{2}$

Rank 'r' Case

• If rank(Y) = r, then the necessary and sufficient condition is less restrictive [Chen & Huo 2006, D. & Eldar 2010]:

$|\mathrm{supp}(X)| = k < \dfrac{\mathrm{spark}(\Phi) - 1 + \mathrm{rank}(Y)}{2}$

Equivalently, we can replace rank(Y) with rank(X).

More measurements (higher rank) make recovery easier!](https://image.slidesharecdn.com/rankawarealgs-small11-110317055820-phpapp02/85/Rank-awarealgs-small11-5-320.jpg)
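As a small numerical illustration of the two bounds, the sketch below computes spark(Φ) by brute force (feasible only for tiny matrices) and the largest integer k satisfying k < (spark(Φ) − 1 + r)/2; setting r = 1 gives the SMV bound. The helper names `spark` and `max_unique_sparsity` are hypothetical, and NumPy is assumed.

```python
import itertools
import numpy as np

def spark(Phi, tol=1e-10):
    """Smallest number of linearly dependent columns of Phi (brute force)."""
    n = Phi.shape[1]
    for size in range(1, n + 1):
        for cols in itertools.combinations(range(n), size):
            if np.linalg.matrix_rank(Phi[:, list(cols)], tol=tol) < size:
                return size
    return None  # no dependent subset: all columns are linearly independent

def max_unique_sparsity(spark_phi, r):
    """Largest integer k with k < (spark(Phi) - 1 + r) / 2."""
    return (spark_phi - 2 + r) // 2

rng = np.random.default_rng(0)
Phi = rng.standard_normal((4, 6))   # generic 4x6 matrix: spark = m + 1 = 5
s = spark(Phi)
print(s, max_unique_sparsity(s, 1), max_unique_sparsity(s, 3))
```

For the same Φ, a rank-3 signal matrix admits one more nonzero row than the rank-1 (SMV) case.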

![Exhaustive search solution

How does the rank change the exhaustive search?

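SMV exhaustive search:

find Λ, |Λ| = k, s.t. $\Phi_\Lambda X_\Lambda = Y$ (where $X_\Lambda$ denotes the rows of X indexed by Λ)

However, since span(Y) ⊂ span(Φ_Λ) and rank(Y) = r,

$\exists\, \gamma \subset \Lambda,\ |\gamma| = k - r, \text{ s.t. } \mathrm{span}([\Phi_\gamma, Y]) = \mathrm{span}(\Phi_\Lambda)$

In fact, we have a reduced $\binom{n}{k-r+1}$ combinatorial search.](https://image.slidesharecdn.com/rankawarealgs-small11-110317055820-phpapp02/85/Rank-awarealgs-small11-7-320.jpg)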
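The span identity above suggests the following rough sketch of a rank-aware exhaustive search, assuming noiseless data and NumPy. It enumerates only candidate sets γ of size k − r and, for each one, runs a single membership test per atom against span([Φ_γ, Y]), so each trial touches k − r + 1 atoms rather than k. The function names and the tolerance are hypothetical; this illustrates the counting argument and is not the authors' implementation.

```python
import itertools
import numpy as np

def orth(M, tol=1e-10):
    """Orthonormal basis for the column span of M (via SVD)."""
    U, s, _ = np.linalg.svd(M, full_matrices=False)
    return U[:, s > tol * s[0]]

def rank_aware_exhaustive_search(Phi, Y, k, tol=1e-8):
    """Return (support, X) with Phi @ X = Y and |support| = k, or None."""
    n = Phi.shape[1]
    r = int(np.linalg.matrix_rank(Y))
    if r > k:
        return None
    col_norms = np.linalg.norm(Phi, axis=0)
    for gamma in itertools.combinations(range(n), k - r):
        Q = orth(np.hstack([Phi[:, list(gamma)], Y]))
        if Q.shape[1] != k:
            continue  # span([Phi_gamma, Y]) must be k-dimensional
        # Membership test per atom: does phi_j lie in span([Phi_gamma, Y])?
        resid = np.linalg.norm(Phi - Q @ (Q.T @ Phi), axis=0)
        inside = np.flatnonzero(resid < tol * col_norms)
        if inside.size == k:
            X = np.zeros((n, Y.shape[1]))
            X[inside] = np.linalg.lstsq(Phi[:, inside], Y, rcond=None)[0]
            return tuple(int(j) for j in inside), X
    return None
```

A wrong γ generically yields a k-dimensional span that contains only the atoms of γ itself, so it is rejected by the `inside.size == k` check.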

![Do such MMV solutions exploit the rank?

Answer: NO. [D. & Eldar 2010]

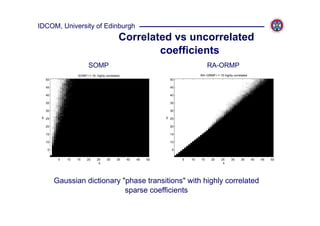

Theorem 2 (SOMP is not rank aware). Let τ be given such that 1 ≤ τ ≤ k and suppose that

$\max_{j \in \Lambda^c} \|\Phi_\Lambda^\dagger \phi_j\|_1 > 1$

for some support Λ, |Λ| = k. Then there exists an X with supp(X) = Λ and rank(X) = τ that SOMP cannot recover.

(The condition above is the failure of the SMV OMP Exact Recovery Condition.)

Proof: a rank-r perturbation of a rank-1 problem approaches the rank-1 recovery property due to continuity of the norm.](https://image.slidesharecdn.com/rankawarealgs-small11-110317055820-phpapp02/85/Rank-awarealgs-small11-13-320.jpg)
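The quantity thresholded in Theorem 2 can be evaluated directly. The sketch below, assuming NumPy and 0-based column indices, computes $\max_{j \in \Lambda^c} \|\Phi_\Lambda^\dagger \phi_j\|_1$ for a hypothetical 2×3 dictionary whose third atom is the normalized sum of the first two. With Λ = {0, 1} the value is √2 > 1, so by Theorem 2 there is, for each admissible τ, a rank-τ X on that support that SOMP cannot recover.

```python
import numpy as np

def erc_value(Phi, support):
    """max over j outside the support of ||pinv(Phi_Lambda) @ phi_j||_1."""
    support = list(support)
    off = [j for j in range(Phi.shape[1]) if j not in support]
    coeffs = np.linalg.pinv(Phi[:, support]) @ Phi[:, off]
    return float(np.abs(coeffs).sum(axis=0).max())

# Hypothetical dictionary: third atom = (phi_0 + phi_1) / sqrt(2)
Phi = np.array([[1.0, 0.0, 1 / np.sqrt(2)],
                [0.0, 1.0, 1 / np.sqrt(2)]])
v = erc_value(Phi, [0, 1])
print(v > 1)  # True: the SMV exact recovery condition fails for this support
```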

![Do such MMV solutions exploit the rank?

Answer: NO. [D. & Eldar 2010]

Theorem 3 (ℓ1/ℓq minimization is not rank aware). Let τ be given such that 1 ≤ τ ≤ k and suppose that there exists a z ∈ N(Φ) such that

$\|z_\Lambda\|_1 > \|z_{\Lambda^c}\|_1$

for some support Λ, |Λ| = k. Then there exists an X with supp(X) = Λ and rank(X) = τ that the mixed-norm solution cannot recover.

(The condition above is the failure of the SMV ℓ1 Null Space Property.)

Proof: a rank-r perturbation of a rank-1 problem approaches the rank-1 recovery property due to continuity of the norm.](https://image.slidesharecdn.com/rankawarealgs-small11-110317055820-phpapp02/85/Rank-awarealgs-small11-14-320.jpg)
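The hypothesis of Theorem 3 can be checked for a given null-space vector and support. In the hypothetical 2×3 example below (NumPy assumed, 0-based indices), N(Φ) is one-dimensional, so a single basis vector covers every z ∈ N(Φ) up to scaling; for larger null spaces the null space property quantifies over all z, which this sketch does not search.

```python
import numpy as np

def null_space_basis(Phi, tol=1e-10):
    """Columns form an orthonormal basis of N(Phi) (via full SVD)."""
    _, s, Vt = np.linalg.svd(Phi)
    rank = int(np.sum(s > tol * s[0]))
    return Vt[rank:].T

def nsp_violated(z, support):
    """True if ||z_Lambda||_1 > ||z_{Lambda^c}||_1 for this z and support."""
    mask = np.zeros(z.size, dtype=bool)
    mask[list(support)] = True
    return bool(np.abs(z[mask]).sum() > np.abs(z[~mask]).sum())

Phi = np.array([[1.0, 0.0, 1.0],
                [0.0, 1.0, 1.0]])
z = null_space_basis(Phi)[:, 0]   # proportional to [1, 1, -1]
print(nsp_violated(z, [0, 1]), nsp_violated(z, [2]))  # True False
```

So for k = 2 and Λ = {0, 1}, Theorem 3 applies to this Φ.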

![Rank Aware Selection

Aim: to select individual atoms in a similar manner to modified MUSIC.

Rank Aware Selection [D. & Eldar 2010]

At the nth iteration make the following selection:

$\Lambda^{(n)} = \Lambda^{(n-1)} \cup \arg\max_i \|\phi_i^T U^{(n-1)}\|_2$

where $U^{(n-1)} = \mathrm{orth}(R^{(n-1)})$ is an orthonormal basis for the span of the residual $R^{(n-1)}$.

Properties:

1. Worst-case behaviour does not approach the SMV case.

2. When rank(R) = k, it always selects a correct atom, as with MUSIC.](https://image.slidesharecdn.com/rankawarealgs-small11-110317055820-phpapp02/85/Rank-awarealgs-small11-16-320.jpg)
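A minimal RA-OMP sketch built around the selection rule above, assuming NumPy and unit-norm columns of Φ (the scores ‖φ_iᵀU‖₂ are compared across atoms, so unnormalized columns would bias the choice). The residual update, a least-squares projection onto the selected atoms, is the usual OMP step and is an assumption here, since the slide only specifies the selection.

```python
import numpy as np

def orth(M, tol=1e-10):
    """Orthonormal basis for the column span of M (via SVD)."""
    U, s, _ = np.linalg.svd(M, full_matrices=False)
    return U[:, s > tol * s[0]]

def ra_omp(Phi, Y, k, tol=1e-10):
    """Rank-aware OMP sketch; returns selected atoms in selection order."""
    support = []
    R = Y.copy()
    for _ in range(k):
        if np.linalg.norm(R) < tol * np.linalg.norm(Y):
            break  # residual is (numerically) zero
        U = orth(R)                                  # U = orth(R^(n-1))
        scores = np.linalg.norm(Phi.T @ U, axis=1)   # ||phi_i^T U||_2
        if support:
            scores[support] = -np.inf                # never reselect an atom
        support.append(int(np.argmax(scores)))
        Phi_S = Phi[:, support]
        coef = np.linalg.lstsq(Phi_S, Y, rcond=None)[0]
        R = Y - Phi_S @ coef                         # project out selected atoms
    return support
```

When rank(Y) = k, U = orth(Y) spans span(Φ_Λ), so every correct unit-norm atom scores 1 and the first pick is correct (property 2). Later picks work with a lower-rank residual, which is where rank degeneration can enter.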

![Rank Aware OMP

Alternative Solutions

Recently two independent solutions have been proposed that are variations on

a theme:

1. Compressive MUSIC [Kim et al 2010]

i. perform SOMP for k-r-1 steps but SOMP is rank blind

ii. apply modified MUSIC

2. Iterative MUSIC [Lee & Bresler 2010]

1. orthogonalize: U = orth(Y ) orthogonalization is not

2. apply SOMP to {Φ, U } for k-r-1 steps guaranteed beyond step 1

3. apply modified MUSIC

This motivates us to consider a minor modification of (2):

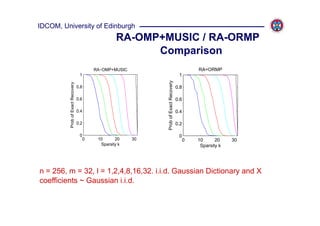

3 RA-OMP+MUSIC

i. perform RA-OMP for k-r-1 steps

ii. apply modified MUSIC](https://image.slidesharecdn.com/rankawarealgs-small11-110317055820-phpapp02/85/Rank-awarealgs-small11-21-320.jpg)
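The slides do not spell out "modified MUSIC". One common reading, assumed here, is an augmented-MUSIC completion step: given correct atoms γ from a greedy stage, form U = orth([Φ_γ, Y]) and add the atoms whose projections onto span(U) are largest. When |γ| = k − r, the exhaustive-search slide gives span([Φ_γ, Y]) = span(Φ_Λ), so the missing unit-norm atoms project with norm 1. The function `augmented_music` is a hypothetical name; NumPy and unit-norm columns are assumed.

```python
import numpy as np

def orth(M, tol=1e-10):
    """Orthonormal basis for the column span of M (via SVD)."""
    U, s, _ = np.linalg.svd(M, full_matrices=False)
    return U[:, s > tol * s[0]]

def augmented_music(Phi, Y, partial, k):
    """Complete a partial support to size k with a MUSIC-style selection.

    Assumes unit-norm columns and that `partial` holds correct atoms
    found by a preceding greedy stage (e.g. SOMP or RA-OMP).
    """
    partial = [int(i) for i in partial]
    U = orth(np.hstack([Phi[:, partial], Y]))
    scores = np.linalg.norm(U.T @ Phi, axis=0)   # ||P_U phi_i||_2 per atom
    if partial:
        scores[partial] = -np.inf                # already selected
    extra = np.argsort(scores)[::-1][: k - len(partial)]
    return sorted(partial + [int(i) for i in extra])
```

In the check below, the greedy stage is replaced by handing in k − r correct atoms directly, which is the case covered by the span identity.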

![Recovery guarantee

Two nice rank aware solutions:

a) Apply RA-OMP for k−r−1 steps, then complete with modified MUSIC.

b) Apply RA-ORMP for k steps (if the first k−r steps make correct selections, we have guaranteed recovery).

We now have the following recovery guarantee [Blanchard & D.]:

Theorem 4 (MMV CS recovery). Assume $X_\Lambda \in \mathbb{R}^{n \times r}$ is in general position for some support set Λ, |Λ| = k > r, and let Φ be a random matrix independent of X, with $\Phi_{i,j} \sim \mathcal{N}(0, m^{-1})$. Then (a) and (b) can recover X from Y with high probability if:

$m \ge \mathrm{const} \cdot k \left( \dfrac{\log N}{r} + 1 \right)$

That is, as r increases, the effect of the log N term diminishes.](https://image.slidesharecdn.com/rankawarealgs-small11-110317055820-phpapp02/85/Rank-awarealgs-small11-22-320.jpg)
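To see how the bound in Theorem 4 behaves, the sketch below evaluates const · k (log N / r + 1) for growing r. The theorem leaves the constant unspecified, so `const=1.0` is purely illustrative, and the natural logarithm is assumed (a change of base is absorbed into the constant). The function name is hypothetical.

```python
import math

def measurements_needed(k, N, r, const=1.0):
    """Evaluate const * k * (log N / r + 1); `const` is a placeholder."""
    return const * k * (math.log(N) / r + 1)

for r in (1, 2, 5, 10, 20):
    print(r, round(measurements_needed(k=20, N=10_000, r=r), 1))
```

As r grows the log N / r term shrinks, and the estimate approaches const · k.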