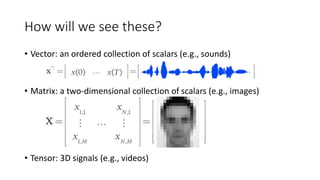

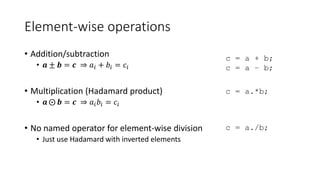

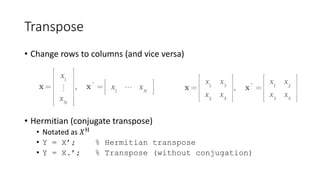

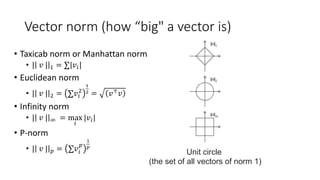

- The document provides an introduction to linear algebra and MATLAB. It discusses linear algebra concepts such as vectors, matrices, and tensors, and the operations defined on them.

- It then covers key MATLAB topics: basic data types, vector and matrix operations, control flow, plotting, and writing efficient code.

- The document emphasizes that linear algebra and MATLAB are closely related and are commonly used together in applications such as image and signal processing.

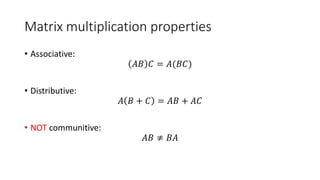

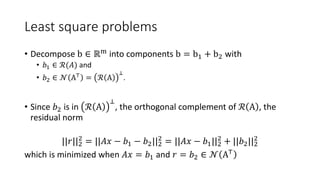

![Which division?

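Left division vs. right division

Array (element-wise) division

Left: C = ldivide(A, B); or equivalently C = A.\B;

Right: C = rdivide(A, B); or equivalently C = A./B;

Matrix division

Left: C = mldivide(A, B); or equivalently C = A\B;

Right: C = mrdivide(A, B); or equivalently C = A/B;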

EX: Array division

A = [1, 2; 3, 4];

B = [1, 1; 1, 1];

C1 = A.\B;

C2 = A./B;

C1 =

1.0000 0.5000

0.3333 0.2500

C2 =

1 2

3 4

EX: Matrix division

X = A\B;

-> the (least-squares) solution to AX = B

X = A/B;

-> the (least-squares) solution to XB = A

B/A = (A'\B')'](https://image.slidesharecdn.com/cs445linearalgebraandmatlabtutorial-150831010550-lva1-app6891/85/Linear-Algebra-and-Matlab-tutorial-11-320.jpg)
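
The division rules above can be checked numerically. The following is a minimal MATLAB sketch that should also run in GNU Octave. It reuses the slide's A and B. The tall matrix M and the vector y in the last part are hypothetical values, added only to illustrate the least-squares case.

```matlab
% Reuse the slide's example matrices.
A = [1, 2; 3, 4];
B = [1, 1; 1, 1];

% Array (element-wise) division: A.\B is the same as B./A.
C1 = A .\ B;            % [1 0.5; 0.3333 0.25]
C2 = A ./ B;            % equals A, since every entry of B is 1

% Matrix left division: X solves A*X = B (A is square and nonsingular here).
X = A \ B;

% Matrix right division: Y solves Y*A = B, and B/A equals (A'\B')'.
Y = B / A;
Y_alt = (A' \ B')';

% Overdetermined case (hypothetical data): for a tall, full-rank M,
% M\y returns the least-squares solution. It agrees with the
% normal-equations solution up to rounding.
M = [1, 0; 1, 1; 1, 2];
y = [1; 2; 2];
x_ls = M \ y;
x_ne = (M' * M) \ (M' * y);

disp(C1);
disp(norm(A * X - B));      % close to 0
disp(norm(Y - Y_alt));      % close to 0
disp([x_ls, x_ne]);         % both columns are approximately [7/6; 1/2]
```

In practice `M\y` is preferred over forming `M'*M`, because the normal equations square the condition number.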

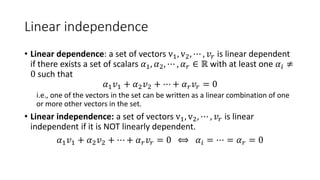

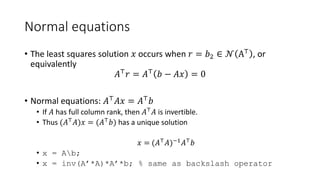

![Reshaping operators

• The vec operator

• Unrolls elements column-wise

• Useful for flattening a matrix or tensor into a single column vector

• B = A(:);

• The reshape operator

• B = reshape(A, sz);

• prod(sz) must equal numel(A)

EX: Reshape

A = 1:12;

B = reshape(A,[3,4]);

B =

1 4 7 10

2 5 8 11

3 6 9 12](https://image.slidesharecdn.com/cs445linearalgebraandmatlabtutorial-150831010550-lva1-app6891/85/Linear-Algebra-and-Matlab-tutorial-14-320.jpg)
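
Here is a short MATLAB sketch of both reshaping operators, assuming standard MATLAB/Octave column-major behavior. The 2-by-2 matrix and the deliberately mismatched size [5, 3] are illustrative choices, not taken from the slide.

```matlab
% vec: A(:) stacks the columns of A into one column vector.
A = [1, 2; 3, 4];
v = A(:);                    % [1; 3; 2; 4] (column-wise, not row-wise)

% reshape fills the new shape in the same column-major order.
a = 1:12;
B = reshape(a, [3, 4]);      % columns are 1:3, 4:6, 7:9, 10:12
B2 = reshape(a, 3, []);      % [] lets MATLAB infer the remaining dimension

% Reshaping the vec'd vector with the original size recovers A exactly.
A_back = reshape(v, size(A));

% prod(sz) must equal numel(a); a mismatched size raises an error.
try
    reshape(a, [5, 3]);      % 15 elements requested, but a has 12
    mismatch_errored = false;
catch
    mismatch_errored = true;
end

disp(B);
disp(mismatch_errored);      % 1 (true)
```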

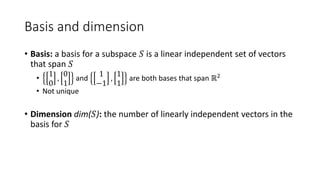

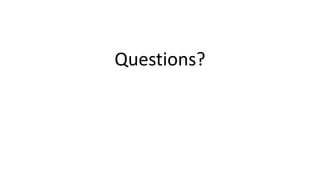

![Trace and diag

• Matrix trace

• Sum of diagonal elements

• The diag operator

• Extracts the diagonal of a matrix as a column vector, or builds a diagonal matrix from a vector

EX: trace

A = [1, 2; 3, 4];

t = trace(A);

Q: What’s t?

EX: diag

x = diag(A);

D = diag(x);

Q: What are x and D?](https://image.slidesharecdn.com/cs445linearalgebraandmatlabtutorial-150831010550-lva1-app6891/85/Linear-Algebra-and-Matlab-tutorial-15-320.jpg)
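
To check your answers to the two questions, this minimal MATLAB sketch uses the slide's A and evaluates trace and both uses of diag. The comparison with sum(diag(A)) just spells out the definition of the trace.

```matlab
A = [1, 2; 3, 4];

% trace: sum of the diagonal entries, here 1 + 4.
t = trace(A);
t_check = sum(diag(A));      % the same quantity, written out explicitly

% diag on a matrix returns its diagonal as a column vector ...
x = diag(A);                 % [1; 4]

% ... and diag on a vector builds a square matrix with that diagonal,
% so diag(diag(A)) keeps A's diagonal and zeroes everything else.
D = diag(x);                 % [1 0; 0 4]

disp(t);
disp(x);
disp(D);
```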

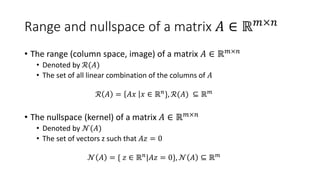

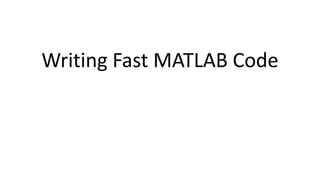

![Iterative Methods for Larger Problems

• Iterative solvers in MATLAB:

• bicg, bicgstab, cgs, gmres, lsqr, minres, pcg, symmlq, qmr

• [x,flag,relres,iter,resvec] = method(A,b,tol,maxit,M1,M2,x0)

• gmres also takes a restart argument: gmres(A,b,restart,tol,maxit,M1,M2,x0)

• source: Writing Fast Matlab Code by Pascal Getreuer](https://image.slidesharecdn.com/cs445linearalgebraandmatlabtutorial-150831010550-lva1-app6891/85/Linear-Algebra-and-Matlab-tutorial-46-320.jpg)
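
As an illustration of this calling convention, here is a minimal sketch using pcg, which requires a symmetric positive definite matrix. Several choices are assumptions made for the example: the test problem (the sparse 1-D Laplacian tridiag(-1, 2, -1) with a known all-ones solution), the tolerance, and the iteration cap. No preconditioner is passed.

```matlab
% Hypothetical SPD test problem: sparse tridiagonal 1-D Laplacian.
n = 100;
e = ones(n, 1);
A = spdiags([-e, 2*e, -e], -1:1, n, n);

x_true = ones(n, 1);
b = A * x_true;              % right-hand side with a known solution

tol = 1e-10;                 % relative residual tolerance
maxit = 1000;                % iteration cap
[x, flag, relres, iter] = pcg(A, b, tol, maxit);

% flag == 0 means pcg reached tol within maxit iterations.
fprintf('flag = %d, relres = %g, iter = %d\n', flag, relres, iter);

% Direct sparse solve for comparison.
x_direct = A \ b;
fprintf('relative difference from direct solve: %g\n', ...
        norm(x - x_direct) / norm(x_direct));
```

For a matrix this small the direct solve is the better choice. Iterative methods pay off on large sparse systems, where factorization fill-in becomes too expensive.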

![Solving Ax = b when A is a Special Matrix

• Circulant matrices

• Matrices corresponding to cyclic convolution (Ax is the circular convolution of h, the first column of A, with x) are diagonalized in the Fourier domain, so Ax = b reduces to pointwise division

>> x = ifft( fft(b)./fft(h) );

• Triangular and banded

• Efficiently solved by sparse LU factorization

>> [L,U] = lu(sparse(A));

>> x = U \ (L \ b);

• Poisson problems

• See http://www.cs.berkeley.edu/~demmel/cs267/lecture25/lecture25.html](https://image.slidesharecdn.com/cs445linearalgebraandmatlabtutorial-150831010550-lva1-app6891/85/Linear-Algebra-and-Matlab-tutorial-47-320.jpg)
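
The first two techniques on this slide can be sketched in MATLAB as follows. The kernel h, the tridiagonal matrix T, and both known solutions are hypothetical. h is chosen so that fft(h) has no zeros; a zero there would make the circulant system singular. The explicit circulant matrix C is built only to generate b and check the FFT solve, and would not be formed in practice. Applying `real` to the FFT result discards round-off imaginary parts, which assumes h and b are real.

```matlab
% --- Circulant system: C*x is the cyclic convolution of h and x ---
n = 8;
h = [4; 1; 0; 0; 0; 0; 0; 1];       % fft(h) = 4 + 2*cos(2*pi*k/n) > 0
C = zeros(n);
for k = 1:n
    C(:, k) = circshift(h, k - 1);  % column k is h shifted down by k-1
end
x_true = (1:n)';
b = C * x_true;

% The DFT diagonalizes C, so solving reduces to pointwise division.
x_fft = real(ifft(fft(b) ./ fft(h)));

% --- Banded system solved with a sparse LU factorization ---
m = 50;
e = ones(m, 1);
T = spdiags([e, 4*e, e], -1:1, m, m);   % sparse tridiagonal matrix
y_true = (1:m)';
c = T * y_true;

% With two outputs, L may be a row-permuted lower triangular factor
% such that T = L*U; two triangular solves then give y.
[L, U] = lu(T);
y = U \ (L \ c);

disp(norm(x_fft - x_true));   % close to 0
disp(norm(y - y_true));       % close to 0
```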