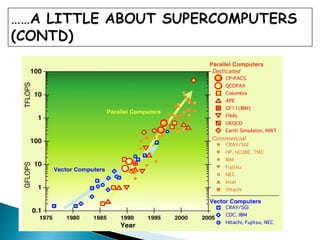

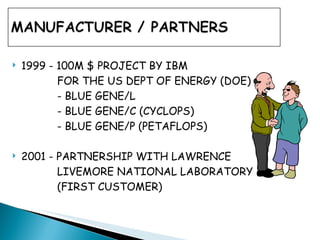

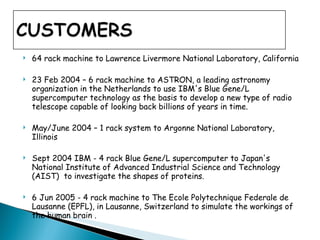

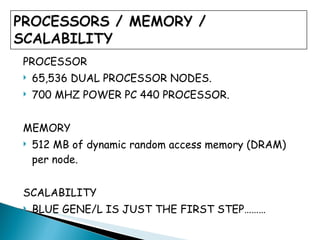

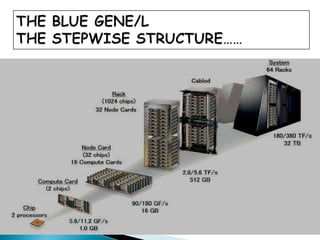

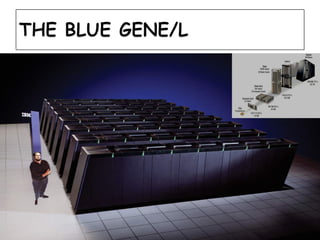

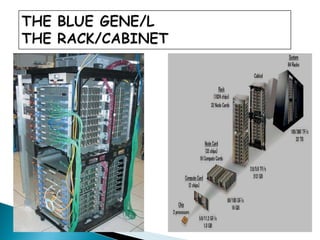

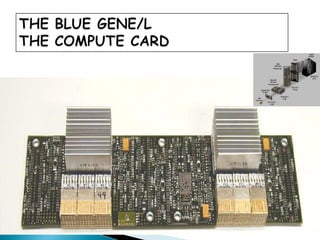

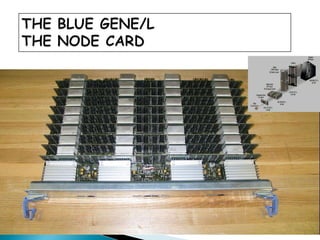

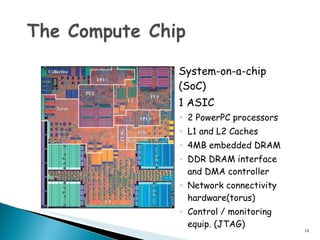

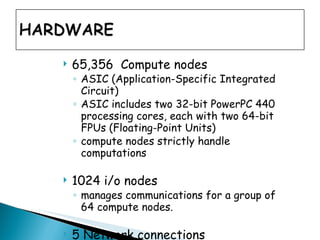

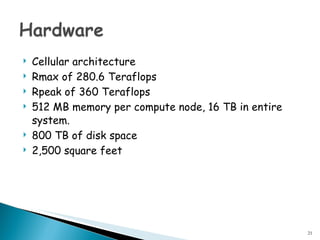

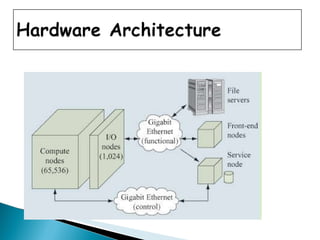

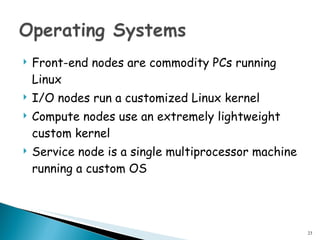

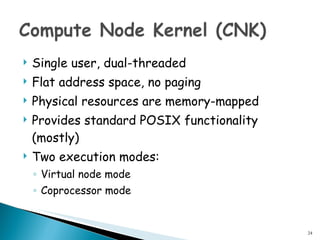

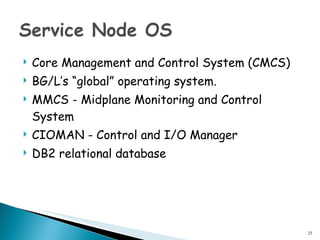

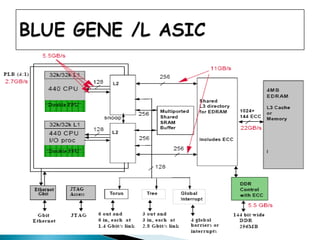

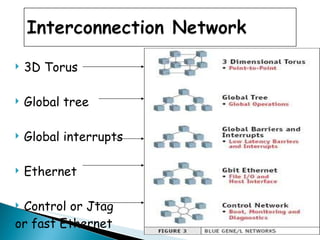

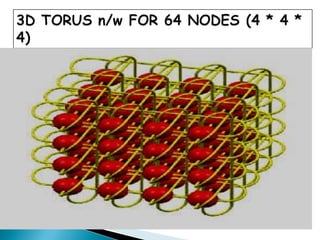

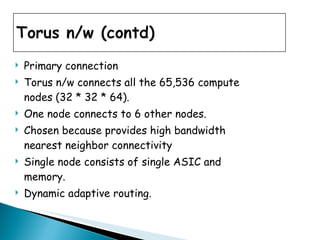

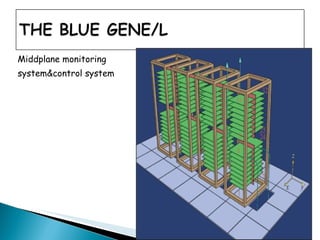

This document summarizes IBM's Blue Gene/L supercomputer, which IBM developed in partnership with Lawrence Livermore National Laboratory with scalability and efficiency as its design goals. Blue Gene/L used a cellular architecture with 65,536 compute nodes and reached a peak performance of up to 360 teraflops. Its users included research laboratories, which ran applications such as protein folding analysis.