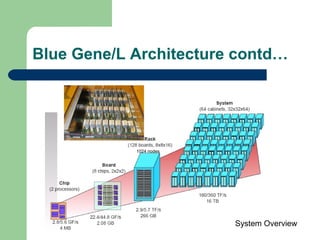

Blue Gene is a family of massively parallel supercomputers developed by IBM. Its systems combine tens of thousands of low-power embedded PowerPC processors with a large aggregate memory to tackle computationally intensive problems such as protein folding. The first system, Blue Gene/L, achieved over 280 teraflops and set records for power efficiency. Its successors, Blue Gene/P and Blue Gene/Q, targeted petaflop-scale performance using similarly highly parallel, low-power architectures and system software.