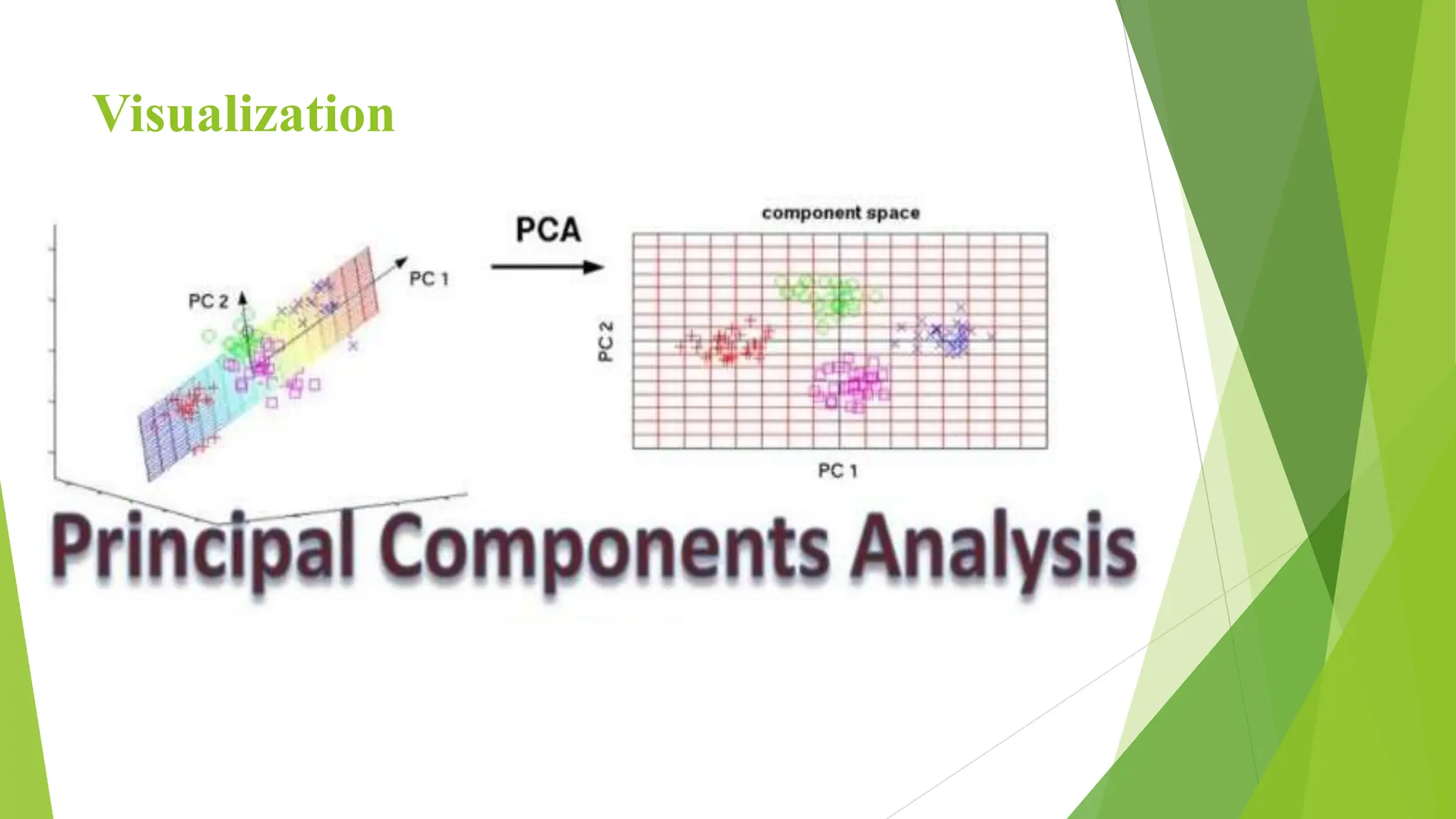

The document discusses principal component analysis (PCA) and the challenges posed by high-dimensional data, known as the curse of dimensionality: as the number of features grows, data points become increasingly sparse and analysis becomes harder. It presents PCA as a dimensionality-reduction technique that transforms the original, possibly correlated, variables into a new set of uncorrelated variables called principal components, ordered by how much of the data's variance each one captures. Keeping only the first few components retains most of the information while discarding the low-variance directions. The document then describes the steps involved in PCA, its advantages, and its potential drawbacks, including the need to standardize the data beforehand and the risk of losing information when components are dropped.
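The steps summarized above can be illustrated with a minimal NumPy sketch of the common textbook recipe: standardize the features, compute the covariance matrix, eigendecompose it, and project onto the top eigenvectors. This is an assumed, illustrative implementation rather than the exact procedure the document walks through; the function name `pca` and its return values are hypothetical choices.

```python
import numpy as np

def pca(X, n_components):
    """Hypothetical minimal PCA: standardize, eigendecompose covariance, project."""
    X = np.asarray(X, dtype=float)

    # Step 1: standardize each feature to zero mean and unit variance so that
    # features on larger scales do not dominate the components.
    mean = X.mean(axis=0)
    std = X.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # leave constant features unscaled to avoid division by zero
    Z = (X - mean) / std

    # Step 2: covariance matrix of the standardized data (features x features).
    cov = np.cov(Z, rowvar=False)

    # Step 3: eigendecomposition. eigh is for symmetric matrices and returns
    # eigenvalues in ascending order, so re-sort them in descending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # Step 4: keep the top components and project the data onto them.
    # Note: the sign of each eigenvector (and hence each score column) is arbitrary.
    components = eigvecs[:, :n_components]
    scores = Z @ components
    explained_ratio = eigvals[:n_components] / eigvals.sum()
    return scores, components, explained_ratio

# Usage: two strongly correlated features plus one independent feature.
rng = np.random.default_rng(0)
x = rng.normal(size=200)
X = np.column_stack([x, 2 * x + rng.normal(scale=0.1, size=200), rng.normal(size=200)])
scores, components, ratio = pca(X, n_components=2)
print(scores.shape)  # (200, 2)
print(ratio)         # fraction of total variance captured by each kept component
```

Because the first two features are nearly redundant, the first component absorbs most of their shared variance, and dropping the third component loses very little information. Standardizing first matters here: without it, the feature scaled by 2 would dominate the covariance matrix simply because of its units.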