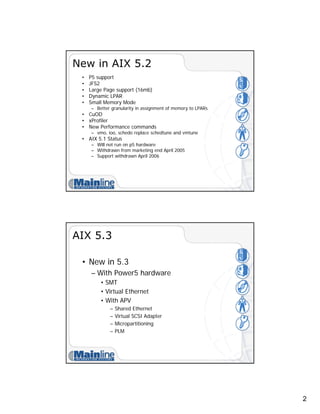

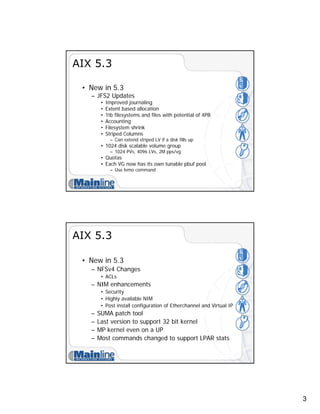

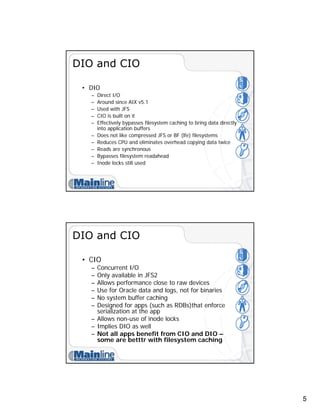

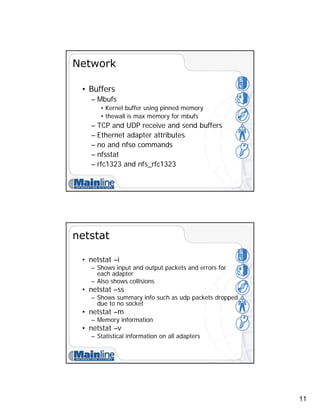

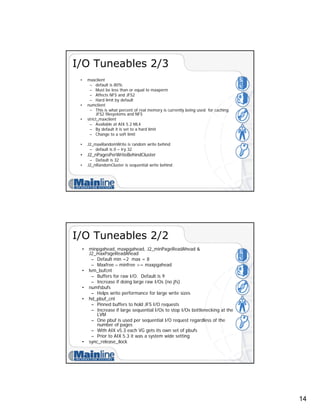

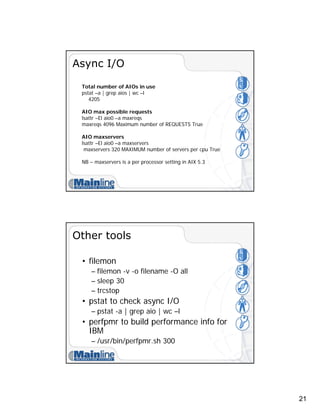

This document covers AIX performance tuning and contrasts AIX 5.2 with AIX 5.3. Topics include 32-bit versus 64-bit architectures, JFS versus JFS2 filesystems, and Direct I/O (DIO) and Concurrent I/O (CIO). It describes system performance monitoring with tools such as vmstat, iostat, and nmon, and gives recommendations for tuning CPU, memory, network, and I/O settings. It also discusses AIX enhancements, performance tips, and command changes between the two versions.