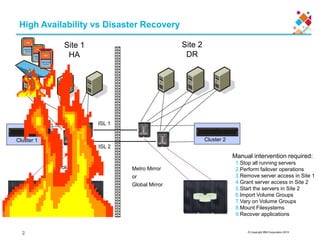

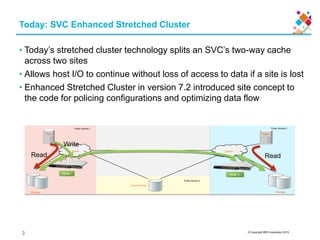

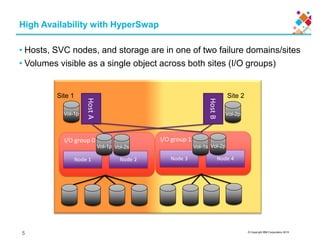

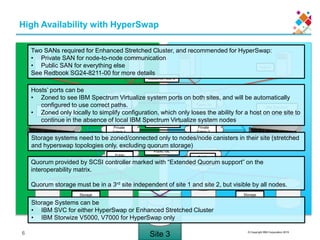

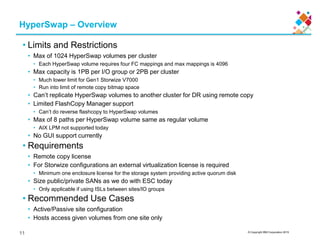

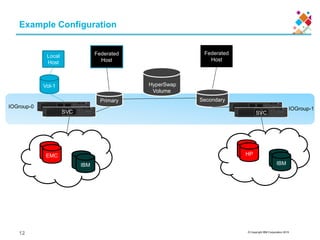

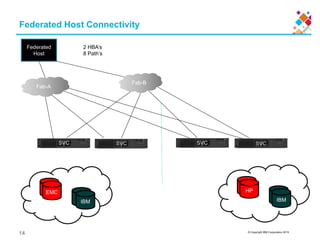

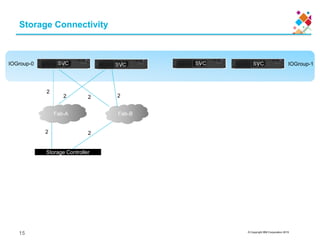

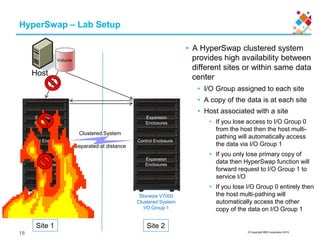

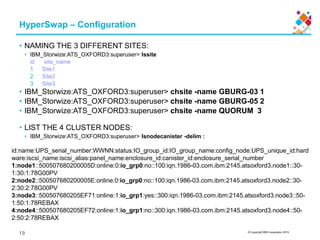

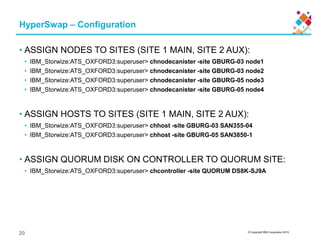

The document provides an overview of IBM Spectrum Virtualize HyperSwap. HyperSwap lets hosts keep accessing volumes without interruption if one of the two sites fails. It uses synchronous remote copy between two I/O groups, one per site, so that each volume is accessible through both I/O groups. The document outlines the steps to set up a HyperSwap configuration: naming the sites, assigning nodes and hosts to sites, and defining the system topology.
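The configuration steps listed in the overview (name the sites, assign nodes and hosts to sites, then define the topology) can be sketched as a small Python helper that emits the corresponding CLI commands in order. This is a minimal sketch, not IBM's procedure: it assumes the `chsite -name`, `chnode -site`, `chhost -site` and `chsystem -topology hyperswap` commands of Spectrum Virtualize 7.5 and later (verify the syntax against the CLI reference for your code level), and the `HyperSwapTopology` class, the `topology_commands` function, and all node, host and site names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class HyperSwapTopology:
    """Hypothetical description of a two-site HyperSwap layout."""

    site_names: dict[int, str]  # site id (1 or 2) -> site name
    node_sites: dict[str, str] = field(default_factory=dict)  # node name -> site name
    host_sites: dict[str, str] = field(default_factory=dict)  # host name -> site name


def _check_site(obj: str, site: str, known: set[str]) -> None:
    # Refuse to emit a command that points at a site that was never named.
    if site not in known:
        raise ValueError(f"{obj} refers to unnamed site {site!r}")


def topology_commands(topo: HyperSwapTopology) -> list[str]:
    """Return CLI command strings in the order the overview lists the steps.

    ASSUMPTION: chsite, chnode -site, chhost -site and chsystem -topology
    behave as in Spectrum Virtualize 7.5+; this only builds strings.
    """
    cmds: list[str] = []
    # Step 1: name the sites.
    for site_id, name in sorted(topo.site_names.items()):
        cmds.append(f"chsite -name {name} {site_id}")
    known = set(topo.site_names.values())
    # Step 2: assign each node to a named site.
    for node, site in topo.node_sites.items():
        _check_site(f"node {node}", site, known)
        cmds.append(f"chnode -site {site} {node}")
    # Step 3: assign each host to a named site.
    for host, site in topo.host_sites.items():
        _check_site(f"host {host}", site, known)
        cmds.append(f"chhost -site {site} {host}")
    # Step 4: define the topology after the site assignments, as the overview orders it.
    cmds.append("chsystem -topology hyperswap")
    return cmds


if __name__ == "__main__":
    # Hypothetical names; GBURG03/GBURG05 echo the pool names on the configuration slide.
    topo = HyperSwapTopology(
        site_names={1: "GBURG03", 2: "GBURG05"},
        node_sites={"node1": "GBURG03", "node2": "GBURG03", "node3": "GBURG05", "node4": "GBURG05"},
        host_sites={"esx01": "GBURG03"},
    )
    for cmd in topology_commands(topo):
        print(cmd)
```

Generating the commands as text, rather than running them, keeps the sketch side-effect free and lets the site checks catch a typo in a site name before anything reaches the system.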

![© Copyright IBM Corporation 2015

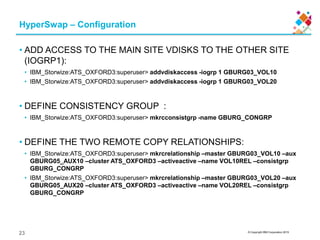

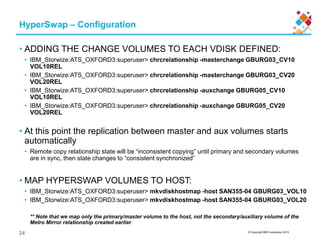

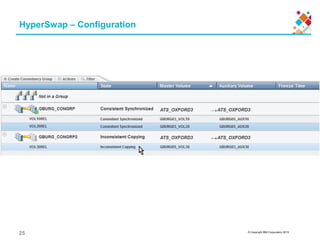

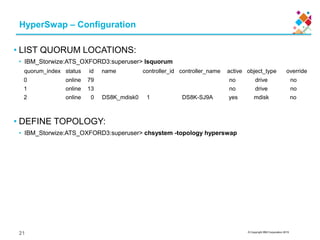

HyperSwap – Configuration

• MAKE VDISKS (SITE 1 MAIN, SITE 2 AUX):

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG03_VOL10 -size 10 -unit gb

-iogrp 0 -mdiskgrp GBURG-03_POOL

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG03_VOL20 -size 10 -unit gb

-iogrp 0 -mdiskgrp GBURG-03_POOL

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG05_AUX10 -size 10 -unit gb

-iogrp 1 -mdiskgrp GBURG-05_POOL

• Virtual Disk, id [2], successfully created

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG05_AUX20 -size 10 -unit gb

-iogrp 1 -mdiskgrp GBURG-05_POOL

• MAKE CHANGE VOLUME VDISKS (SITE 1 MAIN, SITE 2 AUX):

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG03_CV10 -size 10 -unit gb

-iogrp 0 -mdiskgrp GBURG-03_POOL -rsize 1% -autoexpand

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG03_CV20 -size 10 -unit gb

-iogrp 0 -mdiskgrp GBURG-03_POOL -rsize 1% -autoexpand

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG05_CV10 -size 10 -unit gb

-iogrp 1 -mdiskgrp GBURG-05_POOL -rsize 1% -autoexpand

• IBM_Storwize:ATS_OXFORD3:superuser> mkvdisk -name GBURG05_CV20 -size 10 -unit gb

-iogrp 1 -mdiskgrp GBURG-05_POOL -rsize 1% -autoexpand

22](https://image.slidesharecdn.com/acceleratewithibmstorage-ibmspectrumvirtualizehyperswapdeepdive-150731110227-lva1-app6892/85/Accelerate-with-ibm-storage-ibm-spectrum-virtualize-hyper-swap-deep-dive-23-320.jpg)
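The slide follows a regular pattern: for each volume index there is a main VDisk at site 1, an auxiliary VDisk at site 2, and a thin-provisioned change volume of the same size at each site. A hypothetical Python helper can generate those commands so the I/O group and pool of every copy are derived from one place. The names `SiteCopy`, `mkvdisk_command` and `hyperswap_vdisk_commands` are illustrative, not part of any IBM tool; the sketch only builds command strings using the `mkvdisk` flags shown on the slide, and the `_VOL`/`_AUX`/`_CV` suffixes are the slide's own naming convention, not a product requirement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteCopy:
    """Hypothetical per-site settings, taken from the slide's values."""

    prefix: str  # name prefix for this site's VDisks, e.g. "GBURG03"
    iogrp: int   # I/O group at this site
    pool: str    # storage pool (mdiskgrp) at this site


def mkvdisk_command(name: str, size_gb: int, iogrp: int, pool: str, thin: bool = False) -> str:
    """Build one mkvdisk command string with the flags used on the slide."""
    cmd = f"mkvdisk -name {name} -size {size_gb} -unit gb -iogrp {iogrp} -mdiskgrp {pool}"
    if thin:
        # Change volumes on the slide: 1% real capacity that expands automatically.
        cmd += " -rsize 1% -autoexpand"
    return cmd


def hyperswap_vdisk_commands(index: int, size_gb: int, main: SiteCopy, aux: SiteCopy) -> list[str]:
    """Four commands for one volume: main, aux, and a change volume per site.

    Each change volume gets the same virtual size as the volume it serves,
    matching the slide.
    """
    return [
        mkvdisk_command(f"{main.prefix}_VOL{index}", size_gb, main.iogrp, main.pool),
        mkvdisk_command(f"{aux.prefix}_AUX{index}", size_gb, aux.iogrp, aux.pool),
        mkvdisk_command(f"{main.prefix}_CV{index}", size_gb, main.iogrp, main.pool, thin=True),
        mkvdisk_command(f"{aux.prefix}_CV{index}", size_gb, aux.iogrp, aux.pool, thin=True),
    ]


if __name__ == "__main__":
    site1 = SiteCopy("GBURG03", 0, "GBURG-03_POOL")
    site2 = SiteCopy("GBURG05", 1, "GBURG-05_POOL")
    for index in (10, 20):
        for cmd in hyperswap_vdisk_commands(index, 10, site1, site2):
            print(cmd)
```

Running it for indexes 10 and 20 prints the same eight `mkvdisk` commands as the slide, grouped by volume index rather than by volume type, which makes it easier to check that each volume's copies land in opposite I/O groups.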