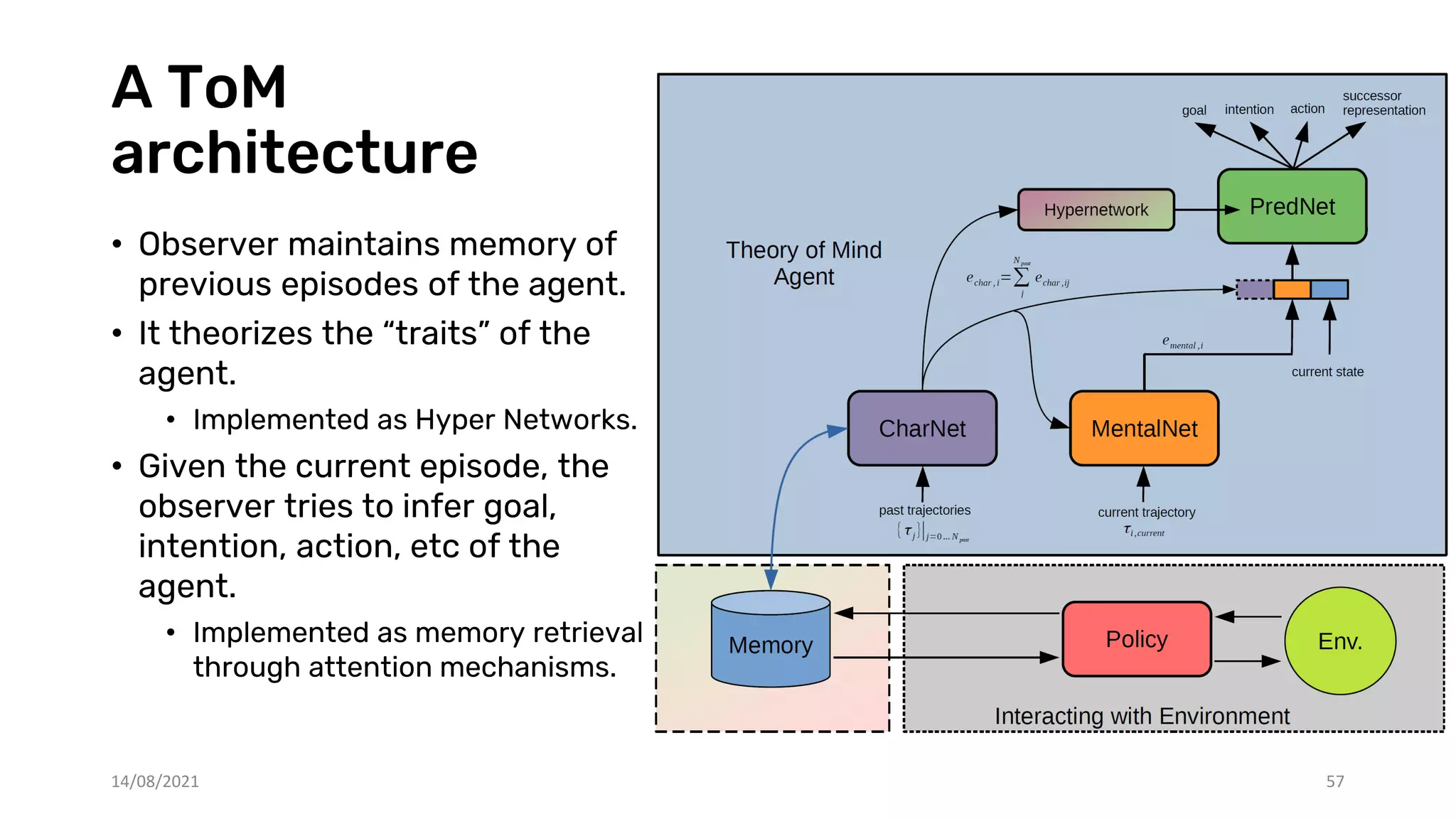

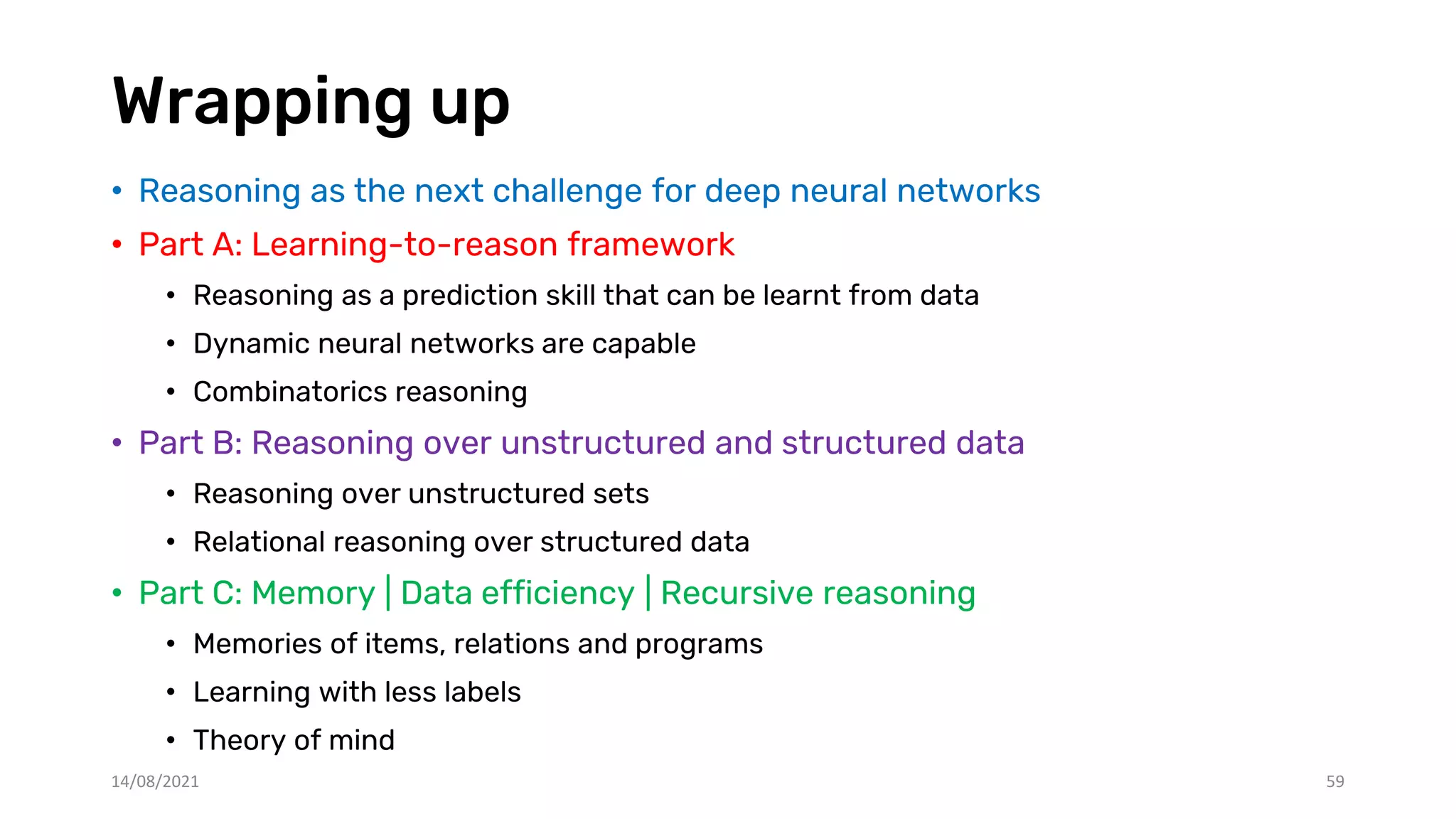

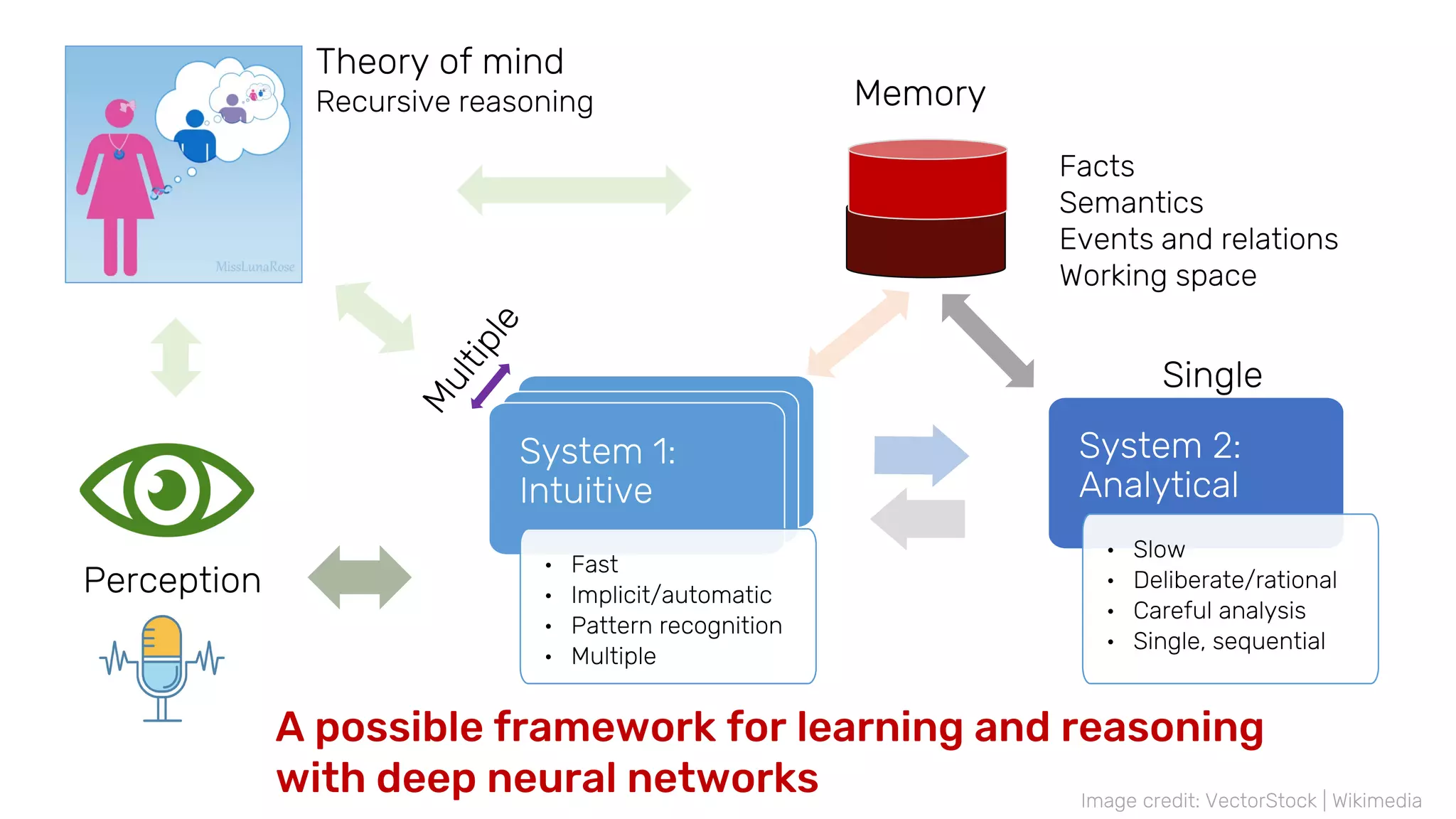

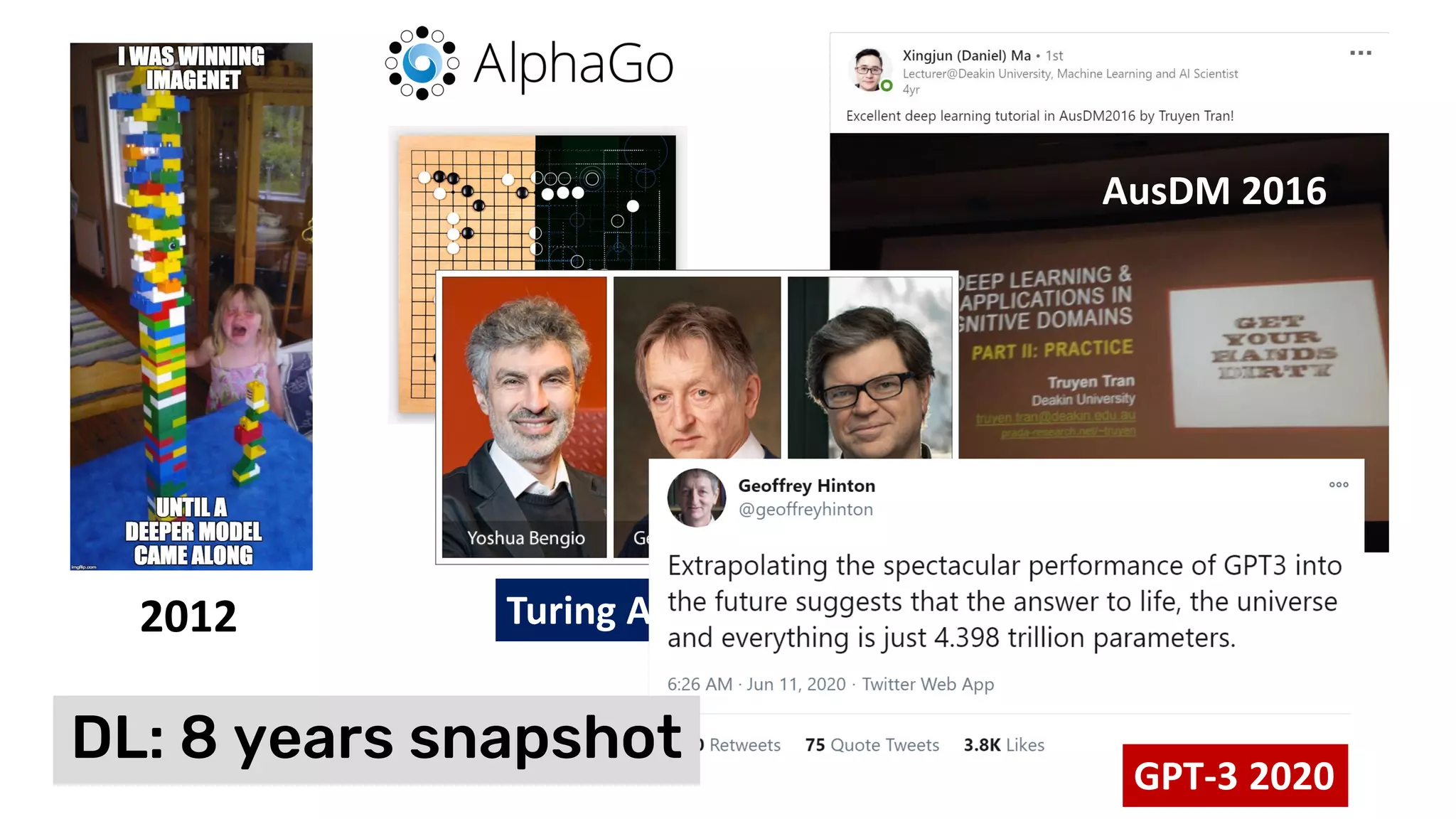

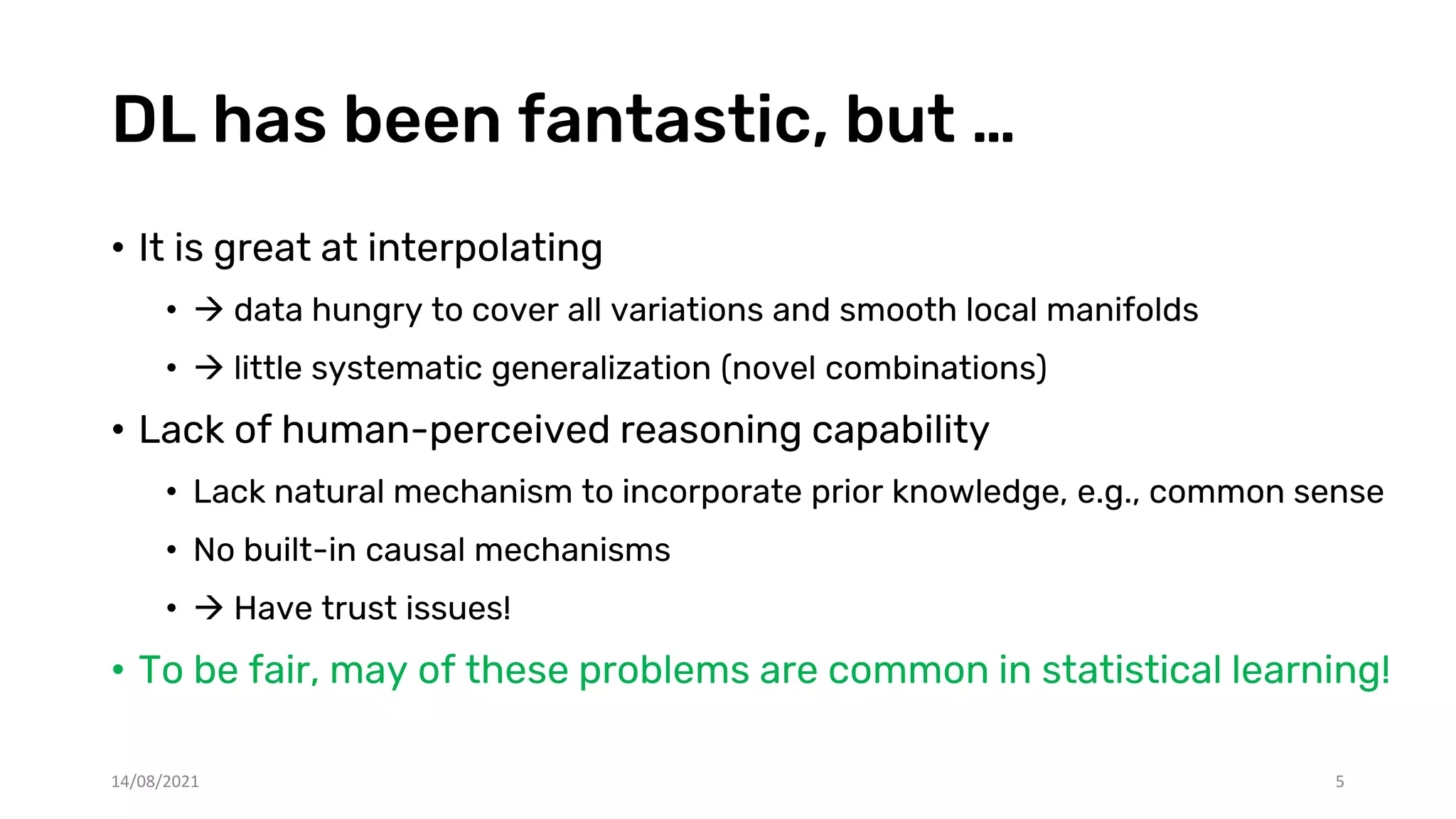

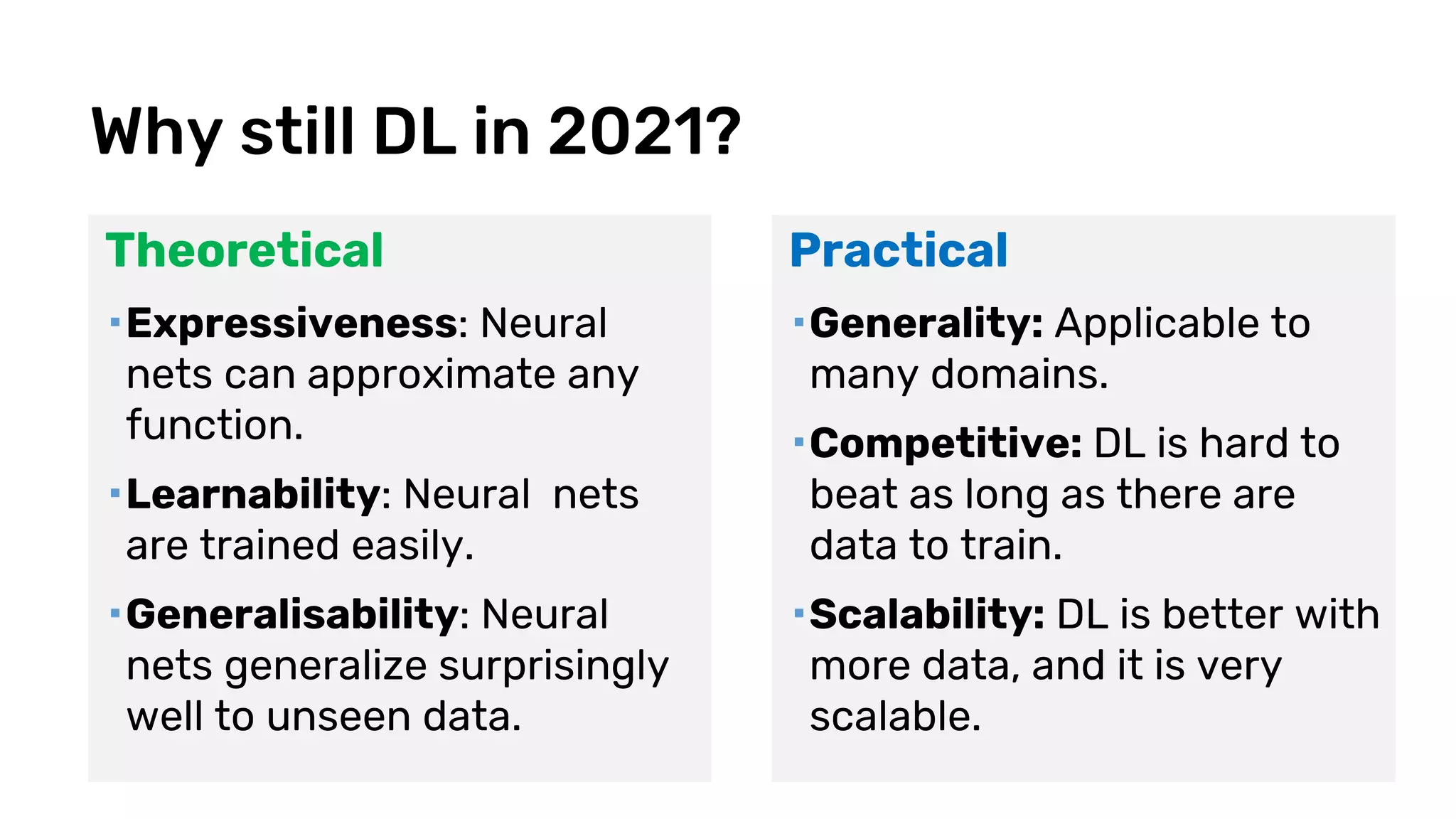

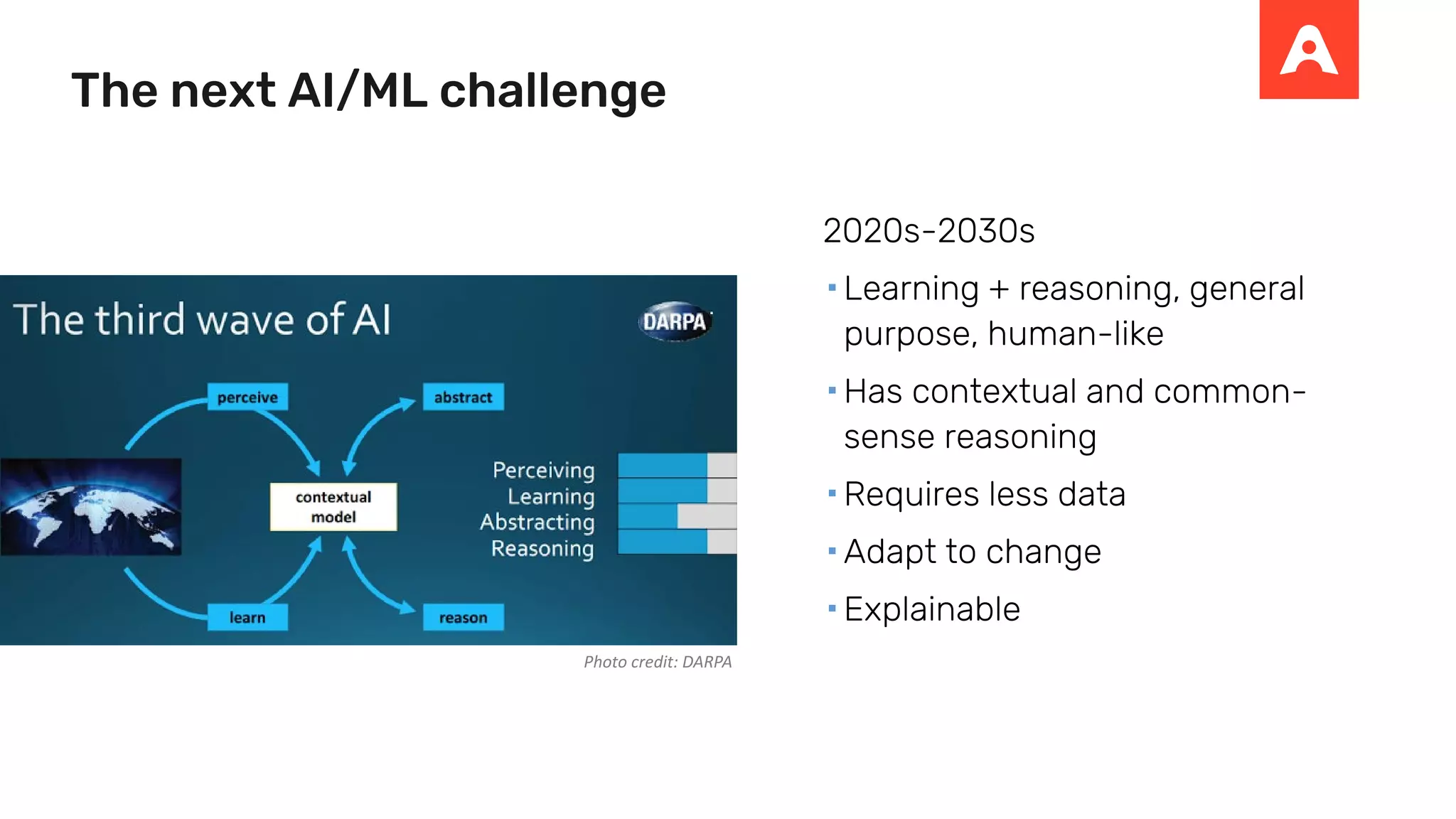

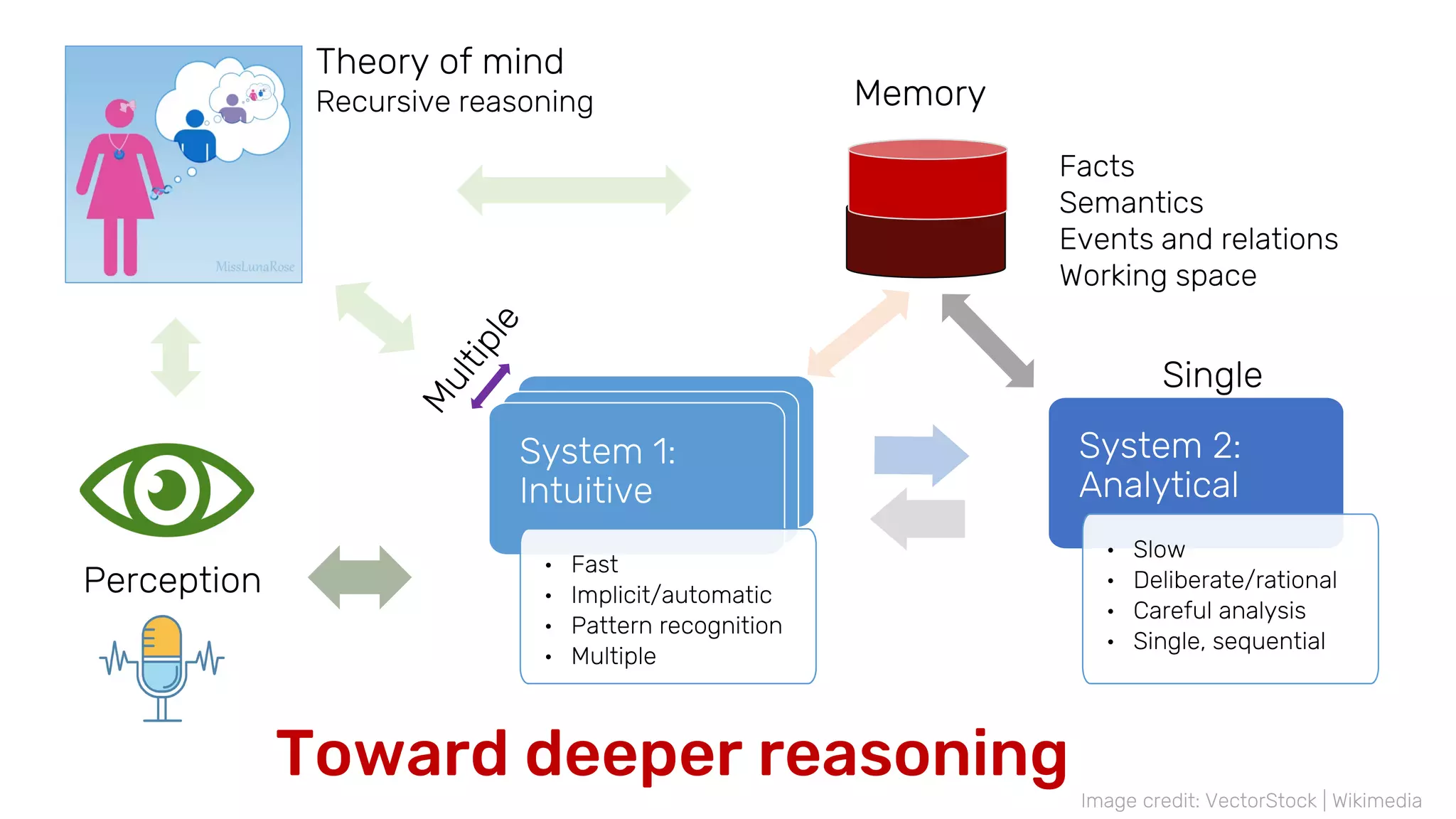

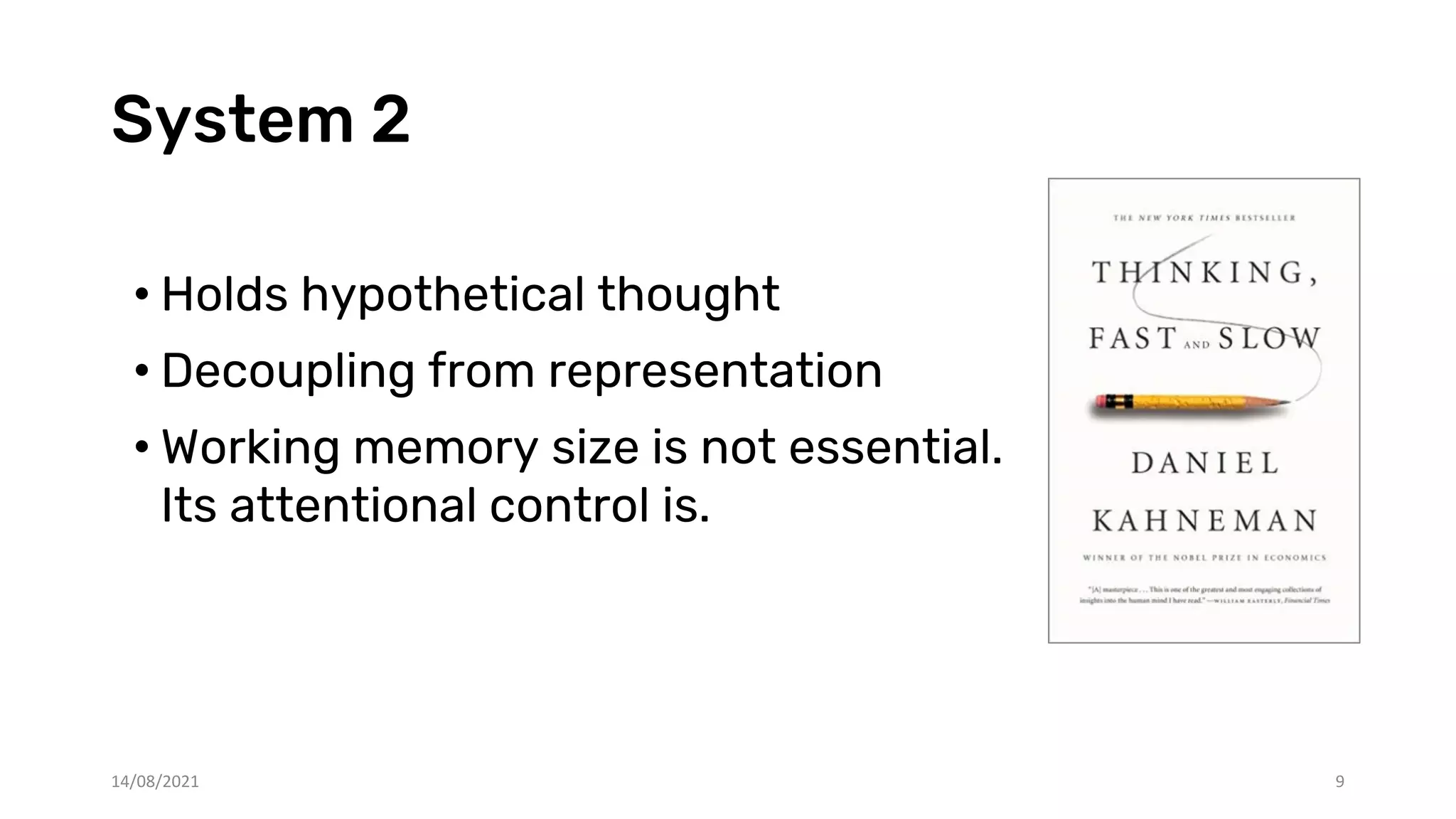

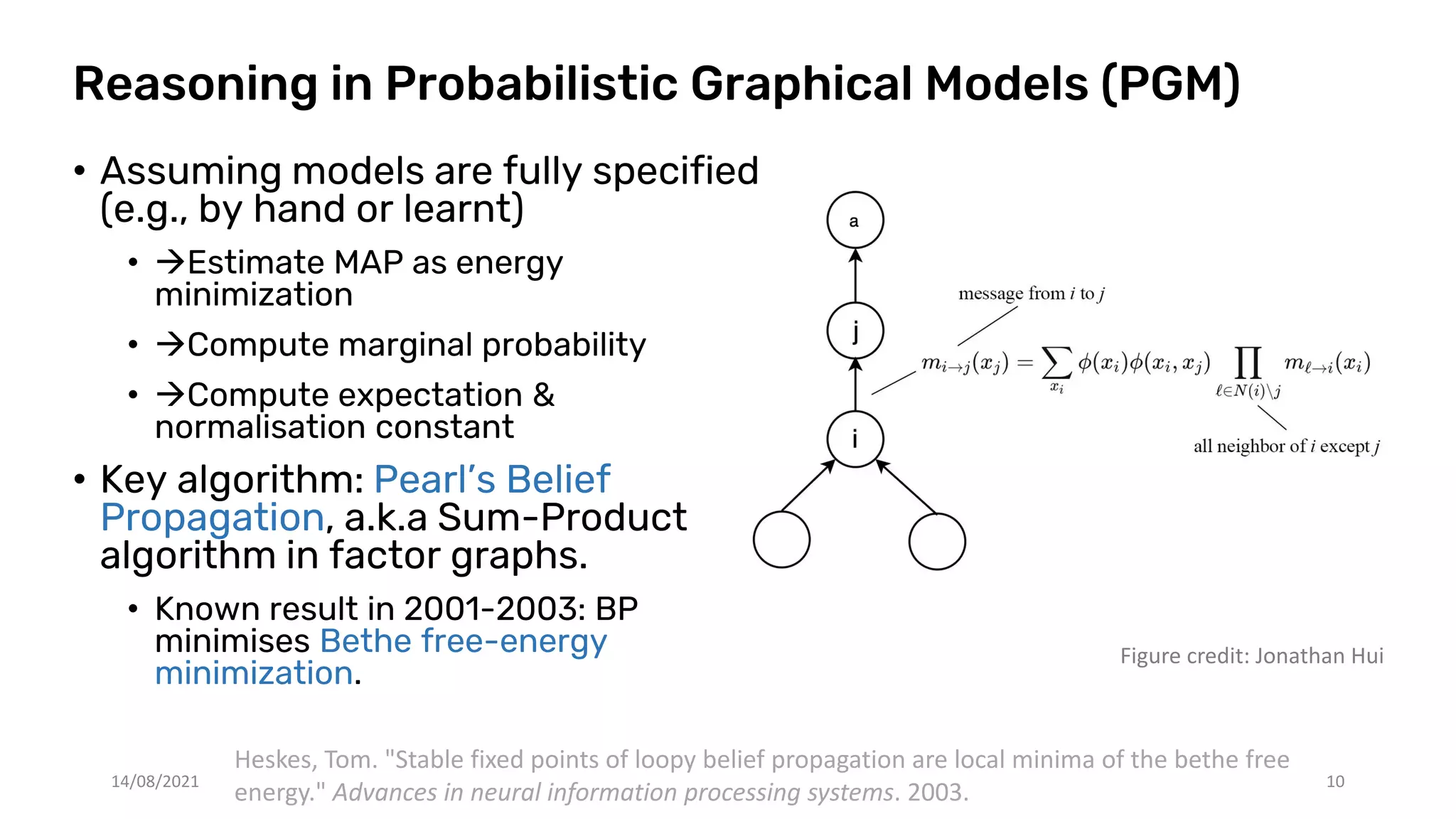

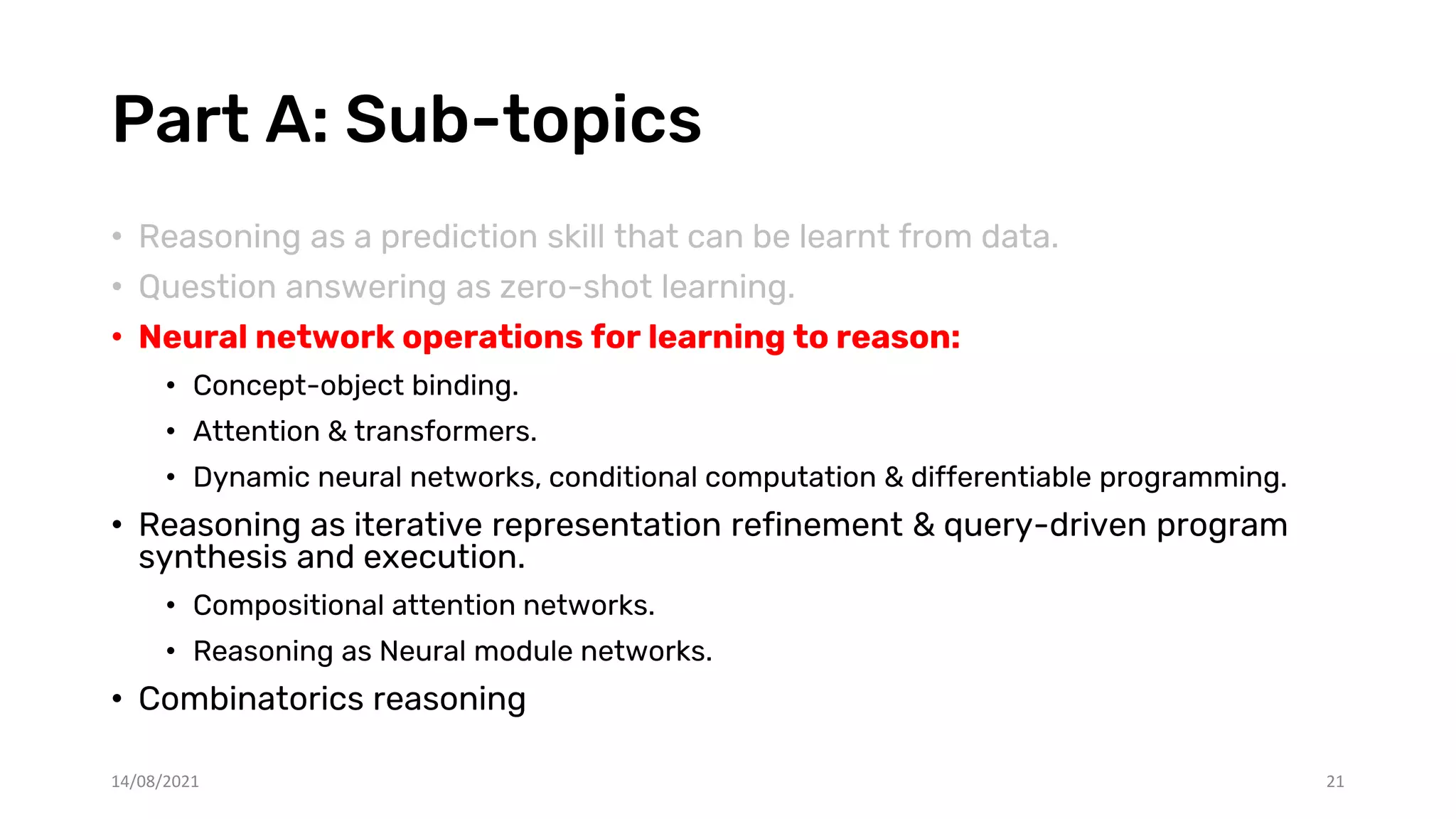

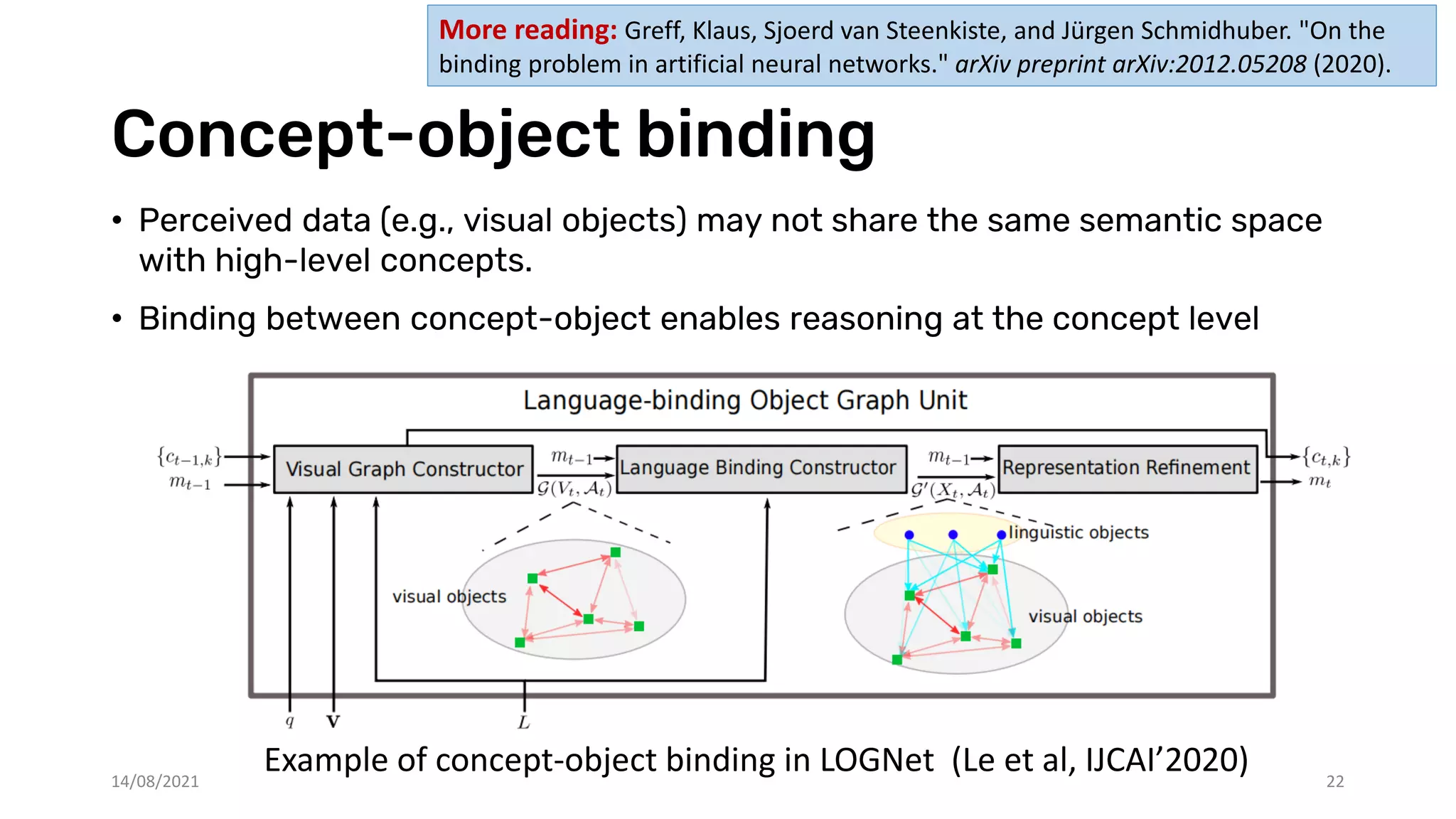

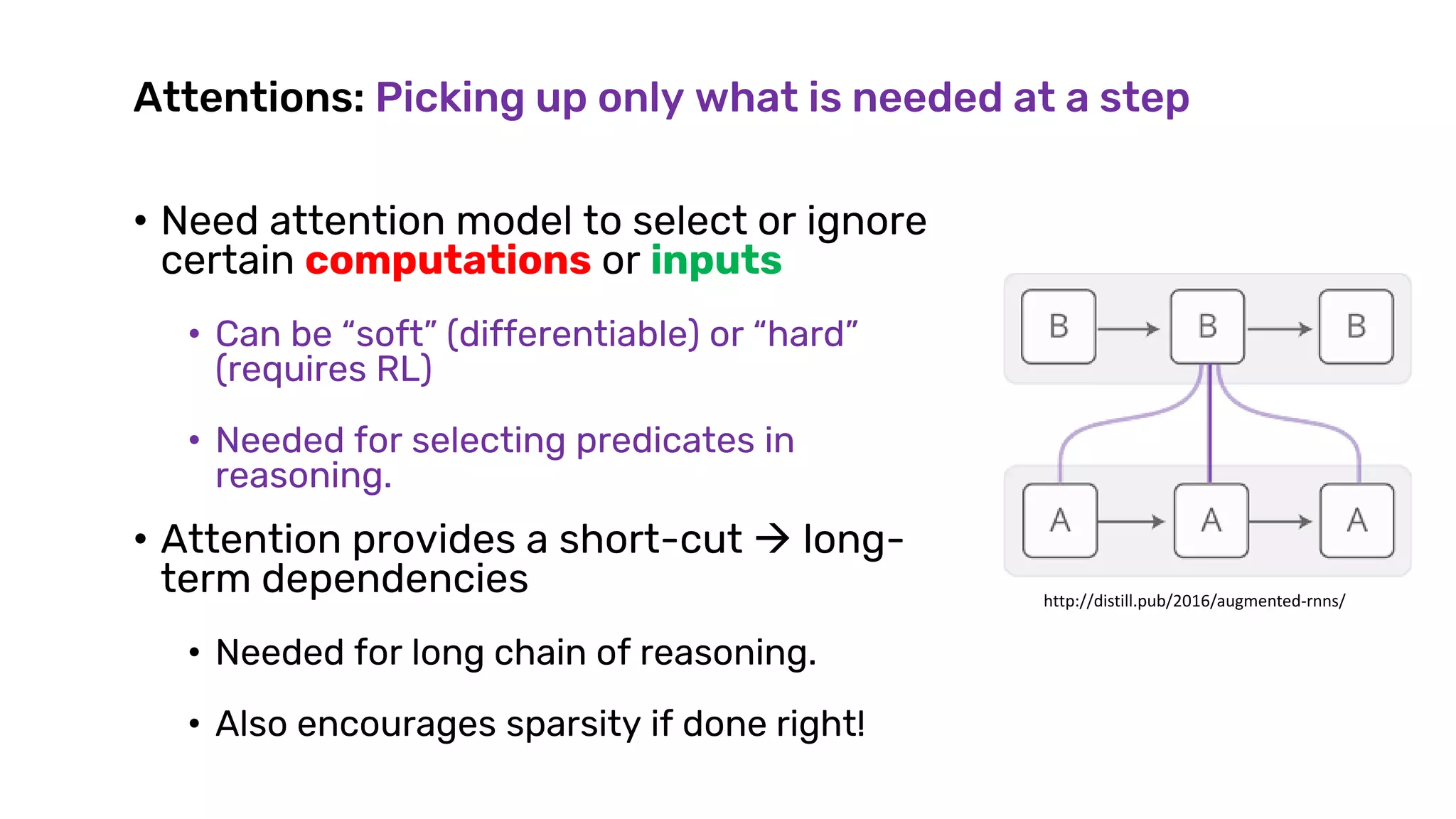

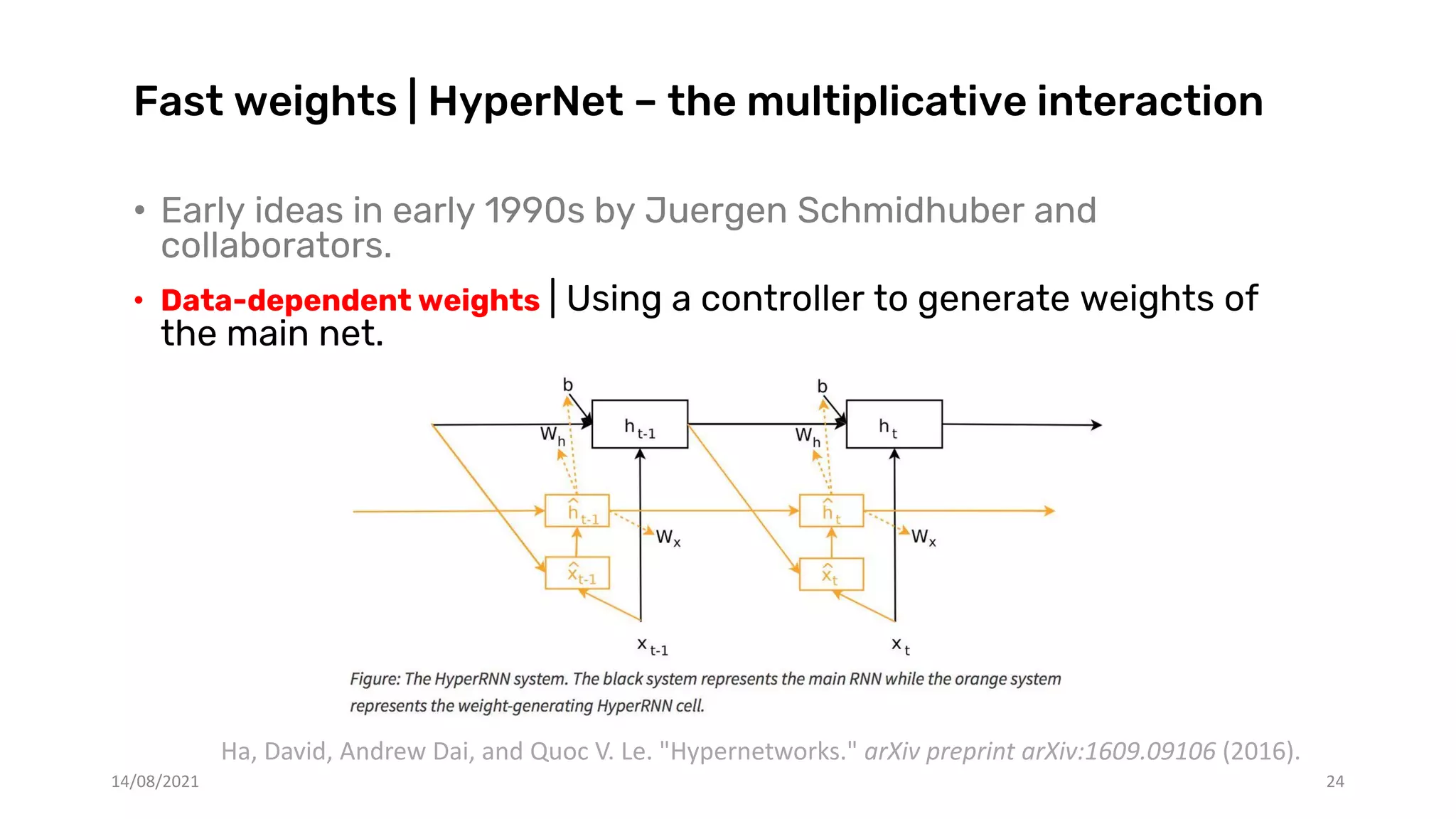

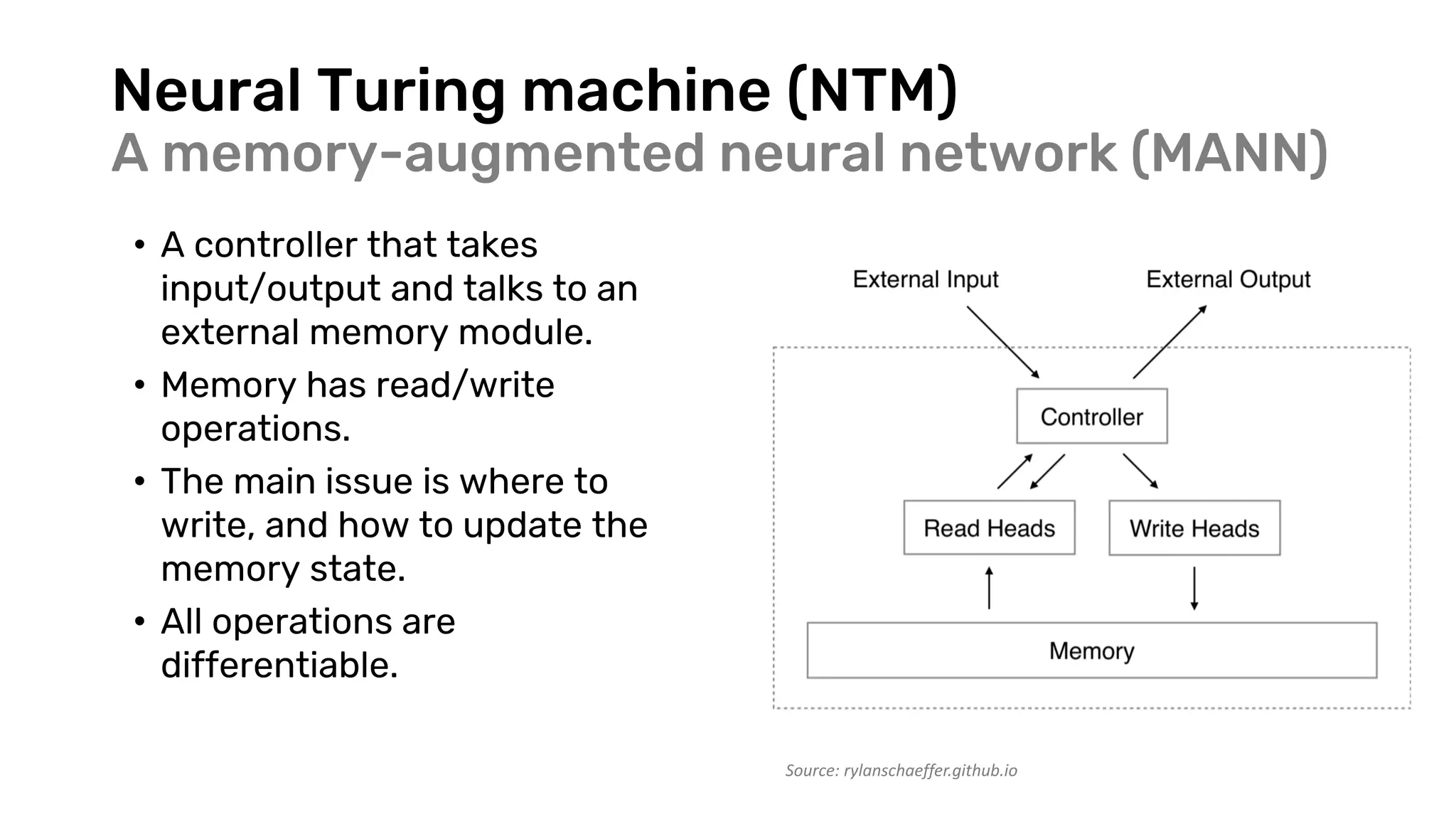

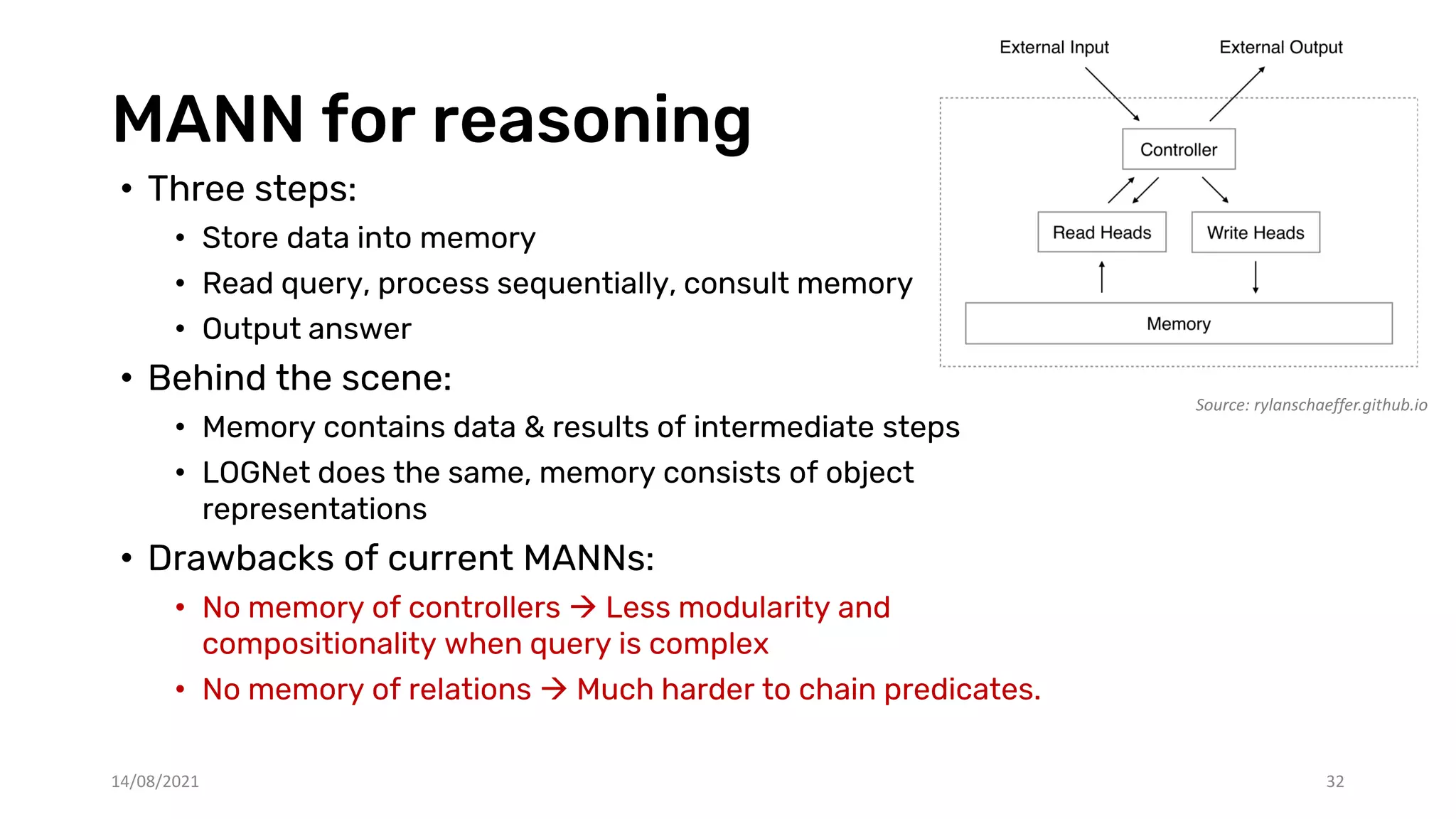

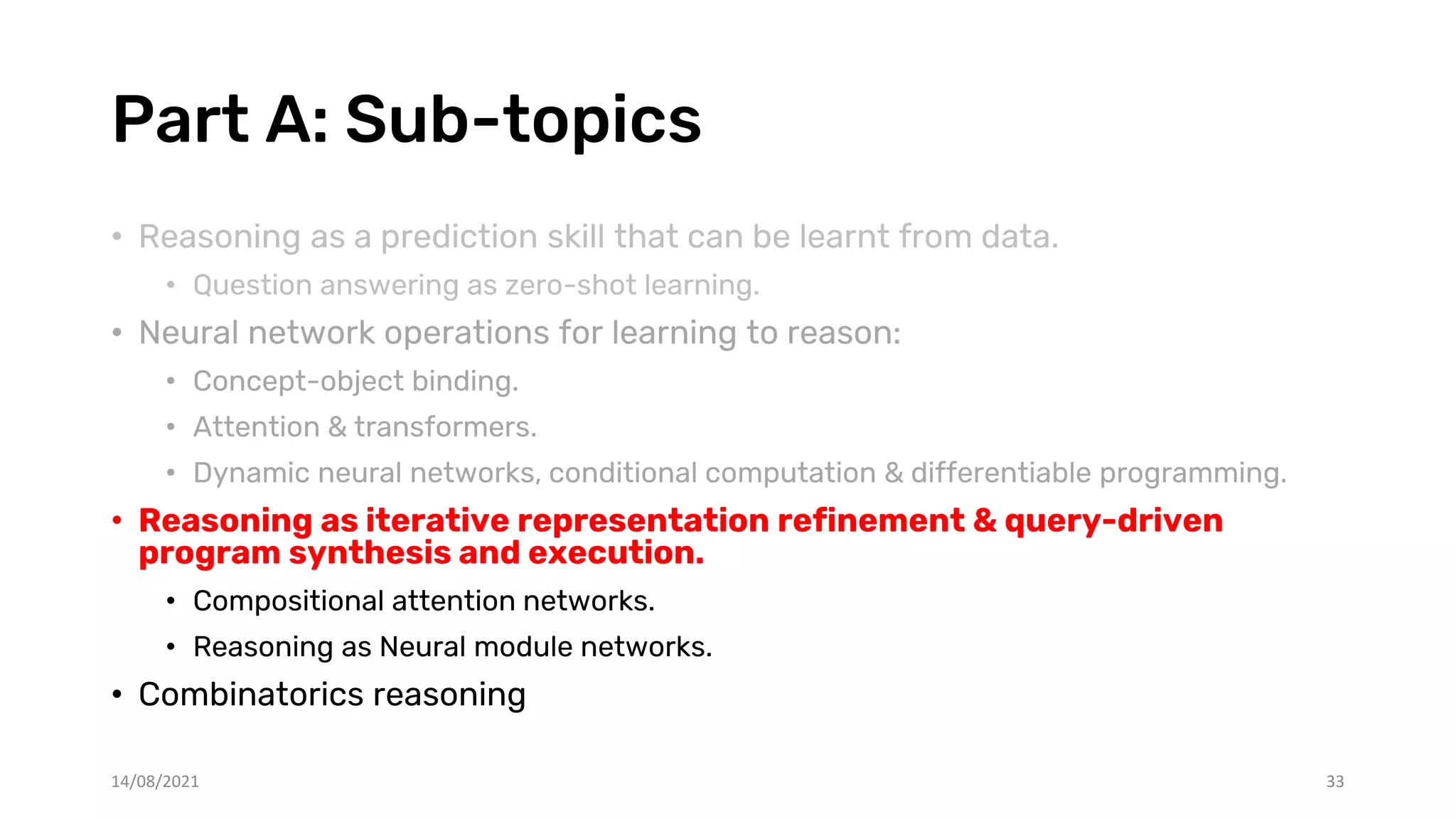

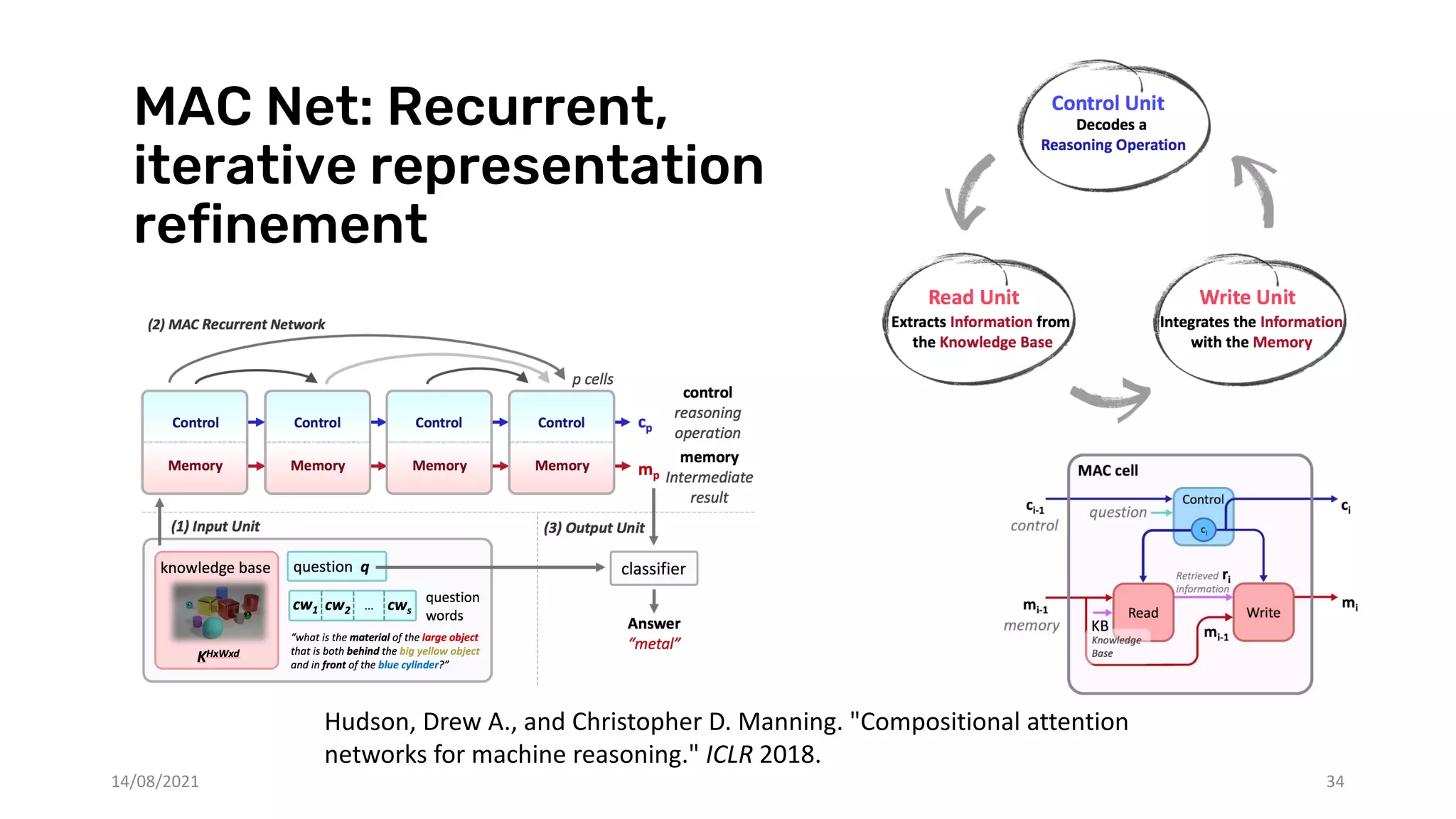

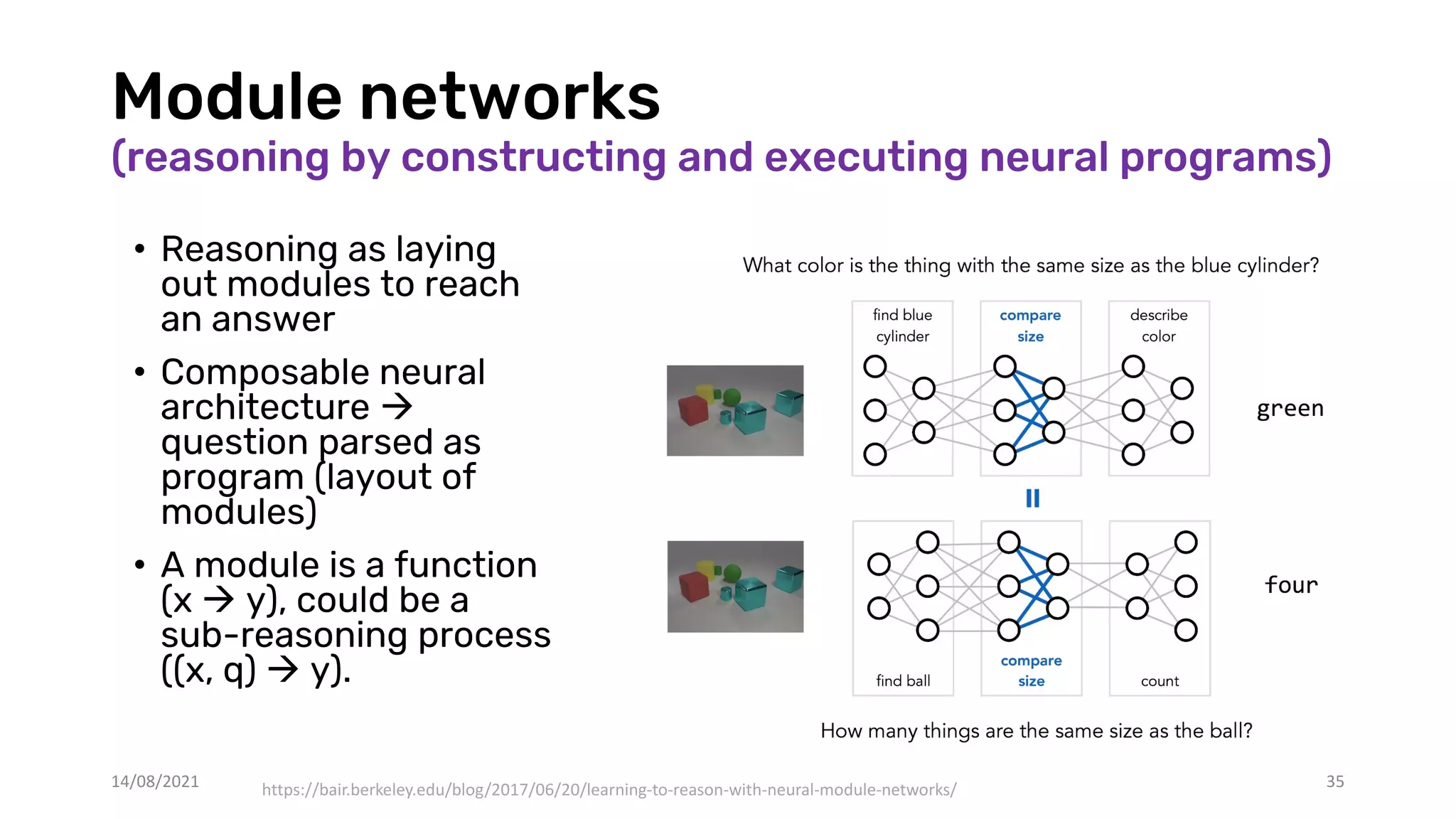

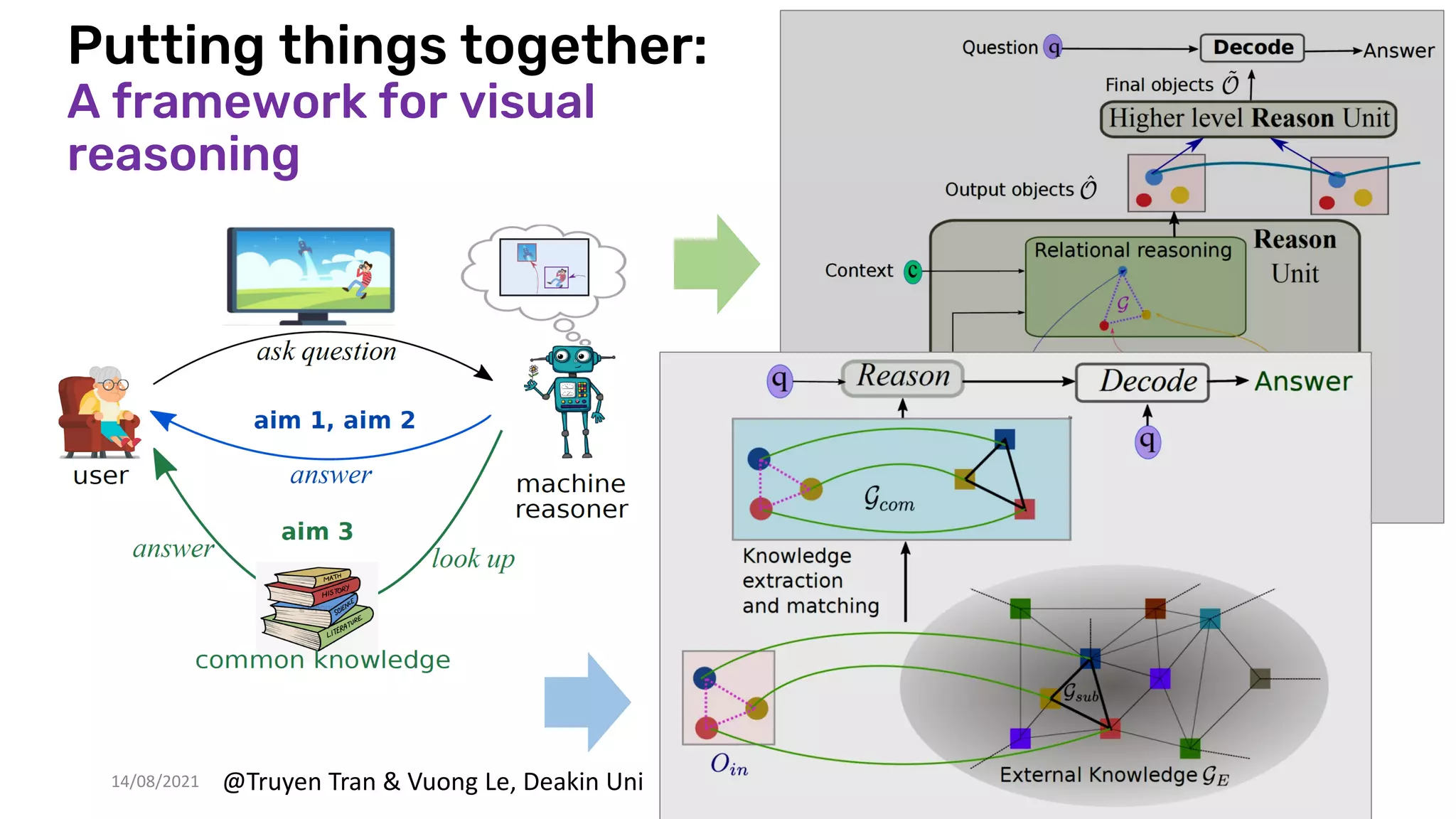

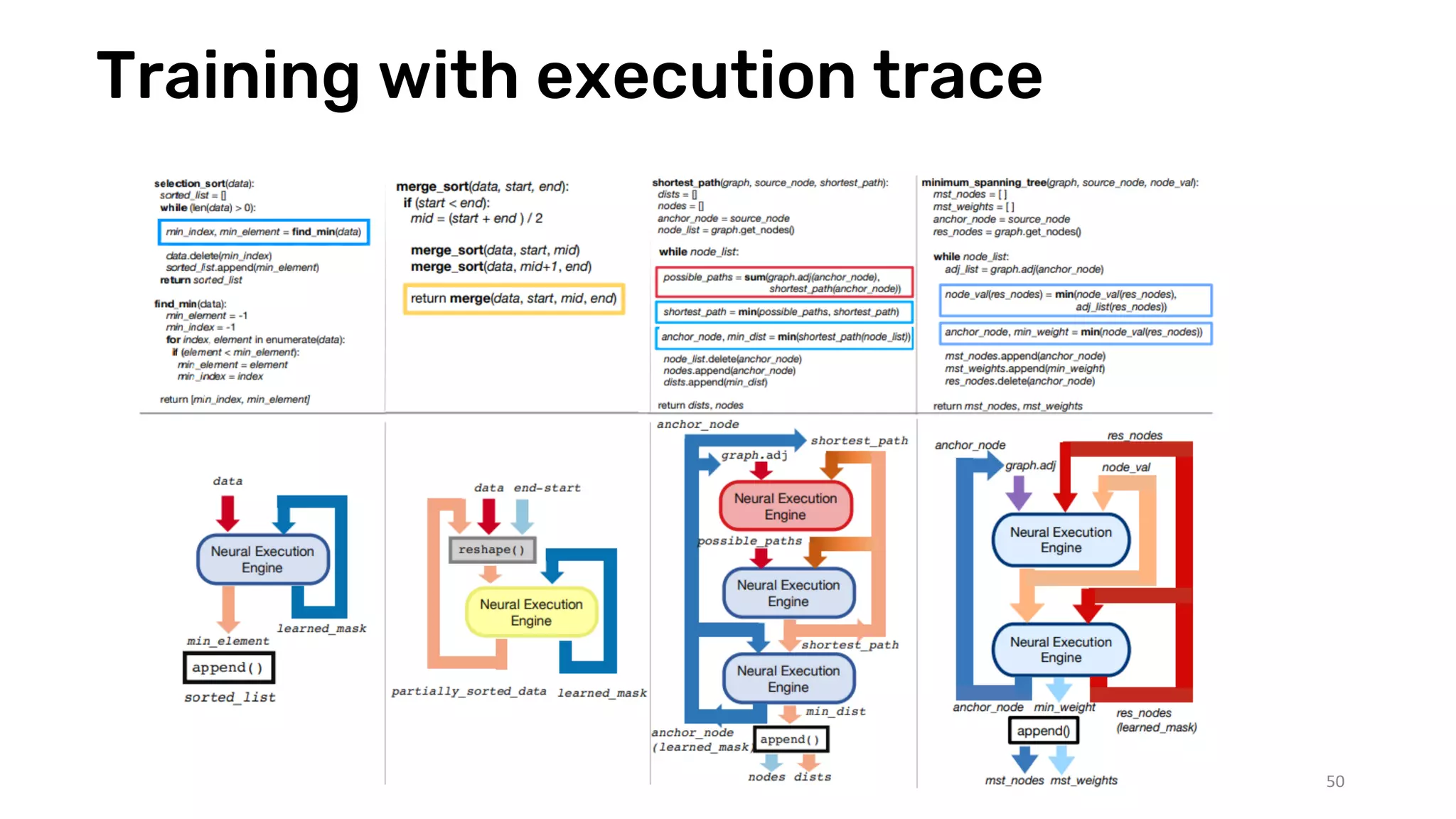

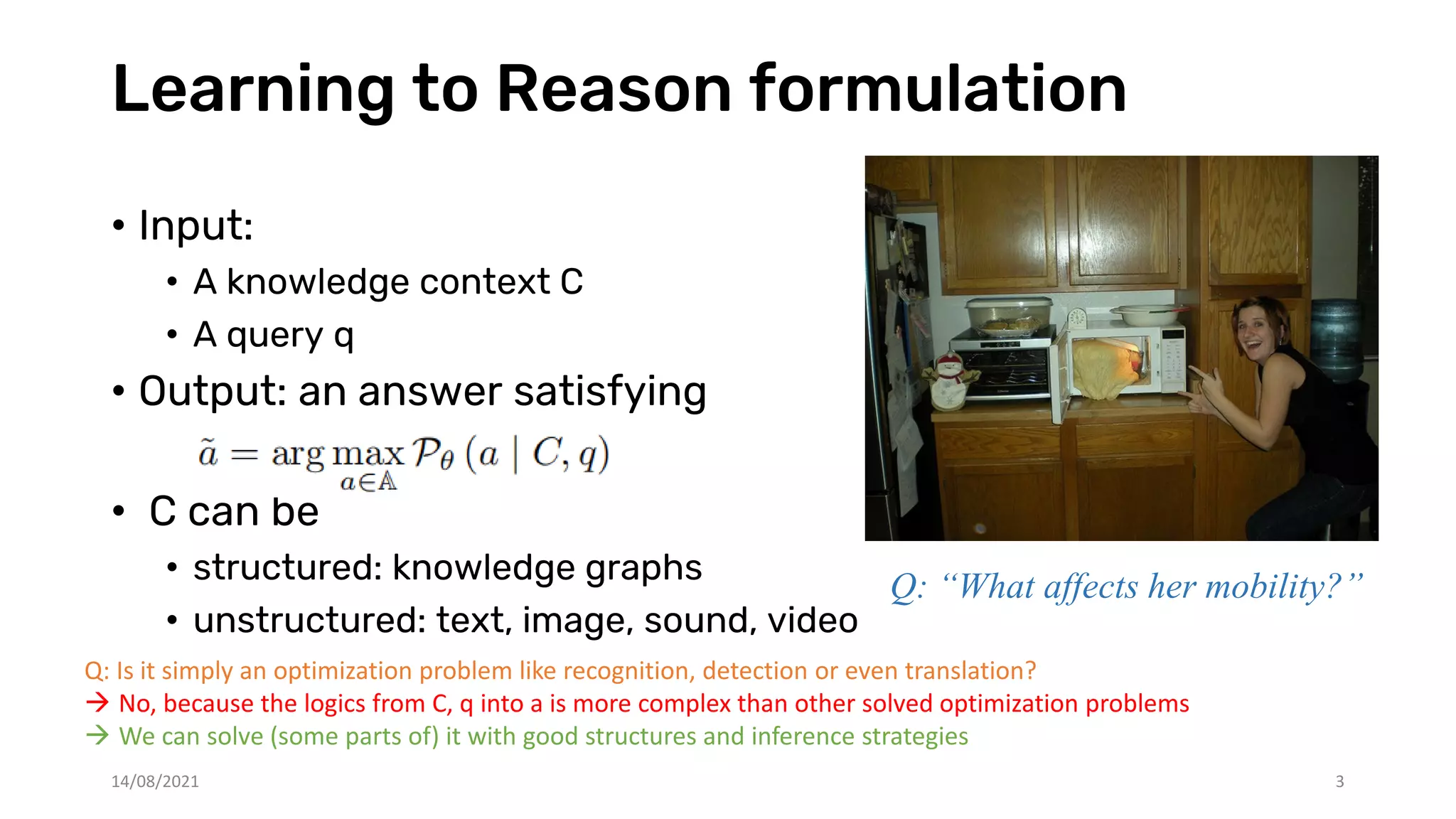

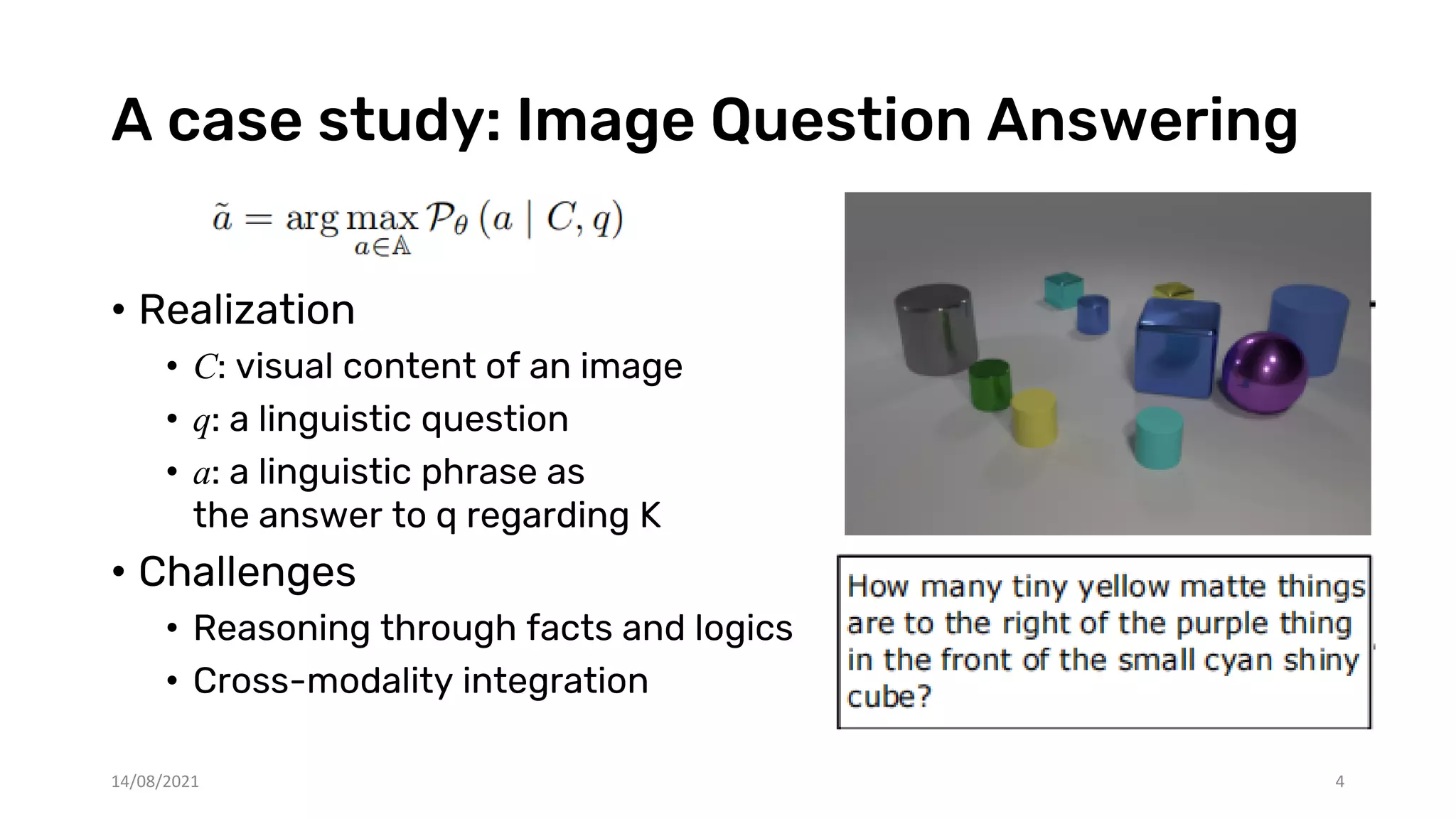

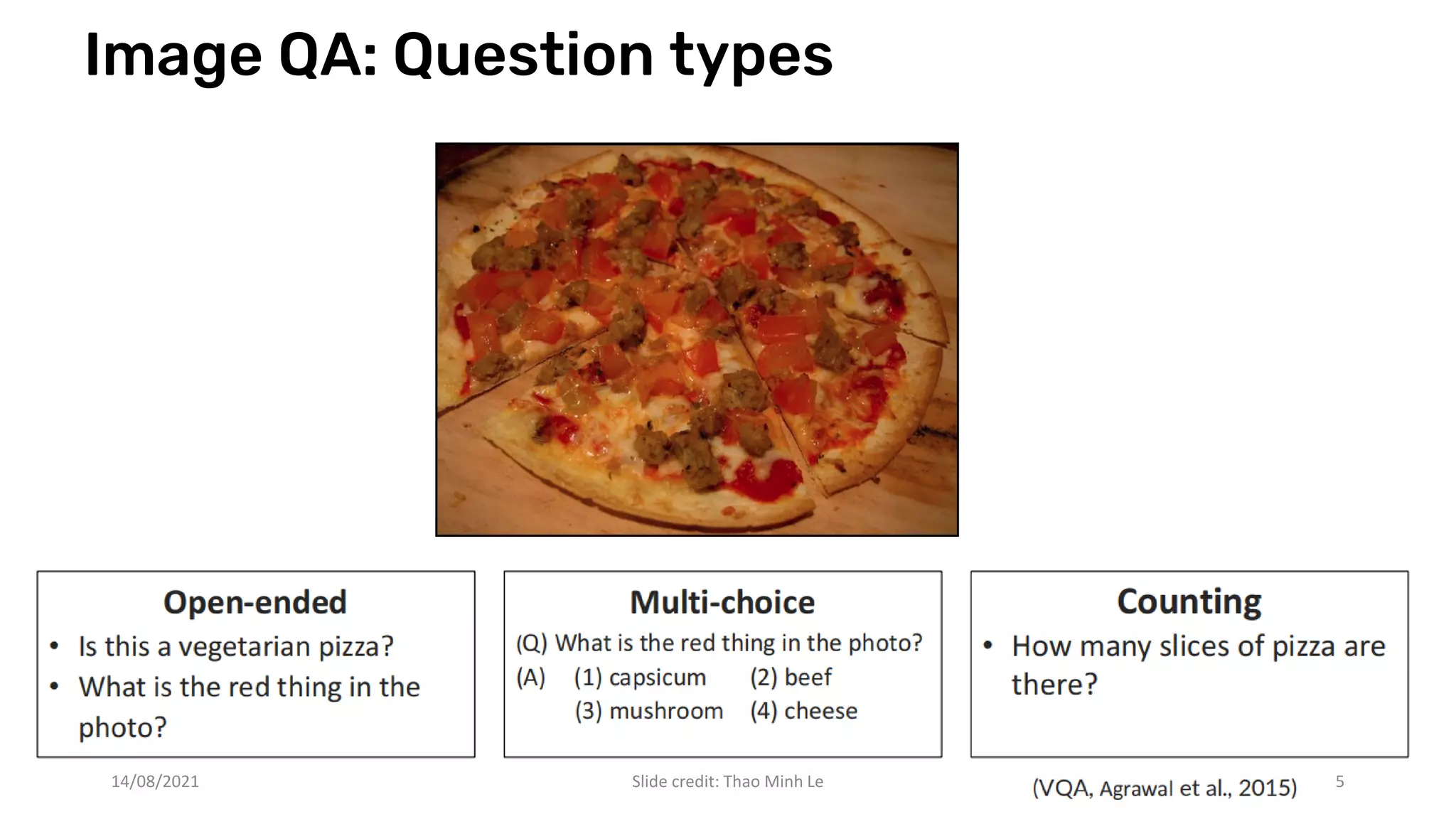

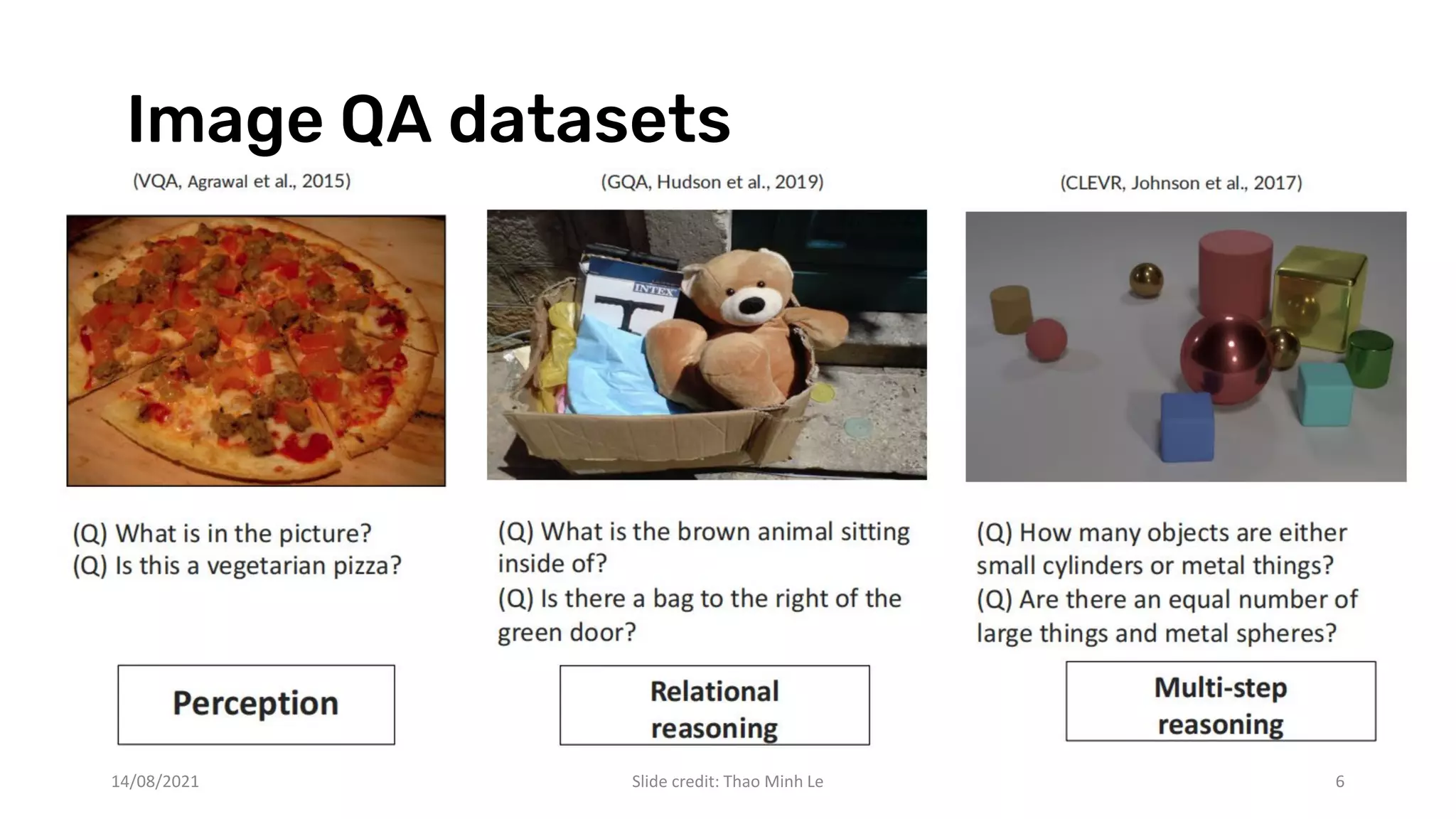

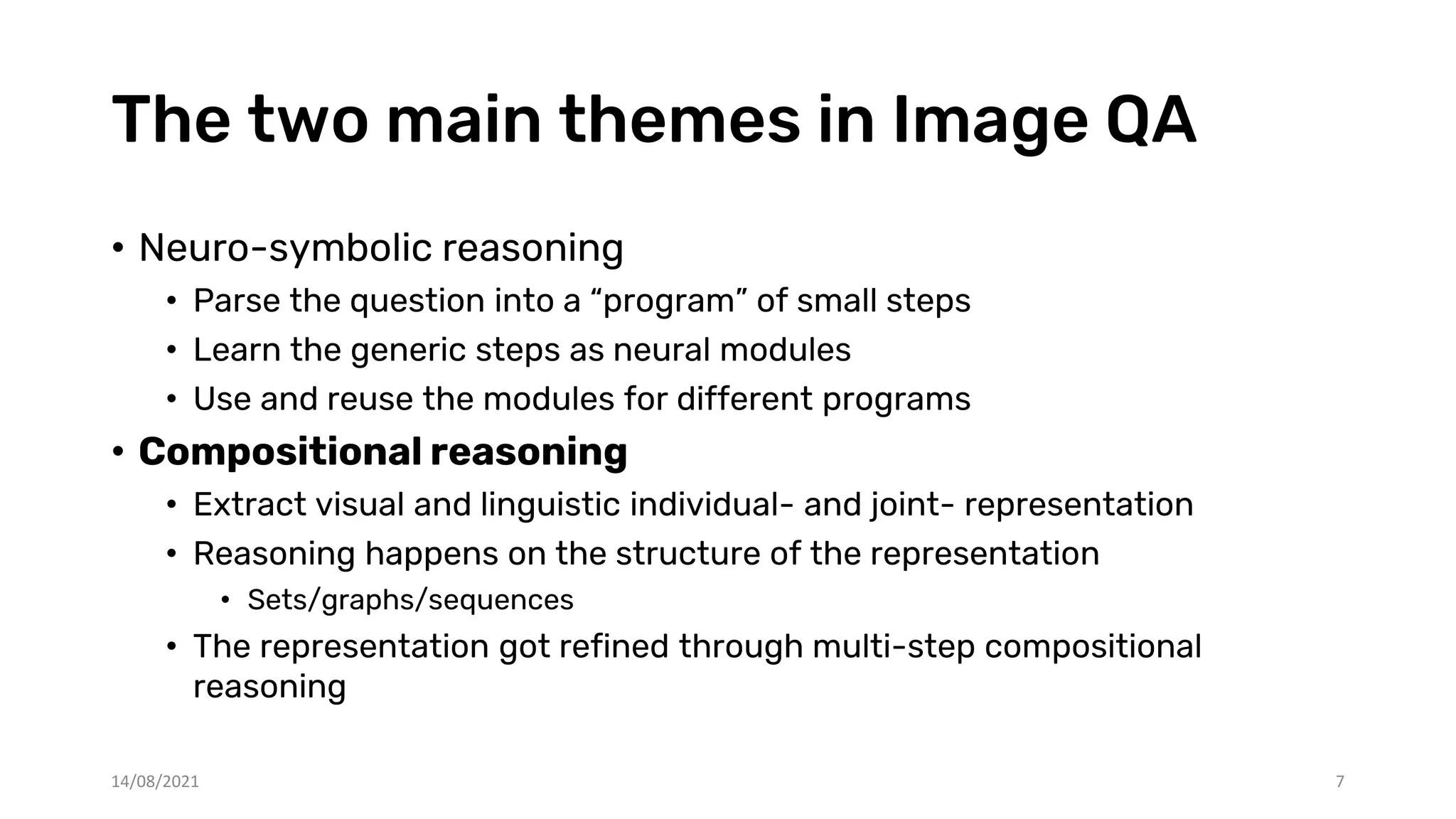

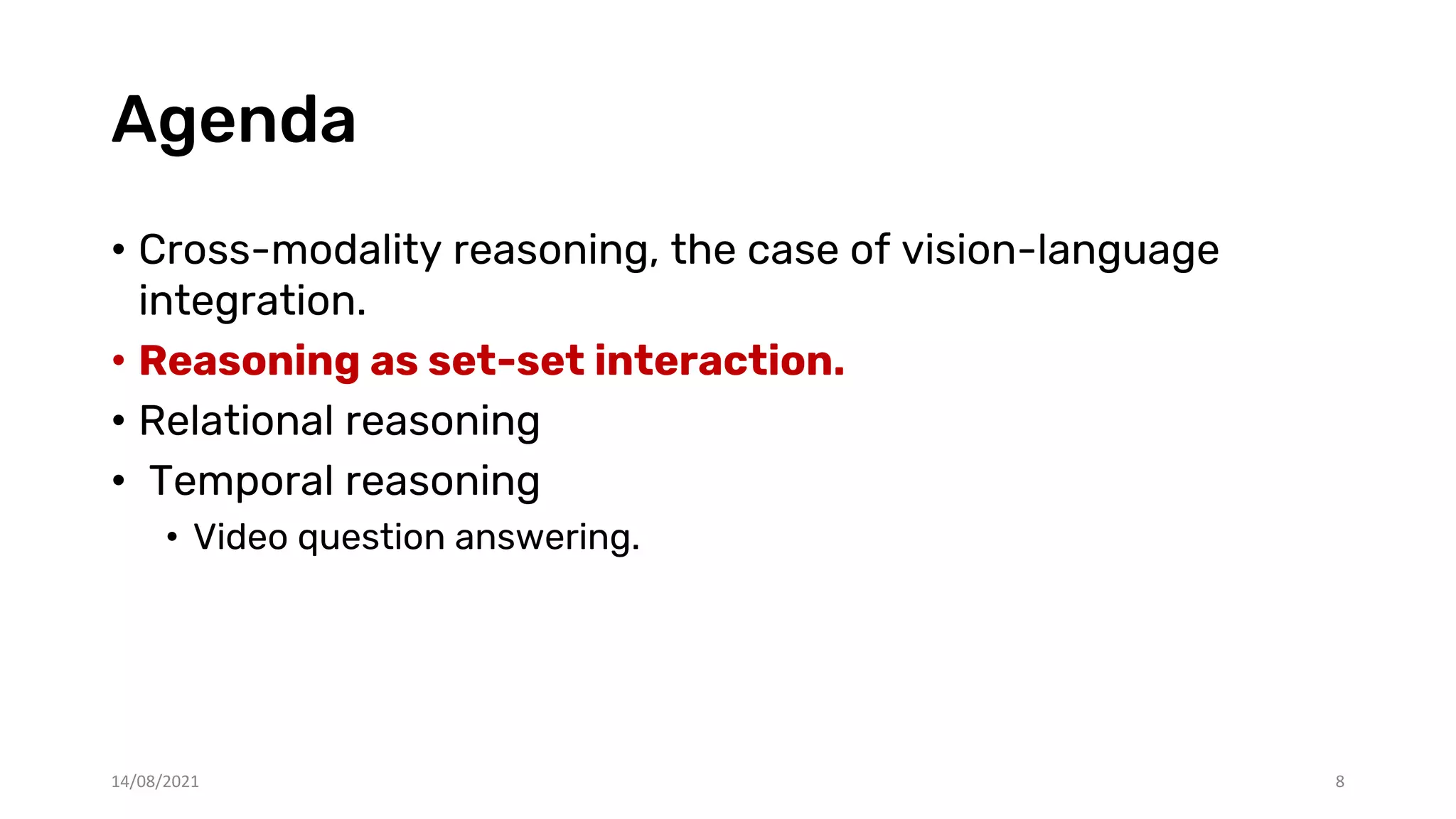

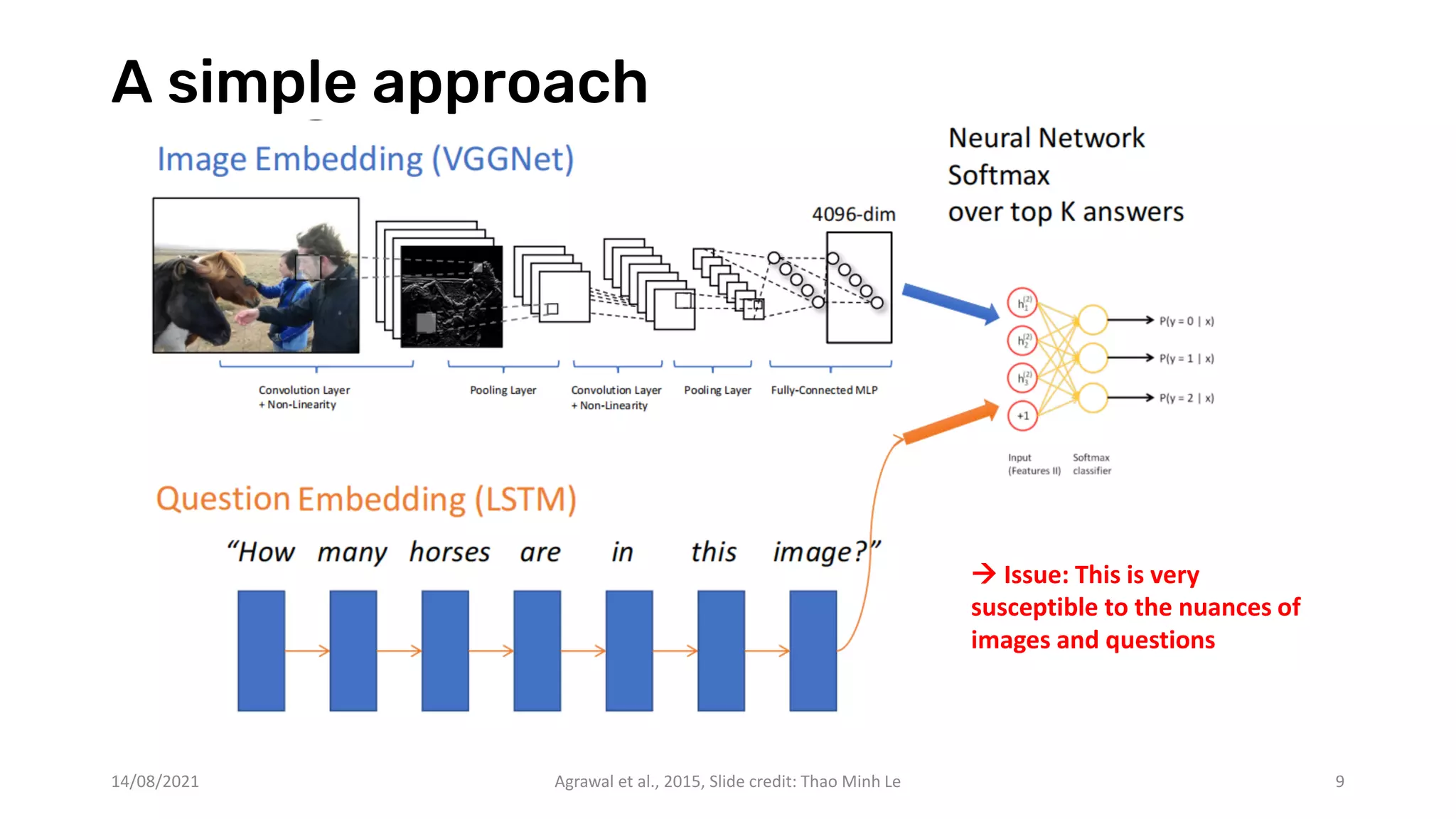

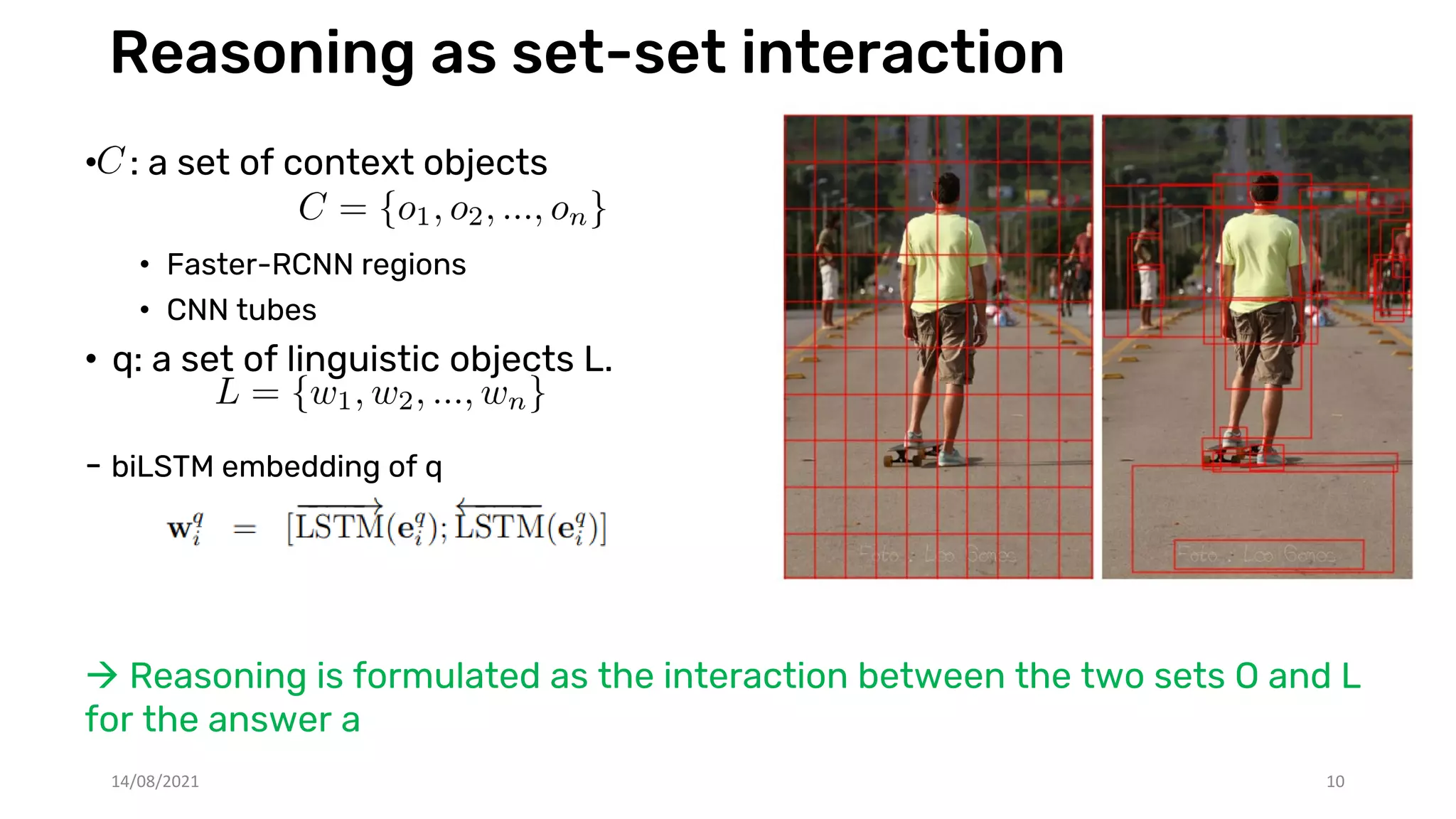

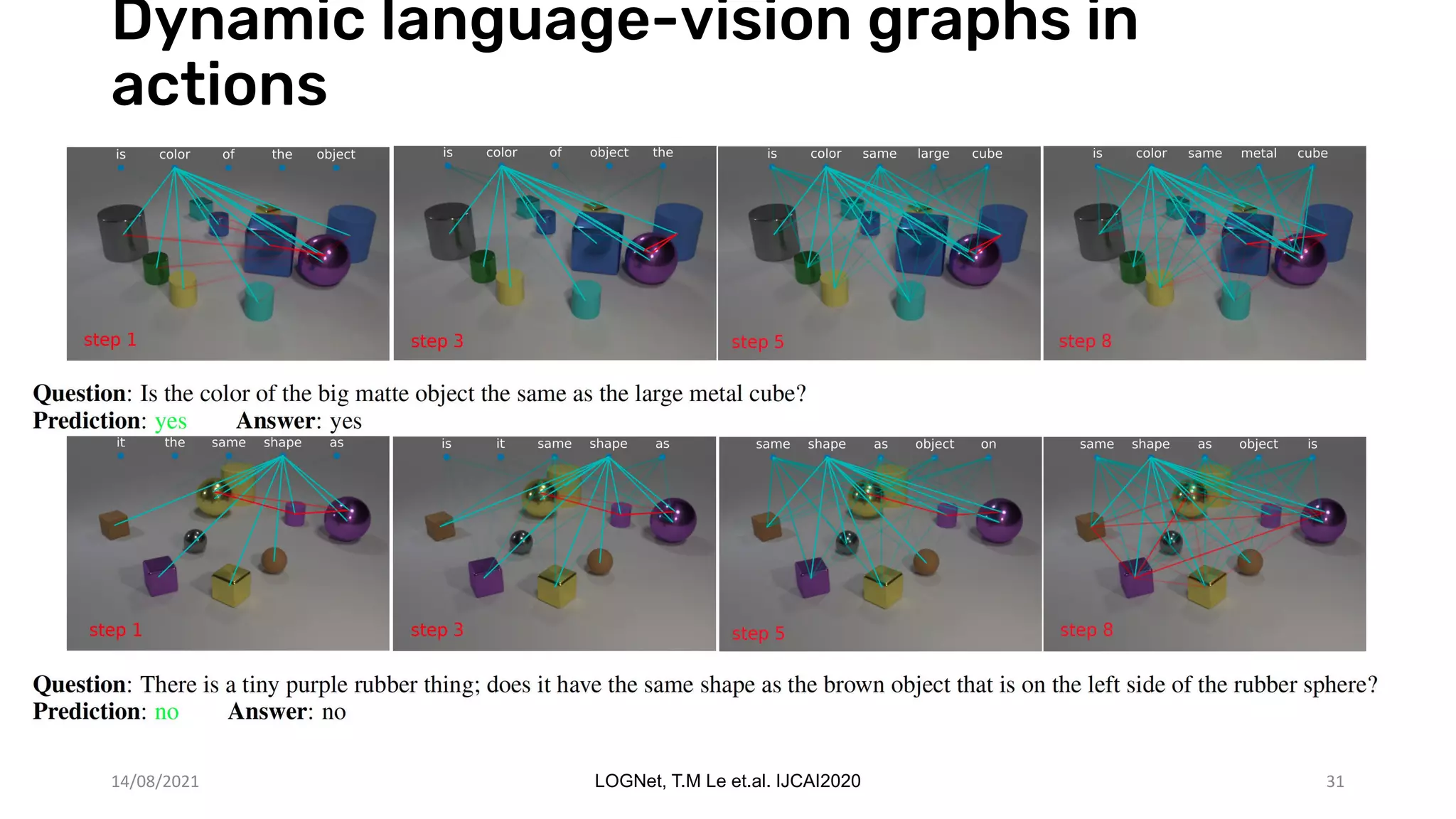

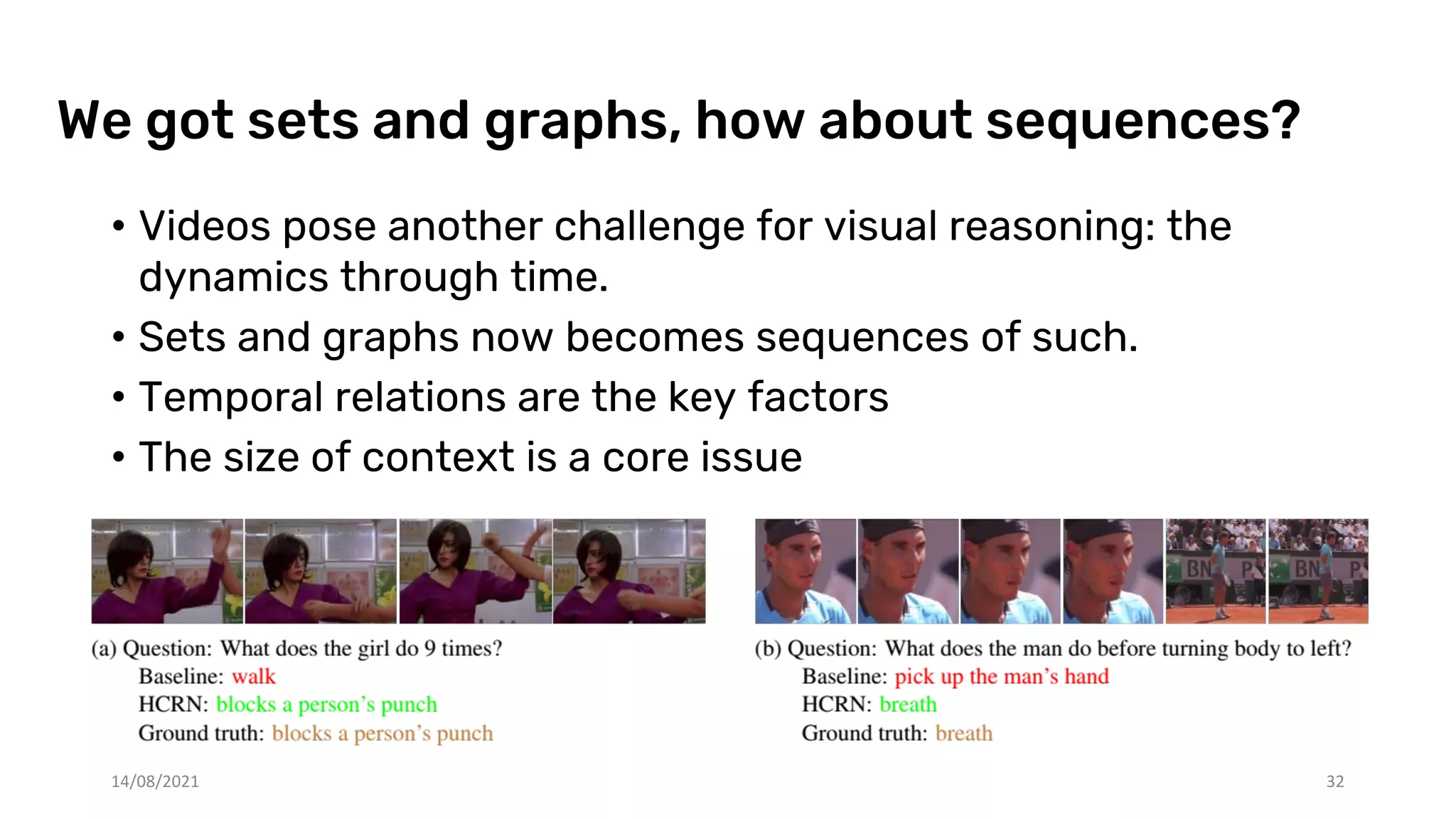

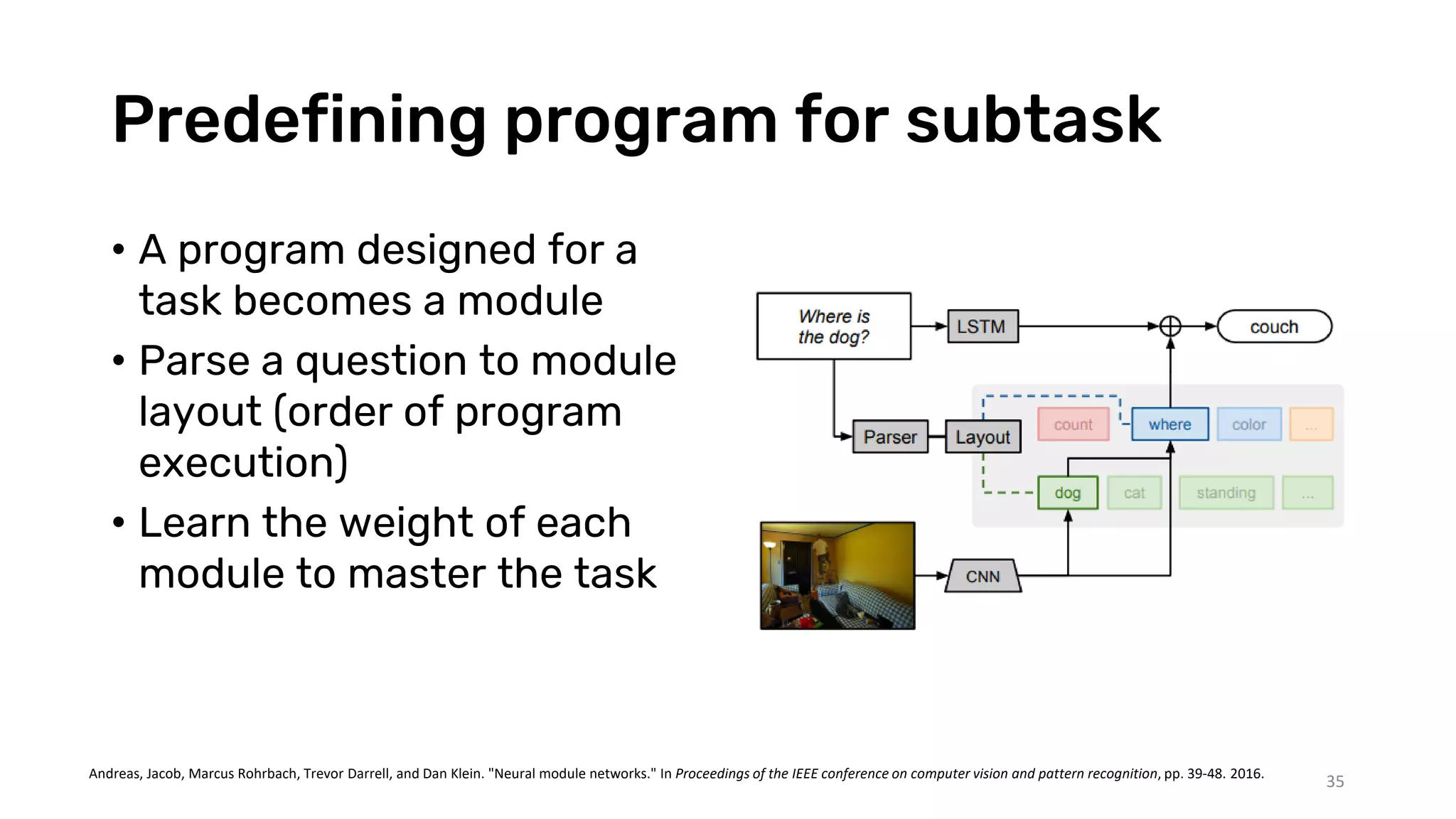

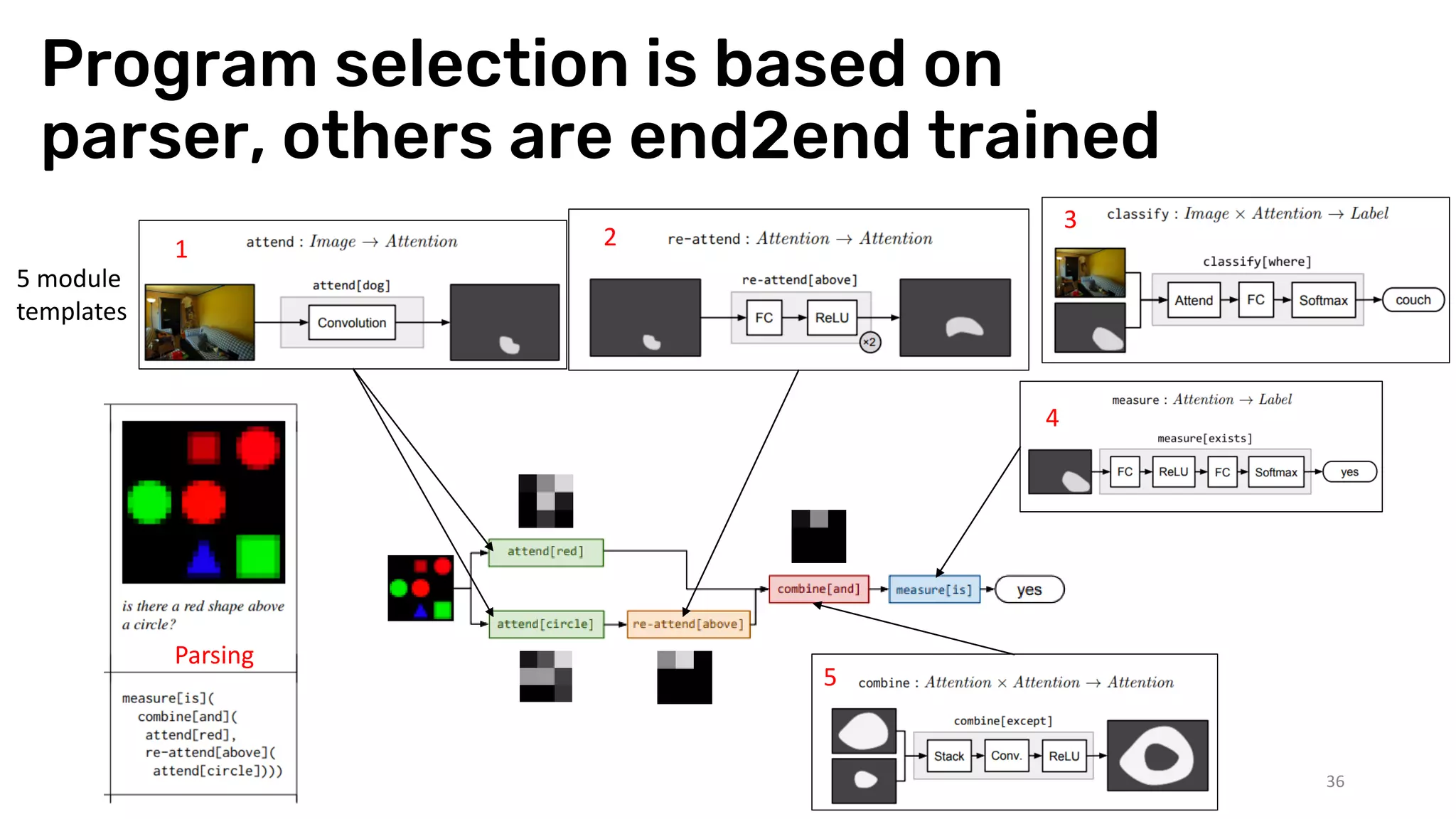

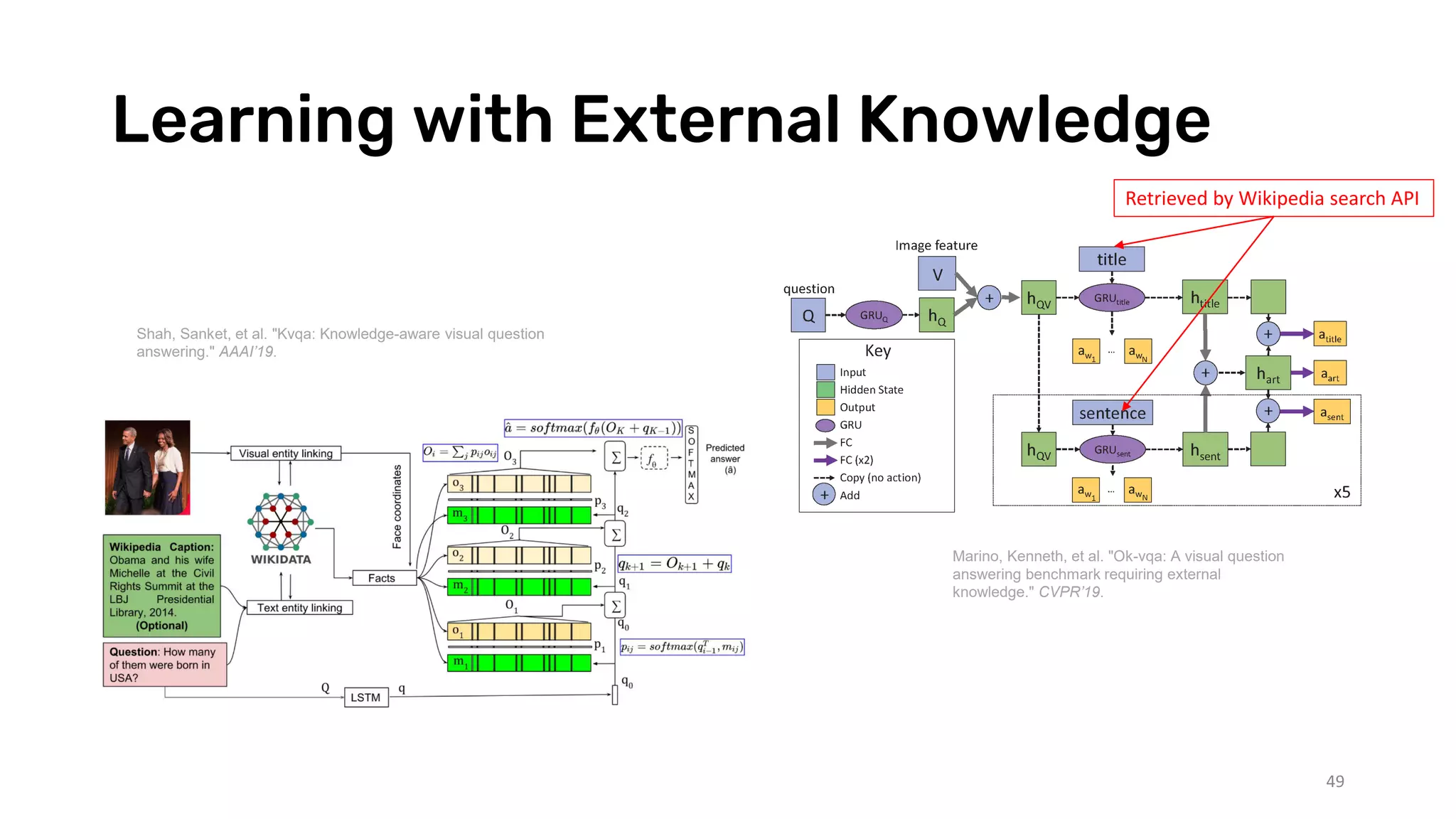

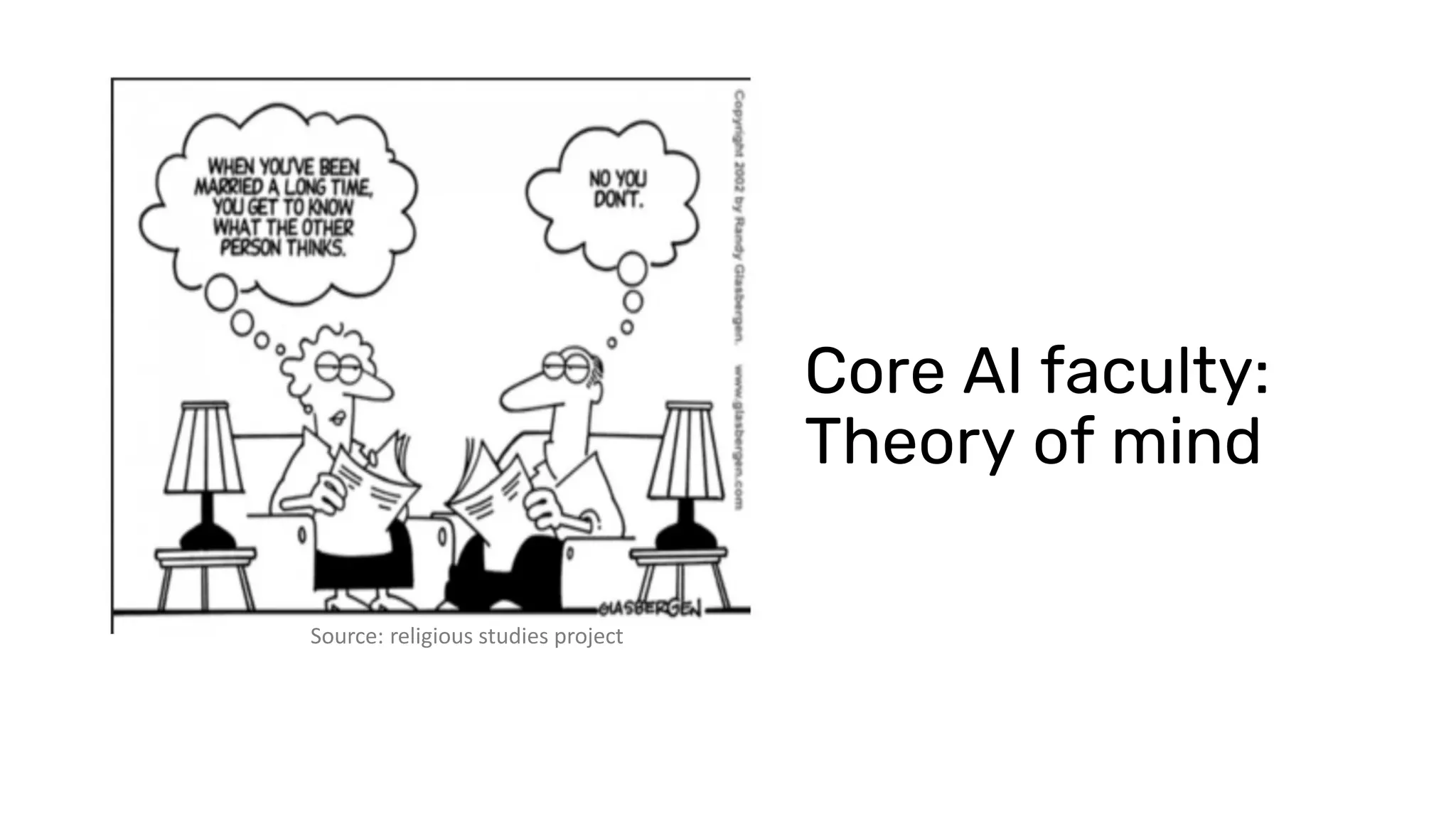

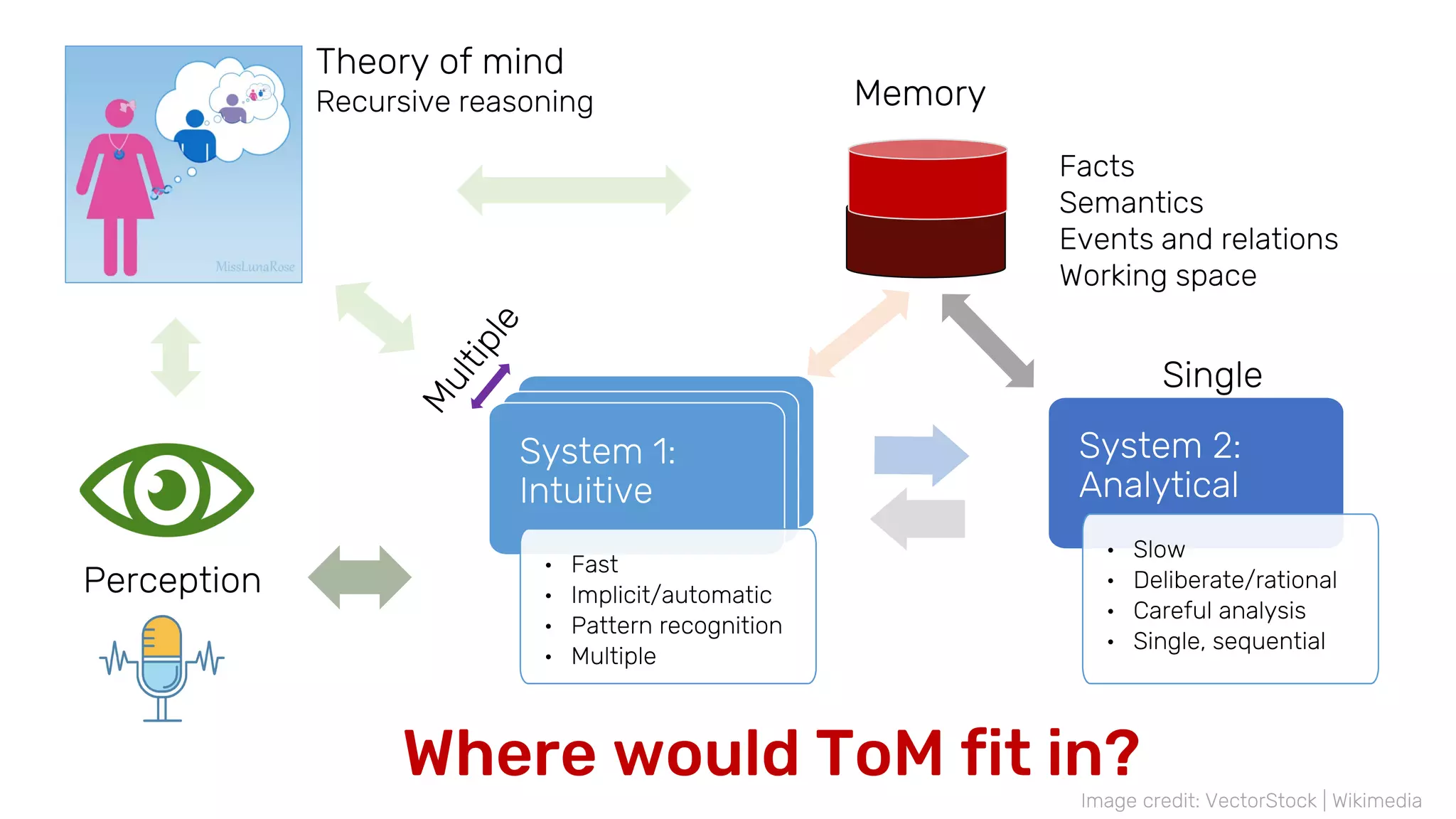

The document discusses advancements in deep learning towards integrating reasoning capabilities within AI systems, emphasizing the need for generalization, human-like reasoning, and reduced data dependency. It outlines various frameworks and methodologies for learning to reason, such as neural module networks, attention mechanisms, and probabilistic graphical models. Additionally, it highlights the importance of compositionality and memory systems in improving reasoning skills in neural networks.

![Can neural networks reason? (slide 17, 14/08/2021)](https://image.slidesharecdn.com/kdd2021-tute-all-211006044956/75/From-deep-learning-to-deep-reasoning-17-2048.jpg)

- Reasoning is not necessarily achieved by making logical inferences.
- There is a continuity between [algebraically rich inference] and [connecting together trainable learning systems].
- Central to reasoning are composition rules that guide the combination of modules to address new tasks.

“When we observe a visual scene, when we hear a complex sentence, we are able to explain in formal terms the relation of the objects in the scene, or the precise meaning of the sentence components. However, there is no evidence that such a formal analysis necessarily takes place: we see a scene, we hear a sentence, and we just know what they mean. This suggests the existence of a middle layer, already a form of reasoning, but not yet formal or logical.”

Bottou, Léon. "From machine learning to machine reasoning." Machine learning 94.2 (2014): 133-149.

![Sequential encoding of graphs (slide 41)](https://image.slidesharecdn.com/kdd2021-tute-all-211006044956/75/From-deep-learning-to-deep-reasoning-41-2048.jpg)

- Each node is associated with random one-hot or binary features.
- Output is the features of the solution.
- Geometry tasks (Convex Hull, TSP): the input sequence is [x1, y1, feature1], [x2, y2, feature2], … and the output sequence is [feature4], [feature2], …
- Graph tasks (Shortest Path, Minimum Spanning Tree): the input sequence is [node_feature1, node_feature2, edge12], [node_feature1, node_feature2, edge13], … and the output sequence is [node_feature4], [node_feature2], …

Le, Hung, Truyen Tran, and Svetha Venkatesh. "Self-attentive associative memory." In International Conference on Machine Learning, pp. 5682-5691. PMLR, 2020.
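The graph encoding described on this slide can be illustrated with a minimal Python sketch. It is not the authors' code: the helper names (`random_binary_features`, `encode_graph`, `encode_solution`), the feature width, and the toy graph are all assumptions chosen for illustration. Each node receives a distinct random binary feature vector, each edge becomes one input token `[node_feature_u, node_feature_v, edge_uv]`, and the target is the sequence of features of the solution nodes.

```python
import random


def random_binary_features(num_nodes, num_bits, seed=0):
    """Assign each node a distinct random binary feature vector (hypothetical helper)."""
    rng = random.Random(seed)
    # Sample distinct integer codes, then expand each code into its bits.
    codes = rng.sample(range(2 ** num_bits), num_nodes)
    return {
        node: [(code >> bit) & 1 for bit in range(num_bits)]
        for node, code in enumerate(codes)
    }


def encode_graph(edges, features):
    """Encode weighted edges (u, v, w) as tokens [features_u, features_v, w]."""
    return [features[u] + features[v] + [w] for u, v, w in edges]


def encode_solution(solution_nodes, features):
    """Encode a solution (e.g. a path) as the features of its nodes, in order."""
    return [features[node] for node in solution_nodes]


# Toy example: a 3-node graph whose shortest path from node 0 to node 2 is 0 -> 1 -> 2.
edges = [(0, 1, 1.0), (1, 2, 2.0), (0, 2, 5.0)]
features = random_binary_features(num_nodes=3, num_bits=4)
input_sequence = encode_graph(edges, features)
target_sequence = encode_solution([0, 1, 2], features)
```

Because the node features are random rather than positional, a sequence model trained on such data has to recover the graph structure from the edge tokens instead of memorizing node indices.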

![BERT: Transformer That Predicts Its Own Masked Parts (slide 46)](https://image.slidesharecdn.com/kdd2021-tute-all-211006044956/75/From-deep-learning-to-deep-reasoning-150-2048.jpg)

BERT is like parallel approximate pseudo-likelihood:

- It approximately maximizes the conditional likelihood of some variables given the rest.
- When the number of variables is large, this converges to the MLE (maximum likelihood estimate).

[Slide credit: Truyen Tran]

https://towardsdatascience.com/bert-explained-state-of-the-art-language-model-for-nlp-f8b21a9b6270
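The pseudo-likelihood reading of BERT can be written out as follows. The notation is an assumption introduced here, not taken from the slide: $x = (x_1, \dots, x_n)$ is a token sequence, $\theta$ the model parameters, $x_{\setminus i}$ the sequence without position $i$, and $M$ a randomly sampled set of masked positions.

```latex
% Pseudo-likelihood: each variable is predicted from all the others.
\log \mathrm{PL}(\theta; x) = \sum_{i=1}^{n} \log p_\theta\left(x_i \mid x_{\setminus i}\right)

% Masked-language-model objective: a random subset M of positions is hidden,
% and all masked tokens are predicted in parallel from the visible ones.
\mathcal{L}_{\mathrm{MLM}}(\theta; x) = \mathbb{E}_{M}\left[\sum_{i \in M} \log p_\theta\left(x_i \mid x_{\setminus M}\right)\right]
```

If $M$ always contains a single position chosen uniformly at random, the second objective equals the first up to the constant factor $1/n$. Masking several positions at once and predicting them independently is what makes the correspondence approximate and parallel.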

![Visual QA as a Down-stream Task of Visual-Language BERT Pre-trained Models (slide 47)](https://image.slidesharecdn.com/kdd2021-tute-all-211006044956/75/From-deep-learning-to-deep-reasoning-151-2048.jpg)

Numerous pre-trained visual language models during 2019-2021:

- VisualBERT (Li, Liunian Harold, et al., 2019)
- VL-BERT (Su, Weijie, et al., 2019)
- UNITER (Chen, Yen-Chun, et al., 2019)
- 12-in-1 (Lu, Jiasen, et al., 2020)
- Pixel-BERT (Huang, Zhicheng, et al., 2019)
- OSCAR (Li, Xiujun, et al., 2020)
- ViLBERT (Lu, Jiasen, et al., 2019)
- LXMERT (Tan, Hao, and Mohit Bansal, 2019)

Figure panels: single-stream model, two-stream model.

[Slide credit: Licheng Yu et al.]

![Theory of Mind Agent with Guilt Aversion (ToMAGA)](https://image.slidesharecdn.com/kdd2021-tute-all-211006044956/75/From-deep-learning-to-deep-reasoning-159-2048.jpg)

Update Theory of Mind:

- Predict whether the other's behaviour is cooperative or uncooperative.
- Update the zero-order belief (what the other will do).
- Update the first-order belief (what the other thinks about me).

Guilt Aversion:

- Compute the expected material reward of the other based on Theory of Mind.
- Compute the psychological rewards, i.e. “feeling guilty”.
- Reward shaping: subtract the expected loss of the other.

Nguyen, Dung, et al. "Theory of Mind with Guilt Aversion Facilitates Cooperative Reinforcement Learning." Asian Conference on Machine Learning. PMLR, 2020.

[Slide credit: Dung Nguyen]
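The belief update and reward-shaping steps listed on this slide can be sketched in Python as below. This is an illustrative sketch under stated assumptions, not the formulation from Nguyen et al.: the function names, the moving-average belief update, the two-outcome payoff model, and the `guilt_sensitivity` weight are all hypothetical.

```python
def update_belief(belief, observed_cooperative, learning_rate=0.1):
    """Move a cooperation probability toward the latest observation (assumed update rule)."""
    target = 1.0 if observed_cooperative else 0.0
    return belief + learning_rate * (target - belief)


def expected_other_reward(first_order_belief, payoff_if_i_cooperate, payoff_if_i_defect):
    """Material reward the other expects, given what it believes I will do."""
    return (first_order_belief * payoff_if_i_cooperate
            + (1.0 - first_order_belief) * payoff_if_i_defect)


def guilt_averse_reward(material_reward, other_expected, other_actual,
                        guilt_sensitivity=1.0):
    """Reward shaping: subtract the other's expected loss, weighted by guilt sensitivity."""
    # Guilt only arises when the other receives less than it expected.
    expected_loss_of_other = max(0.0, other_expected - other_actual)
    return material_reward - guilt_sensitivity * expected_loss_of_other


# Toy example: the other comes to believe I will cooperate, but I defect.
first_order_belief = update_belief(0.5, observed_cooperative=True, learning_rate=0.5)
other_expected = expected_other_reward(first_order_belief,
                                       payoff_if_i_cooperate=4.0,
                                       payoff_if_i_defect=0.0)
shaped_reward = guilt_averse_reward(material_reward=3.0,
                                    other_expected=other_expected,
                                    other_actual=0.0)
```

In this toy setting the guilt penalty cancels the material gain from defecting, which is the kind of effect that could push a learning agent toward cooperation.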

![Machine Theory of Mind Architecture (inside the Observer)](https://image.slidesharecdn.com/kdd2021-tute-all-211006044956/75/From-deep-learning-to-deep-reasoning-160-2048.jpg)

Figure labels: successor representations, next-step action probability, goal.

Rabinowitz, Neil, et al. "Machine theory of mind." International conference on machine learning. PMLR, 2018.

[Slide credit: Dung Nguyen]