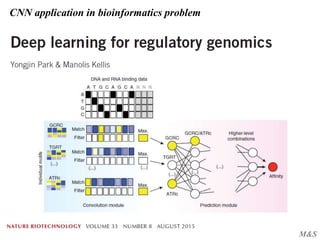

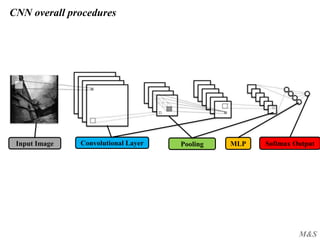

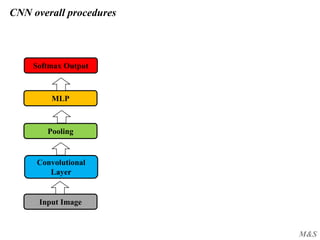

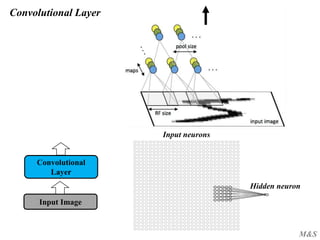

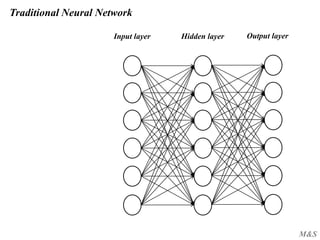

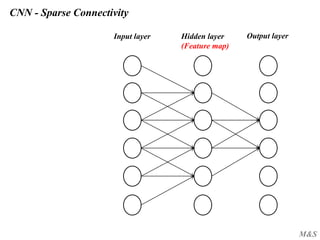

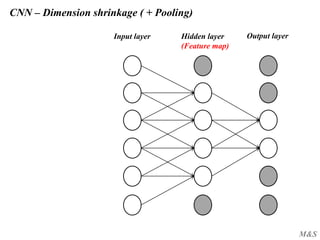

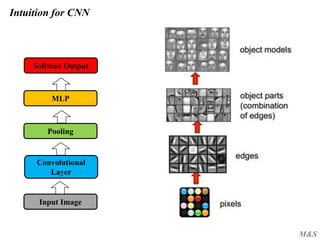

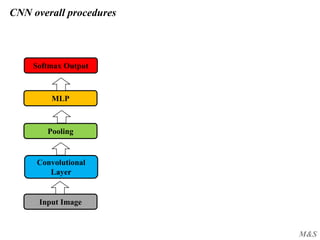

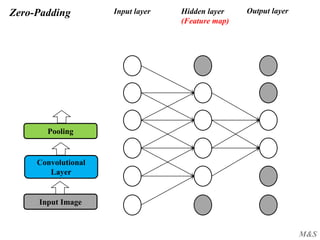

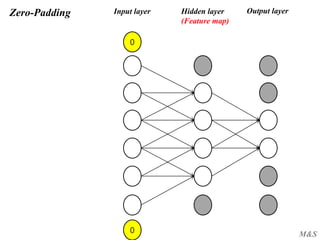

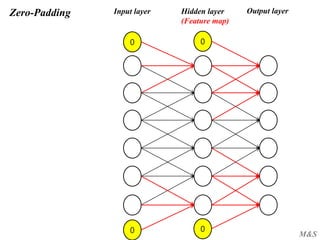

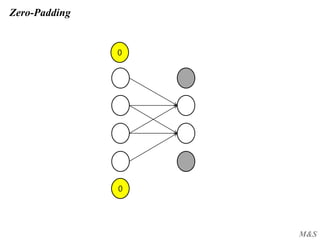

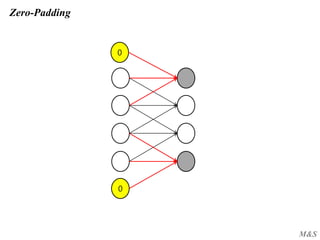

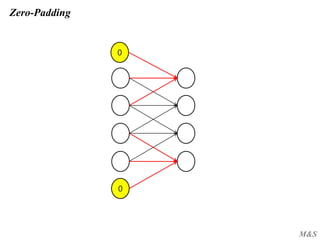

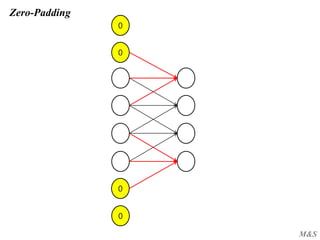

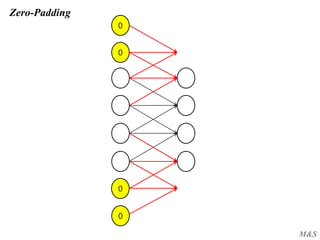

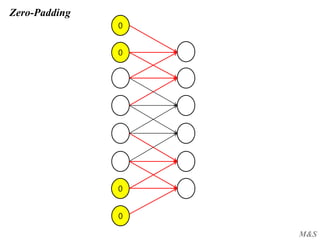

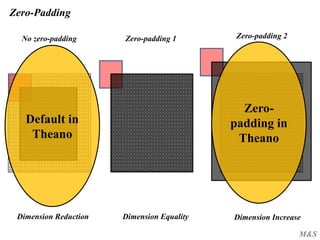

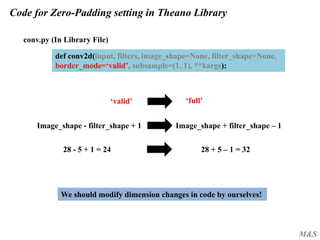

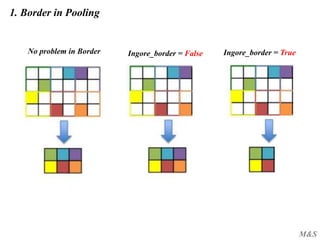

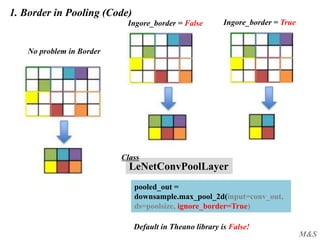

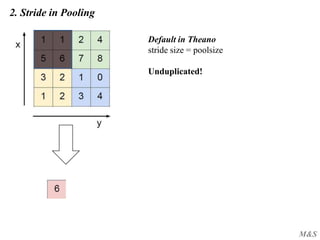

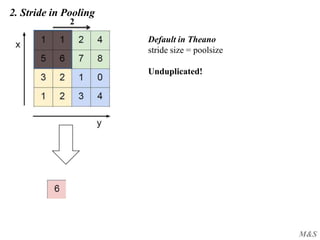

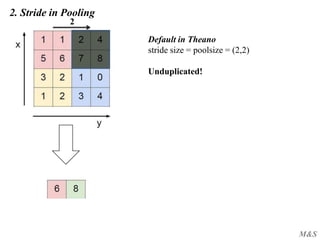

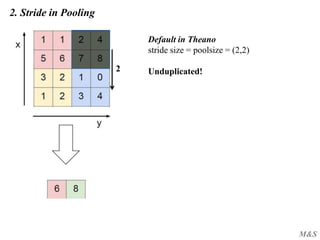

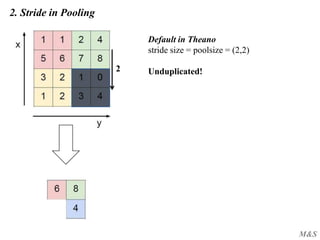

The document discusses convolutional neural networks (CNNs), detailing various concepts such as tensor notation, convolution operations, pooling methods, and the architecture of CNNs including input layers, convolutional layers, and multi-layer perceptrons (MLPs). It includes code examples, particularly in Theano, illustrating the implementation of CNNs, and elaborates on the importance of components like zero-padding and activation functions. Additionally, it touches on the application of CNNs in bioinformatics.

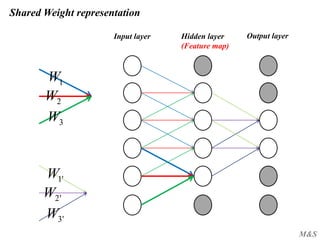

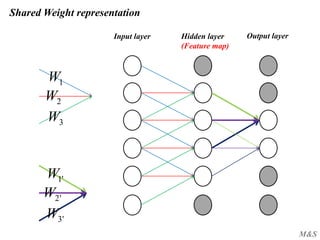

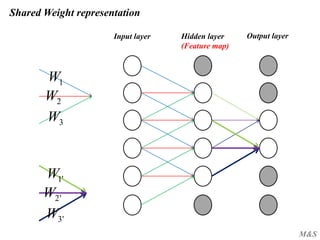

![M&S

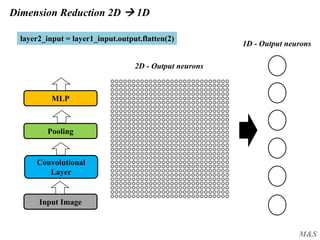

CNN Tensor notation in Theano

- Input Images -

4D tensor

1D tensor

[number of feature maps at layer m, number of feature maps at layer m-1,

filter height, filter width]

ij

klx

op

qrW

mb

[ i, j, k, l ] =

[ o, p, q, r ] =

[ m ] =

- Weight -

- Bias -

[n’th feature map number]

[mini-batch size, number of input feature

maps, image height, image width]](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-15-320.jpg)
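The shape conventions above can be written down as plain tuples and checked against each other. This is only a minimal sketch in pure Python: the helper name `check_conv_shapes` and the example sizes are assumptions made for illustration, not part of the slides or of Theano.

```python
def check_conv_shapes(input_shape, weight_shape, bias_shape):
    """Check that three shape tuples follow the slide's tensor conventions.

    input_shape  = (mini-batch size, input feature maps, image height, image width)
    weight_shape = (feature maps at layer m, feature maps at layer m-1,
                    filter height, filter width)
    bias_shape   = (feature maps at layer m,)
    """
    if len(input_shape) != 4 or len(weight_shape) != 4 or len(bias_shape) != 1:
        return False
    _, in_maps, height, width = input_shape
    out_maps, prev_maps, f_height, f_width = weight_shape
    return (
        in_maps == prev_maps            # j must match p
        and bias_shape[0] == out_maps   # one bias per output feature map
        and f_height <= height          # the filter must fit inside the image
        and f_width <= width
    )


# Hypothetical sizes: 500 single-channel 28x28 images, 20 filters of 5x5.
print(check_conv_shapes((500, 1, 28, 28), (20, 1, 5, 5), (20,)))  # True
# Three input feature maps do not match a filter built for one.
print(check_conv_shapes((500, 3, 28, 28), (20, 1, 5, 5), (20,)))  # False
```

The check that matters most in practice is the second axis of the input against the second axis of the weight: a filter bank can only be applied to an input that has the number of feature maps it was built for.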

![M&S

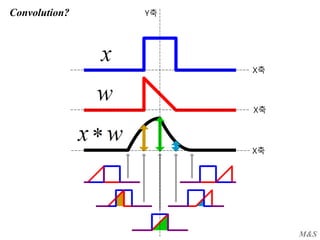

Convolution?

daatwax

twxty

)()(

))(()(

a

anwaxny ][][][

- Continuous Variables -

- Discrete Variables -](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-17-320.jpg)
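The discrete form, $y[n] = \sum_a x[a]\, w[n - a]$, can be sketched directly in pure Python for finite sequences. The function name `convolve` and the sample values are illustrative assumptions. Samples outside either list are treated as zero.

```python
def convolve(x, w):
    """Full discrete convolution: y[n] = sum over a of x[a] * w[n - a]."""
    y = [0] * (len(x) + len(w) - 1)
    for n in range(len(y)):
        for a in range(len(x)):
            # Only keep terms whose kernel index n - a falls inside w.
            if 0 <= n - a < len(w):
                y[n] += x[a] * w[n - a]
    return y


print(convolve([1, 2, 3], [0, 1, 0.5]))  # [0, 1, 2.5, 4.0, 1.5]
```

The index `n - a` is what flips the kernel: as `a` increases, the kernel is read backwards.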

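![Convolution?](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-18-320.jpg)

Convolution?

- Discrete variables: $y[n] = \sum_{a} x[a]\, w[n - a]$

The summand is built in two steps: $x[a]\, w[a]$ → $x[a]\, w[-a]$ (Y-axis transformation: $w$ is mirrored about the y-axis) → $x[a]\, w[-(a - n)]$ (the mirrored $w$ is shifted by $n$).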

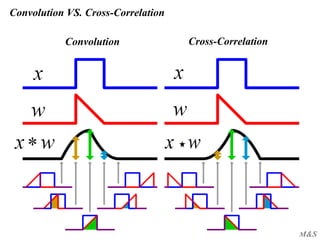

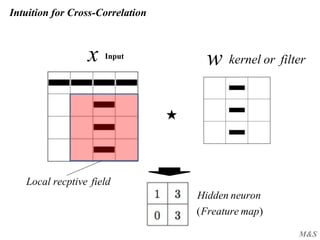

![Cross-Correlation?](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-20-320.jpg)

Cross-Correlation?

- Discrete variables (real-valued): $y[n] = (x \star w)[n] = \sum_{a} x[a]\, w[a + n]$

$x[a]\, w[a]$ → $x[a]\, w[a + n]$ (n step move)
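The cross-correlation sum, $y[n] = \sum_a x[a]\, w[a + n]$, can be evaluated one shift at a time. This is a minimal pure-Python sketch. The function name `cross_correlate_at`, the zero treatment of out-of-range samples, and the sample values are assumptions made for illustration.

```python
def cross_correlate_at(x, w, n):
    """y[n] = sum over a of x[a] * w[a + n]; samples outside w count as 0."""
    total = 0
    for a in range(len(x)):
        # The kernel is only shifted by n, never flipped.
        if 0 <= a + n < len(w):
            total += x[a] * w[a + n]
    return total


x = [1, 2, 3]
w = [0, 1, 0.5]
print([cross_correlate_at(x, w, n) for n in range(-2, 3)])
# [0, 3, 3.5, 2.0, 0.5]
```

Compared with convolution, the kernel index moves in the same direction as `a`, so the only operation applied to `w` is the n step move.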

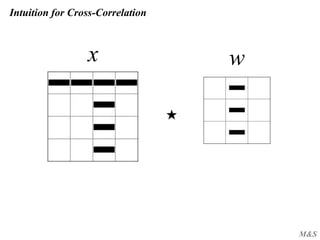

![Cross-Correlation in 2D](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-22-320.jpg)

Cross-Correlation in 2D

Output (y), Kernel (w), Input (x)

$y[i, j] = (x \star w)[i, j] = \sum_{m} \sum_{n} x[i + m, j + n]\, w[m, n]$
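A small pure-Python sketch of the 2D case may help make the double sum concrete. It computes only the positions where the kernel fits entirely inside the input (often called "valid" mode). The function name `cross_correlate_2d` and the 3×3 input and 2×2 kernel are illustrative assumptions.

```python
def cross_correlate_2d(x, w):
    """Valid 2D cross-correlation: y[i][j] = sum_m sum_n x[i+m][j+n] * w[m][n]."""
    out_h = len(x) - len(w) + 1
    out_w = len(x[0]) - len(w[0]) + 1
    y = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            for m in range(len(w)):
                for n in range(len(w[0])):
                    y[i][j] += x[i + m][j + n] * w[m][n]
    return y


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]
print(cross_correlate_2d(image, kernel))  # [[-4, -4], [-4, -4]]
```

The output is 2×2 because a 2×2 kernel fits into a 3×3 input at 3 - 2 + 1 = 2 positions along each axis.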

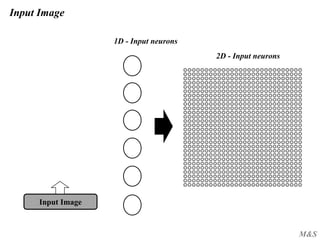

![Input Image](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-54-320.jpg)

Input Image

- Input images: 4D tensor $x^{ij}_{kl}$, where $[i, j, k, l]$ = [mini-batch size, number of input feature maps, image height, image width]

Figure: the 50,000 images in the training data are split into 100 mini-batches (Mini batch 1 to Mini batch 100), each holding 500 images of 28 × 28 pixels.
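The mini-batch arithmetic from the figure (50,000 training images in batches of 500) can be sketched as index ranges. The helper name `minibatch_slices` is an assumption for illustration. The single input feature map in `batch_shape` assumes grayscale images.

```python
def minibatch_slices(n_images, batch_size):
    """Return one (start, stop) index pair per full mini-batch."""
    n_batches = n_images // batch_size
    return [(k * batch_size, (k + 1) * batch_size) for k in range(n_batches)]


slices = minibatch_slices(50000, 500)
print(len(slices))  # 100
print(slices[0])    # (0, 500)
print(slices[-1])   # (49500, 50000)

# Shape of one mini-batch as a 4D tensor:
# (mini-batch size, input feature maps, image height, image width)
batch_shape = (500, 1, 28, 28)
```

Each pair selects the images that form one 4D input tensor, so the network sees 100 such tensors per pass over the training data.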

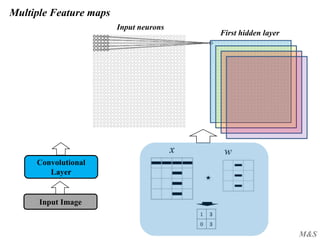

![Weight tensor](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-55-320.jpg)

Weight tensor

Diagram: Input Image → Convolutional Layer

- Weight: 4D tensor $W^{op}_{qr}$, where $[o, p, q, r]$ = [number of feature maps at layer m, number of feature maps at layer m-1, filter height, filter width]
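One consequence of the 4D weight layout is that the number of trainable values in a convolutional layer follows directly from the shape. A minimal sketch, assuming one bias per output feature map. The helper name `count_parameters` and the two example shapes are illustrative.

```python
def count_parameters(weight_shape):
    """Return (number of weights, number of biases) for a 4D weight shape.

    weight_shape = (feature maps at layer m, feature maps at layer m-1,
                    filter height, filter width)
    """
    out_maps, in_maps, f_height, f_width = weight_shape
    n_weights = out_maps * in_maps * f_height * f_width
    n_biases = out_maps  # assumption: one bias per output feature map
    return n_weights, n_biases


print(count_parameters((20, 1, 5, 5)))   # (500, 20)
print(count_parameters((50, 20, 5, 5)))  # (25000, 50)
```

The count does not depend on the image size, only on the filter size and the number of feature maps on each side of the layer.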

![Exercise for Input and Weight tensor](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-56-320.jpg)

Exercise for Input and Weight tensor

Diagram: Input layer → Convolutional layer 1 → Convolutional layer 2

- $x^{11}_{11}$: $[i, j, k, l]$ = [1, 1, 1, 1]
- $W^{11}_{11}$: $[o, p, q, r]$ = [1, 1, 1, 1]

![Code for Convolutional Layer](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-57-320.jpg)

Code for Convolutional Layer

`def evaluate_lenet5(learning_rate=0.1, n_epochs=2, dataset='mnist.pkl.gz', nkerns=[20, 50], batch_size=500):`

Layer0 – Convolutional layer 1 (`LeNetConvPoolLayer` class)

- `image_shape=(batch_size, 1, 28, 28)`
- `filter_shape=(nkerns[0], 1, 5, 5)`
- `poolsize=(2, 2)`

Layer1 – Convolutional layer 2 (`LeNetConvPoolLayer` class)

- `image_shape=(batch_size, nkerns[0], 12, 12)`
- `filter_shape=(nkerns[1], nkerns[0], 5, 5)`
- `poolsize=(2, 2)`

Feature map sizes through the two layers:

- Convolution: 28 - 5 + 1 = 24 (20 feature maps of 24 × 24)
- Pooling: 24 / 2 = 12 (20 feature maps of 12 × 12)
- Convolution: 12 - 5 + 1 = 8 (50 feature maps of 8 × 8)
- Pooling: 8 / 2 = 4 (50 feature maps of 4 × 4)
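The size arithmetic above (image size minus filter size plus one for a convolution, then division by the pool size) can be traced with a short pure-Python sketch. The function names are illustrative assumptions. The sketch also assumes square images, square filters, no zero-padding, and non-overlapping pooling.

```python
def conv_output_size(size, filter_size):
    """Side length after a convolution without zero-padding."""
    return size - filter_size + 1


def pool_output_size(size, pool_size):
    """Side length after non-overlapping pooling."""
    return size // pool_size


def trace_sizes(size, filter_size, pool_size, n_layers):
    """List the side length after every convolution and pooling step."""
    sizes = [size]
    for _ in range(n_layers):
        size = conv_output_size(size, filter_size)
        sizes.append(size)
        size = pool_output_size(size, pool_size)
        sizes.append(size)
    return sizes


print(trace_sizes(28, 5, 2, 2))  # [28, 24, 12, 8, 4]
```

The 12 in the trace is the value passed as the image height and width in the second layer's `image_shape`, and the final 4 is what the next stage receives per feature map.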

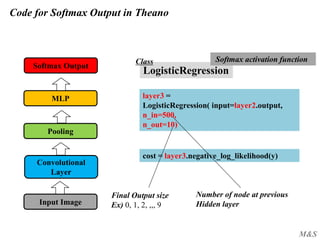

![Code for MLP in Theano](https://image.slidesharecdn.com/cnnpresentation-160427115016/85/Convolutional-Neural-Network-CNN-presentation-from-theory-to-code-in-Theano-85-320.jpg)

Code for MLP in Theano

Diagram: Input Image → Convolutional Layer → Pooling → MLP

`layer2 = HiddenLayer(rng, input=layer2_input, n_in=nkerns[1] * 4 * 4, n_out=500, activation=T.tanh)`

- `HiddenLayer`: class
- `n_in`: last output size for C+P (convolution + pooling)
- `n_out`: number of nodes at the hidden layer
- `activation`: activation function at the hidden layer

***To extend the number of hidden layers in the MLP, make a layer3 by copying this code.***
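To make the role of `n_in`, `n_out`, and the activation concrete, here is a minimal pure-Python sketch of a fully connected tanh layer. It is not Theano's `HiddenLayer` class. The function name `hidden_layer`, the tiny weights, and the stacking of a second layer are assumptions chosen so the numbers can be checked by hand.

```python
import math


def hidden_layer(inputs, weights, biases, activation=math.tanh):
    """outputs[k] = activation(sum_i inputs[i] * weights[i][k] + biases[k]).

    weights has n_in rows and n_out columns; biases has n_out entries.
    """
    return [
        activation(
            sum(inputs[i] * weights[i][k] for i in range(len(inputs))) + biases[k]
        )
        for k in range(len(biases))
    ]


# n_in for the first hidden layer: 50 feature maps of 4 x 4 after pooling.
nkerns = [20, 50]
n_in = nkerns[1] * 4 * 4
print(n_in)  # 800

# Tiny hypothetical example: 2 inputs -> 3 hidden units -> 1 hidden unit.
x = [1.0, -1.0]
w2 = [[0.5, 0.0, -0.5],
      [0.5, 1.0, 0.5]]
b2 = [0.0, 0.0, 0.0]
h2 = hidden_layer(x, w2, b2)

# A further hidden layer reuses the same function on the previous output.
w3 = [[1.0], [1.0], [1.0]]
b3 = [0.0]
h3 = hidden_layer(h2, w3, b3)
print(len(h2), len(h3))  # 3 1
```

Stacking works because the `n_out` of one layer becomes the `n_in` of the next, which is the constraint to respect when adding a layer3.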