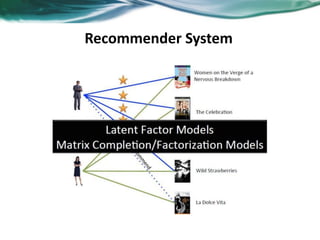

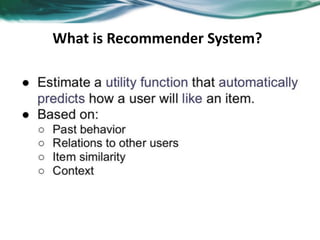

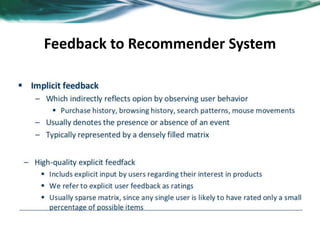

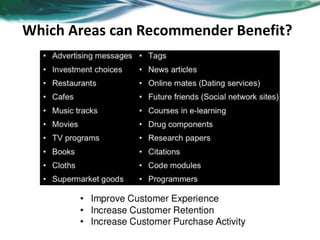

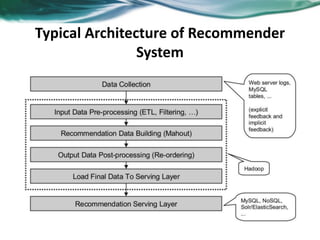

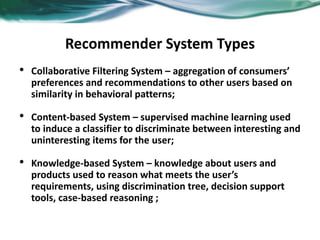

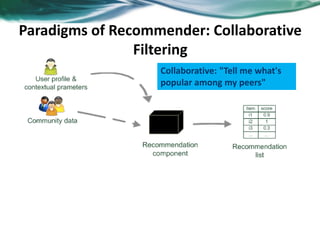

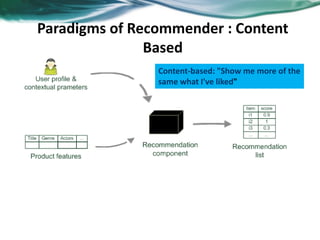

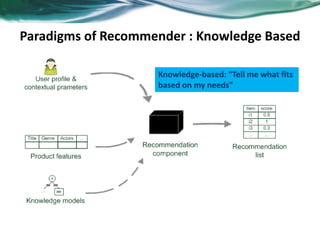

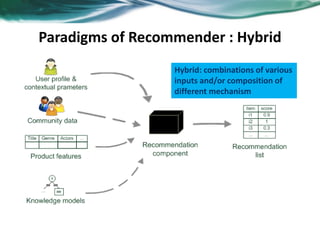

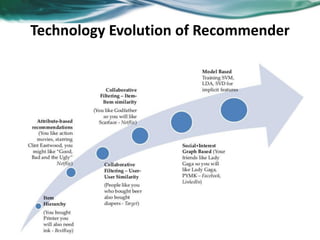

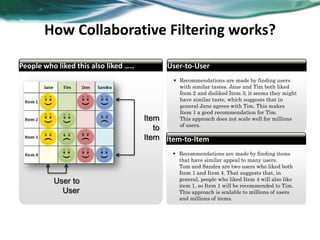

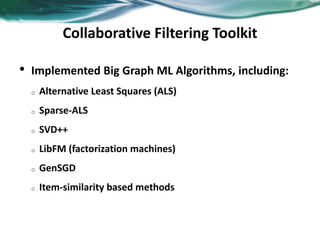

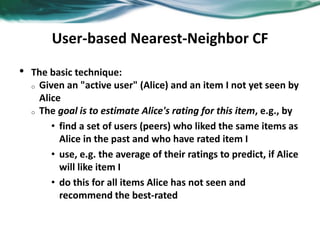

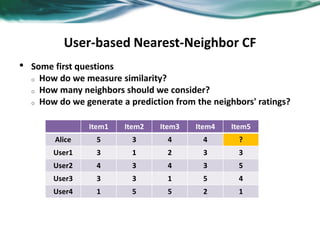

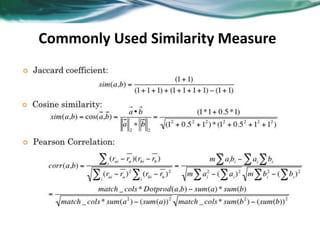

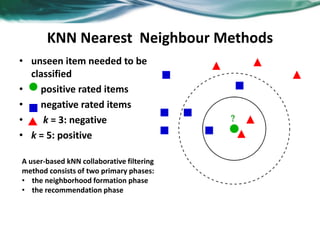

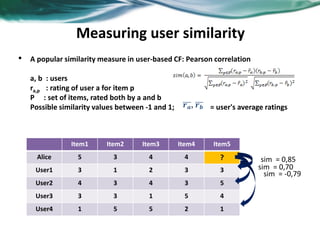

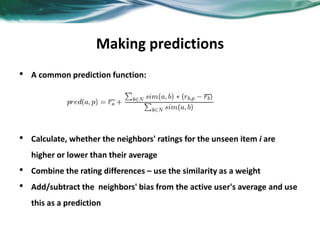

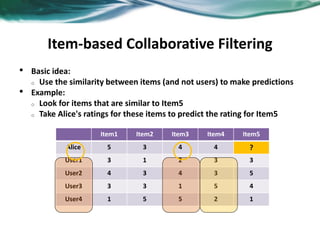

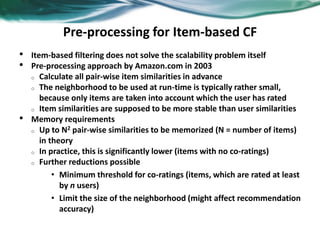

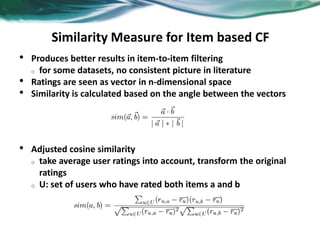

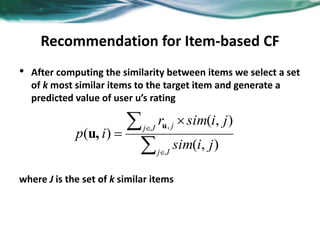

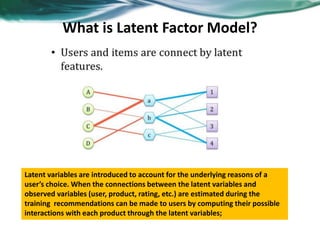

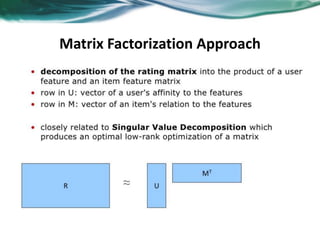

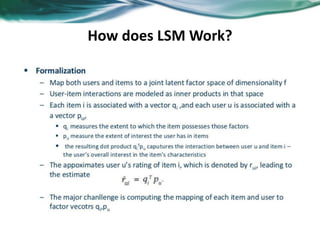

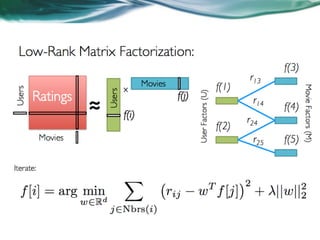

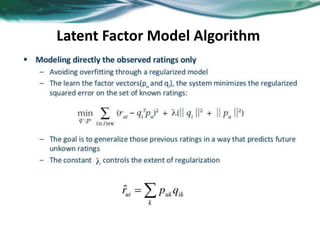

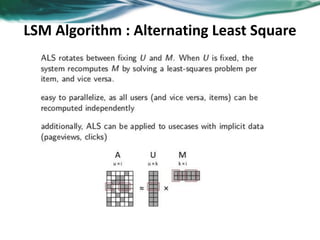

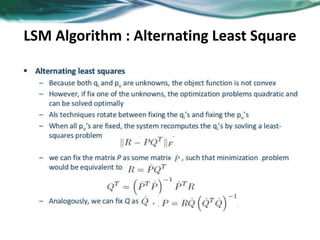

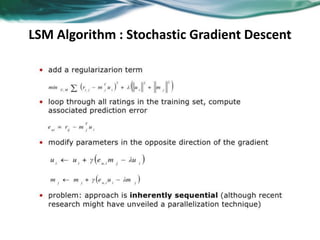

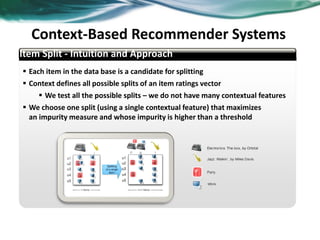

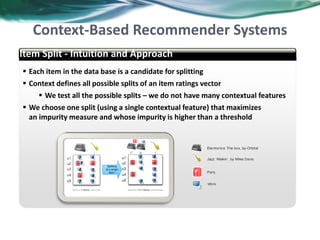

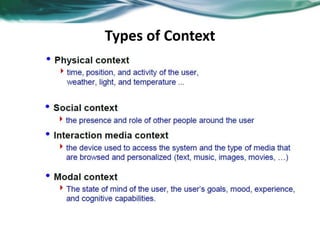

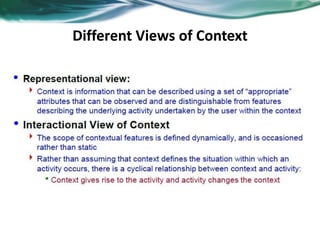

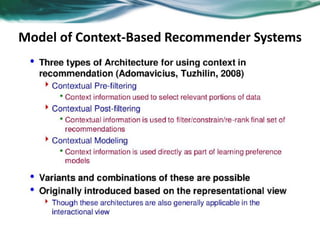

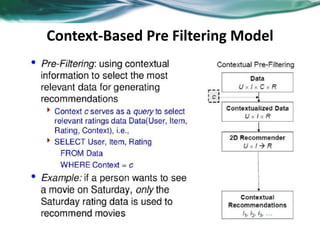

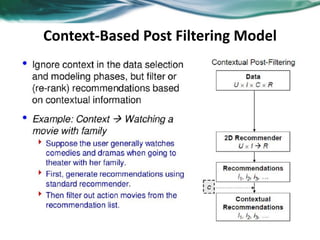

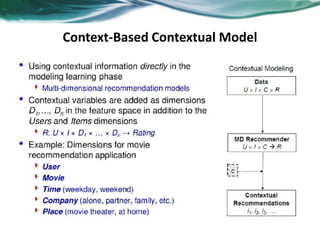

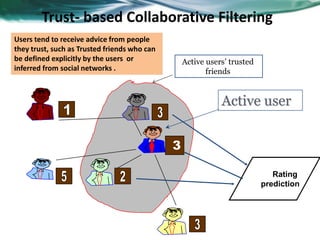

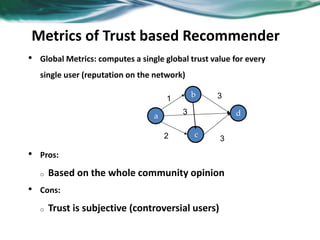

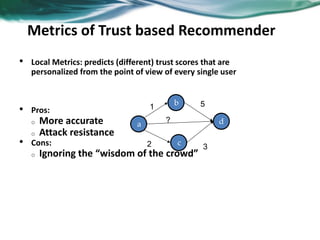

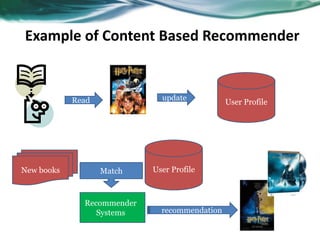

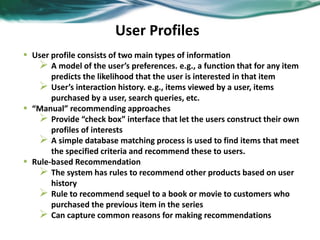

The document provides an overview of recommender systems. It describes their typical architecture and the main paradigms: collaborative filtering, content-based, and knowledge-based systems, along with hybrid systems that combine them. It then focuses on collaborative filtering techniques, namely user-based nearest-neighbor and item-based collaborative filtering, and goes on to discuss latent factor models, matrix factorization approaches, and context-based recommender systems.
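To make the user-based nearest-neighbor idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the slides' own formulation: the `ratings` data is invented, and the specific choices (Pearson correlation over co-rated items, keeping only positively correlated neighbors, a mean-centered weighted average, `k=2`) are common textbook options picked for this example.

```python
from math import sqrt

# Hypothetical toy data: user -> {item: rating on a 1-5 scale}.
ratings = {
    "alice": {"item1": 5, "item2": 3, "item3": 4},
    "bob":   {"item1": 4, "item2": 2, "item3": 5, "item4": 4},
    "carol": {"item1": 1, "item2": 5, "item4": 2},
}

def mean(user):
    """Average of all ratings given by `user`."""
    vals = ratings[user].values()
    return sum(vals) / len(vals)

def pearson(u, v):
    """Pearson correlation between users u and v over their co-rated items."""
    common = set(ratings[u]) & set(ratings[v])
    if len(common) < 2:
        return 0.0  # too little overlap to estimate a correlation
    mu, mv = mean(u), mean(v)
    num = sum((ratings[u][i] - mu) * (ratings[v][i] - mv) for i in common)
    du = sqrt(sum((ratings[u][i] - mu) ** 2 for i in common))
    dv = sqrt(sum((ratings[v][i] - mv) ** 2 for i in common))
    if du == 0 or dv == 0:
        return 0.0
    return num / (du * dv)

def predict(user, item, k=2):
    """Predict `user`'s rating for `item` from the k most similar users
    who rated it (assumption: only positively correlated neighbors count)."""
    candidates = [
        (pearson(user, v), v)
        for v in ratings
        if v != user and item in ratings[v]
    ]
    neighbors = sorted((c for c in candidates if c[0] > 0), reverse=True)[:k]
    if not neighbors:
        return mean(user)  # fall back to the user's own average
    # Weighted average of each neighbor's deviation from their own mean.
    num = sum(sim * (ratings[v][item] - mean(v)) for sim, v in neighbors)
    den = sum(sim for sim, _ in neighbors)
    return mean(user) + num / den

print(predict("alice", "item4"))
```

With this toy data, only `bob` correlates positively with `alice`, so the prediction is Alice's mean rating (4.0) shifted by Bob's deviation on `item4` (+0.25), giving roughly 4.25. Item-based collaborative filtering follows the same pattern with the roles swapped: similarities are computed between items rather than between users.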