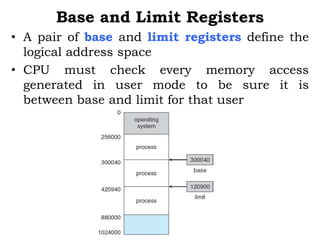

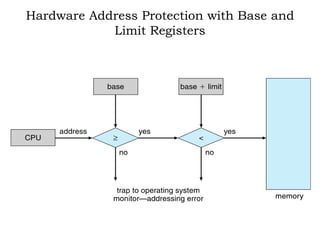

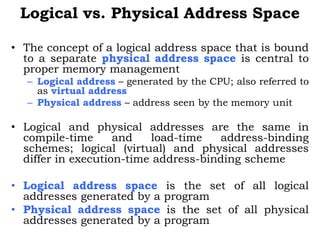

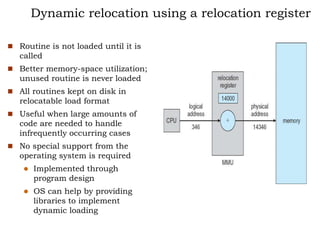

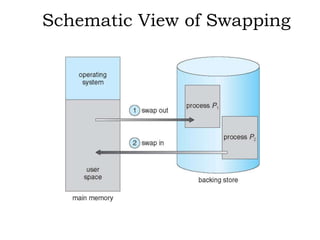

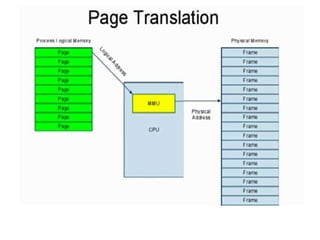

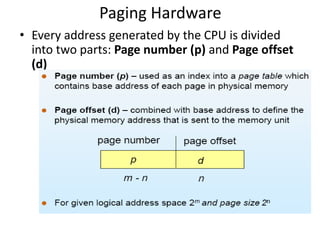

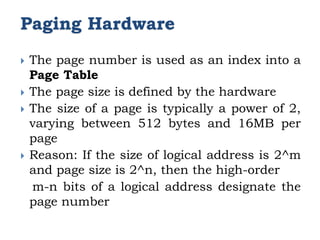

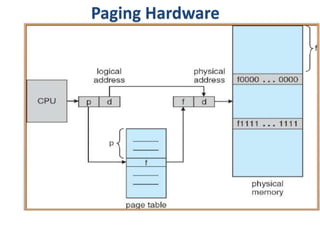

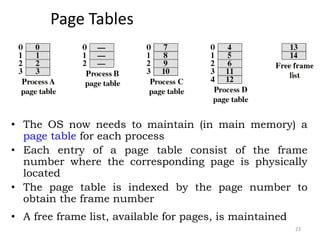

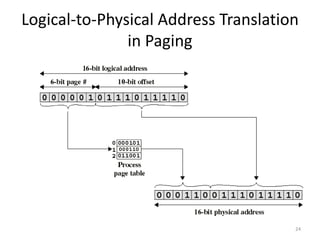

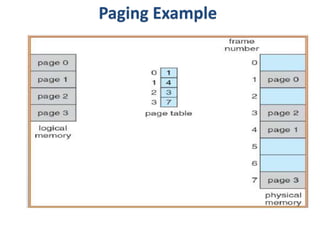

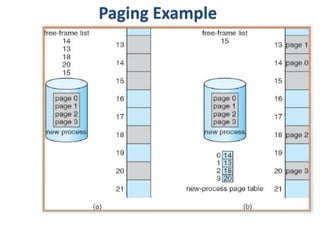

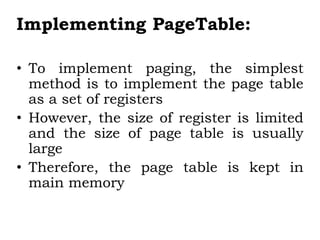

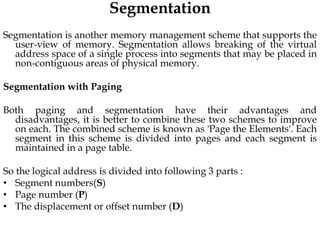

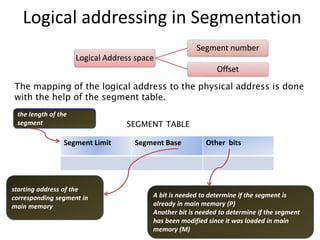

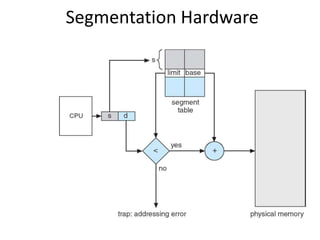

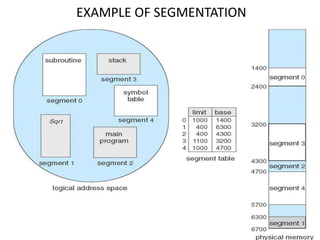

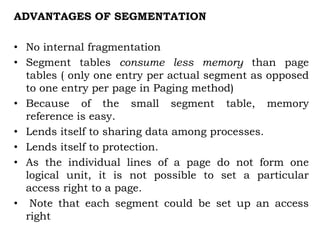

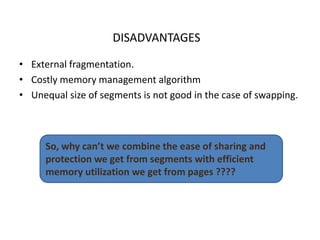

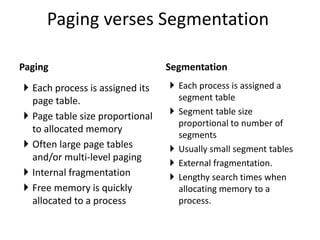

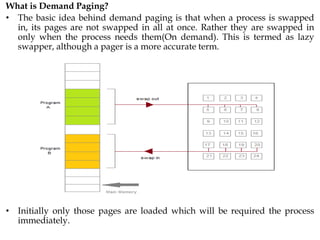

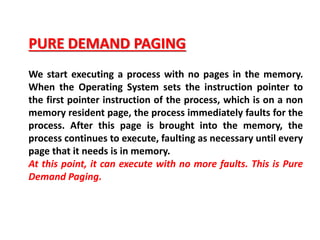

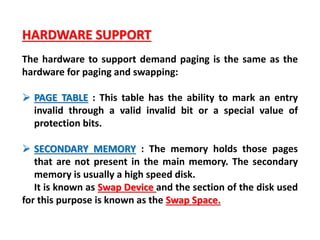

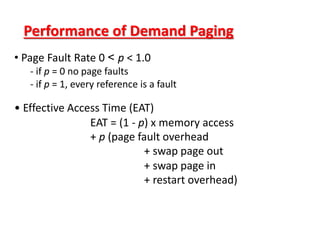

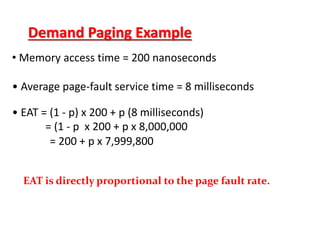

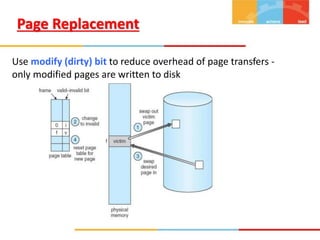

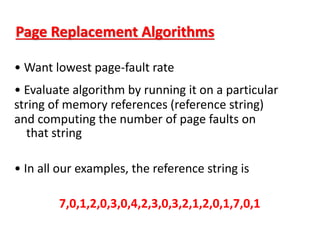

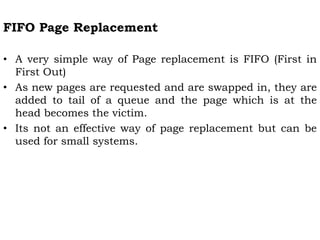

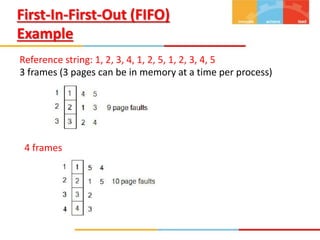

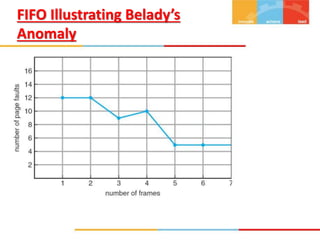

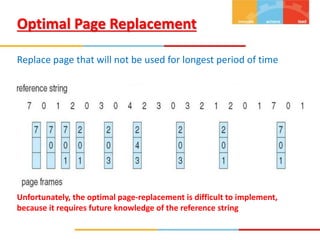

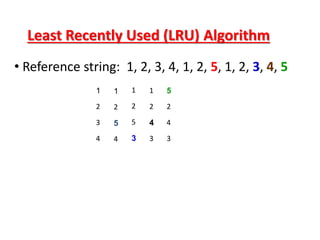

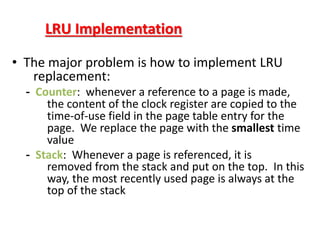

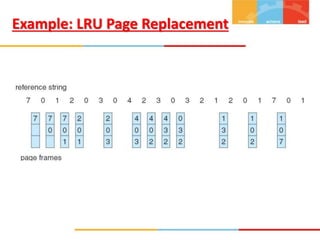

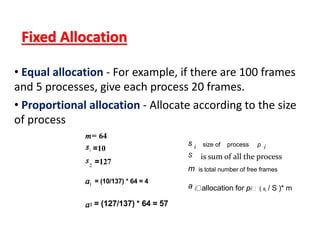

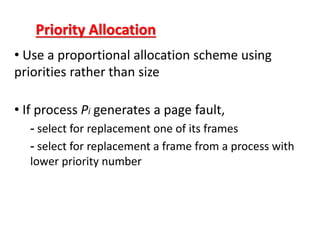

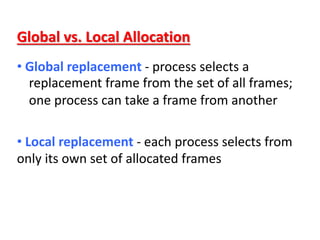

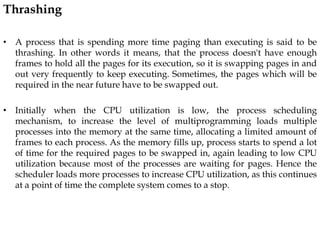

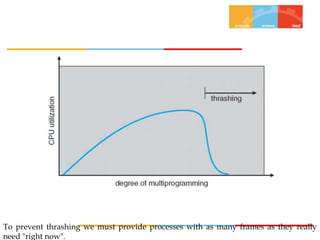

The document discusses various topics related to memory management in operating systems including swapping, contiguous memory allocation, paging, segmentation, virtual memory concepts like demand paging, page replacement, and thrashing. It provides details on page tables, segmentation hardware, logical to physical address translation, and performance aspects of demand paging. The key aspects covered are memory management techniques to overcome fragmentation and enable efficient use of limited main memory.