This document outlines strategies for tuning program performance on POWER9 processors. It discusses how performance bottlenecks can arise in the processor front-end and back-end and describes some compiler flags, pragmas, and source code techniques for addressing these bottlenecks. These include techniques like unrolling, inlining, prefetching, parallelization with OpenMP, and leveraging GPUs with OpenACC. Hands-on exercises are provided to demonstrate applying these optimizations to a Jacobi application and measuring the performance impacts.

![17

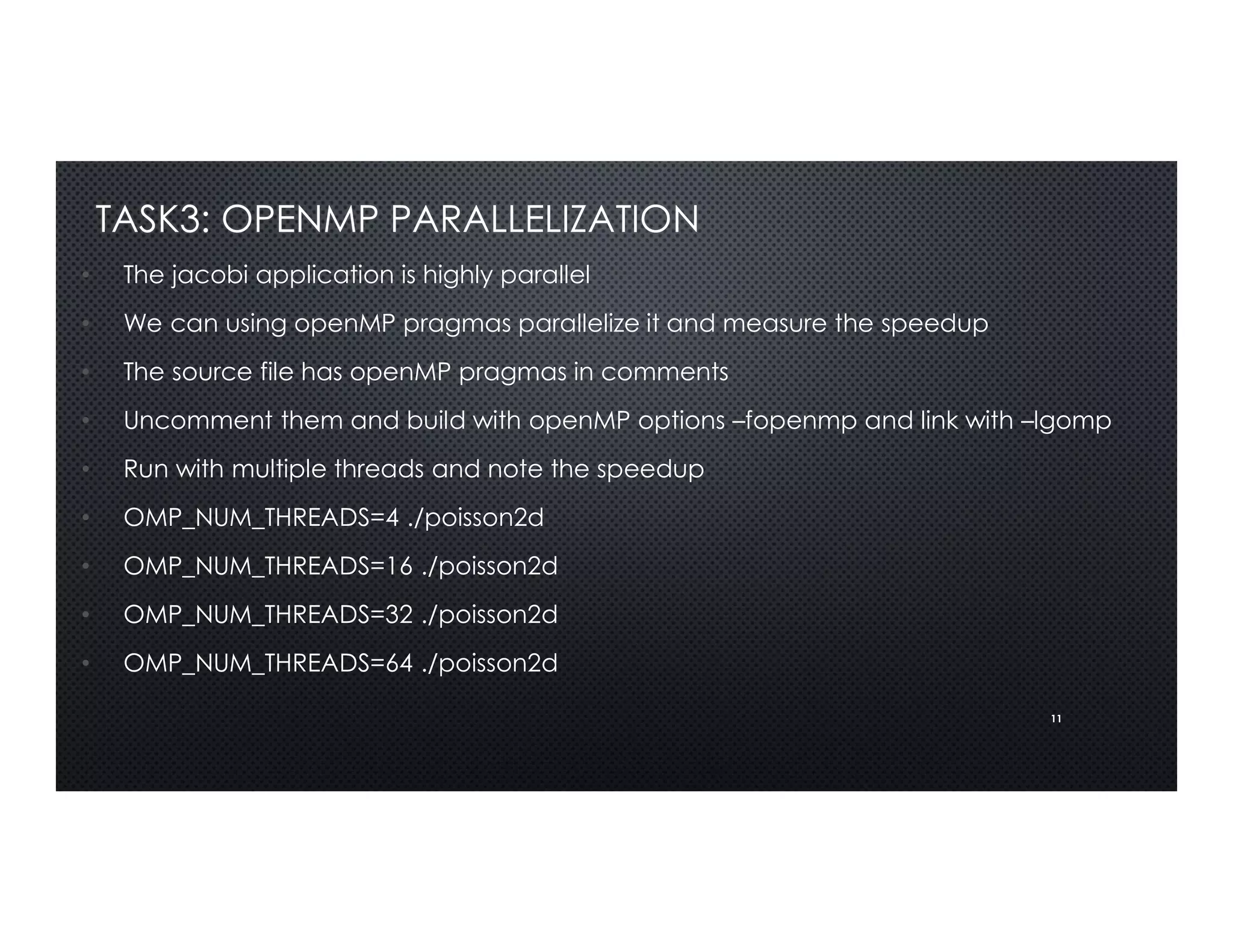

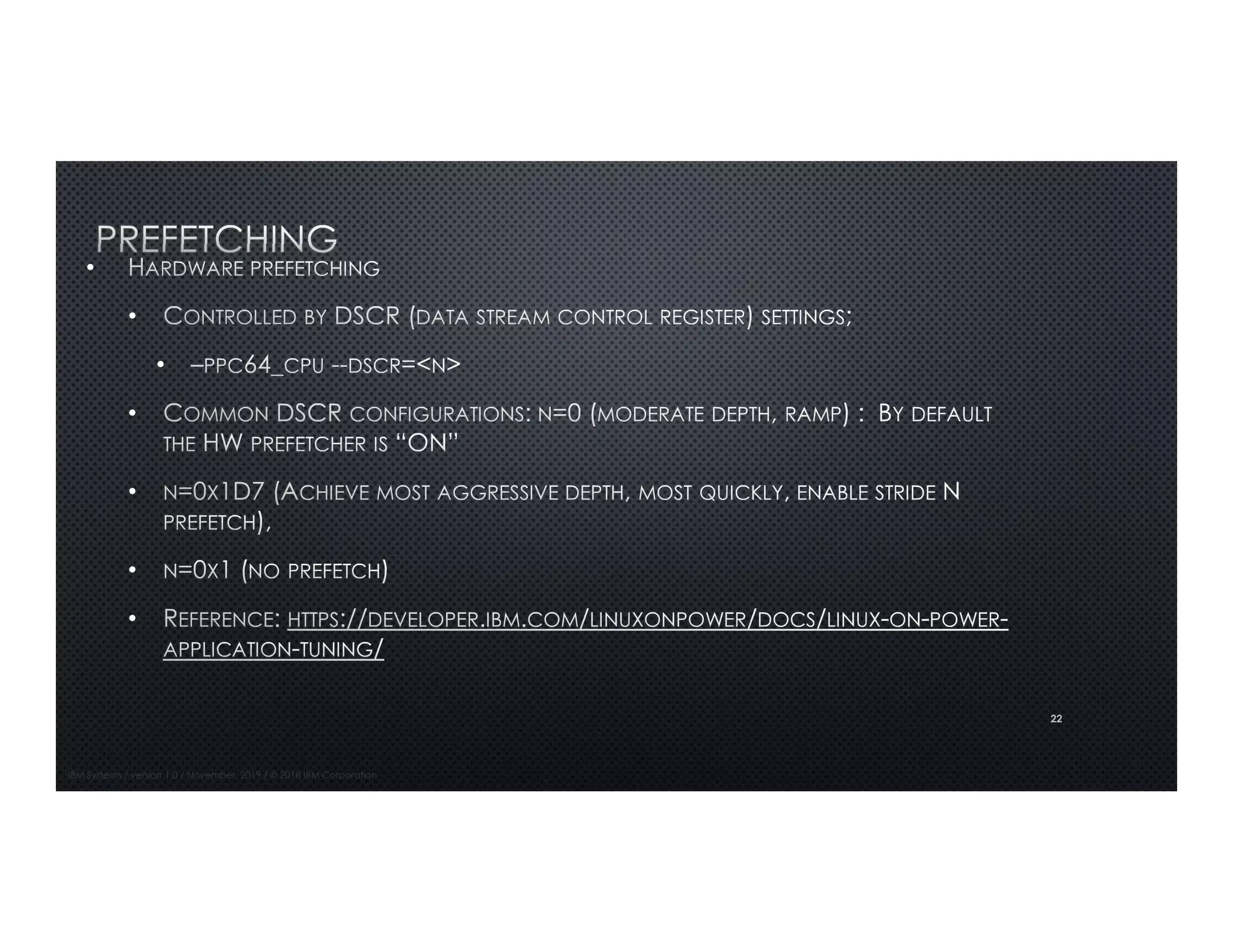

TASK3.1: OPENMP PARALLELIZATION

• Running the openMP parallel version you will see speedups with increasing number of OMP_NUM_THREADS

• [student02@gorgon Task3]$ OMP_NUM_THREADS=1 ./poisson2d

• 1000x1000: Ref: 2.3467 s, This: 2.5508 s, speedup: 0.92

• [student02@gorgon Task3]$ OMP_NUM_THREADS=4 ./poisson2d

• 1000x1000: Ref: 2.3309 s, This: 0.6394 s, speedup: 3.65

• [student02@gorgon Task3]$ OMP_NUM_THREADS=16 ./poisson2d

• 1000x1000: Ref: 2.3309 s, This: 0.6394 s, speedup: 4.18

• Likewise if you bind threads across different cores you will see greater speedup

• [student02@gorgon Task3]$ OMP_PLACES="{0},{1},{2},{3}" OMP_NUM_THREADS=4 ./poisson2d

• 1000x1000: Ref: 2.3490 s, This: 1.9622 s, speedup: 1.20

• [student02@gorgon Task3]$ OMP_PLACES="{0},{5},{10},{15}" OMP_NUM_THREADS=4 ./poisson2d

• 1000x1000: Ref: 2.3694 s, This: 0.6735 s, speedup: 3.52](https://image.slidesharecdn.com/openpoweriiscdec4-v1-191218233428/75/OpenPOWER-Application-Optimization-17-2048.jpg)

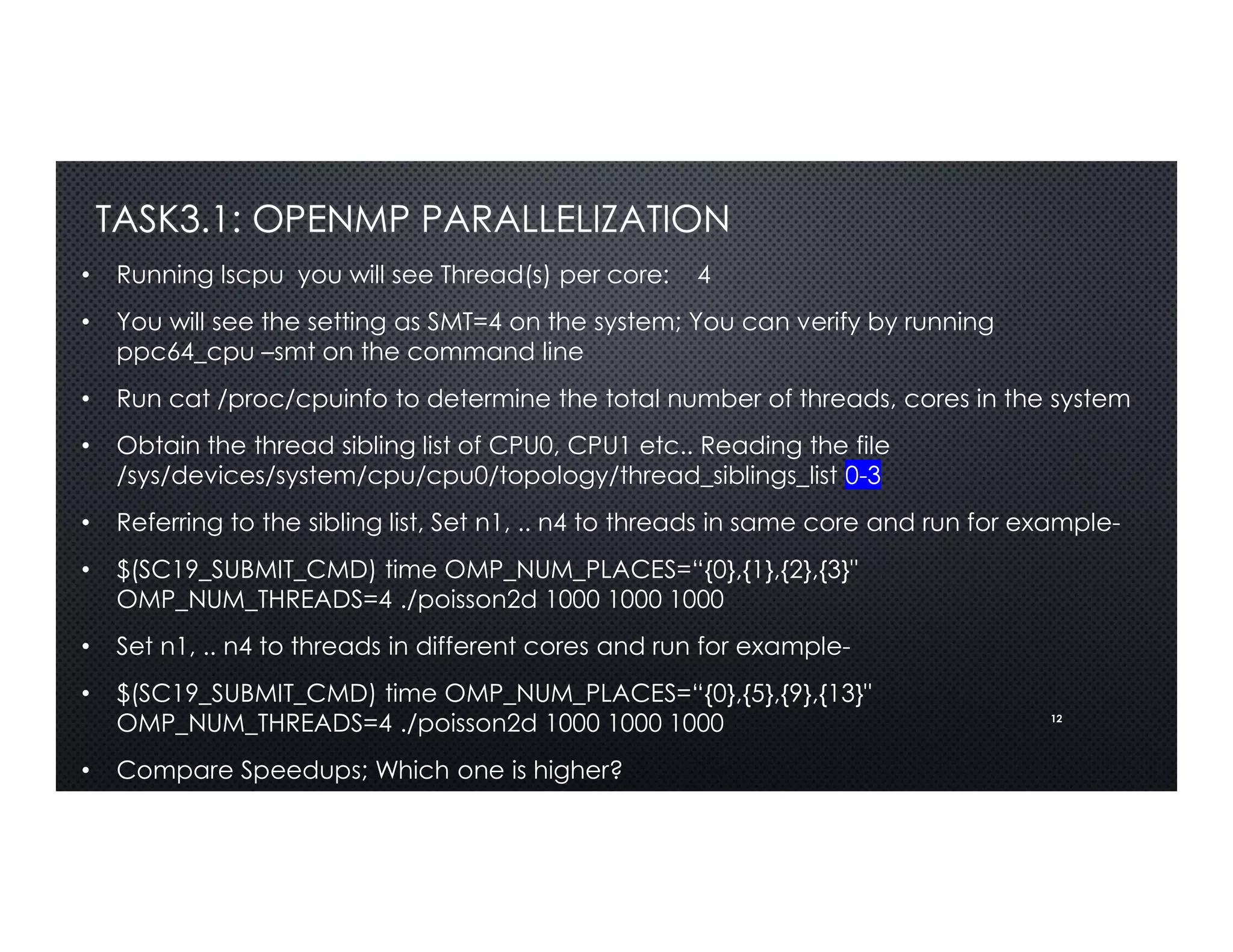

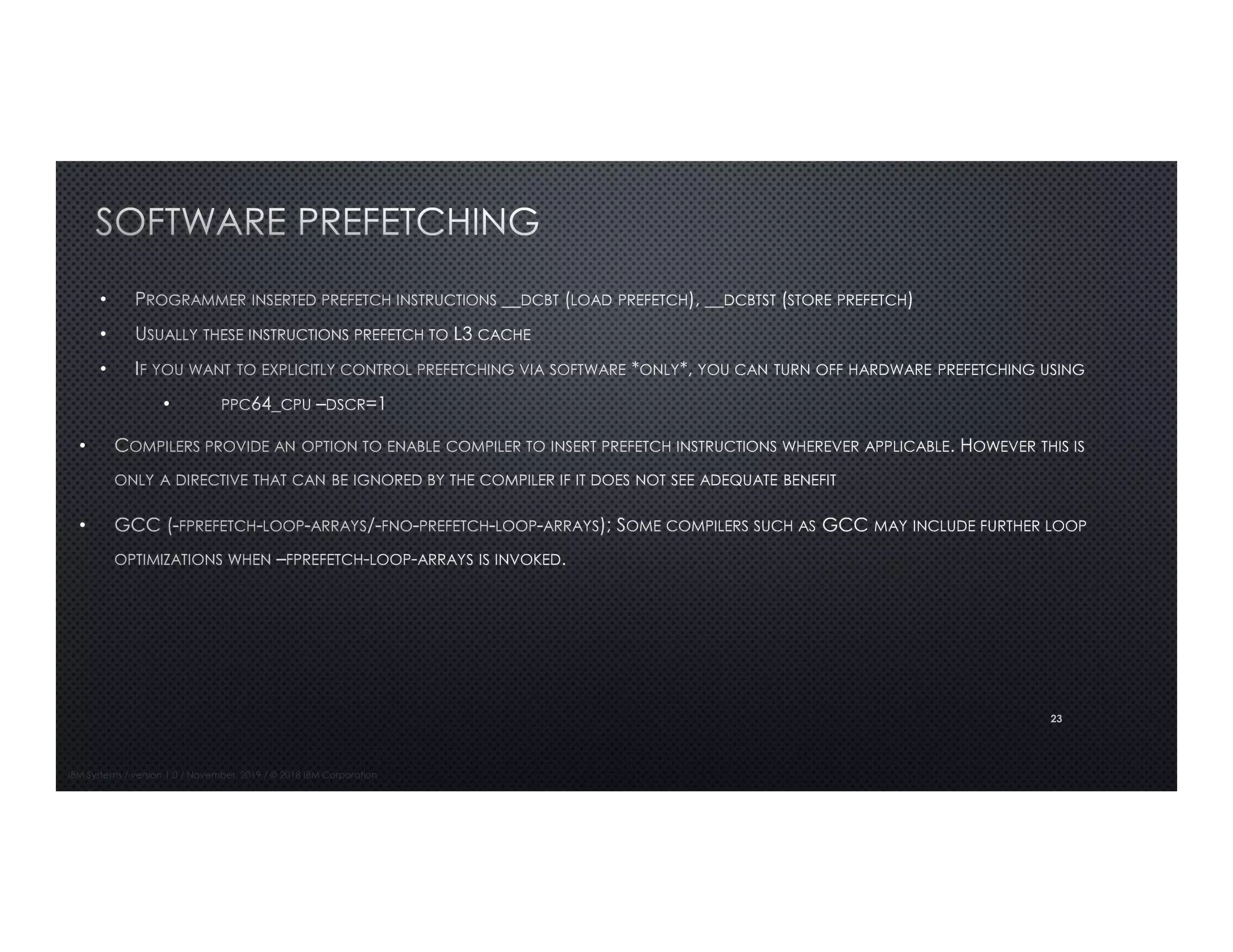

![18

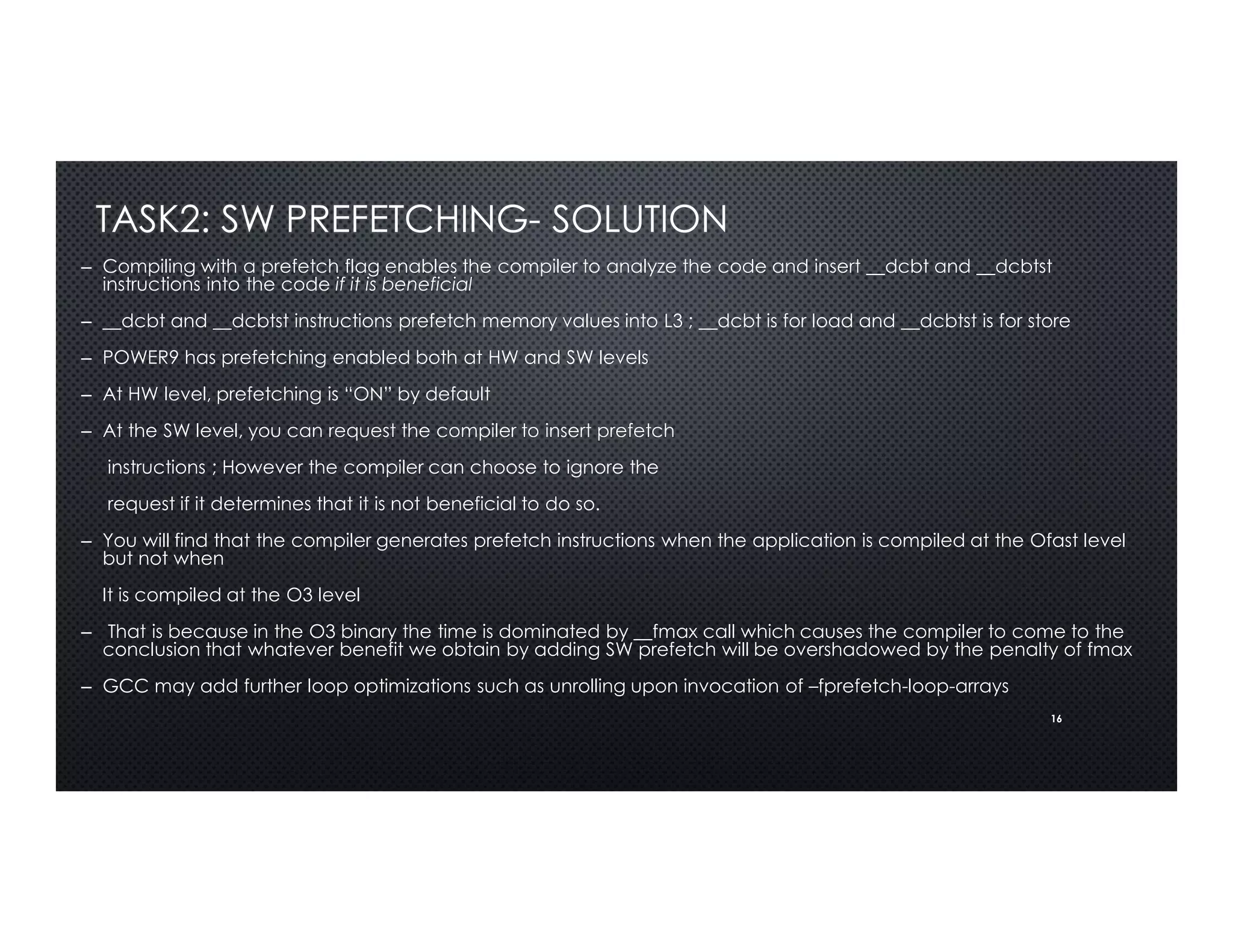

TASK4: ACCELERATE USING GPUS

• Building and running poisson2d as it is, you will see no speedups

• [student02@gorgon Task4]$ make poisson2d

• /opt/pgi/linuxpower/19.10/bin/pgcc -c -DUSE_DOUBLE -Minfo=accel -fast -acc -ta=tesla:cc70,managed poisson2d_serial.c -o

poisson2d_serial.o

• /opt/pgi/linuxpower/19.10/bin/pgcc -DUSE_DOUBLE -Minfo=accel -fast -acc -ta=tesla:cc70,managed poisson2d.c poisson2d_serial.o -

o poisson2d

• [student02@gorgon Task4]$ ./poisson2d

• ….

• 2048x2048: 1 CPU: 5.0743 s, 1 GPU: 4.9631 s, speedup: 1.02

• If you build poisson2d.solution which is the same as poisson2d.c with the OpenACC pragmas and run them on the platform which will

accelerate by pushing the parallel portions to the GPU you will see a massive speedup

• [student02@gorgon Task4]$ make poisson2d.solution

• /opt/pgi/linuxpower/19.10/bin/pgcc -DUSE_DOUBLE -Minfo=accel -fast -acc -ta=tesla:cc70,managed poisson2d.solution.c

poisson2d_serial.o -o poisson2d.solution

• [student02@gorgon Task4]$ ./poisson2d.solution

• 2048x2048: 1 CPU: 5.0941 s, 1 GPU: 0.1811 s, speedup: 28.13](https://image.slidesharecdn.com/openpoweriiscdec4-v1-191218233428/75/OpenPOWER-Application-Optimization-18-2048.jpg)

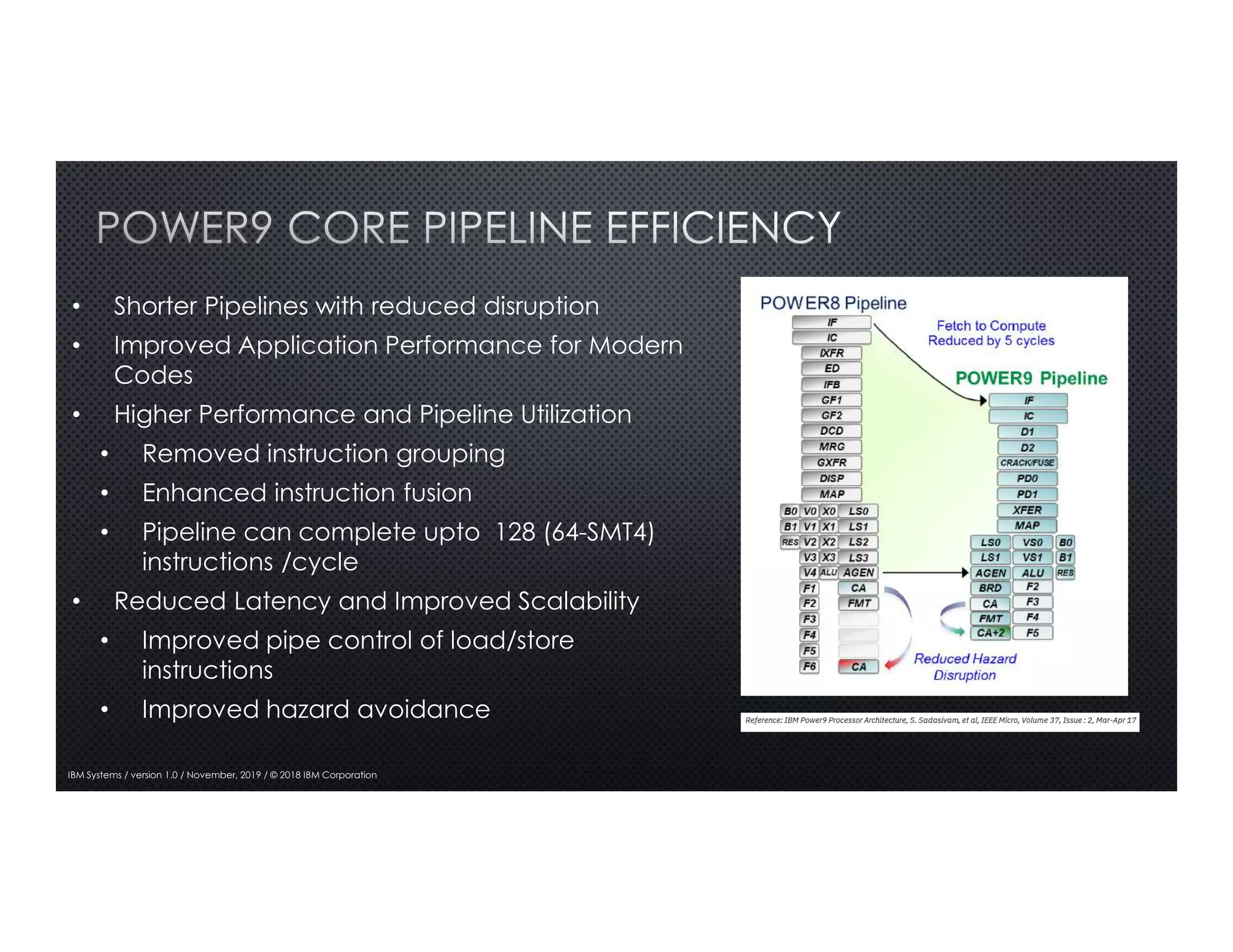

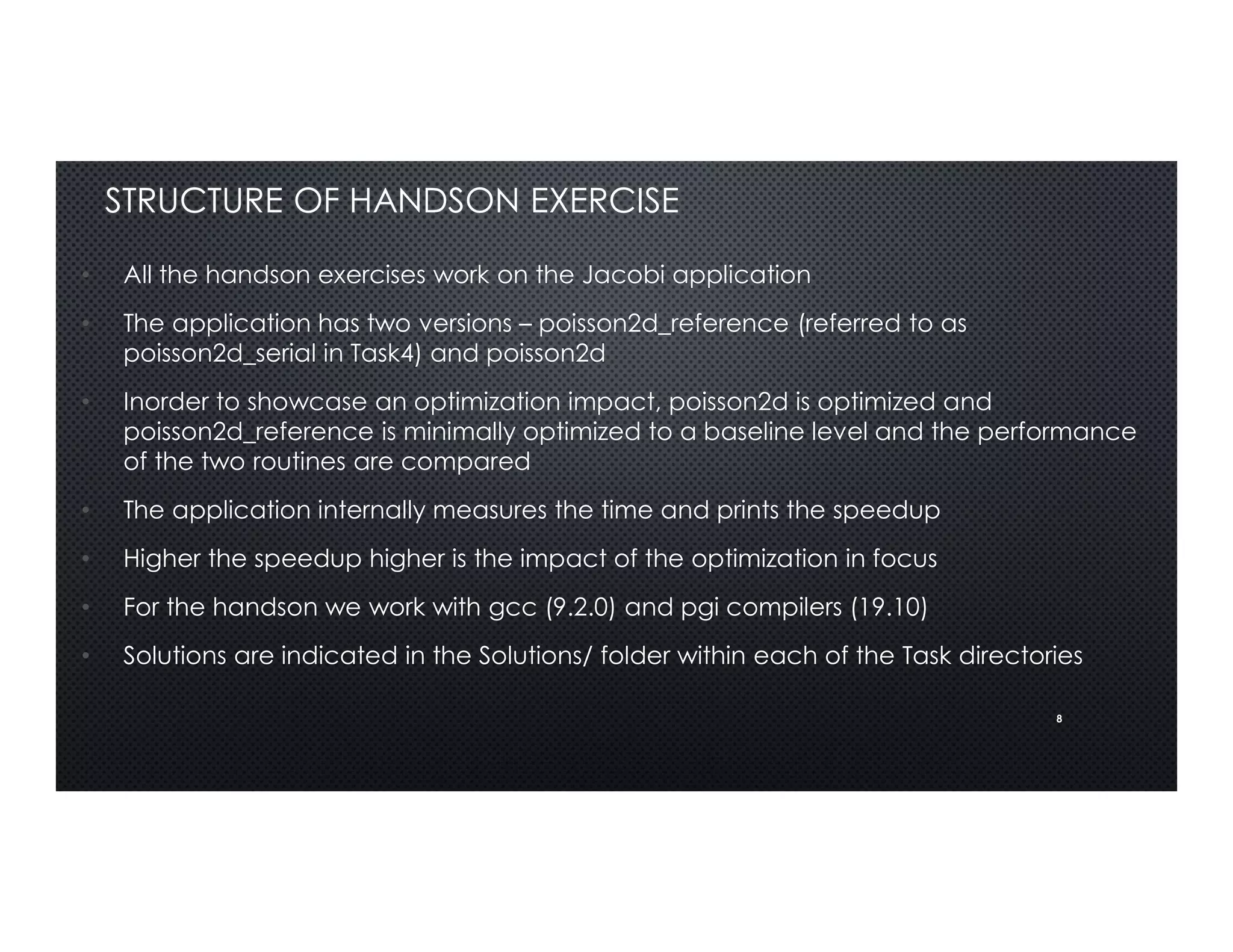

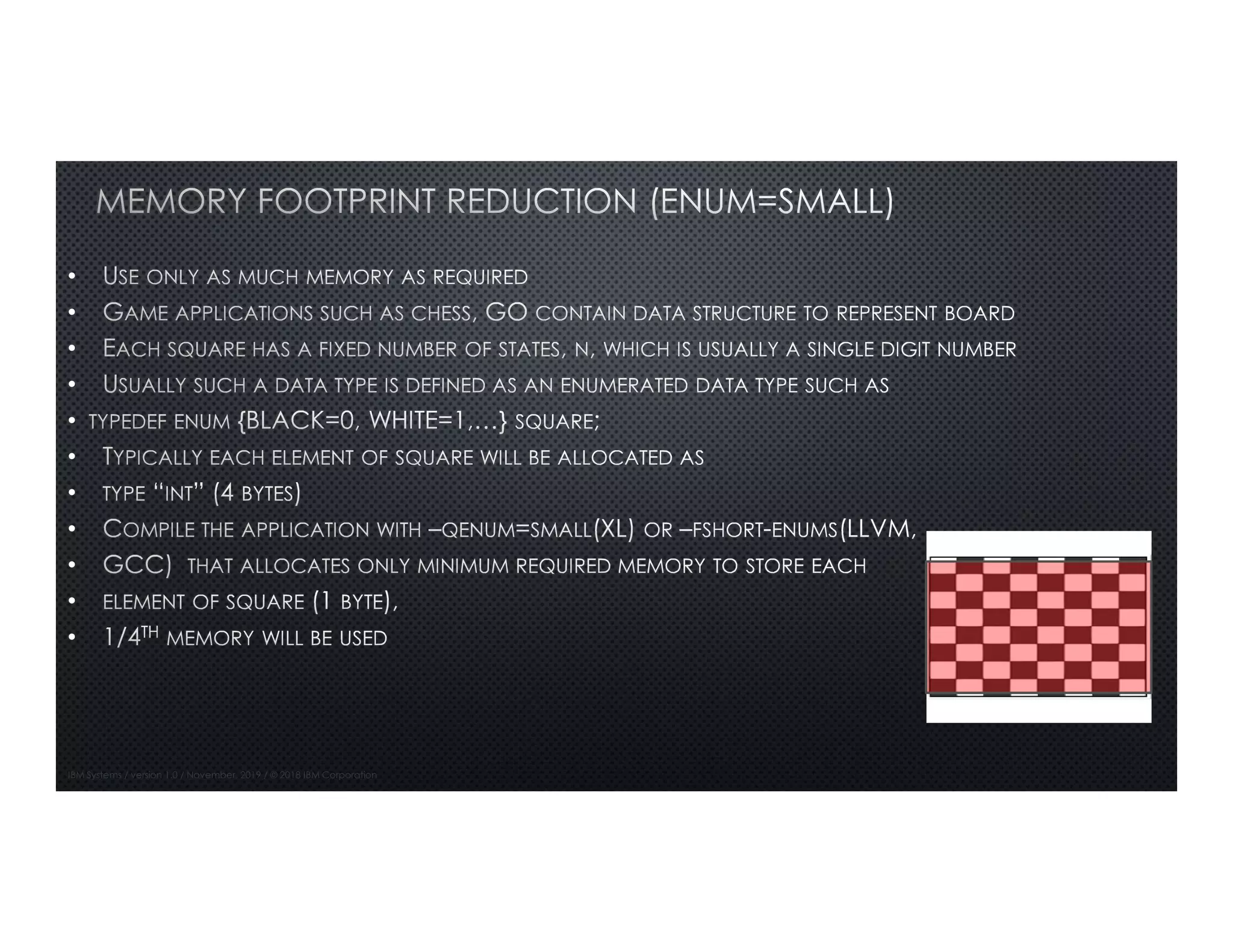

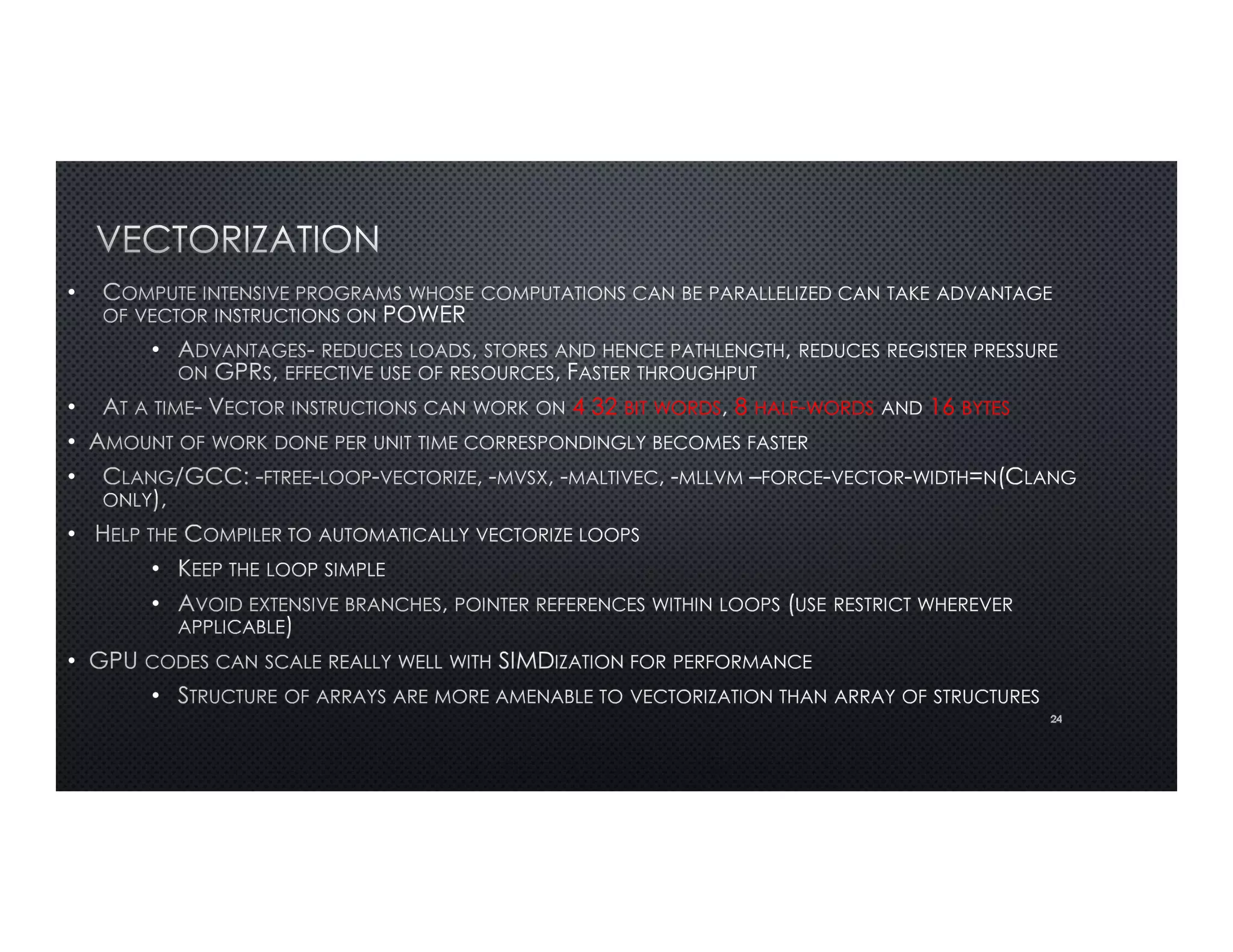

![25

Flag Kind XL GCC/LLVM

Can be simulated

in source

Benefit Drawbacks

Unrolling -qunroll -funroll-loops

#pragma

unroll(N)

Unrolls loops ; increases

opportunities pertaining to

scheduling for compiler Increases register pressure

Inlining -qinline=auto:level=N -finline-functions

Inline always

attribute or

manual inlining

increases opportunities for

scheduling; Reduces

branches and loads/stores

Increases register

pressure; increases code

size

Enum small -qenum=small -fshort-enums -manual typedef Reduces memory footprint

Can cause issues in

alignment

isel

instructions -misel Using ?: operator

generates isel instruction

instead of branch;

reduces pressure on branch

predictor unit

latency of isel is a bit

higher; Use if branches

are not predictable easily

General

tuning

-qarch=pwr9,

-qtune=pwr9

-mcpu=power8,

-mtune=power9

Turns on platform specific

tuning

64bit

compilation-q64 -m64

Prefetching

-

qprefetch[=aggressiv

e] -fprefetch-loop-arrays

__dcbt/__dcbtst,

_builtin_prefetch reduces cache misses

Can increase memory

traffic particularly if

prefetched values are not

used

Link time

optimizatio

n -qipo -flto , -flto=thin

Enables Interprocedural

optimizations

Can increase overall

compilation time

Profile

directed

-fprofile-generate and

–fprofile-use LLVM has

an intermediate step](https://image.slidesharecdn.com/openpoweriiscdec4-v1-191218233428/75/OpenPOWER-Application-Optimization-25-2048.jpg)