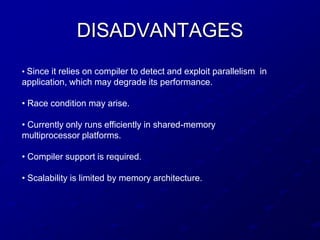

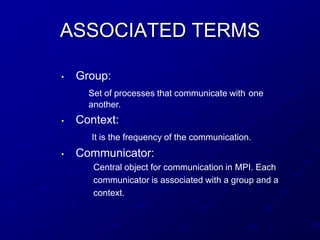

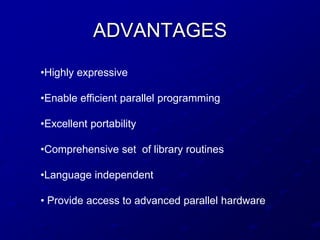

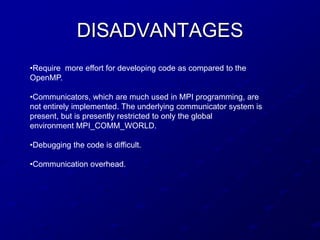

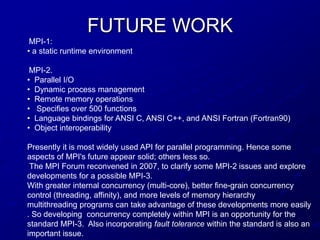

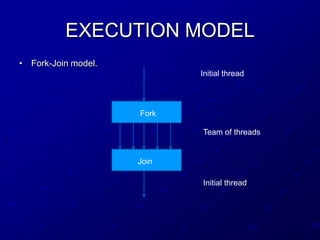

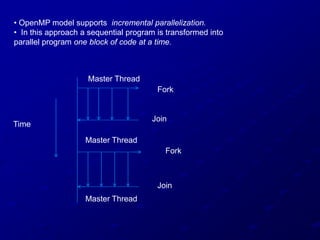

OpenMP and MPI are two common APIs for parallel programming. OpenMP uses a shared memory model where threads have access to shared memory and can synchronize access. It is best for multi-core processors. MPI uses a message passing model where separate processes communicate by exchanging messages. It provides portability and is useful for distributed memory systems. Both have advantages like performance and portability but also disadvantages like difficulty of debugging for MPI. Future work may include improvements to threading support and fault tolerance in MPI.

![DIRECTIVES

• Parallel construct

#pragma omp parallel [clause,clause,…]

structured block

• Work sharing constuct

• Loop construct

#pragma omp for [clause, clause,…]

for loop

• Section construct

#pragma omp sections [clause,clause,…]

{

#pragma omp section

structured block

#pragma omp section

structured block

}](https://image.slidesharecdn.com/2012cs13aca-121212043702-phpapp01/85/MPI-n-OpenMP-8-320.jpg)

![•Single construct

#pragma omp single [clause, clause,…]

structured block

•Work sharing construct

• Combined parallel work sharing construct

#pragma omp parallel

{ #pragma omp parallel for

#pragma omp for for loop

for loop

}](https://image.slidesharecdn.com/2012cs13aca-121212043702-phpapp01/85/MPI-n-OpenMP-9-320.jpg)

![SYNCHRONIZATION

CONSTRUCTS

• Barrier construct

#pragma omp barrier

• Ordered construct

#pragma omp ordered

• Critical construct

#pragma omp critical [name]

structured block

• Atomic construct

#pragma omp atomic

statement

• Master construct

#pragma omp master

structured block

• Locks](https://image.slidesharecdn.com/2012cs13aca-121212043702-phpapp01/85/MPI-n-OpenMP-10-320.jpg)

![OTHER DIRECTIVE

• Flush directive

#pragma omp flush [(list)]

• Threadprivate direcive

#pragma omp threadprivate (list)](https://image.slidesharecdn.com/2012cs13aca-121212043702-phpapp01/85/MPI-n-OpenMP-13-320.jpg)