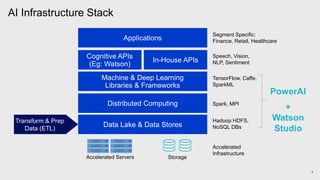

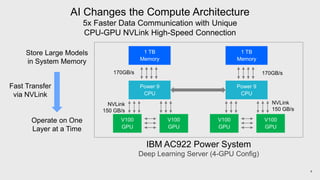

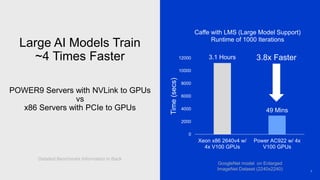

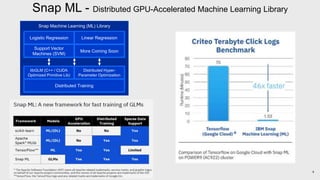

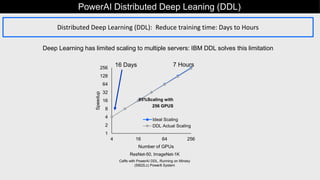

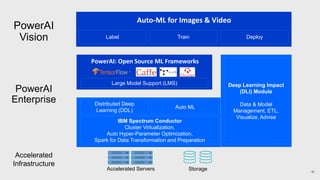

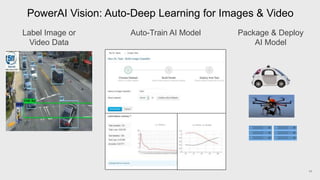

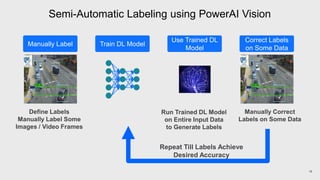

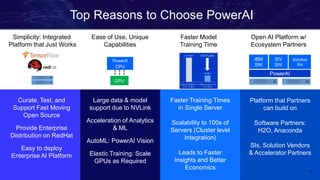

PowerAI is IBM's enterprise AI platform built on open-source software. It shortens training times through hardware and software optimizations such as GPU acceleration. PowerAI includes open-source frameworks such as Caffe and TensorFlow, along with tools for data scientists. It also offers distributed deep learning, which spreads training across many servers to cut training time from days to hours. PowerAI Vision provides automated deep learning for images and video, including automatic model training and deployment.