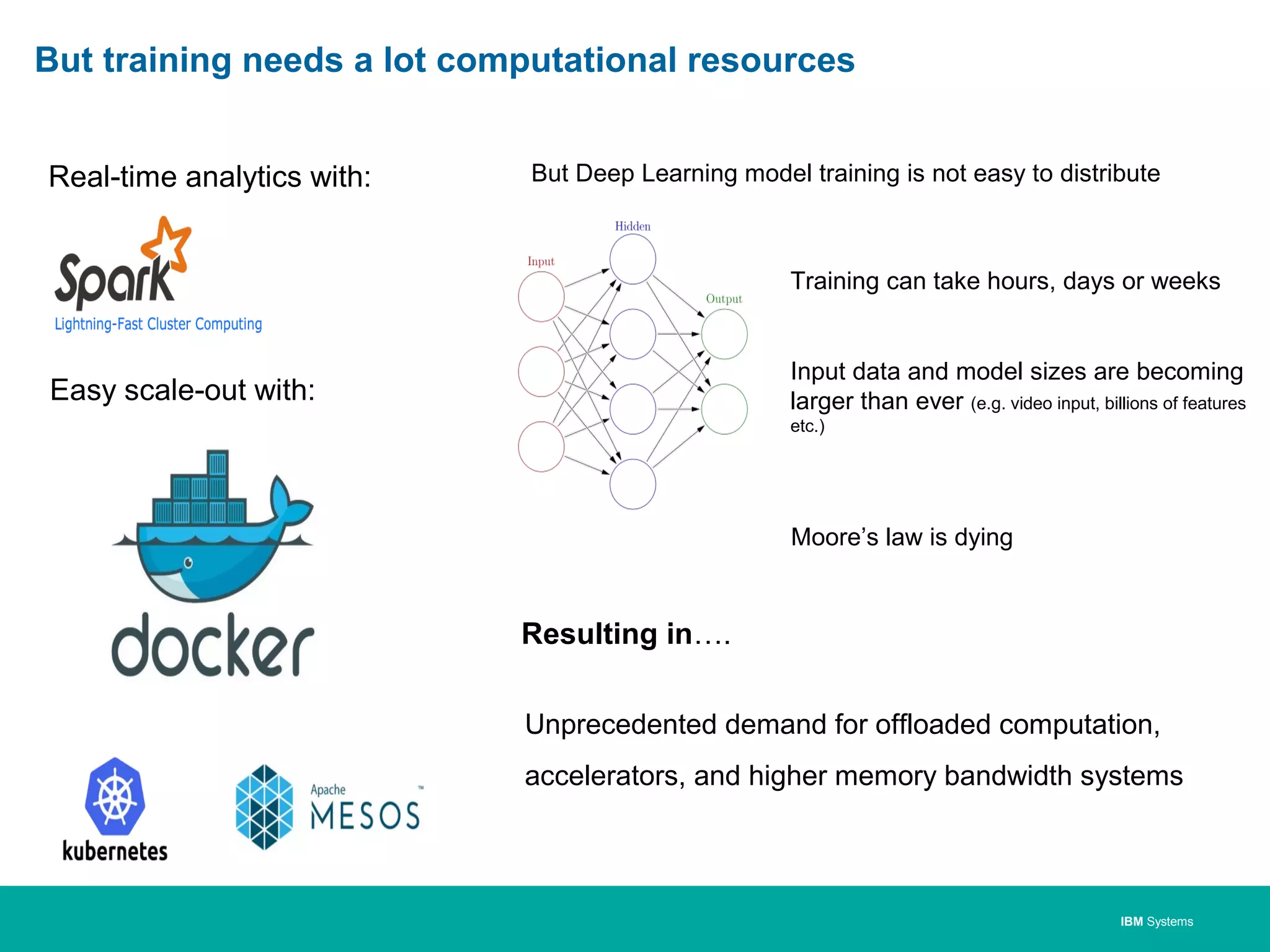

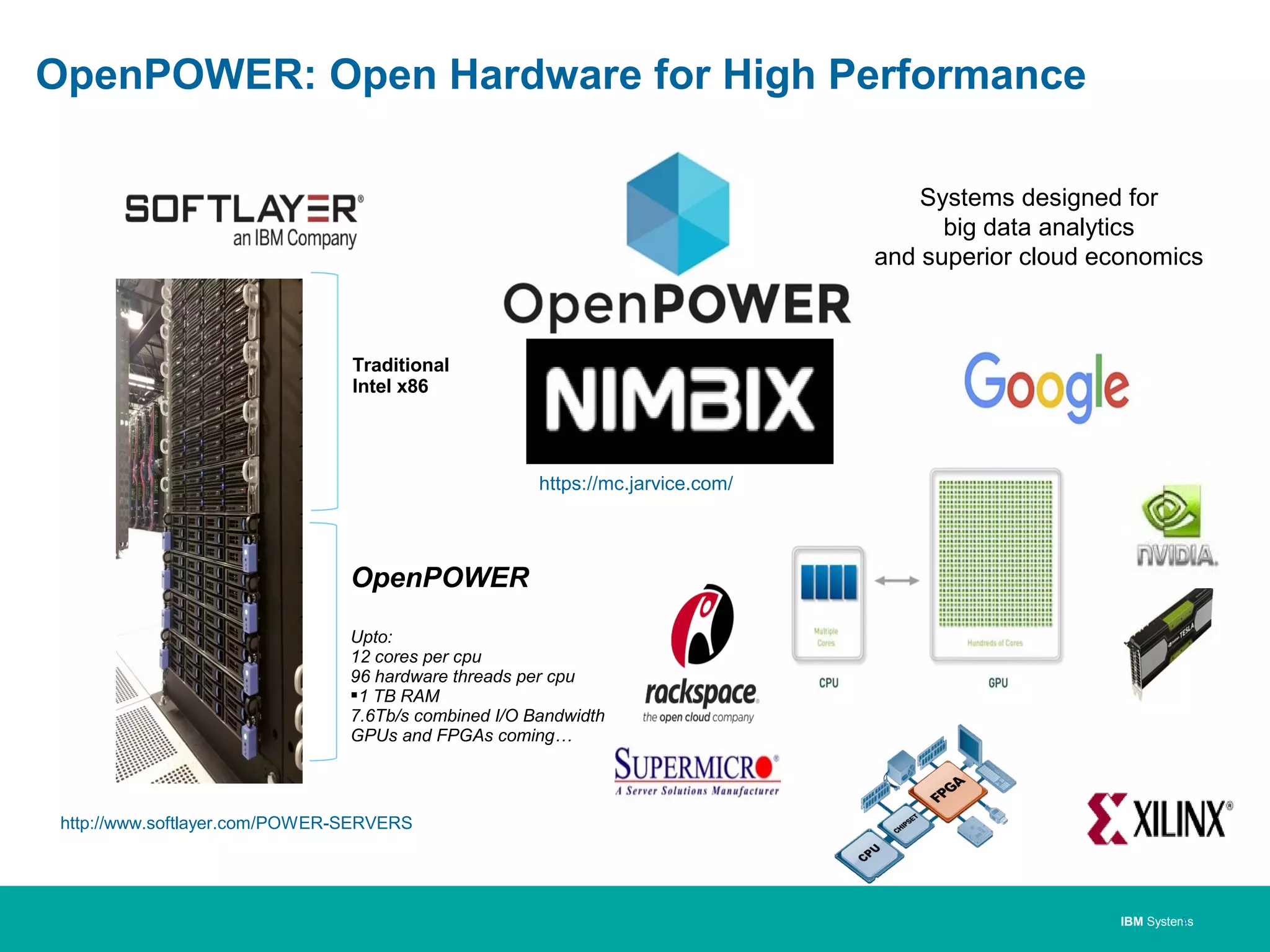

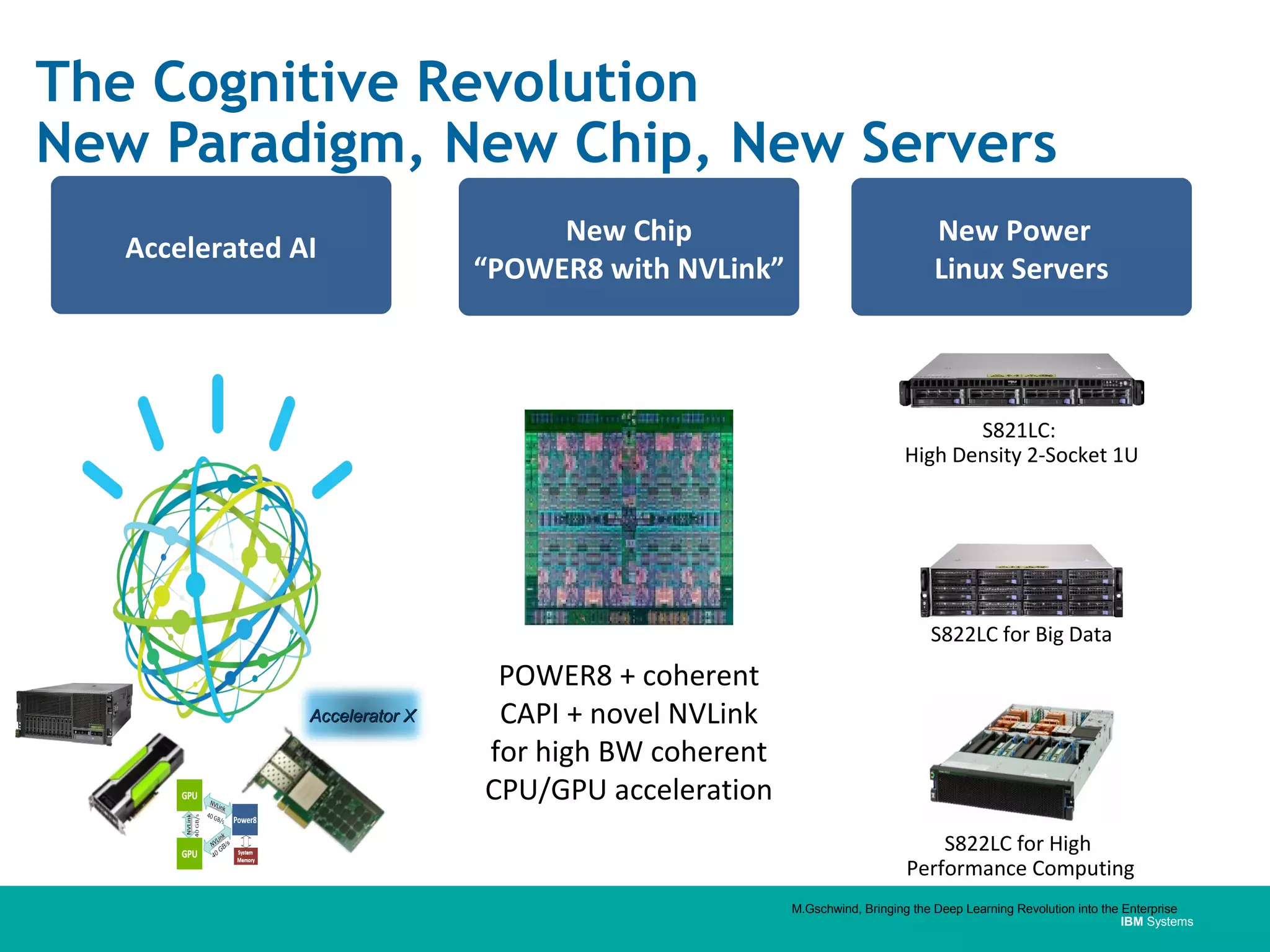

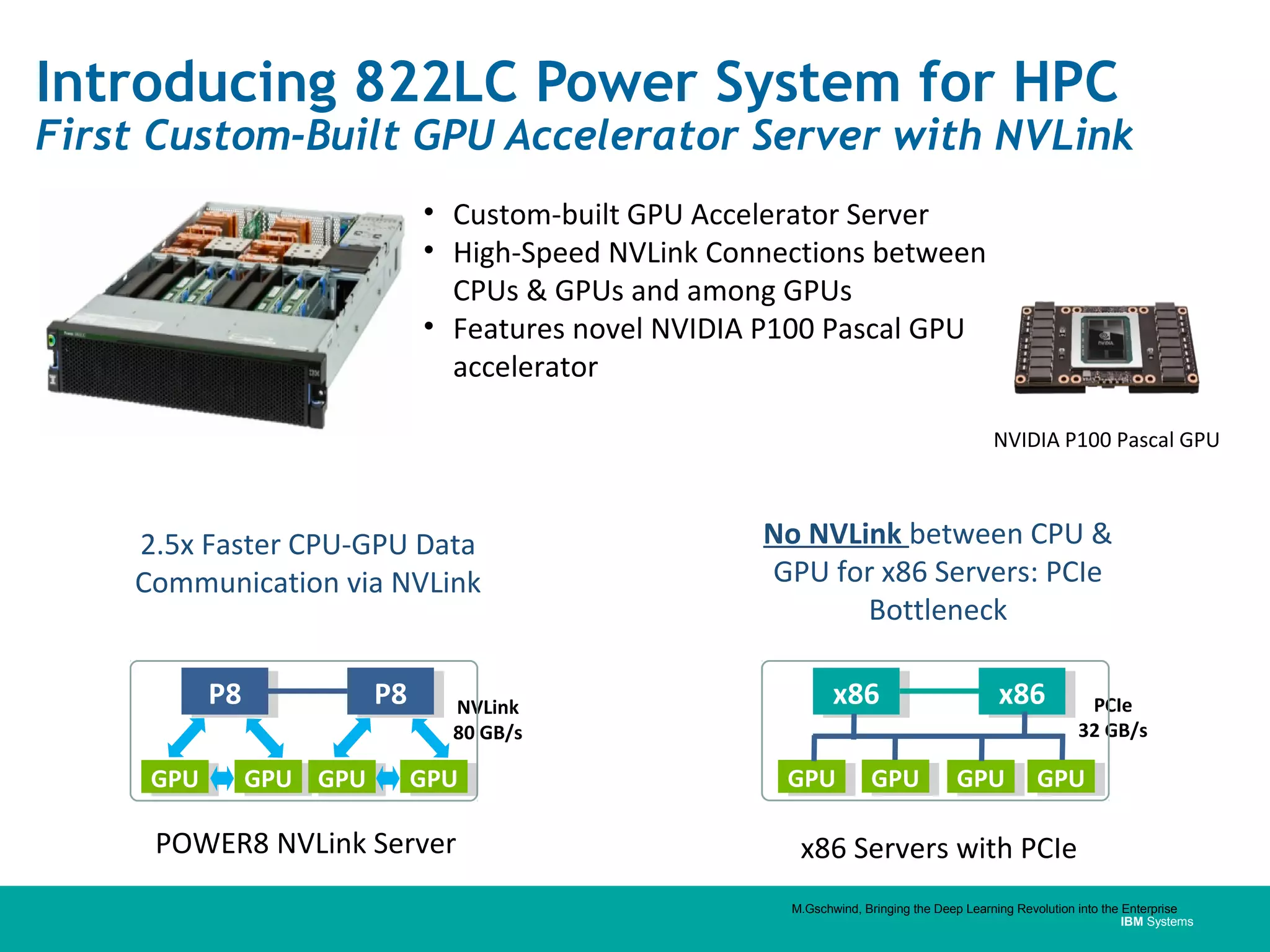

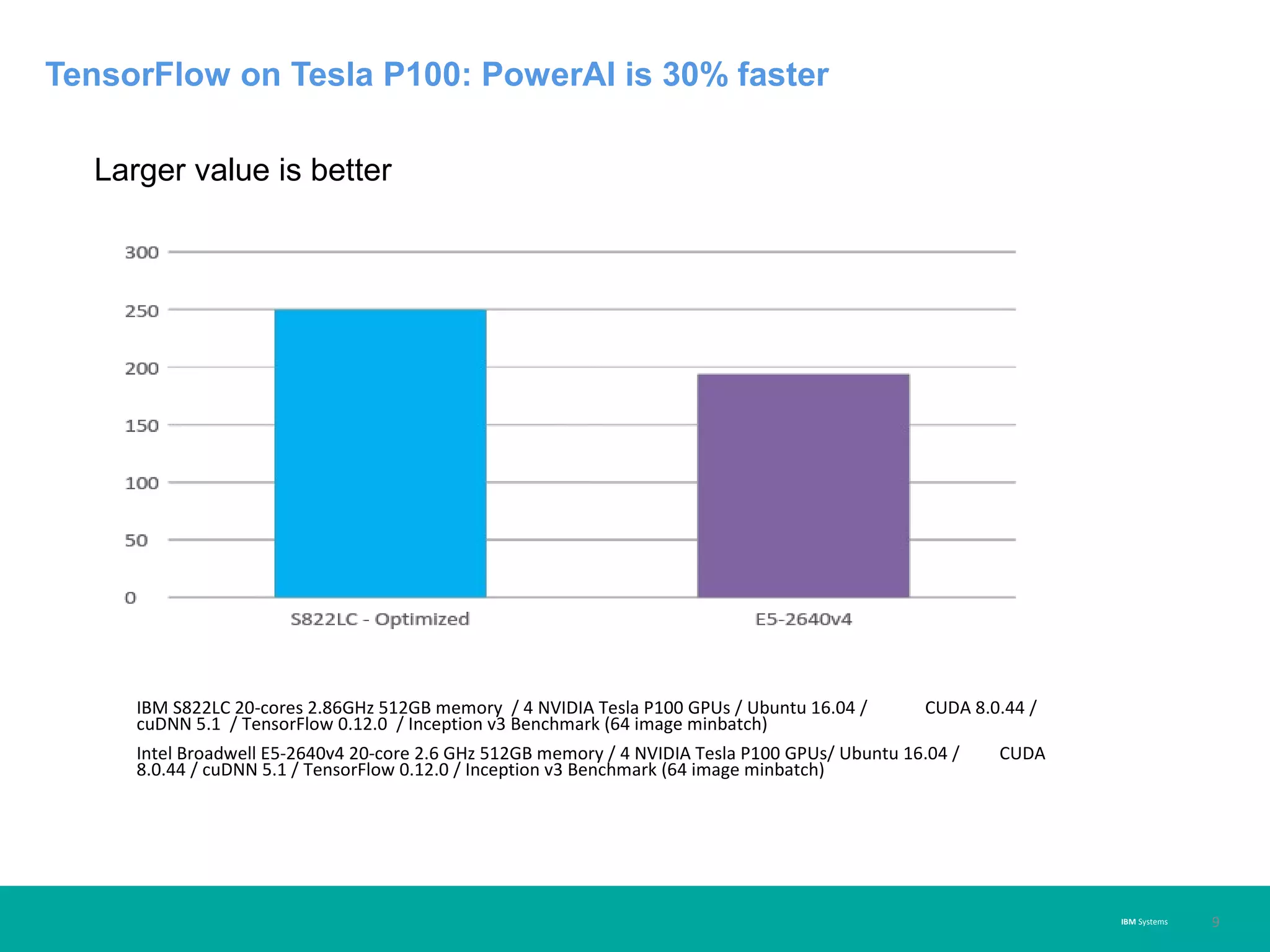

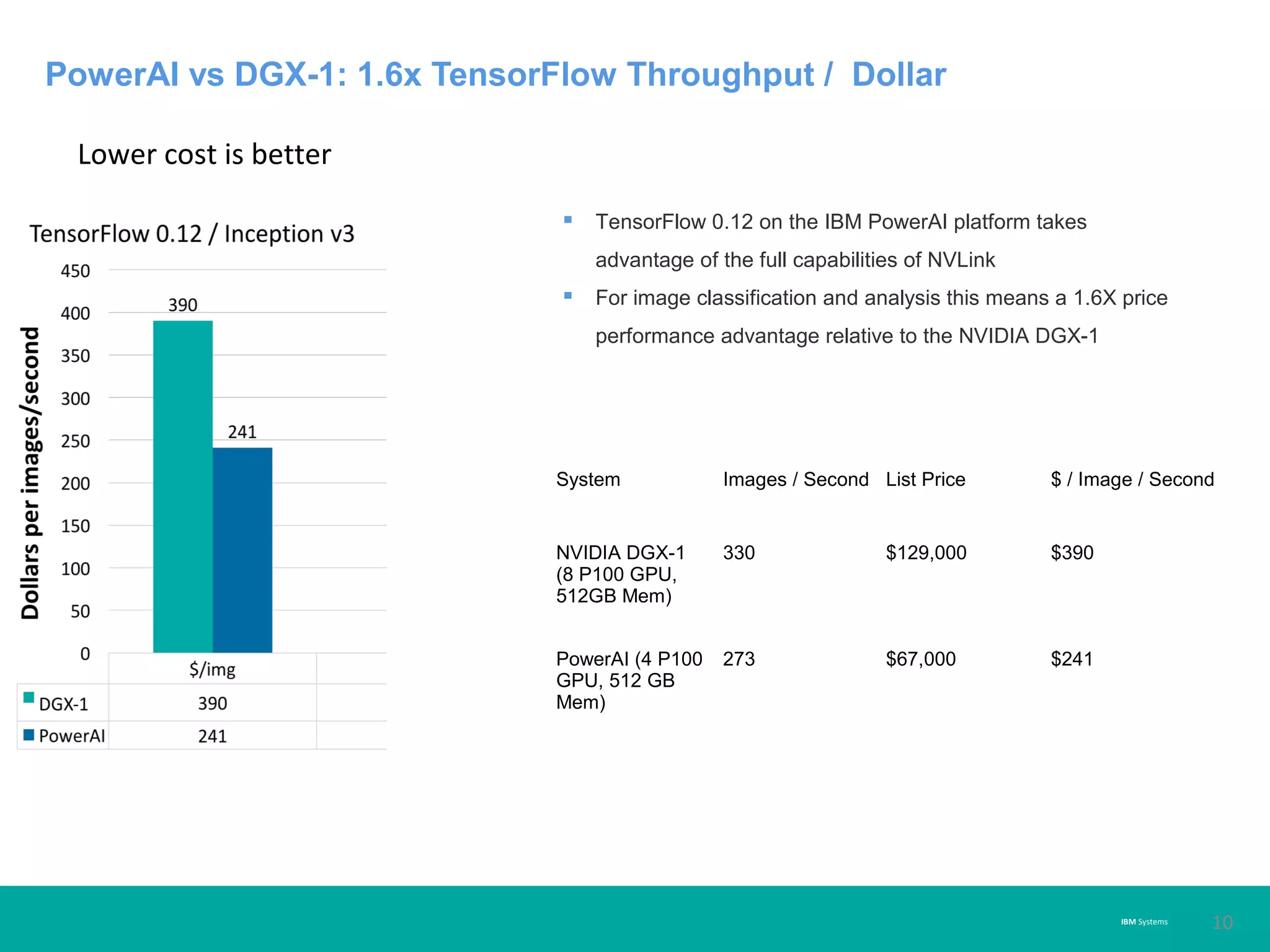

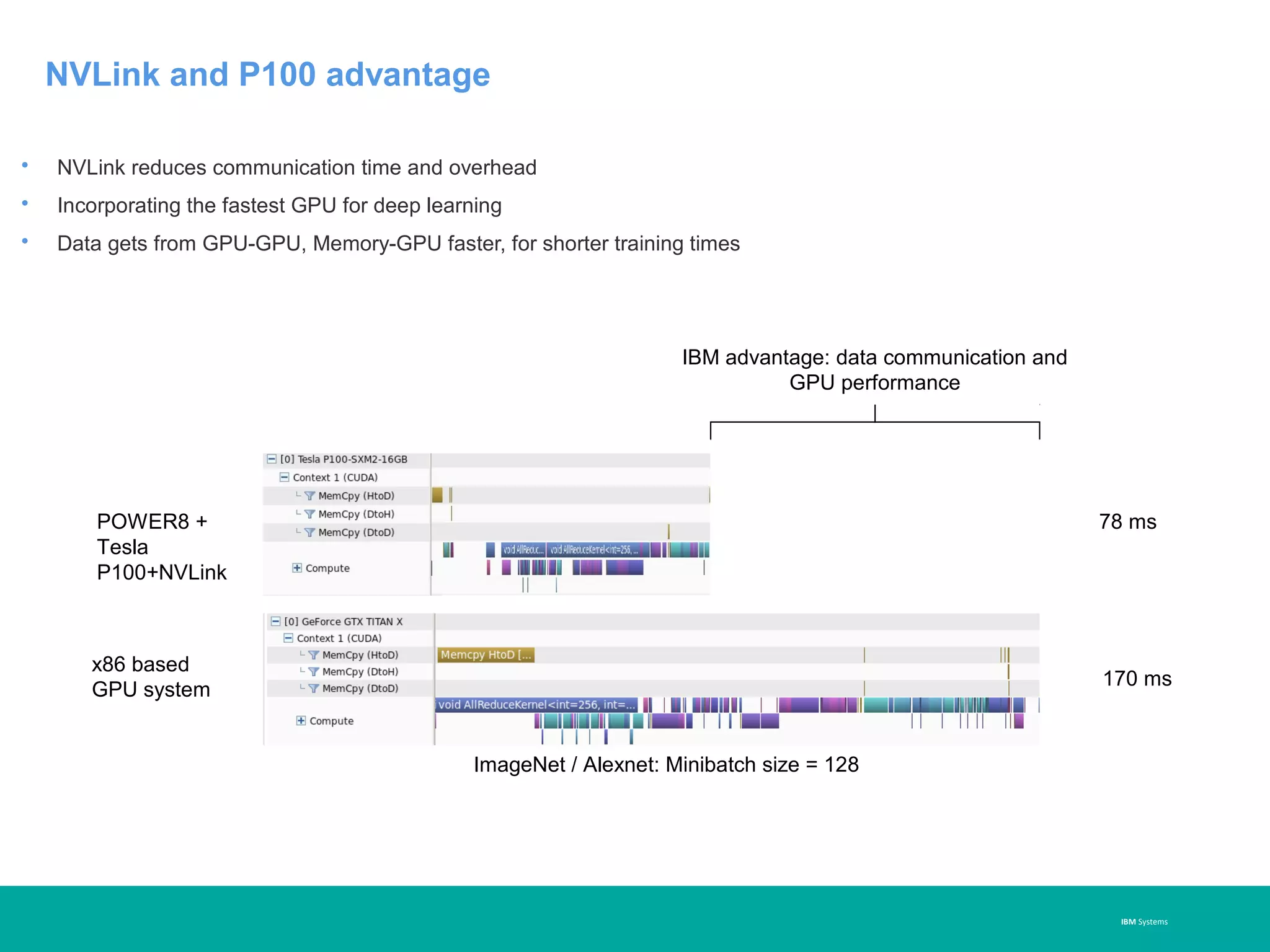

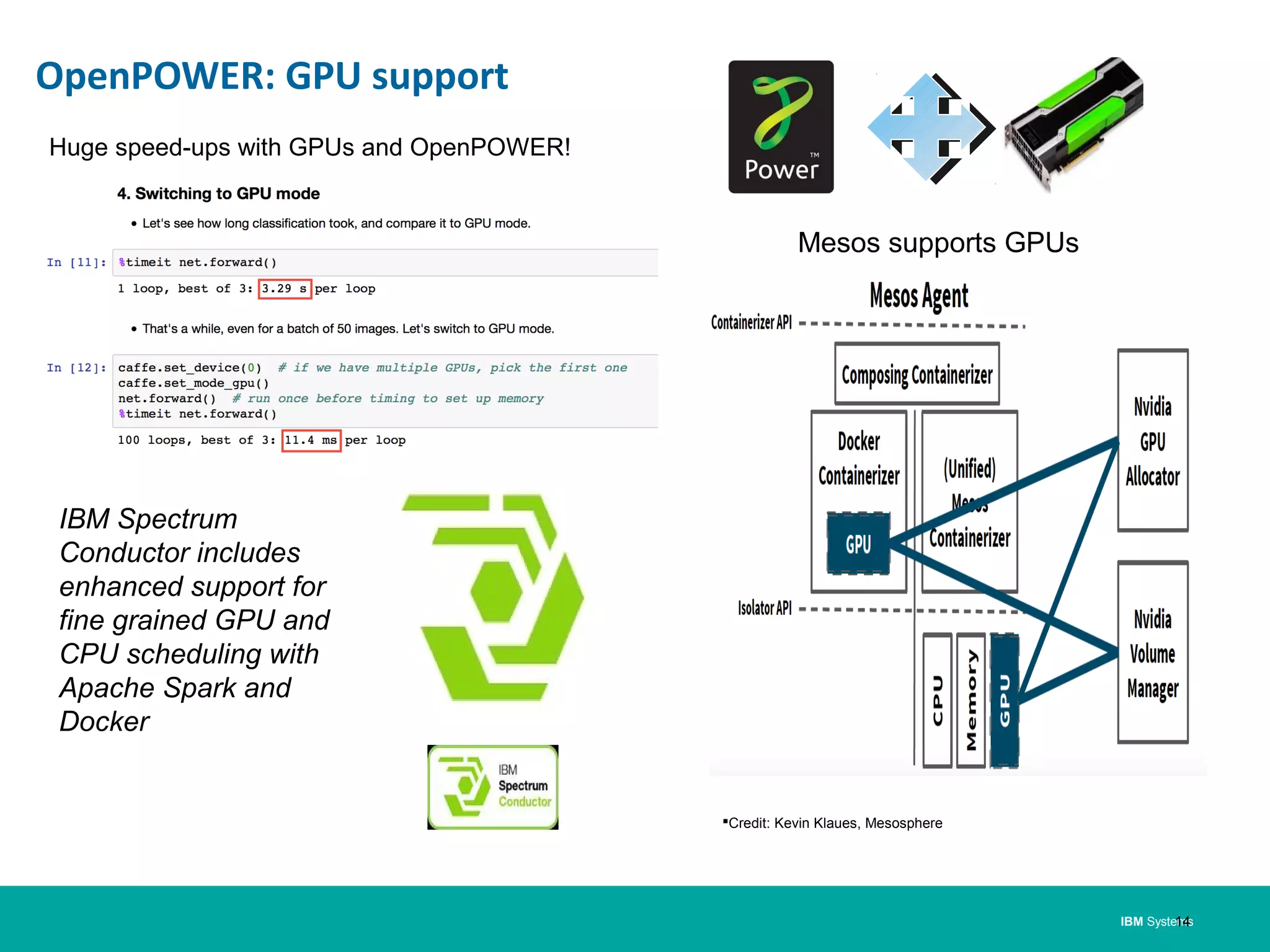

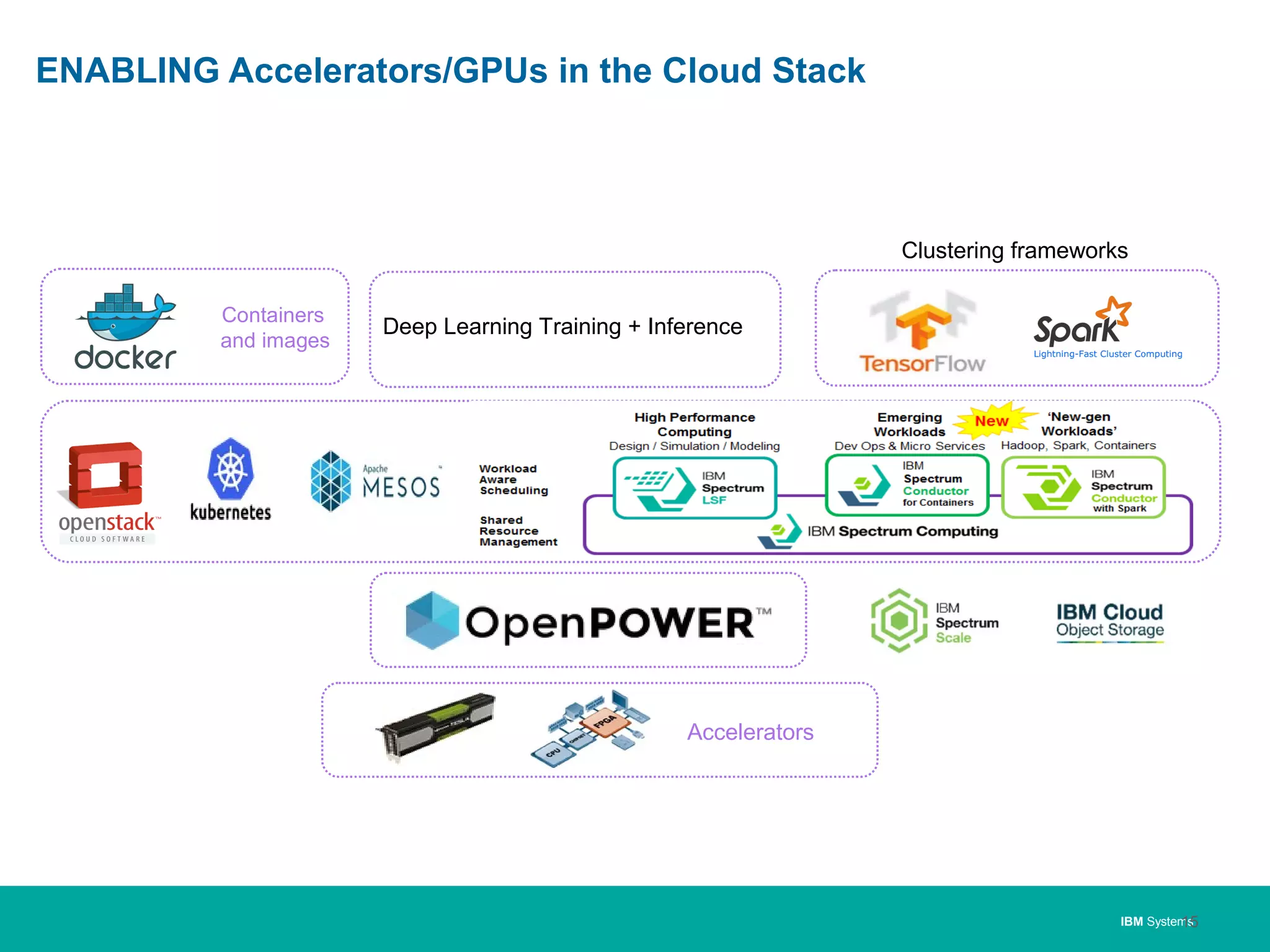

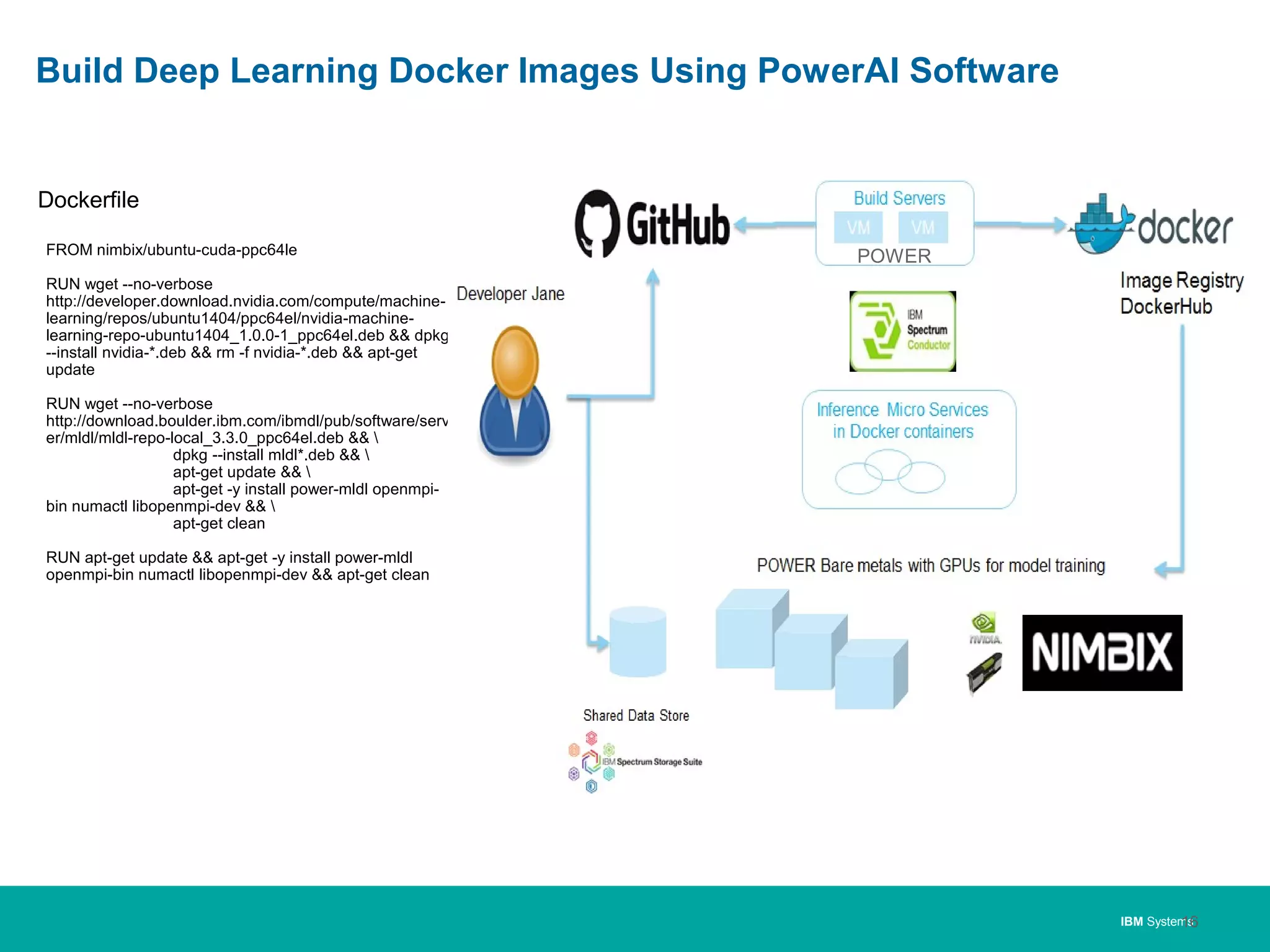

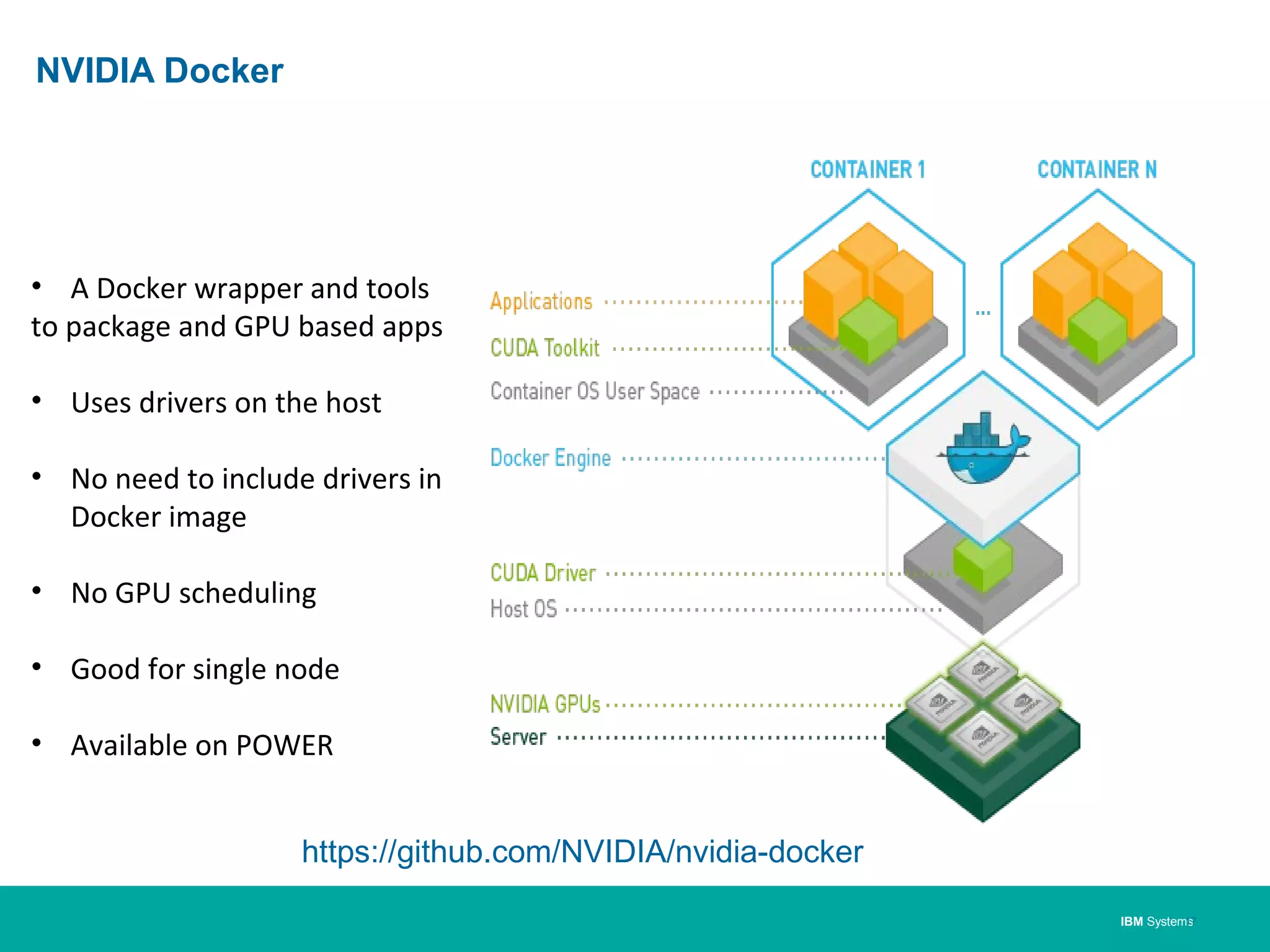

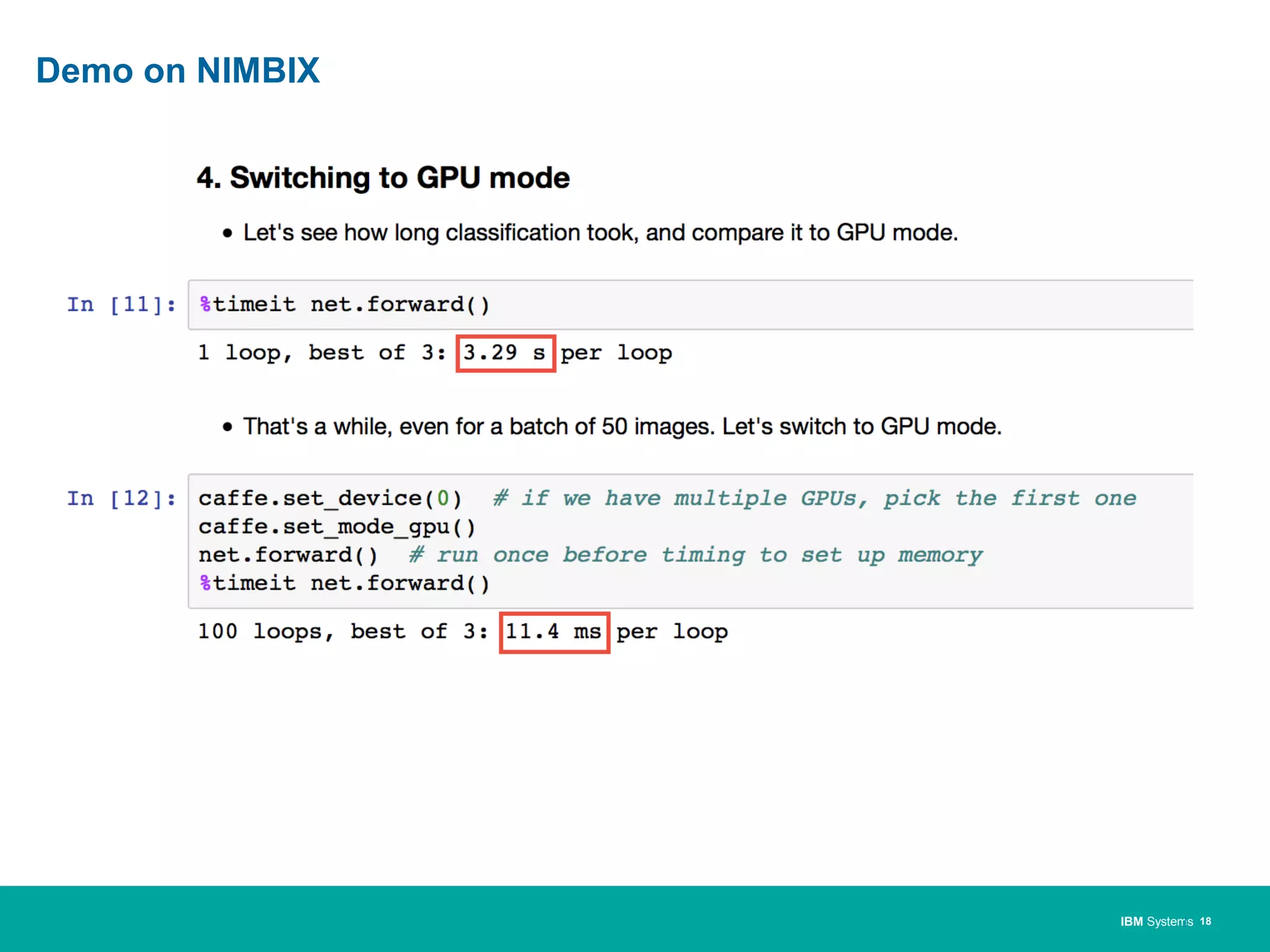

The document discusses using Docker to build fast deep learning applications on IBM's OpenPOWER architecture, with GPU-accelerated training and inference. It highlights the advantages of OpenPOWER systems for high-performance computing, including faster CPU-GPU data transfer over NVLink and better cost efficiency than traditional x86 systems. It also introduces PowerAI, IBM's deep learning platform, which provides precompiled deep learning frameworks and enterprise-class support.