This document discusses three key artificial intelligence capabilities of IBM's POWER9 architecture:

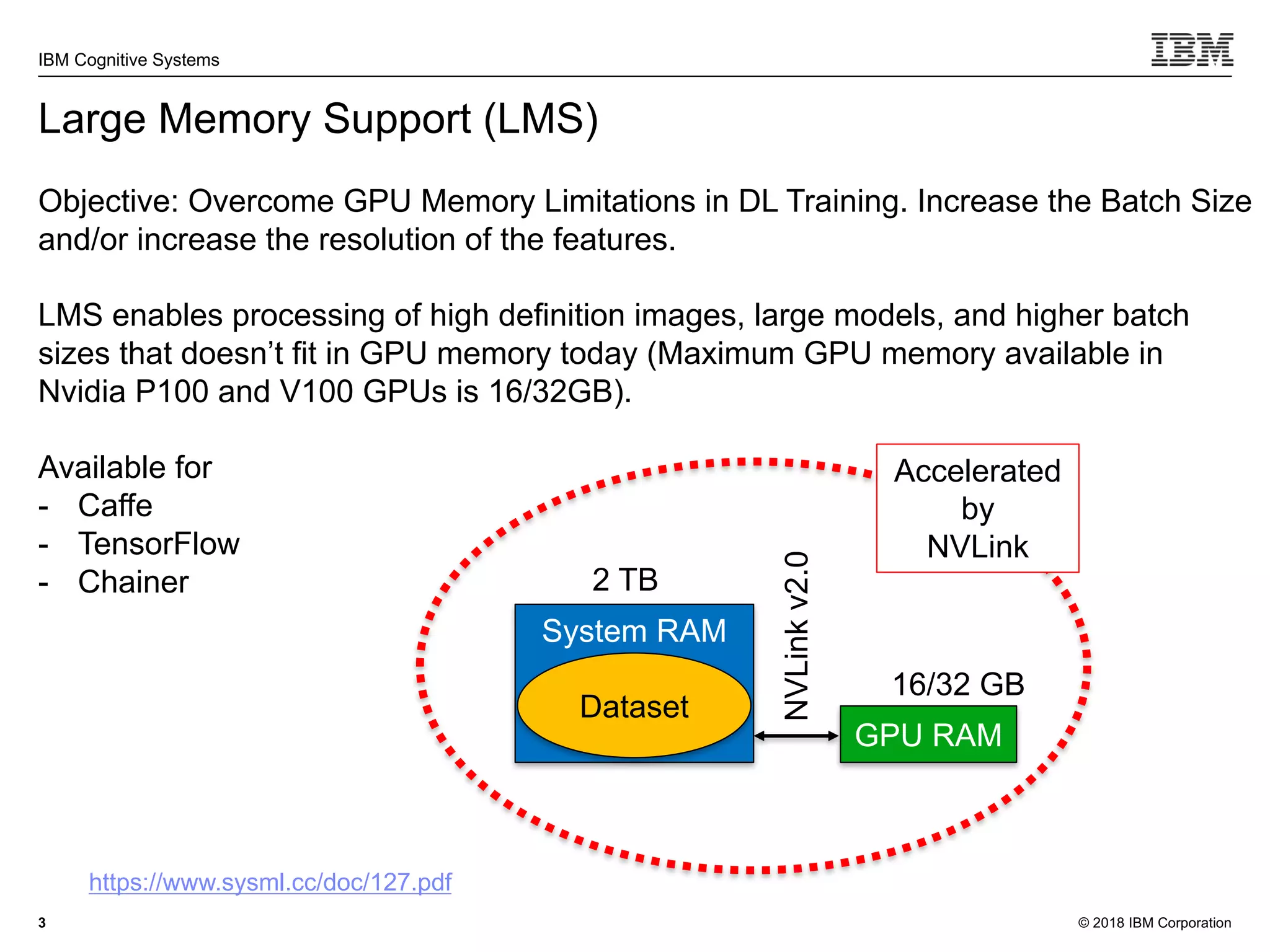

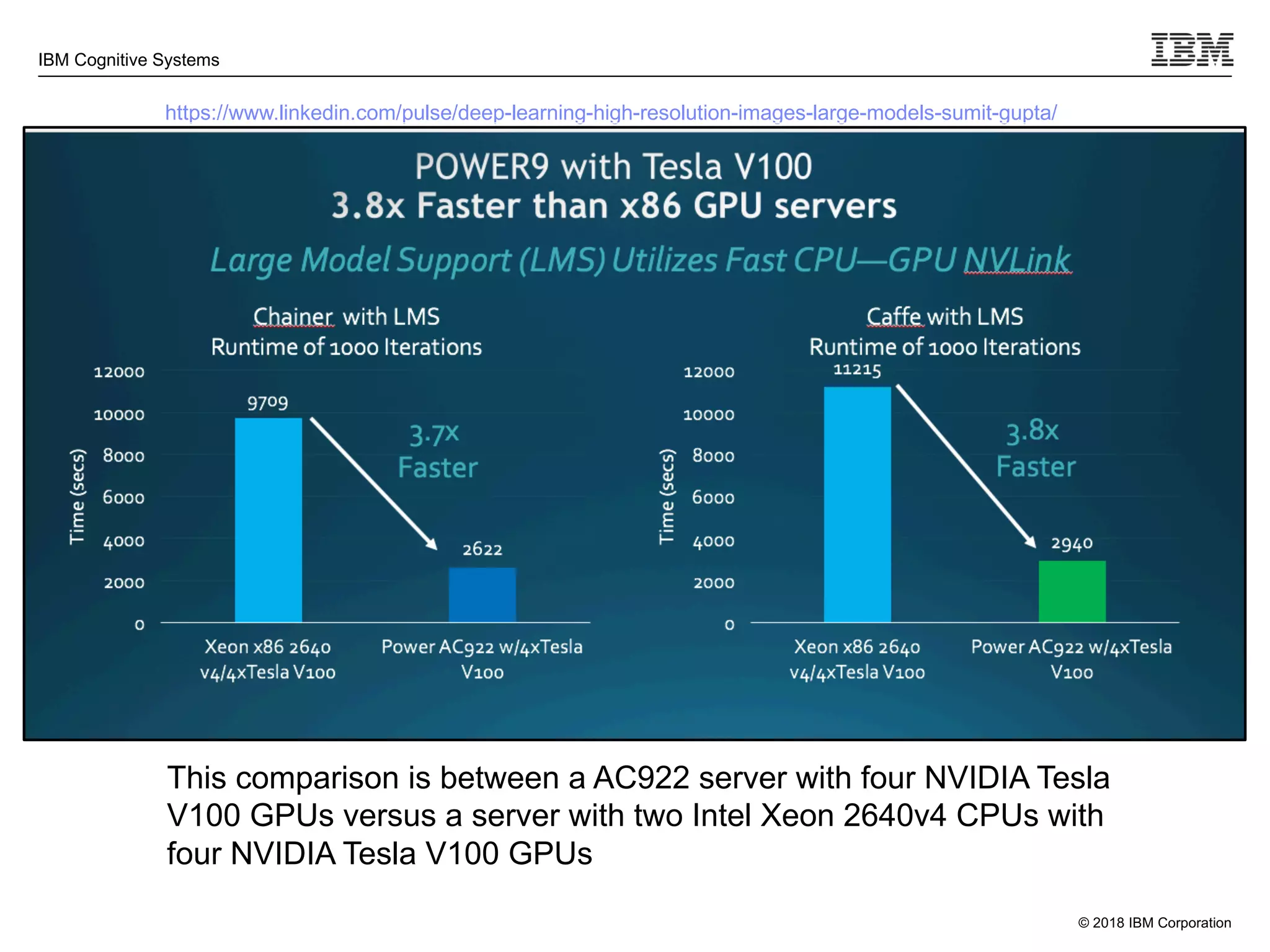

1) Large Memory Support enables processing of high-definition images and large models that exceed GPU memory limits.

2) Distributed Deep Learning allows scaling to multiple servers for faster and more accurate training on large datasets.

3) PowerAI Vision provides tools for labeling data, training models for computer vision tasks, and deploying models for production use.

![© 2018 IBM Corporation

IBM Cognitive Systems

Demo


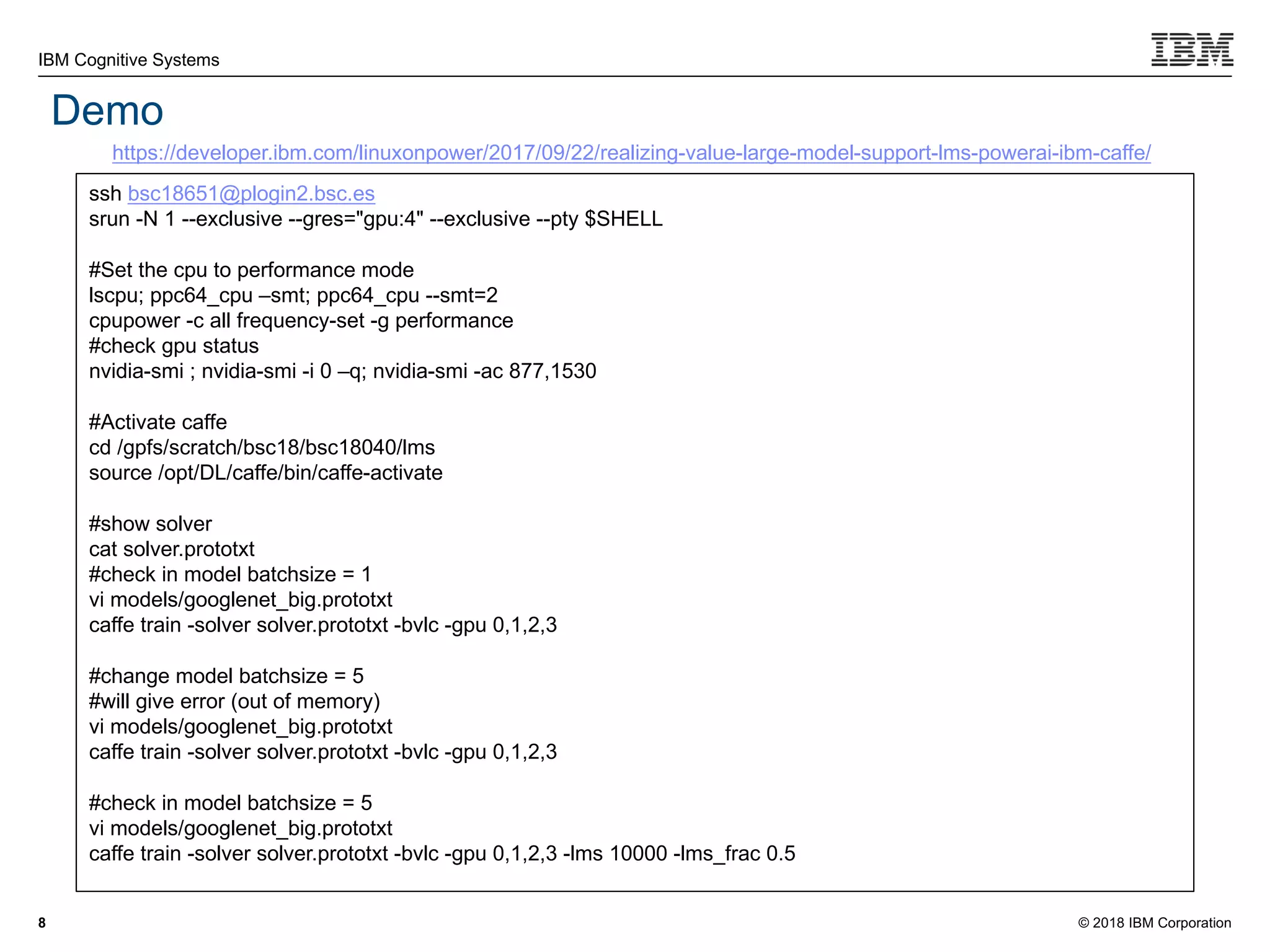

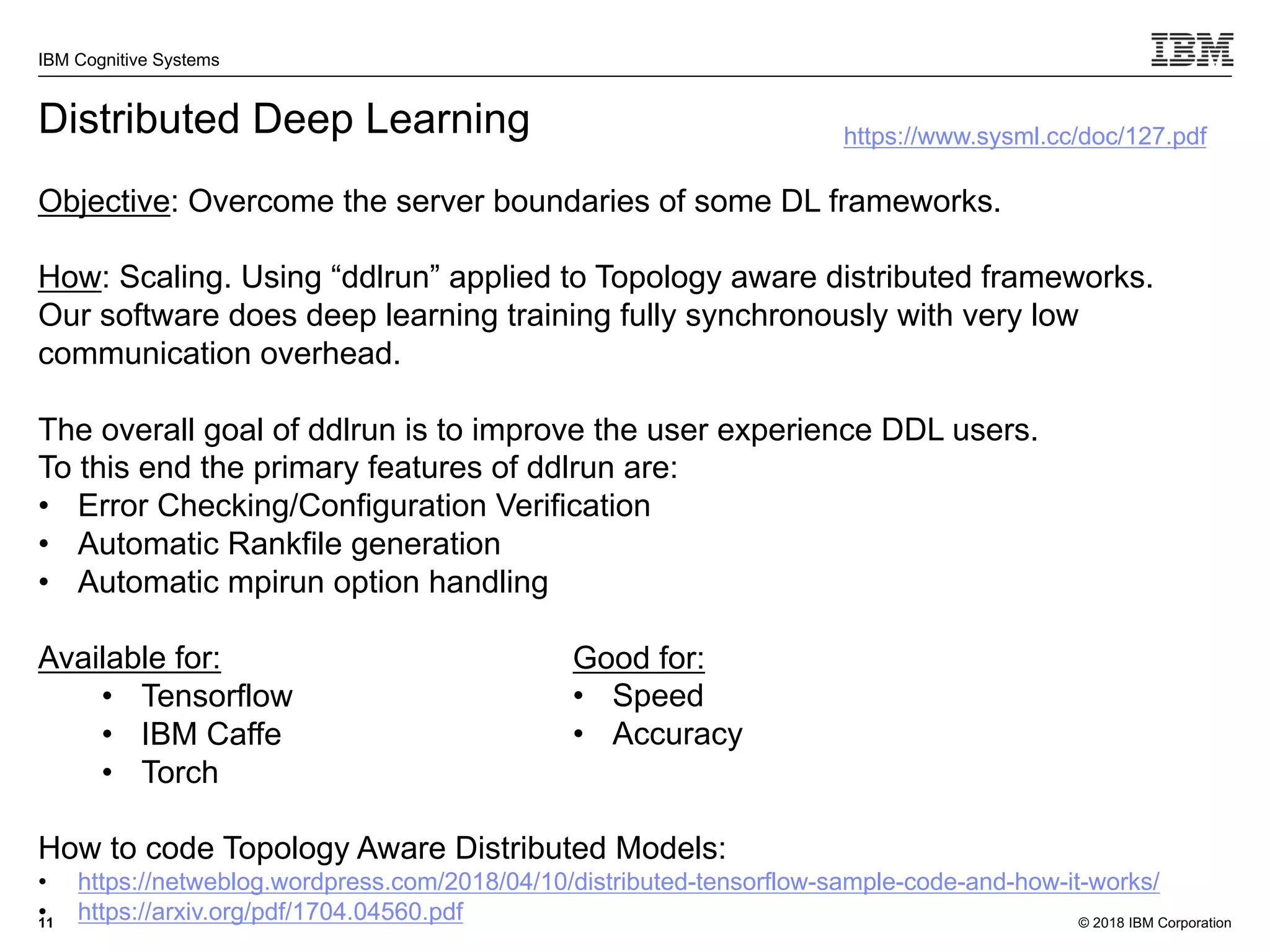

ssh bsc18651@plogin2.bsc.es #Slurm login node

# You should have a ~/data dir with the dataset already downloaded, or an internet connection to download it

# Edit ~/.bashrc and add the following line

export TMPDIR=/tmp/

# To pass all the variables, like activate ...., you may need to write a simple submission script and run it with "sbatch script.sh"

[bsc18651@p9login2 ~]$ cat script.sh

#!/bin/bash

#SBATCH -J test

#SBATCH -D .

#SBATCH -o test_%j.out

#SBATCH -e test_%j.err

#SBATCH -N 2

#SBATCH --ntasks-per-node=4

#SBATCH --gres="gpu:4"

#SBATCH --time=01:00:00

module purge

module load anaconda2 powerAI

source /opt/DL/ddl-tensorflow/bin/ddl-tensorflow-activate

export TMPDIR="/tmp/"

export DDL_OPTIONS="-mode b:4x2"

NODE_LIST=$(scontrol show hostname $SLURM_JOB_NODELIST | tr '\n' ',')

NODE_LIST=${NODE_LIST%?}

cd examples/mnist

ddlrun -n 8 -H $NODE_LIST python mnist-init.py --ddl_options="-mode b:4x2" --data_dir /home/bsc18/bsc18651/examples/mnist/data

[bsc18651@p9login2 ~]$ sbatch script.sh

https://developer.ibm.com/linuxonpower/2018/05/01/improved-ease-use-ddl-powerai/](https://image.slidesharecdn.com/2018-bsclmsandddlv4-180704115134/75/BSC-LMS-DDL-13-2048.jpg)
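
The submission script above hard-codes the task count (`-n 8`) and the DDL topology (`b:4x2`) alongside the Slurm resource request. As a minimal sketch, these values could instead be derived from Slurm's environment so they stay consistent when the node count changes. This assumes one task per GPU and reads `b:4x2` as 4 GPUs per node across 2 nodes, which matches `-N 2` and `gpu:4` in the job above. The fallback hostnames `nodeA` and `nodeB` are hypothetical and only let the sketch run outside a Slurm job; the final line prints the command rather than launching it.

```shell
#!/bin/bash
# Sketch: derive the ddlrun arguments from Slurm's environment.
# Defaults mirror the job above (2 nodes, 4 tasks per node) so this runs outside Slurm too.
NODES="${SLURM_JOB_NUM_NODES:-2}"
GPUS_PER_NODE="${SLURM_NTASKS_PER_NODE:-4}"   # assumption: one task per GPU

TOTAL_TASKS=$(( NODES * GPUS_PER_NODE ))
DDL_MODE="b:${GPUS_PER_NODE}x${NODES}"        # assumption: <GPUs per node>x<nodes>

# Inside a job, scontrol expands the compact node list to one hostname per line.
# Outside a job, fall back to two placeholder hostnames (hypothetical).
if [ -n "${SLURM_JOB_NODELIST:-}" ] && command -v scontrol >/dev/null 2>&1; then
    HOSTS=$(scontrol show hostnames "$SLURM_JOB_NODELIST")
else
    HOSTS=$(printf 'nodeA\nnodeB\n')
fi

# paste -s joins the lines with commas and leaves no trailing comma to strip.
NODE_LIST=$(printf '%s\n' "$HOSTS" | paste -sd, -)

# Print the resulting command instead of running it.
echo "ddlrun -n $TOTAL_TASKS -H $NODE_LIST python mnist-init.py --ddl_options=\"-mode $DDL_MODE\""
```

Joining with `paste -sd,` replaces the two-step `tr` plus `${NODE_LIST%?}` trim used in the original script; both approaches produce the same comma-separated host list.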