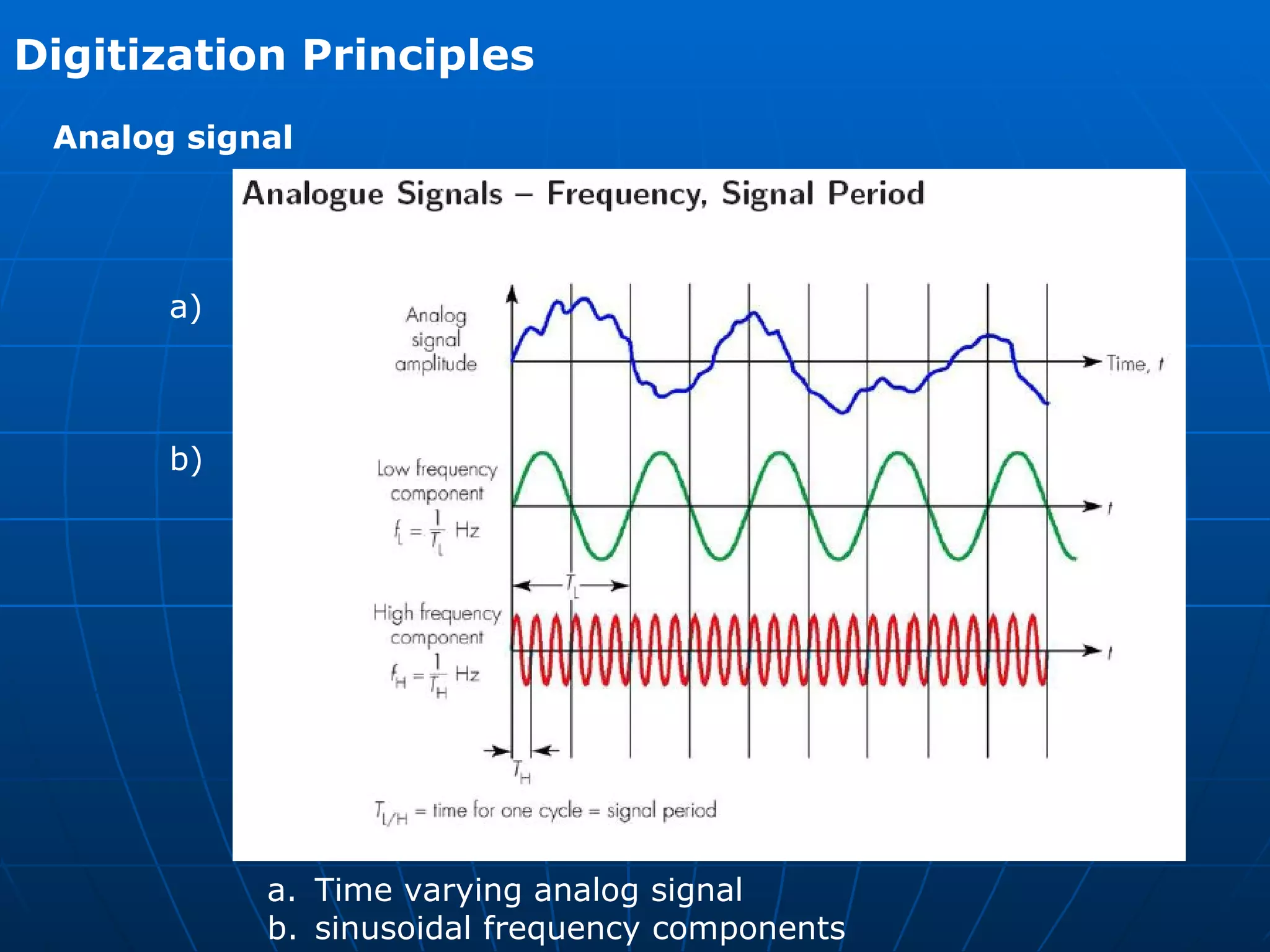

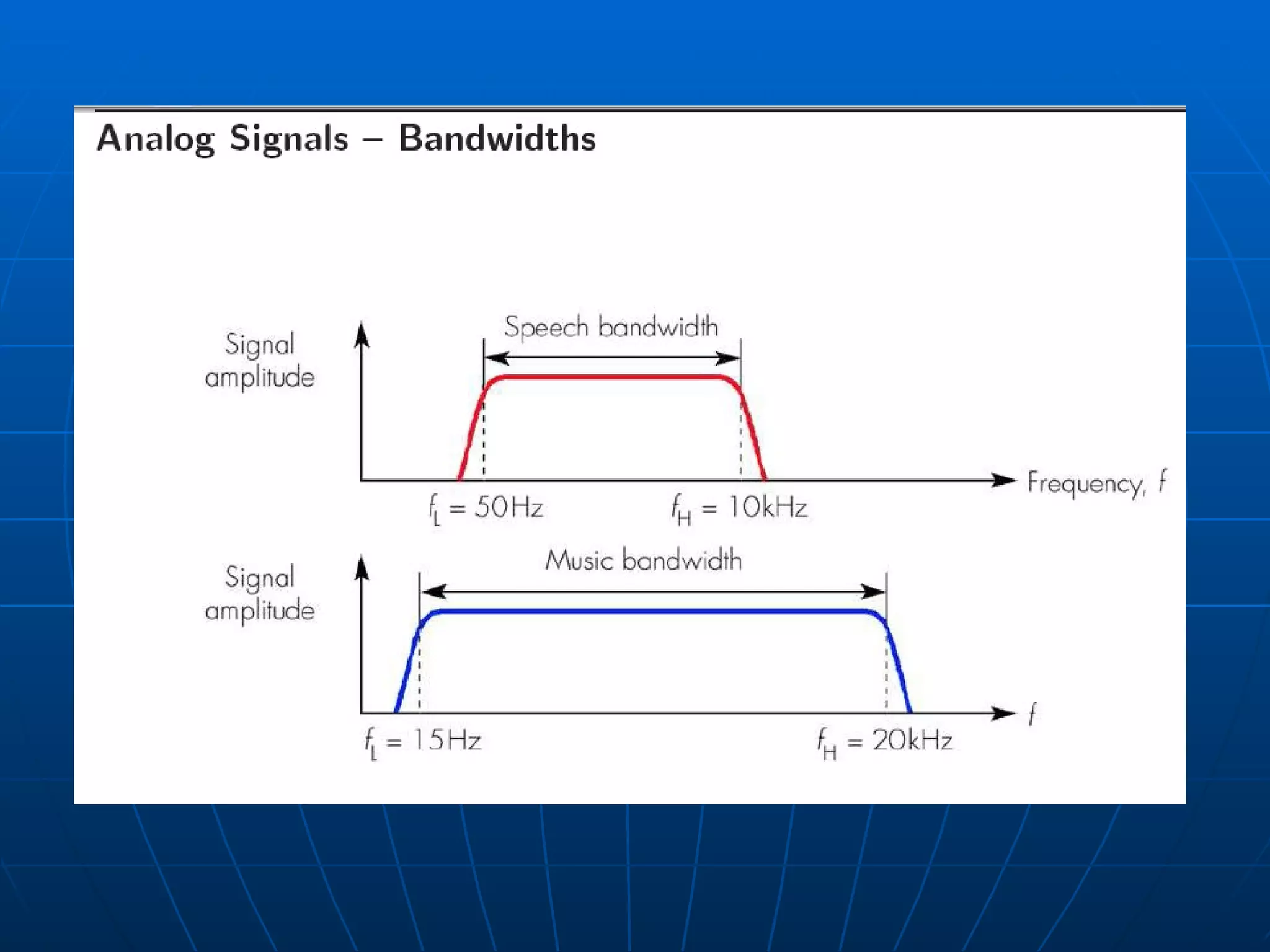

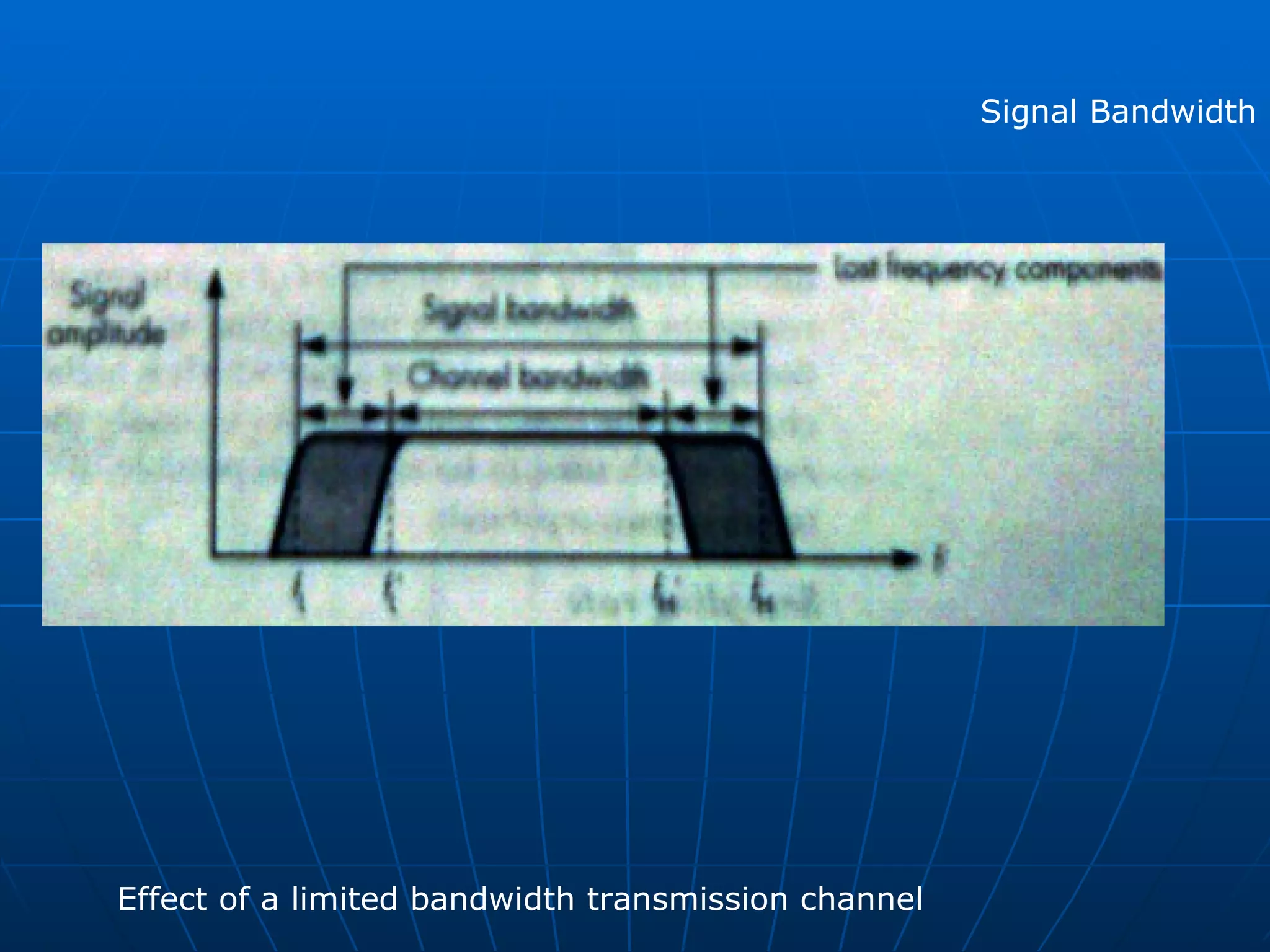

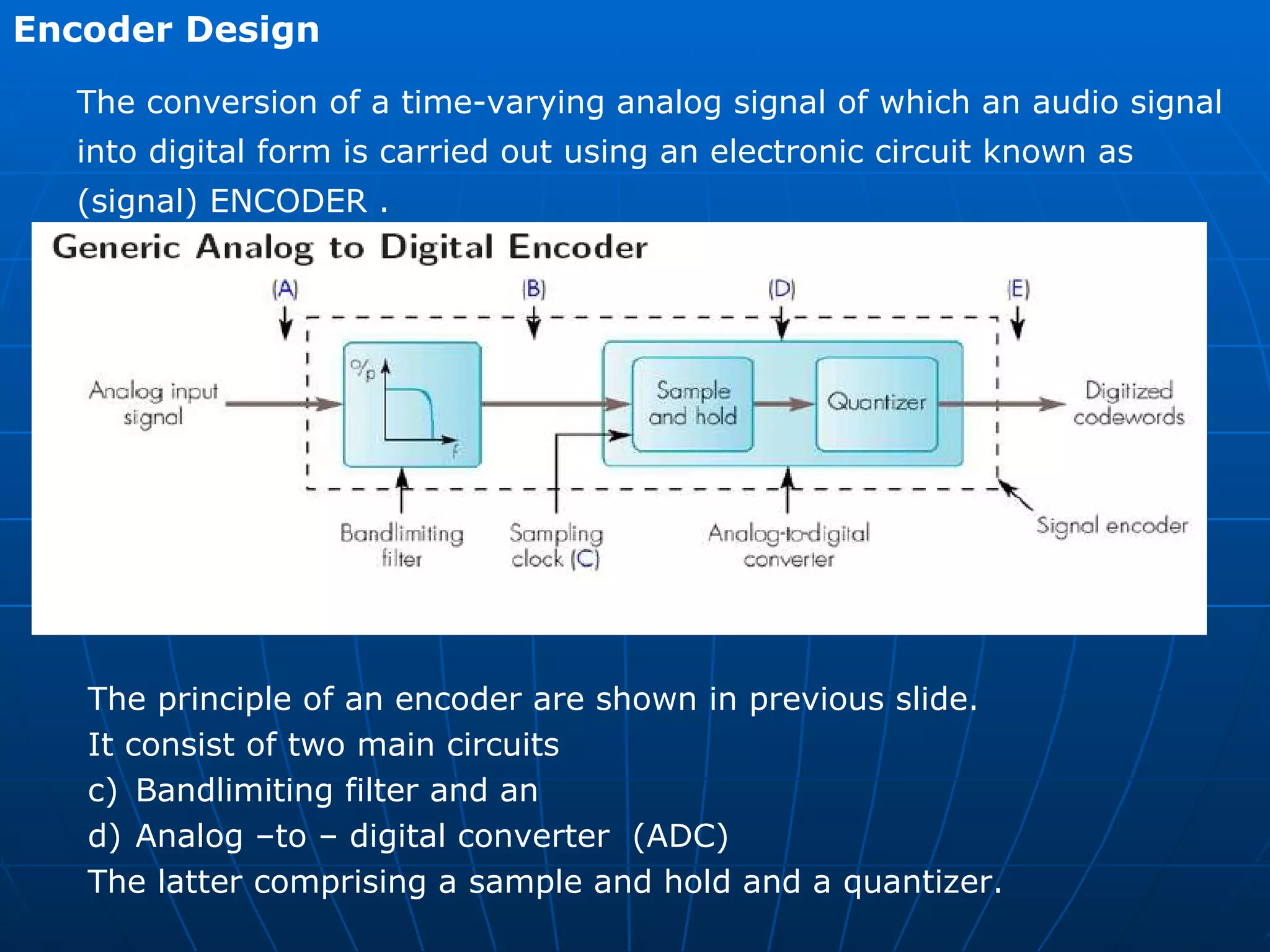

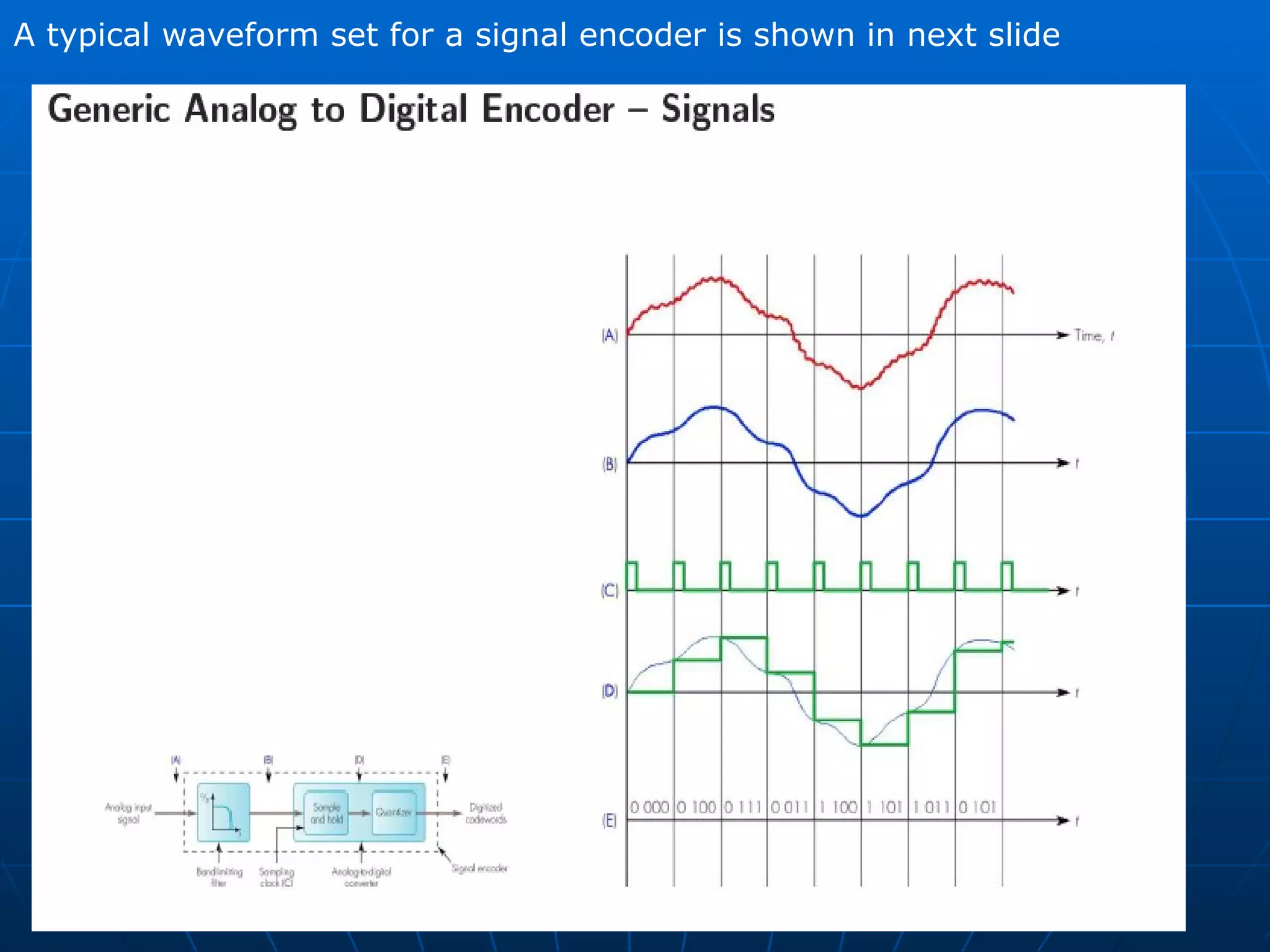

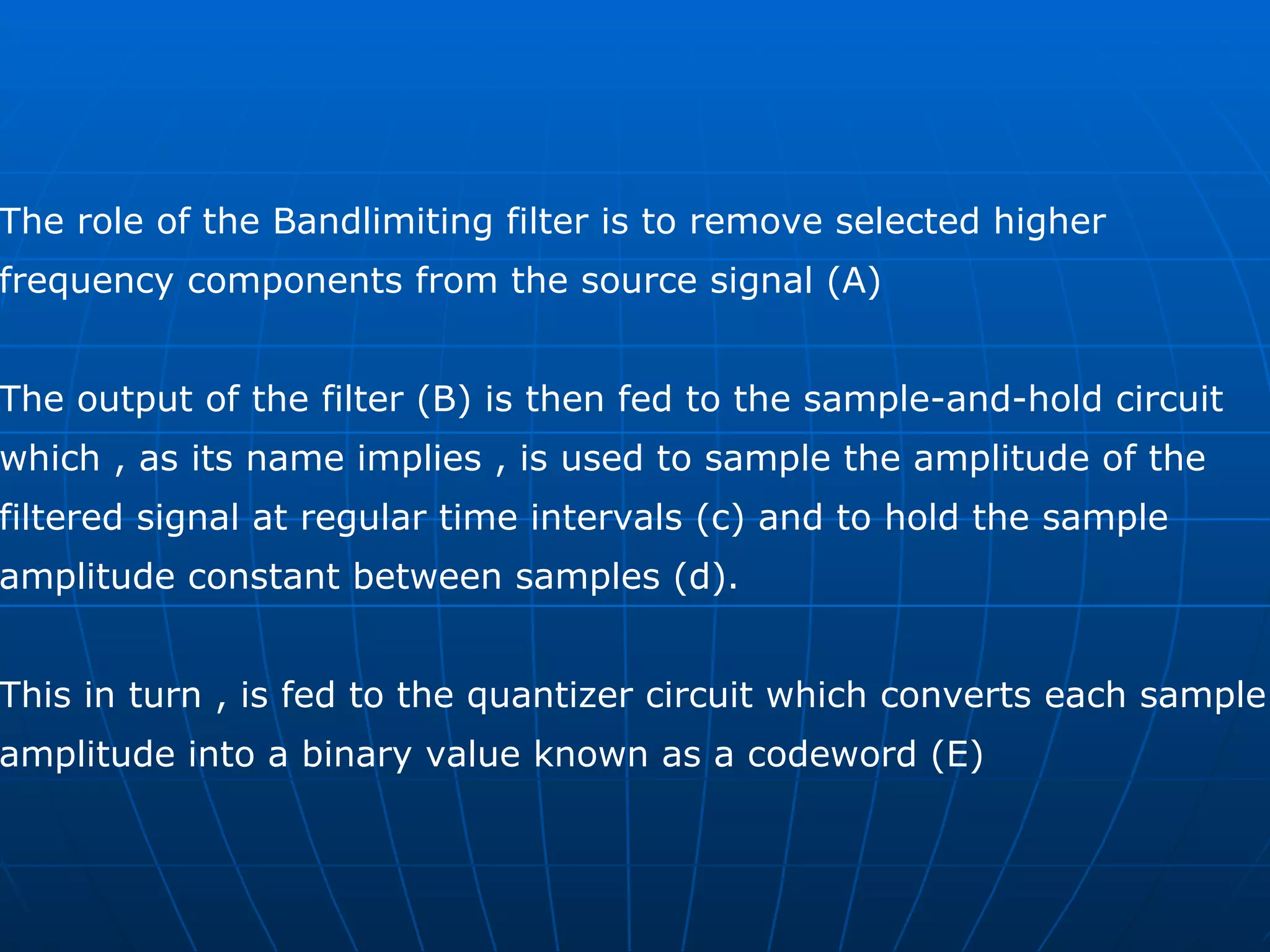

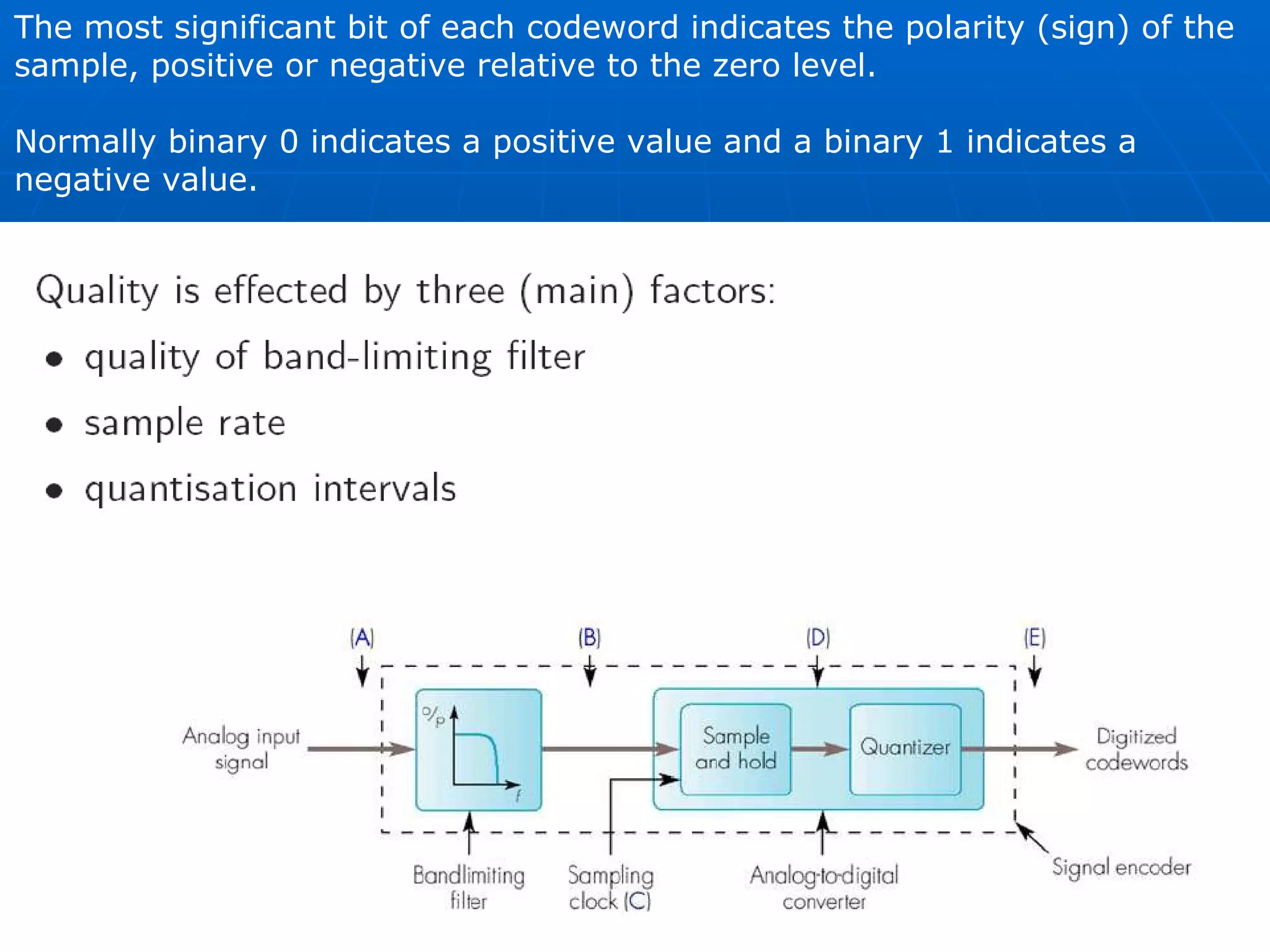

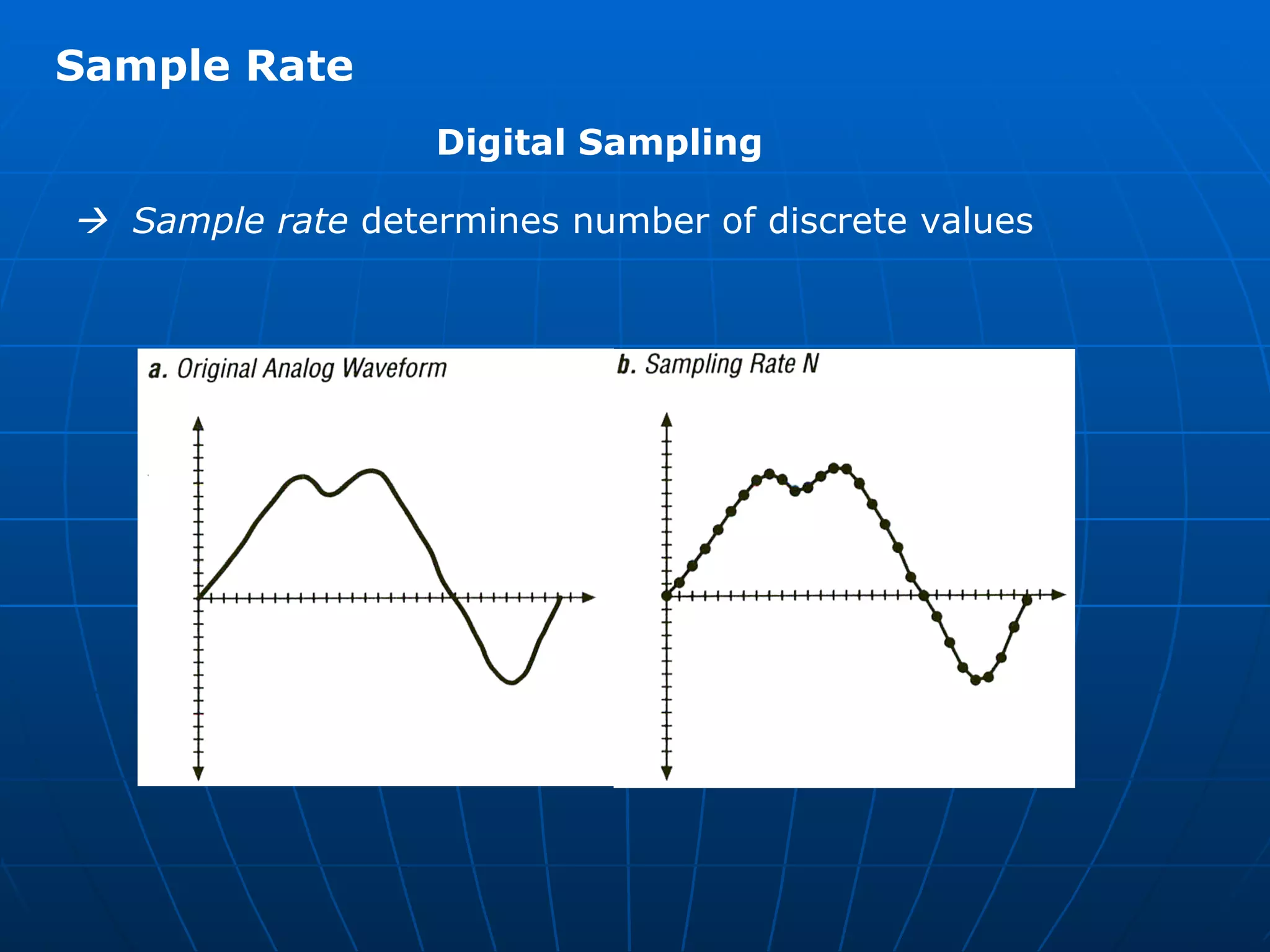

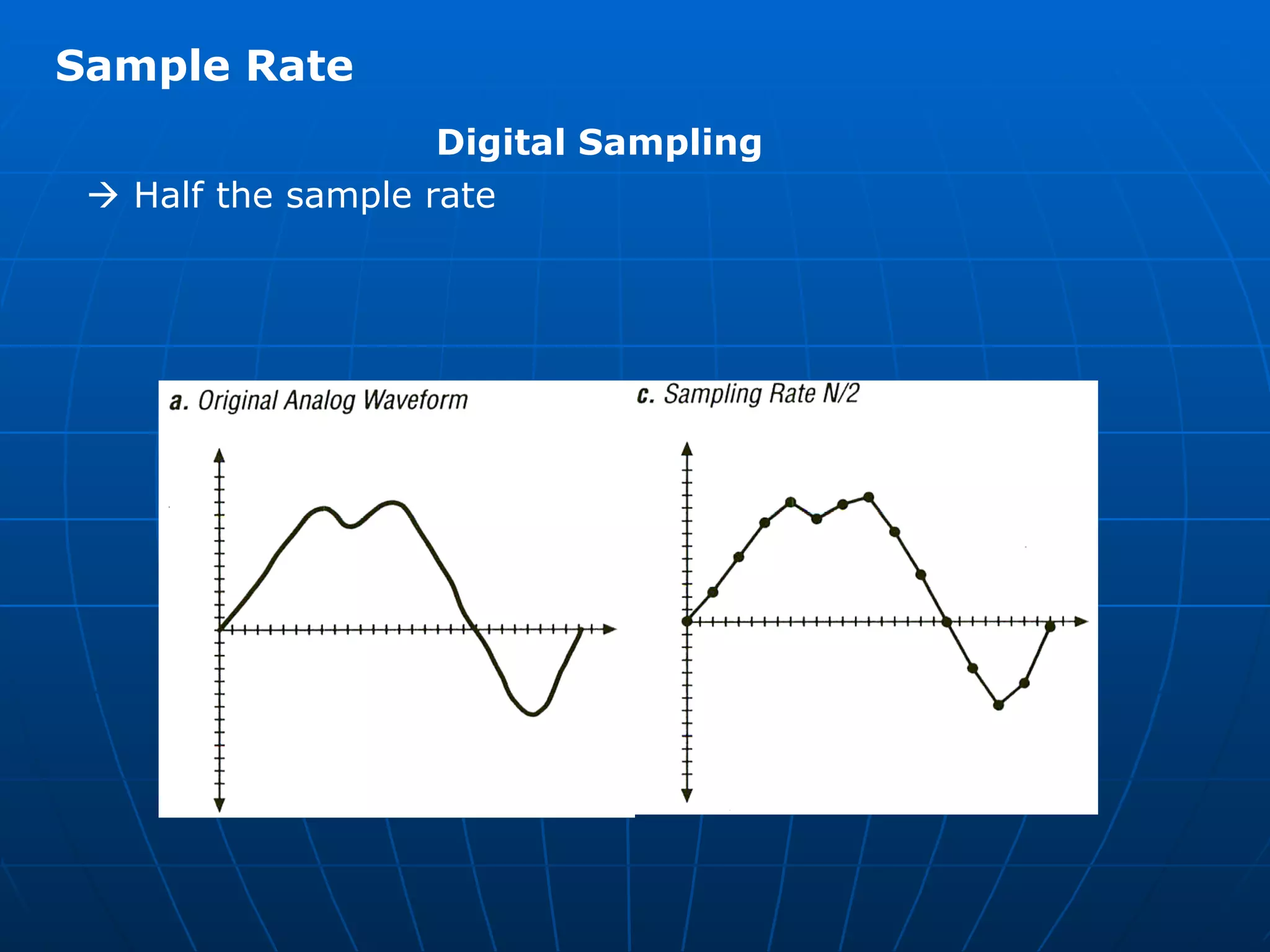

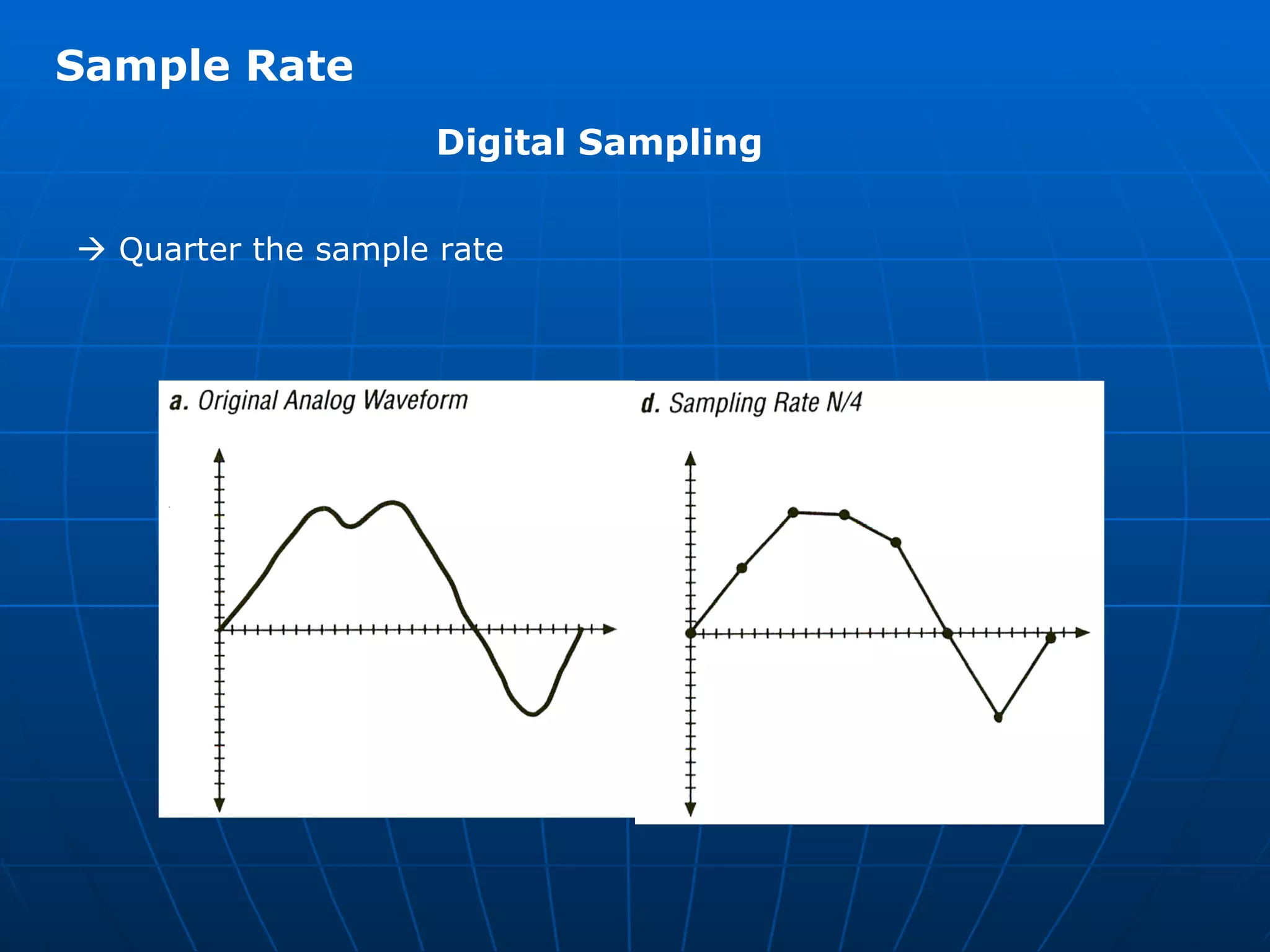

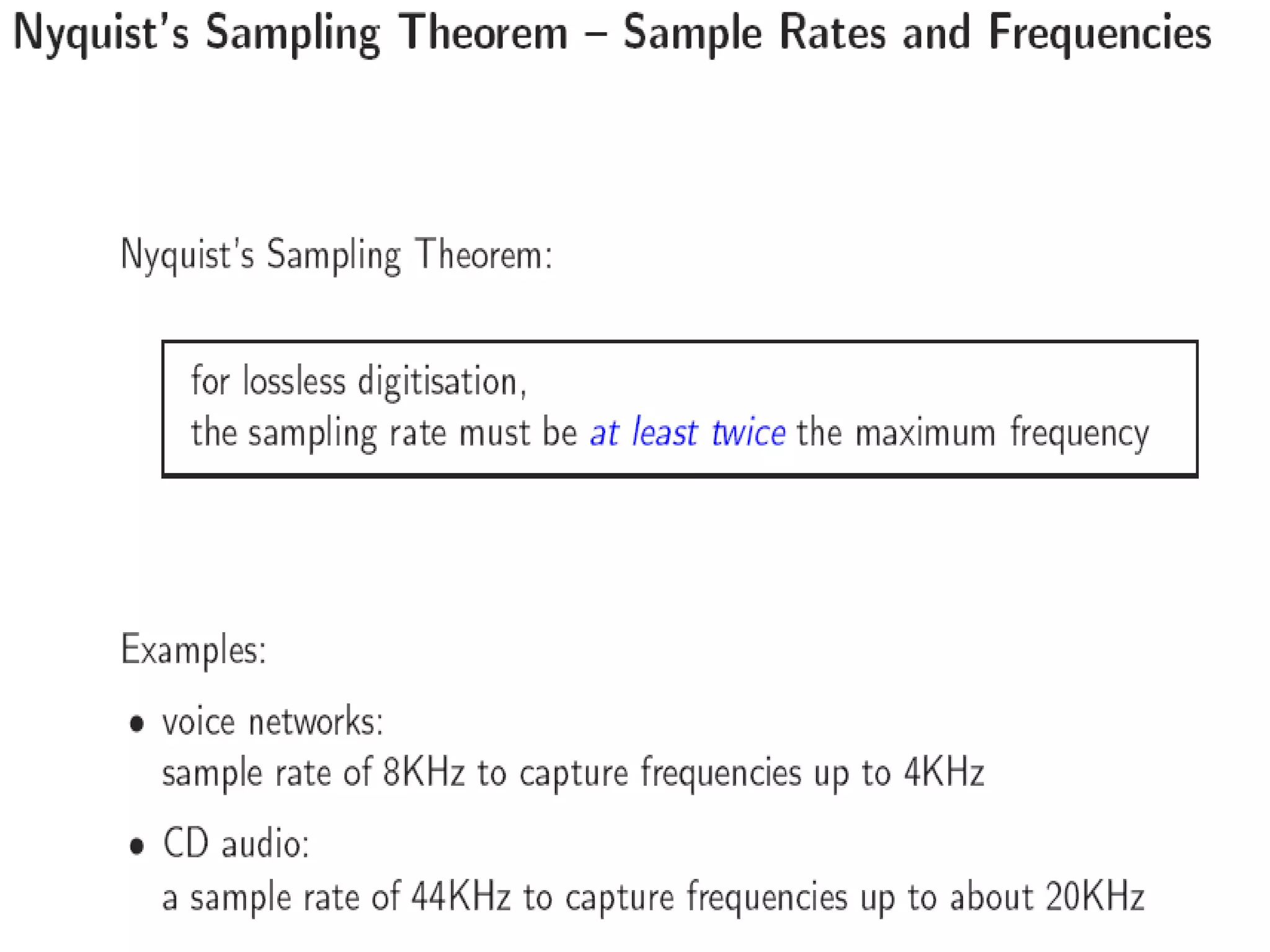

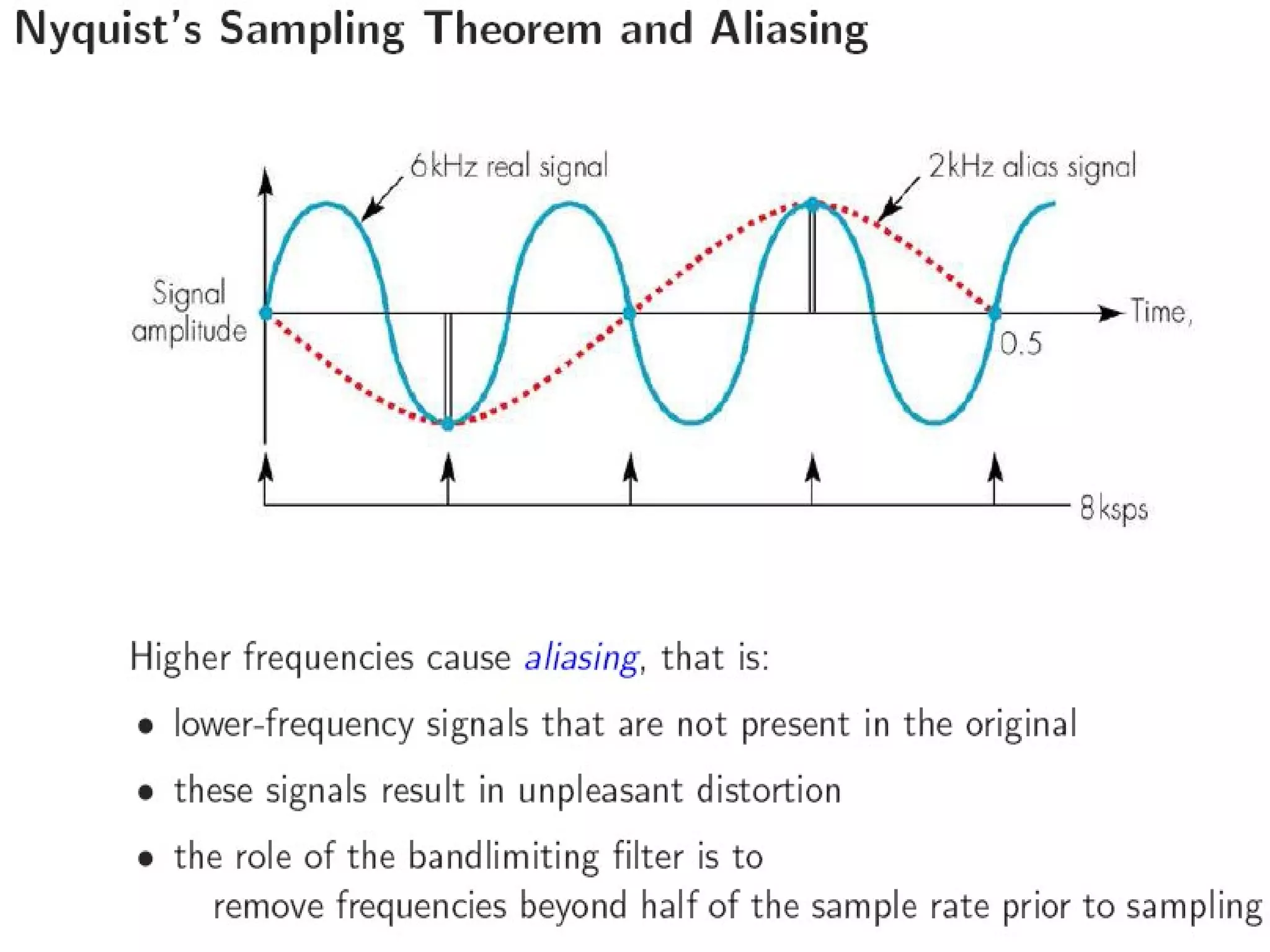

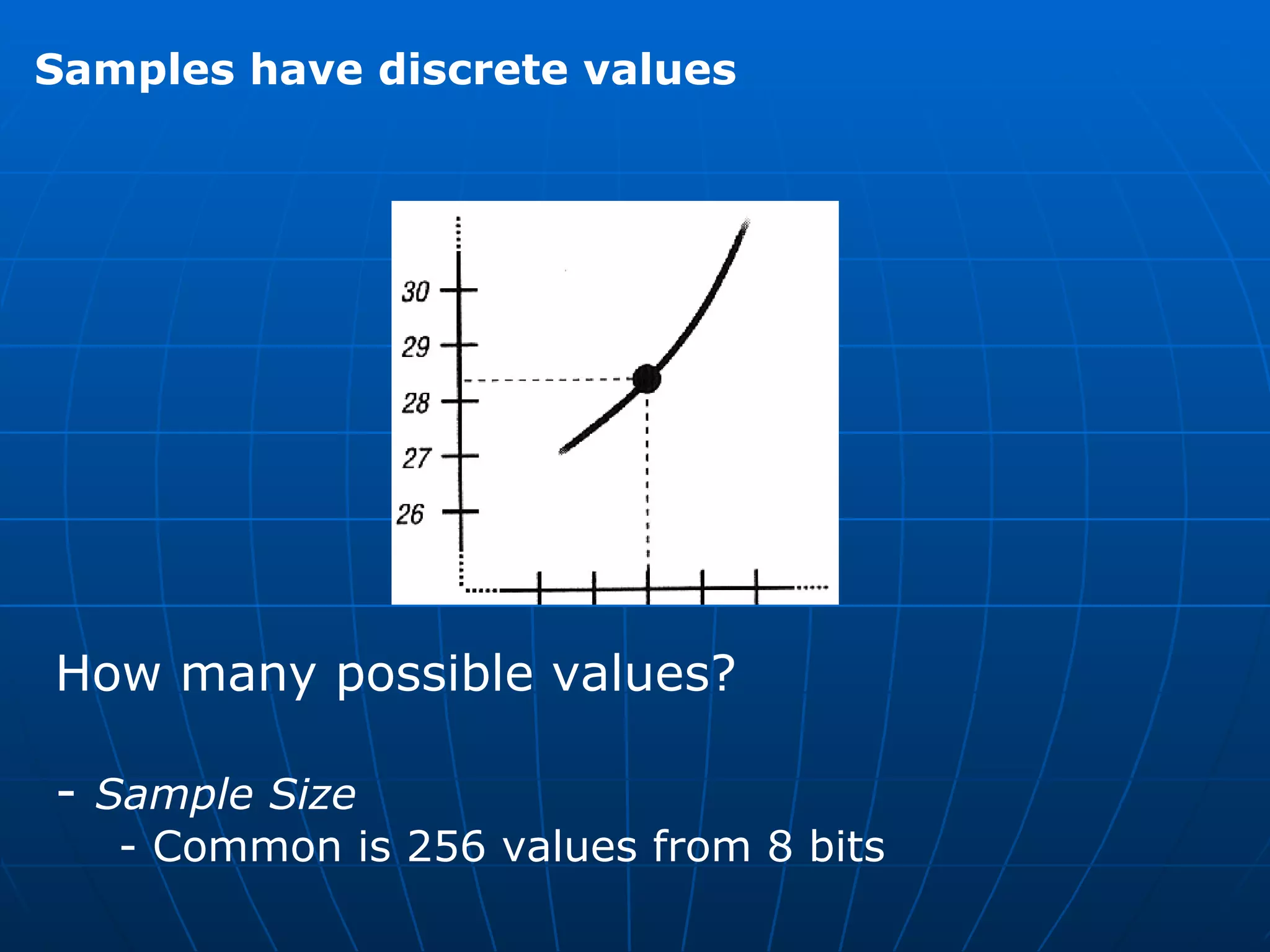

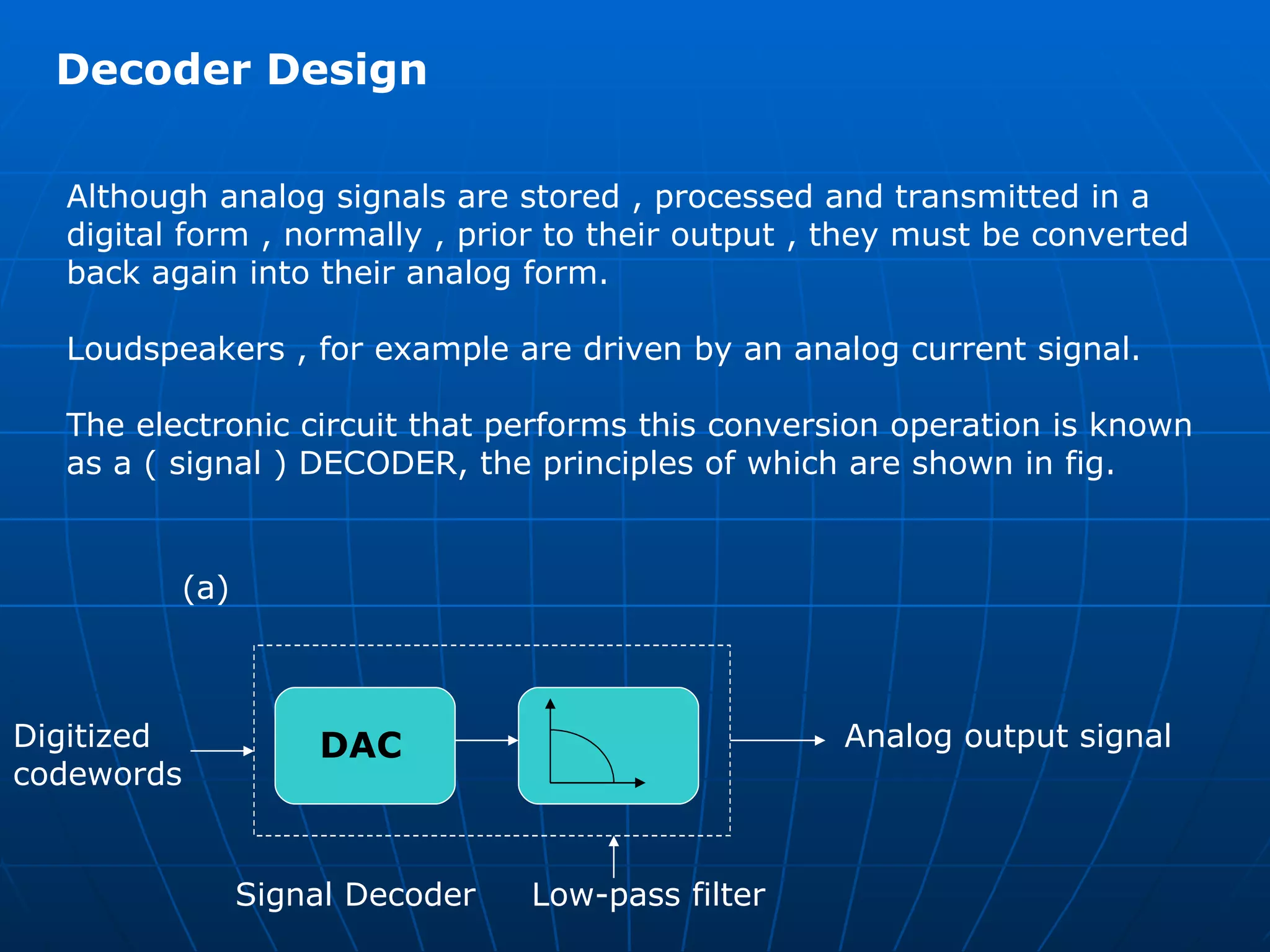

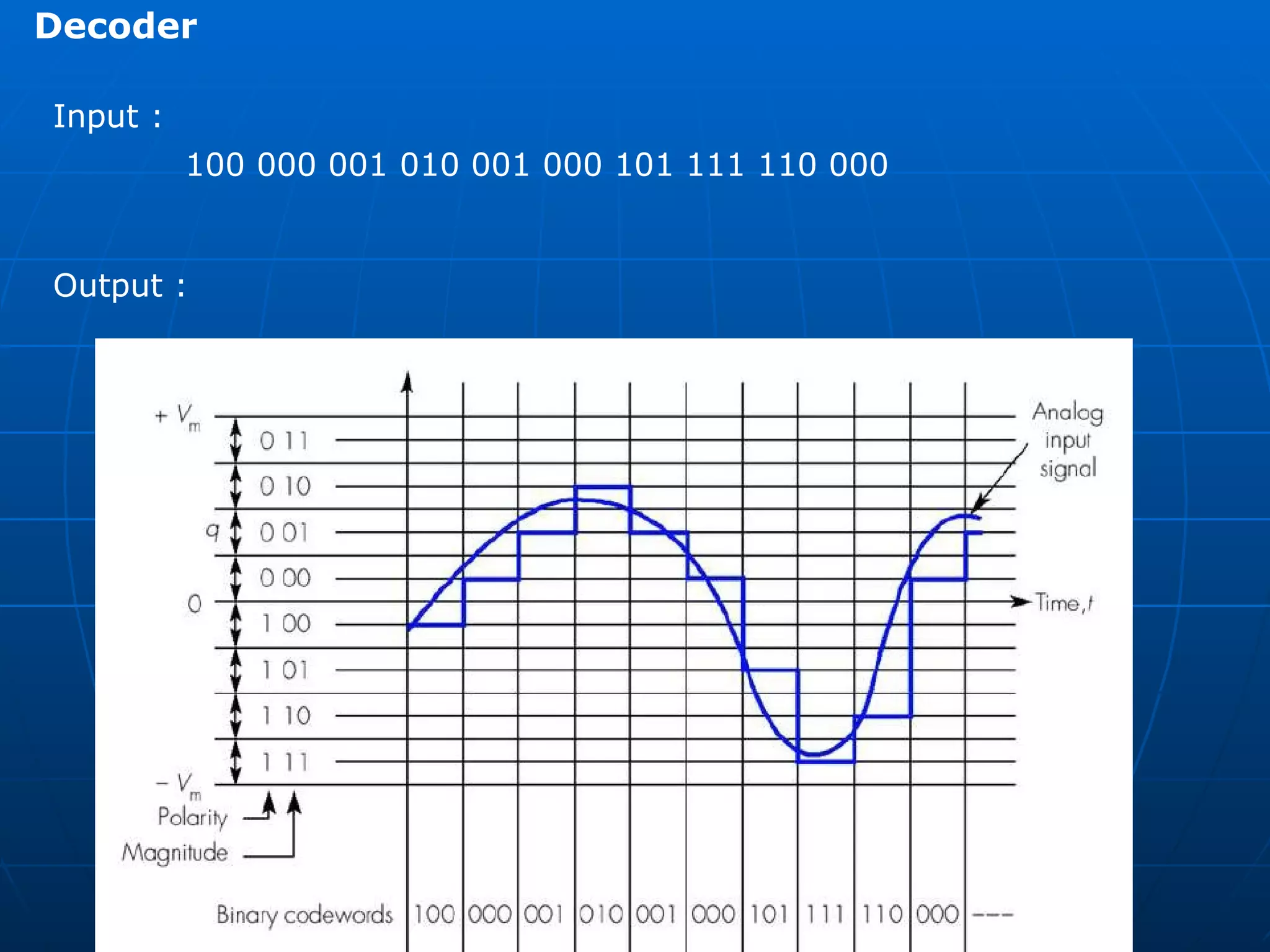

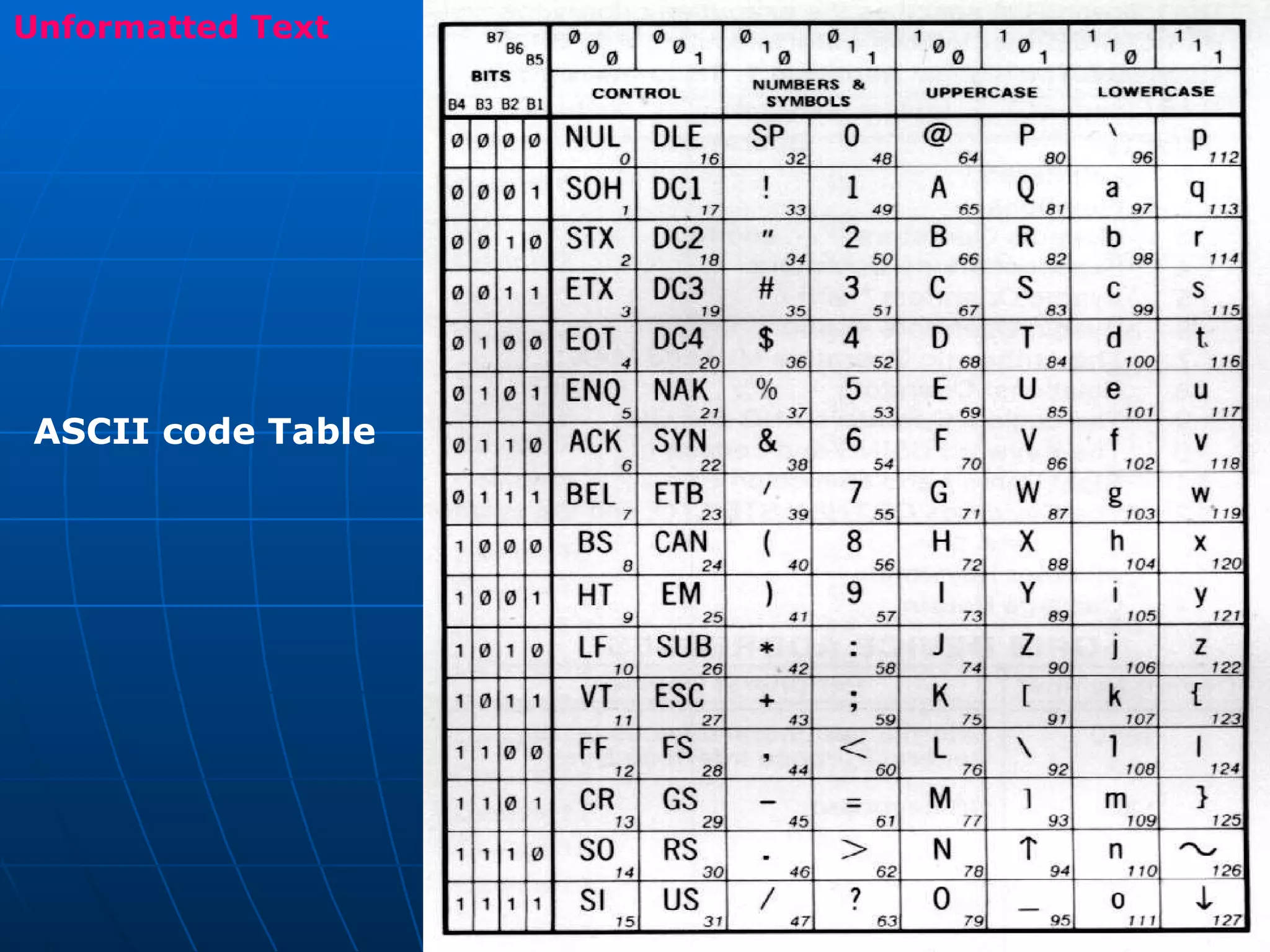

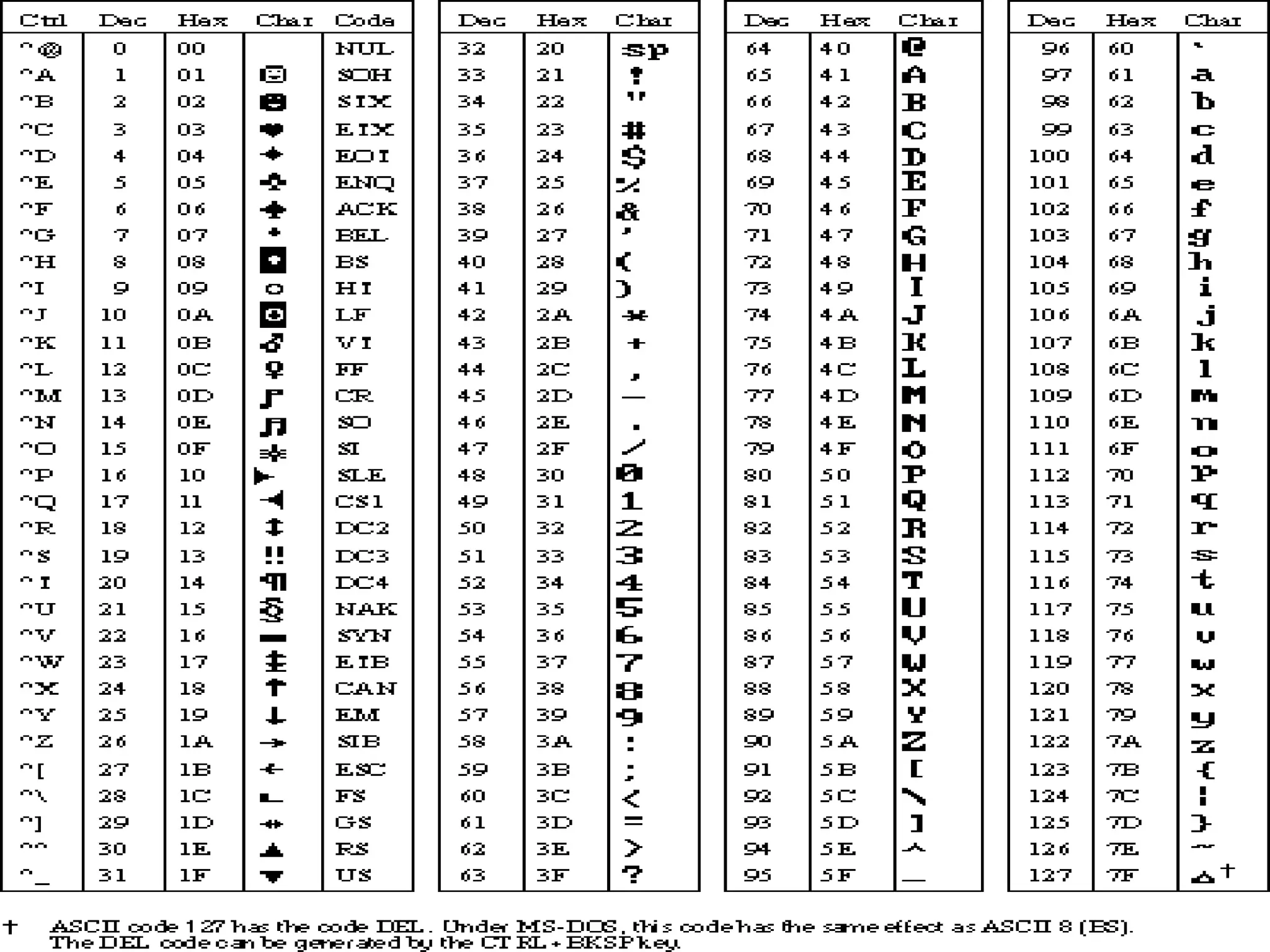

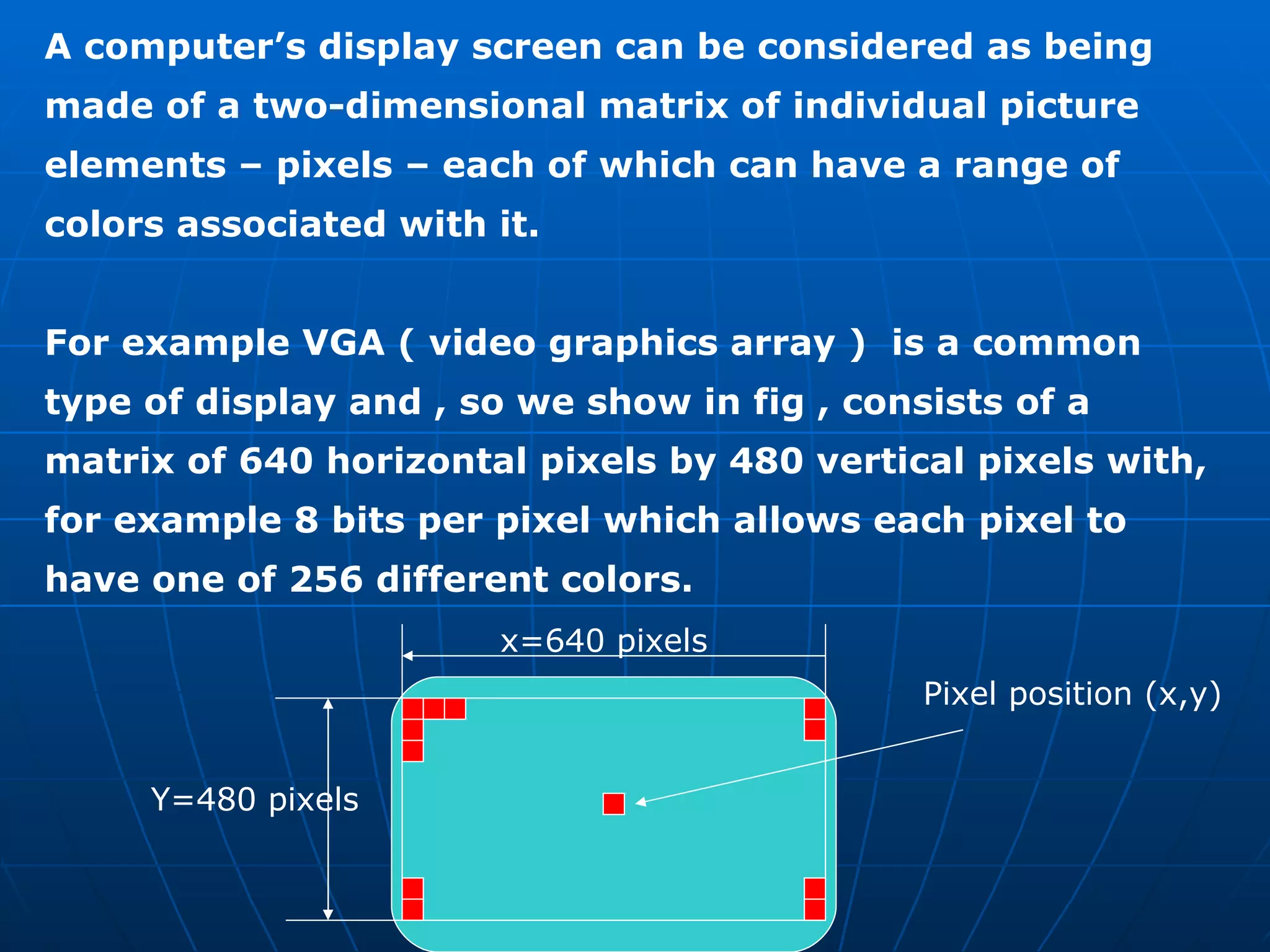

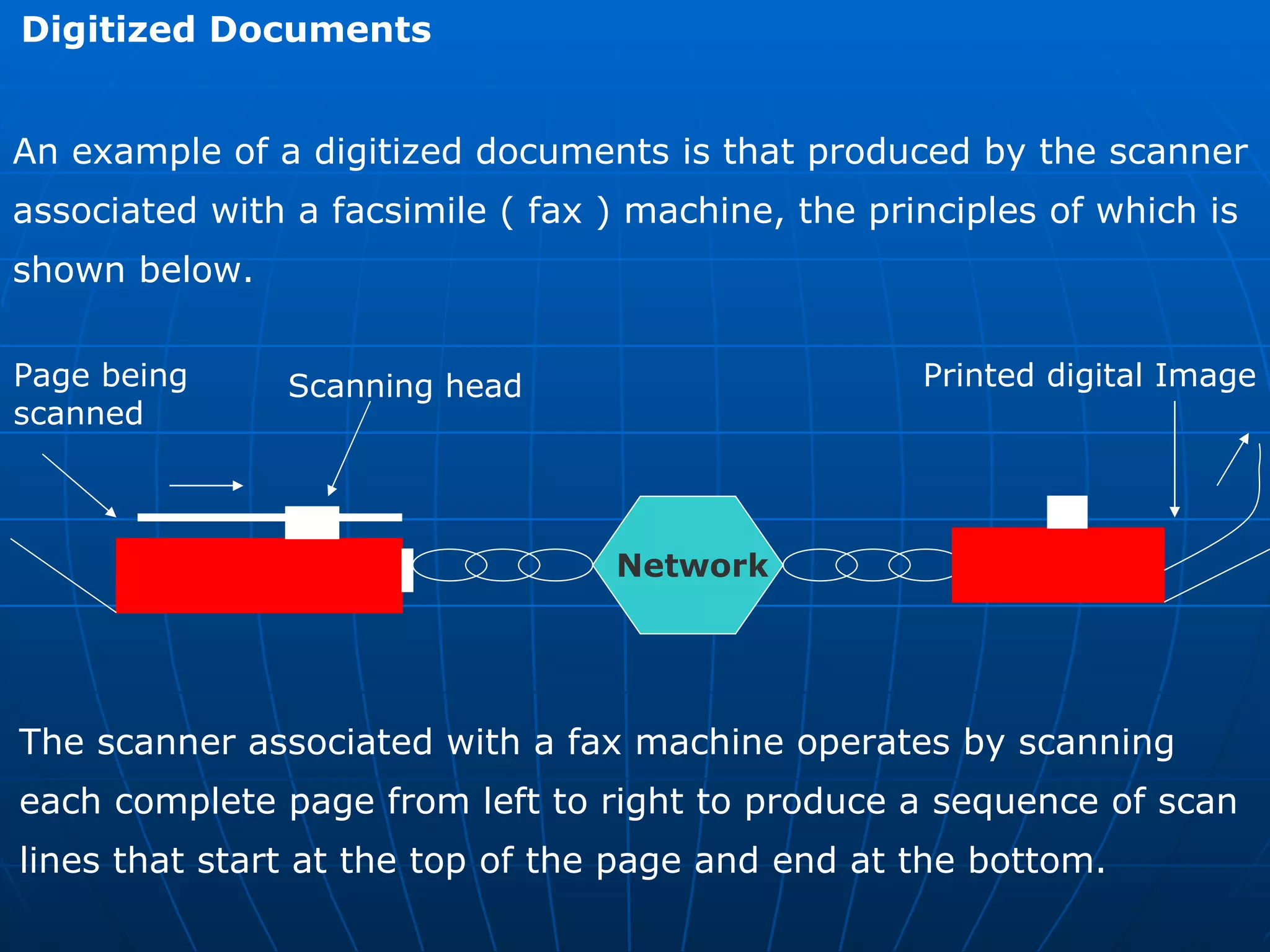

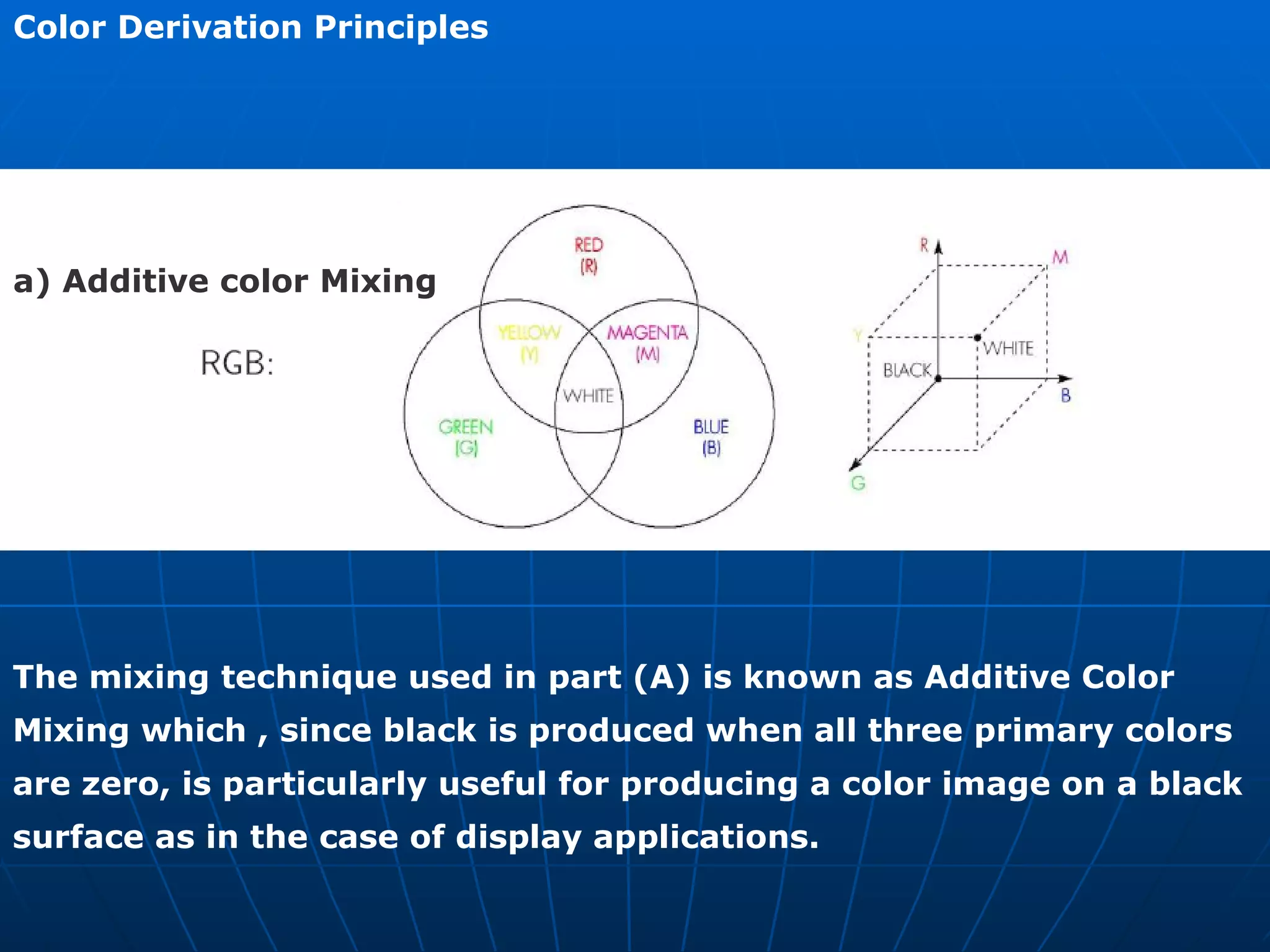

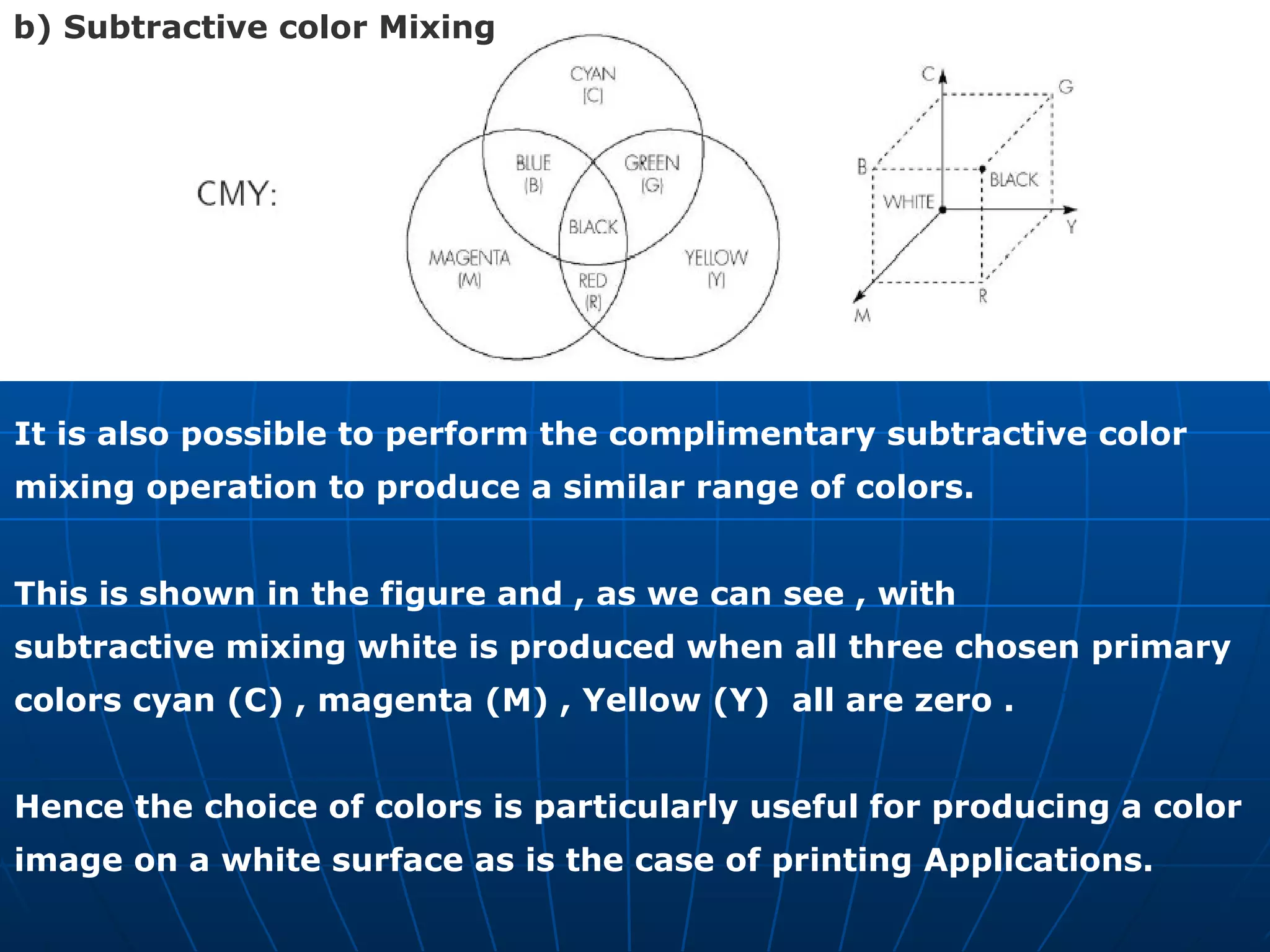

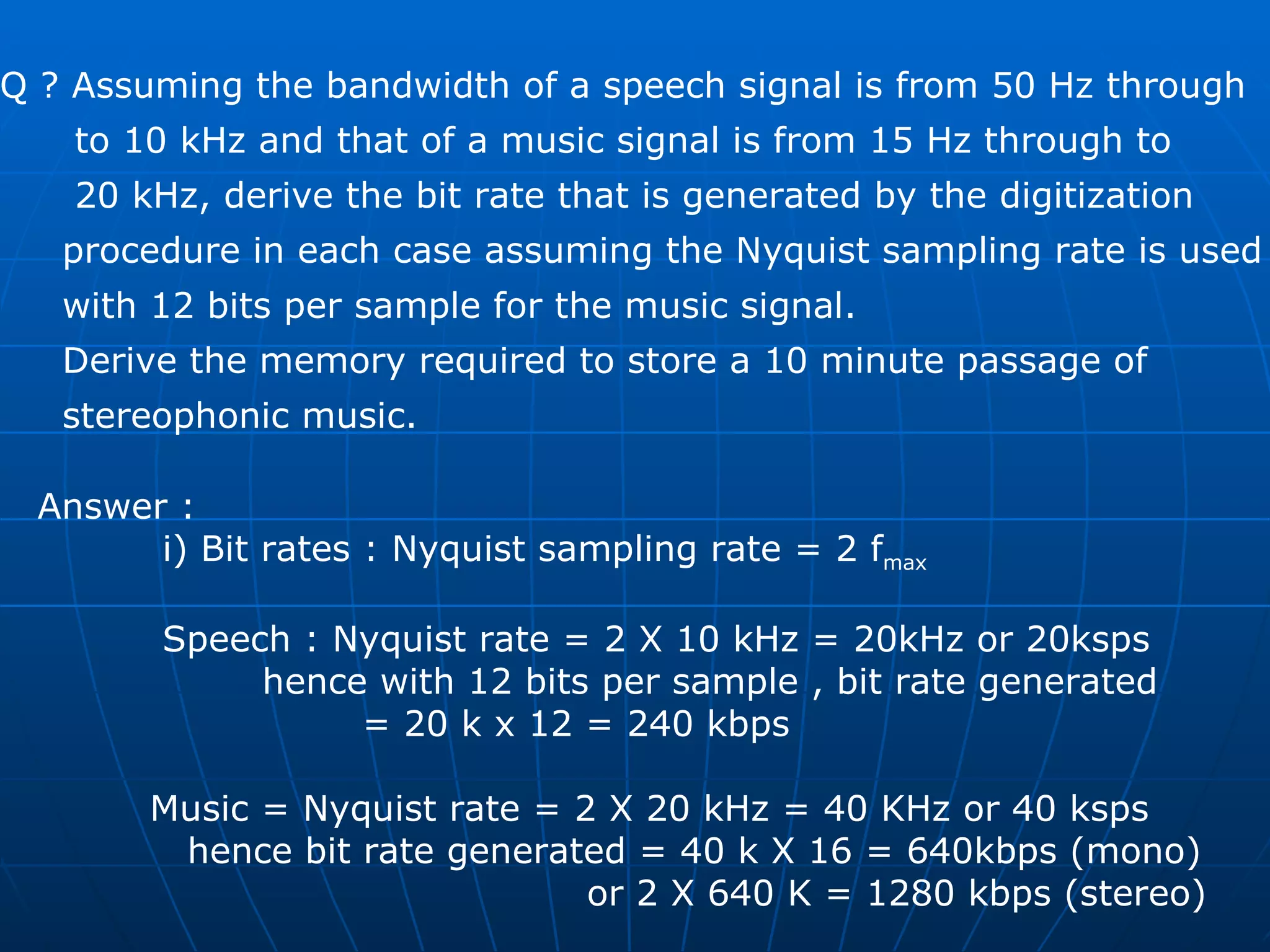

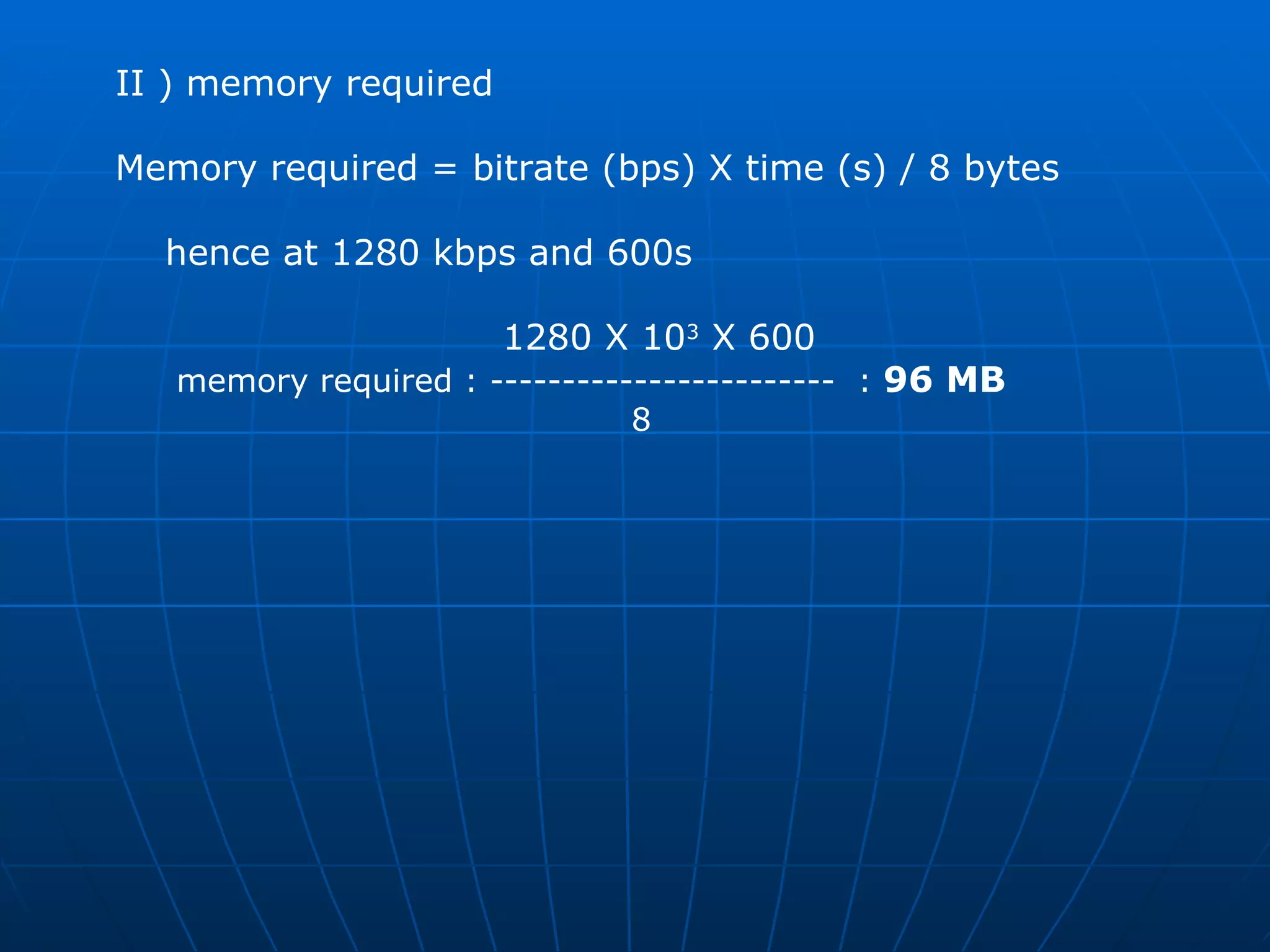

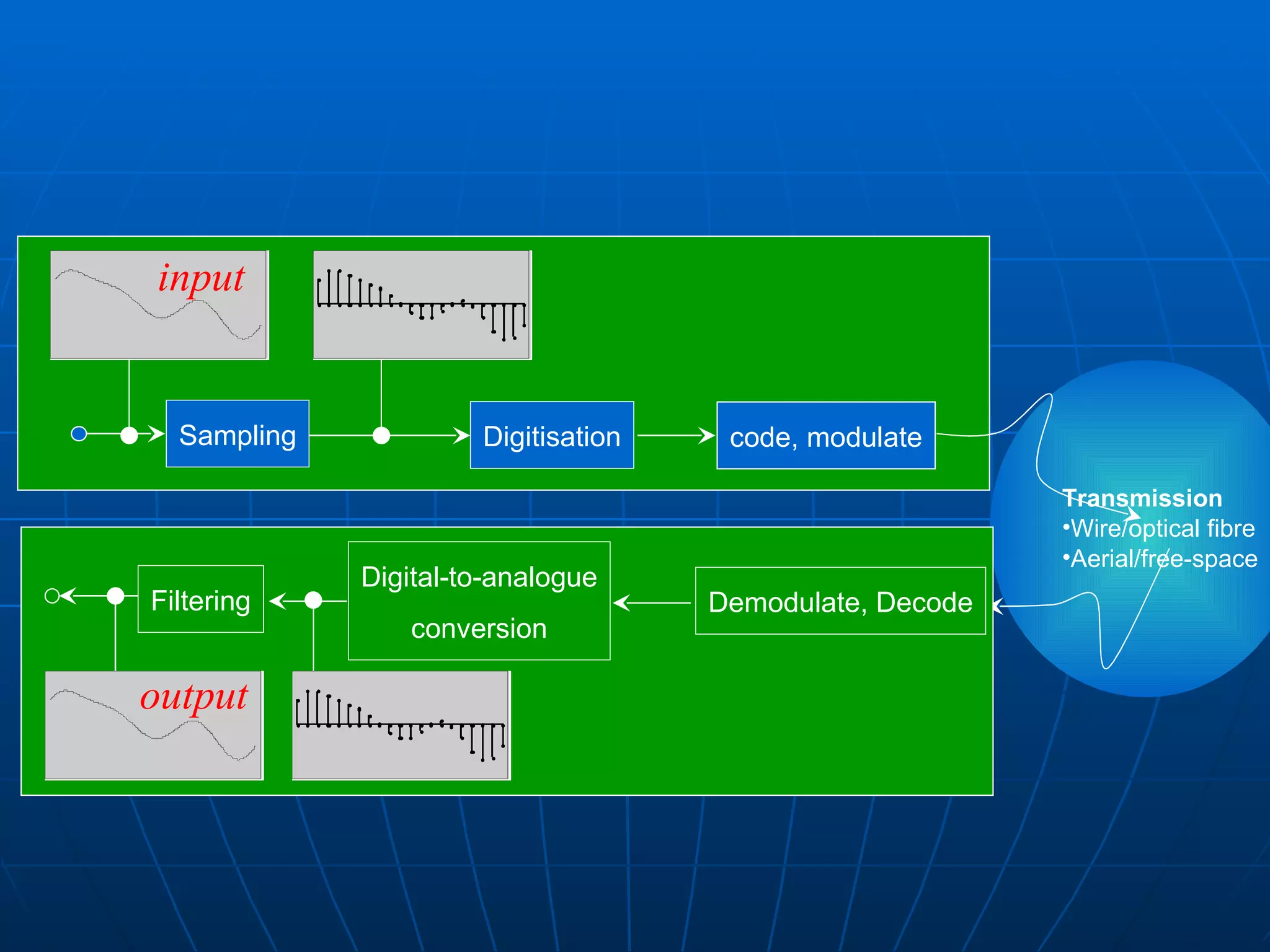

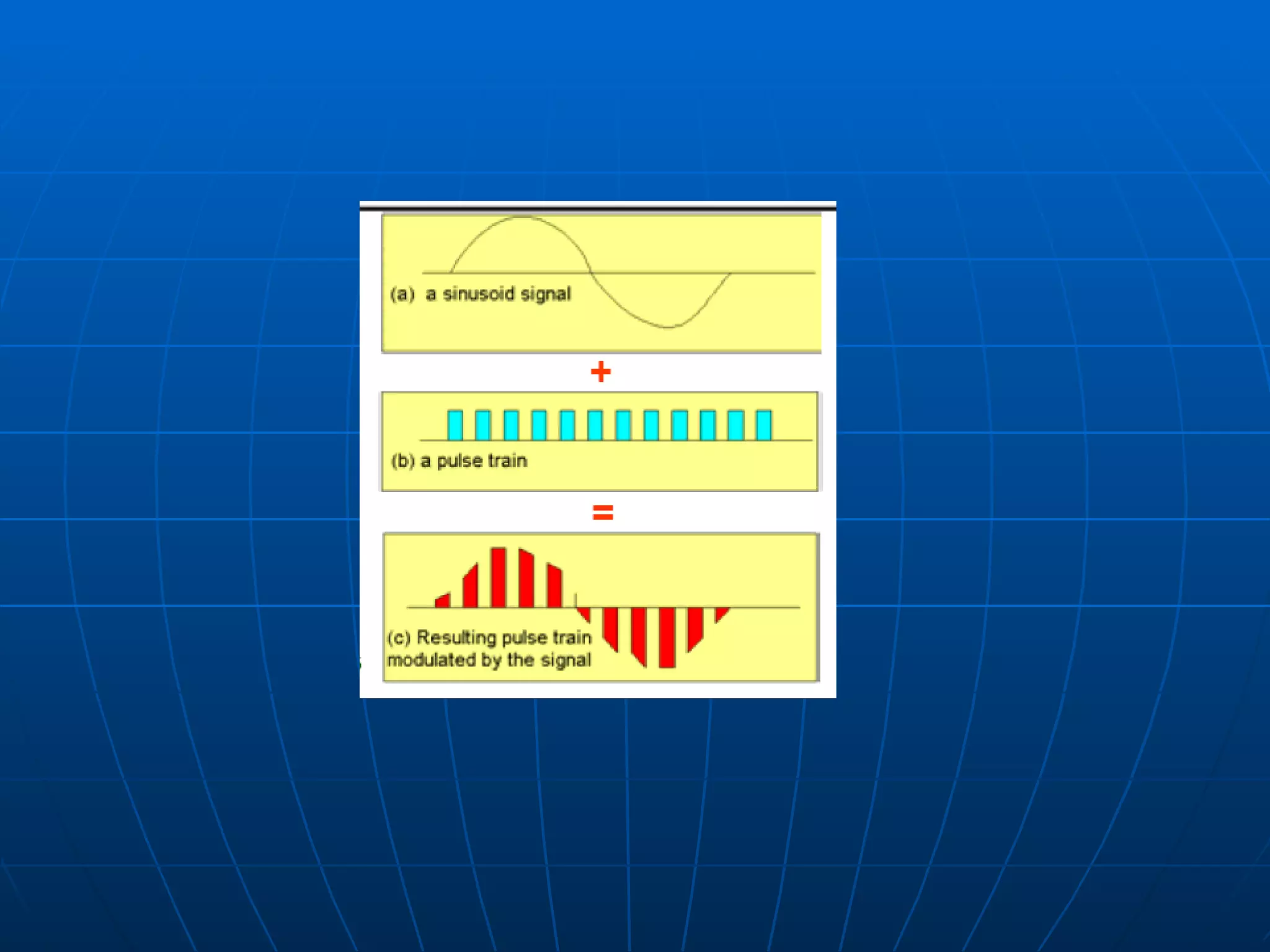

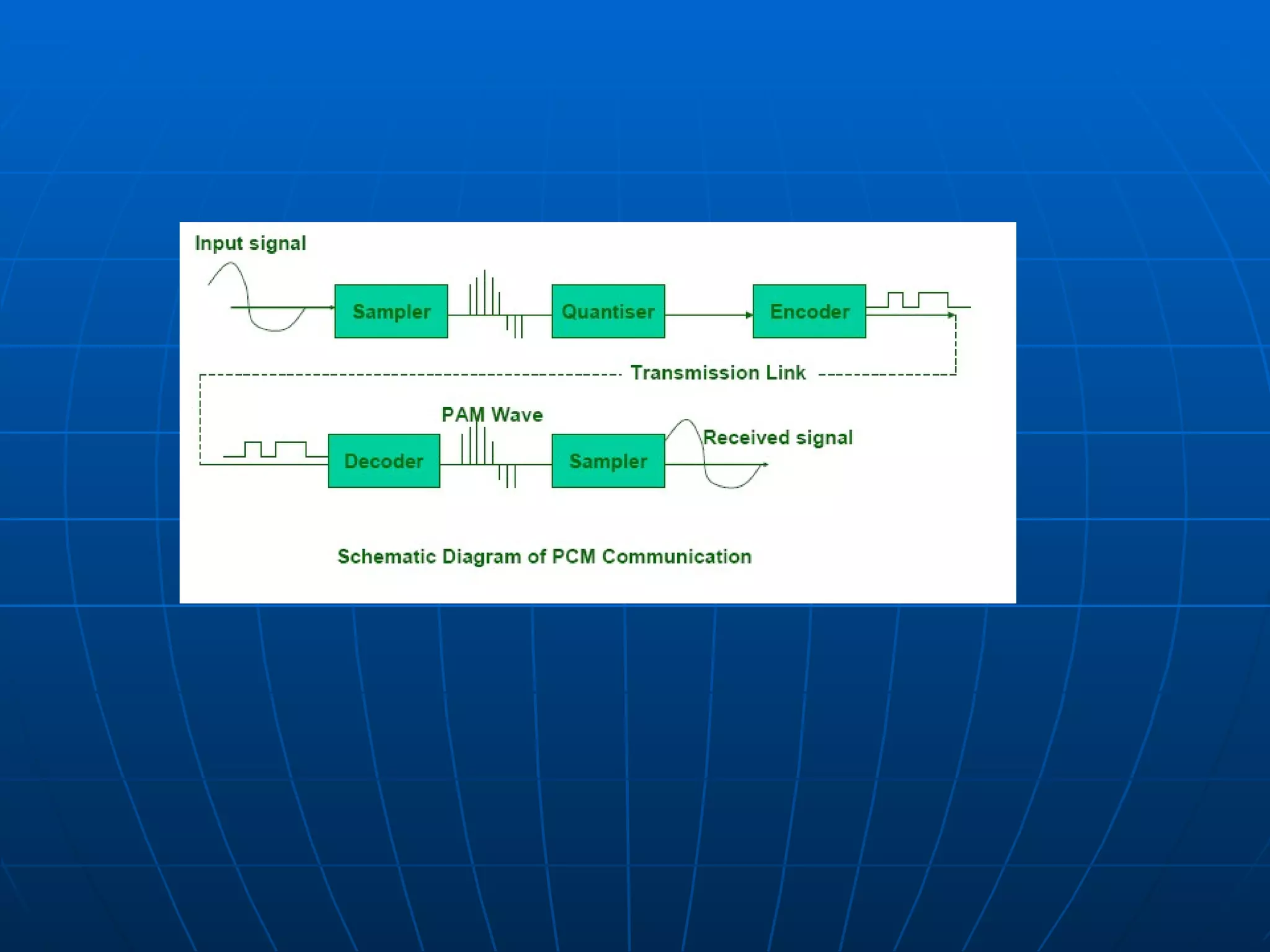

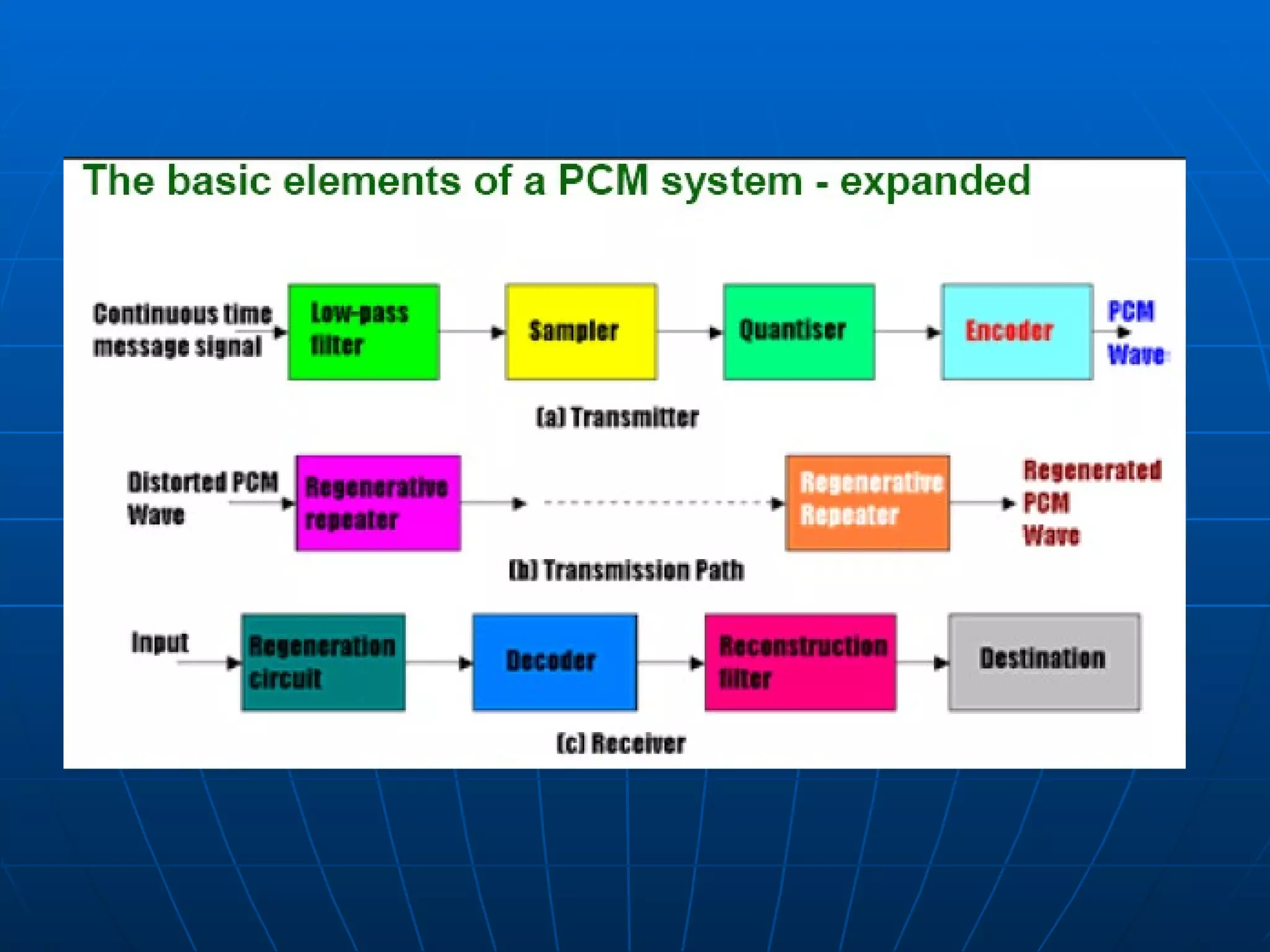

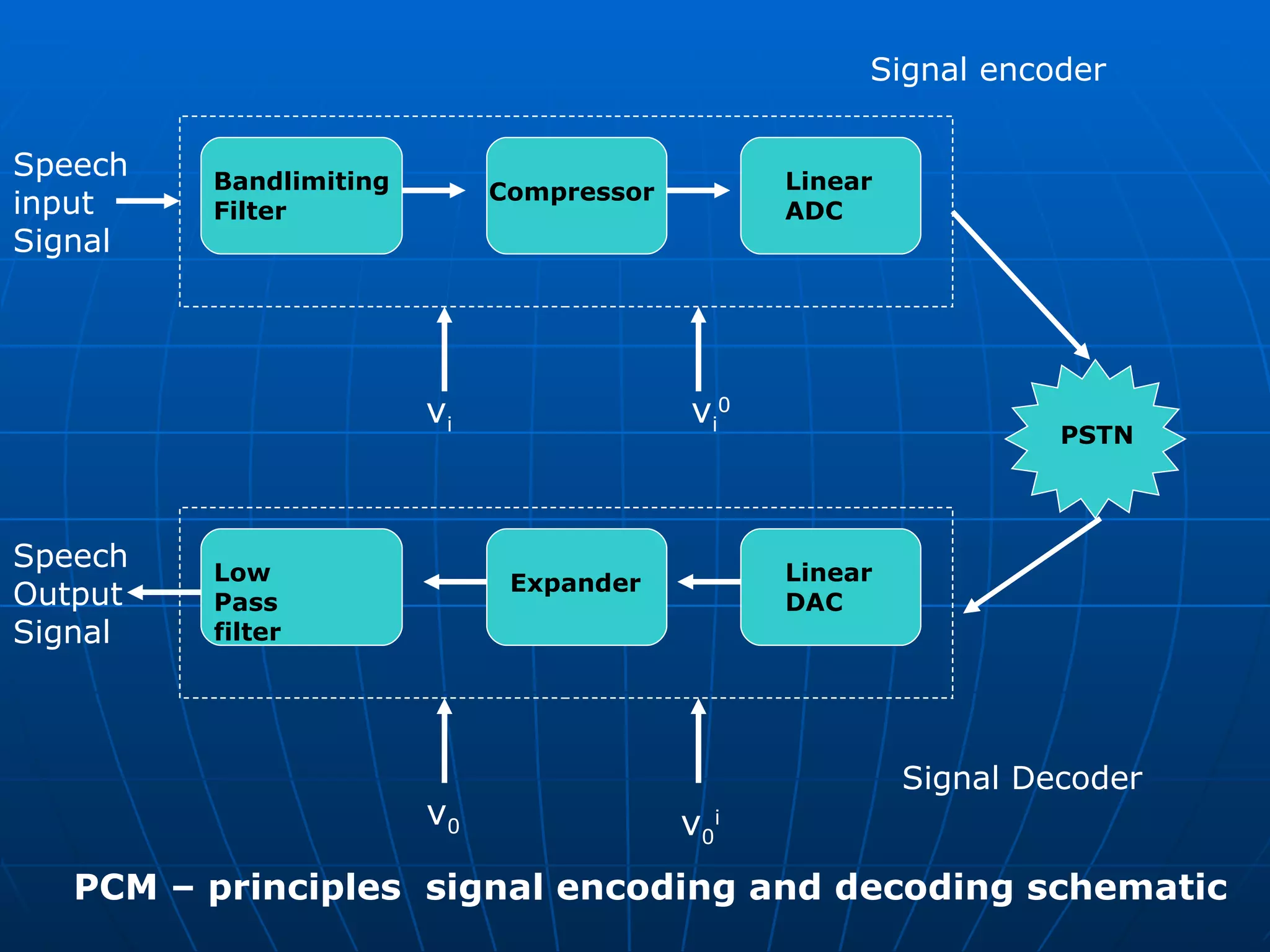

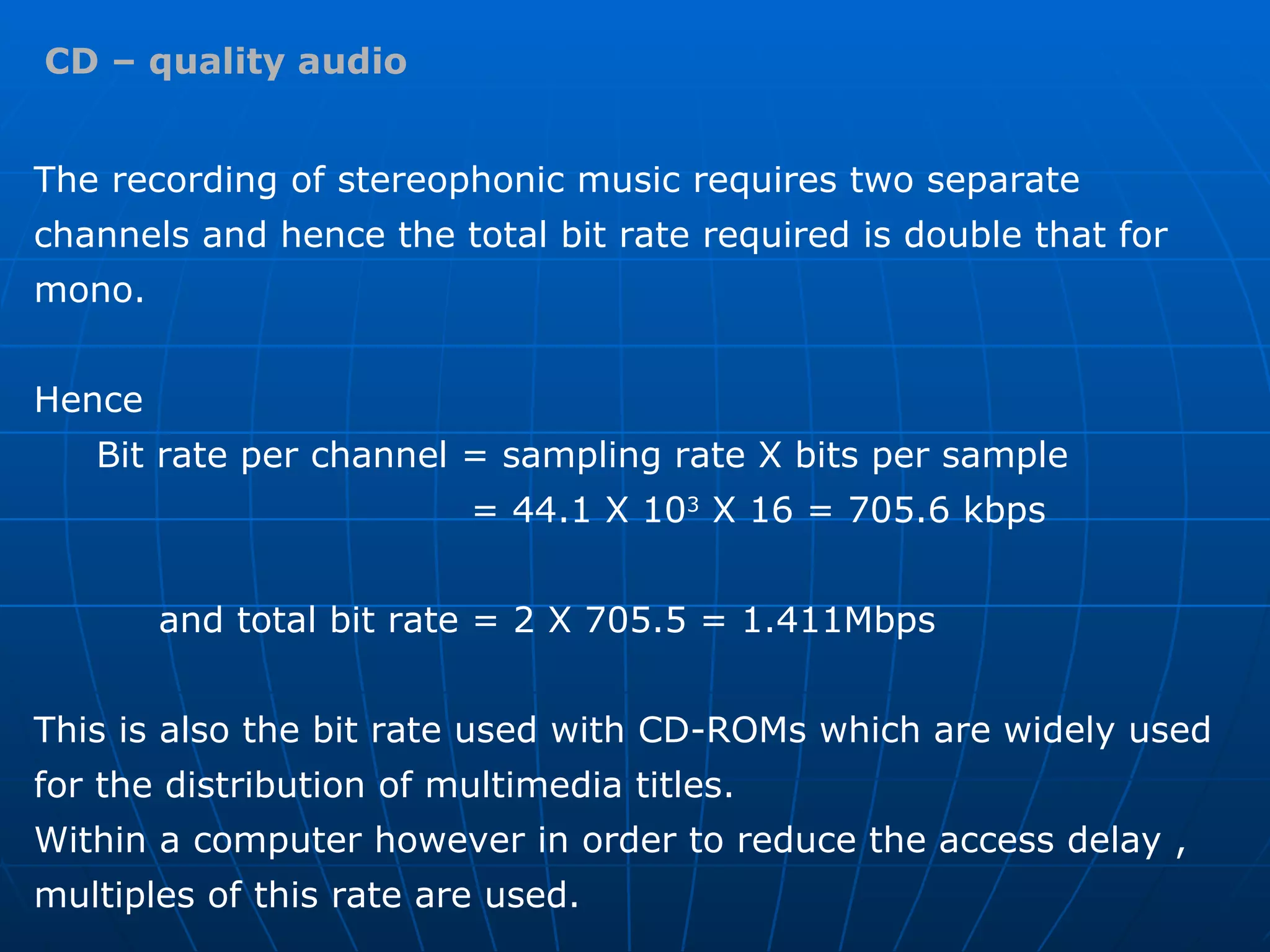

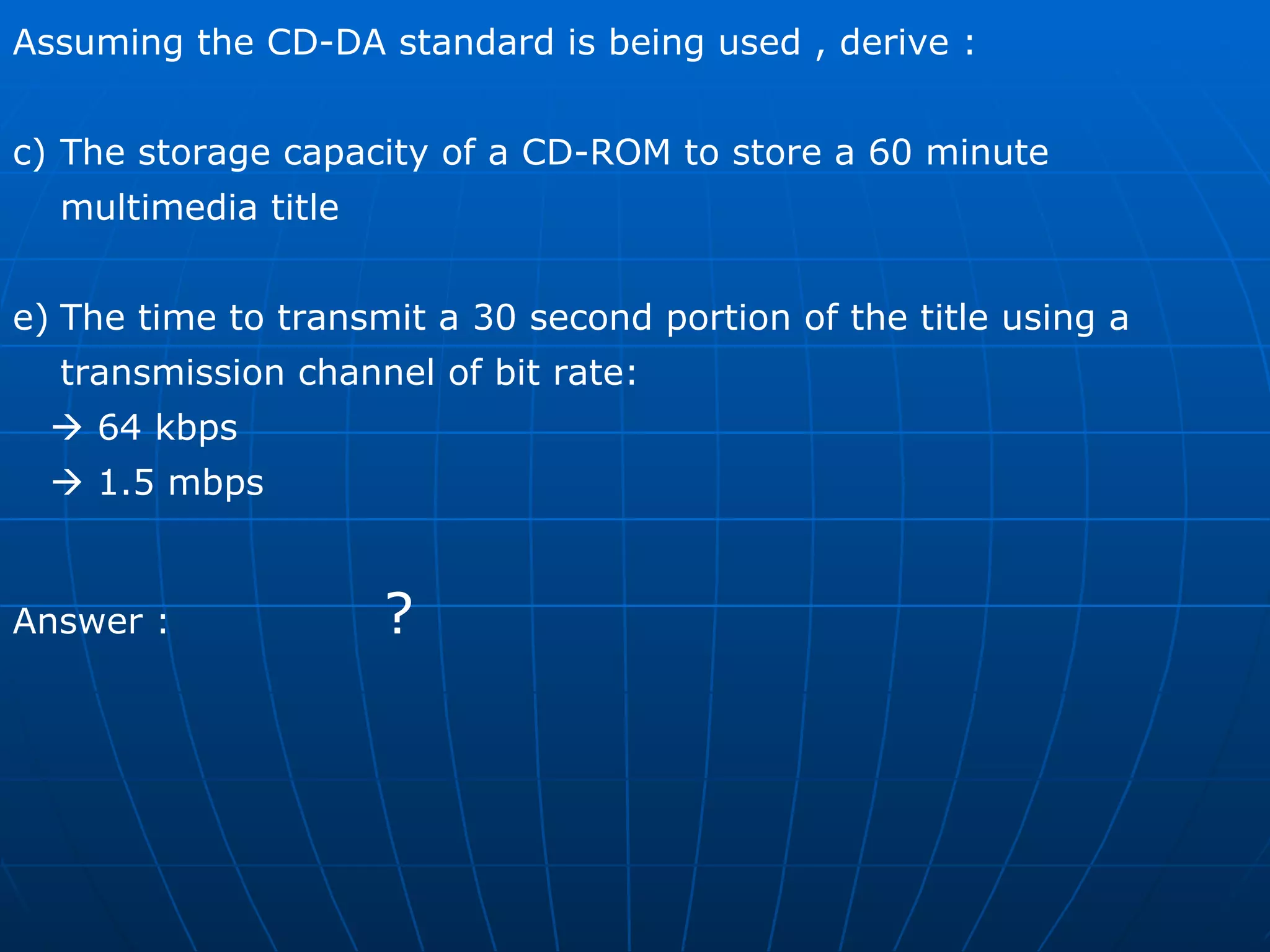

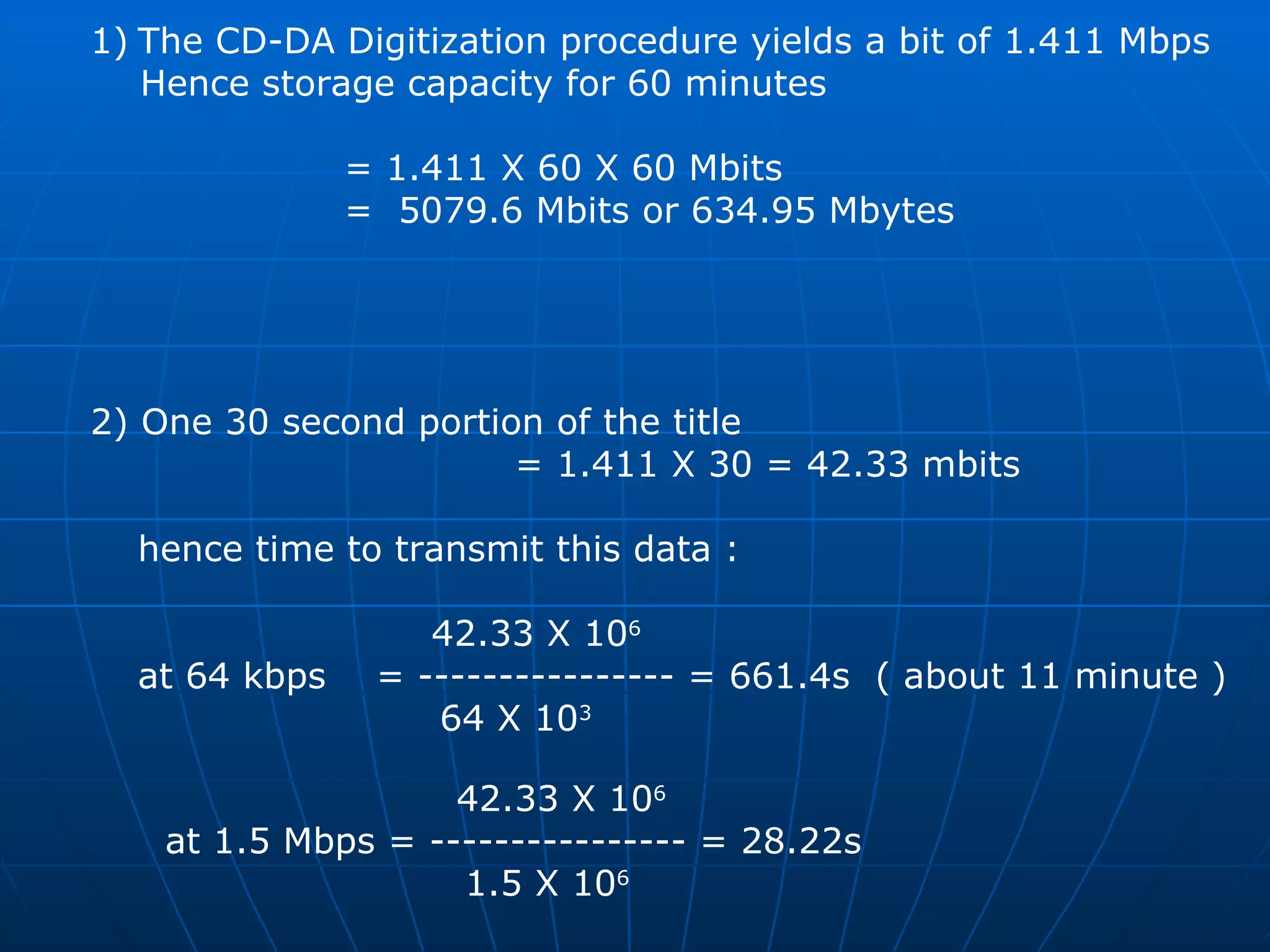

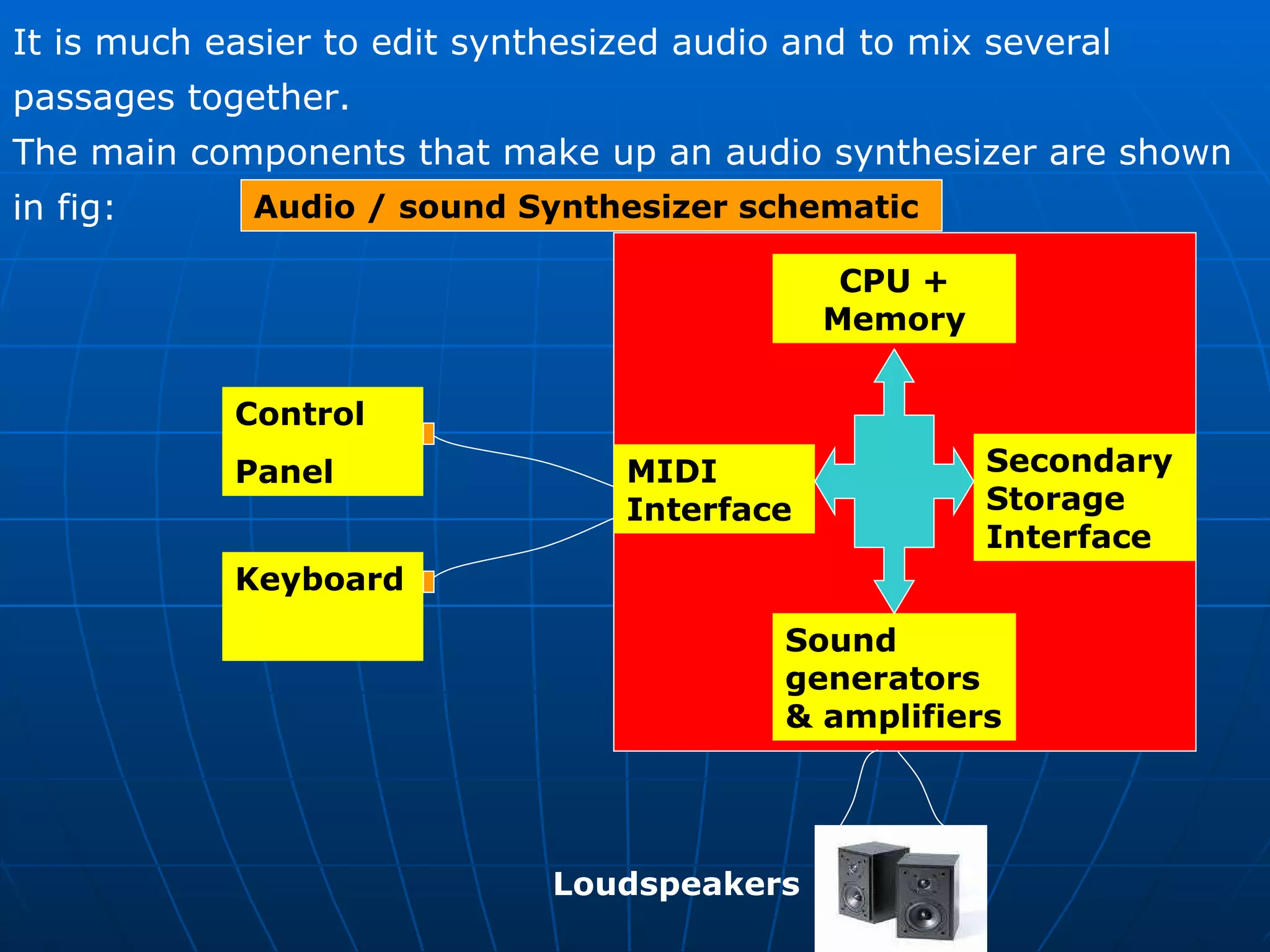

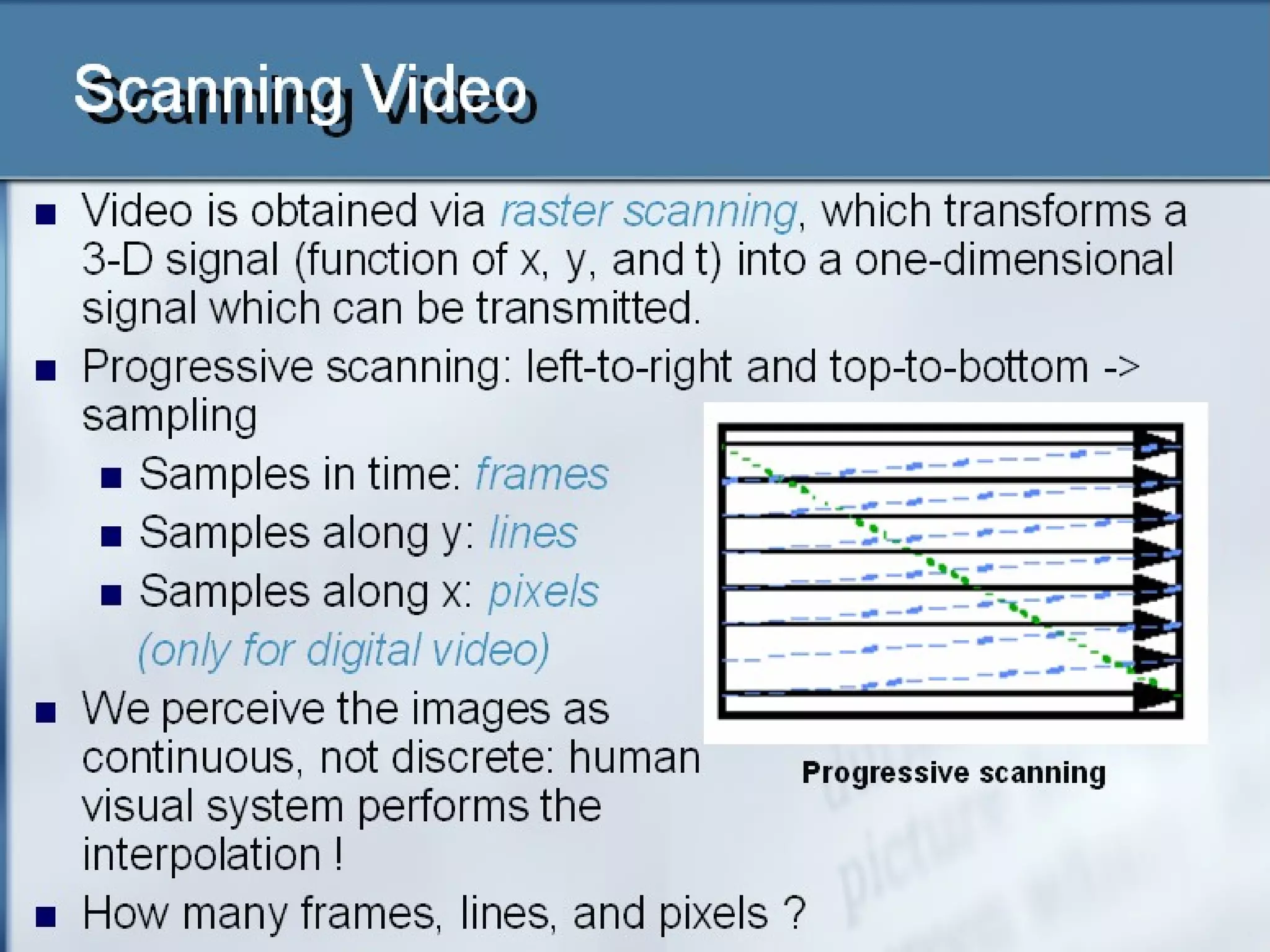

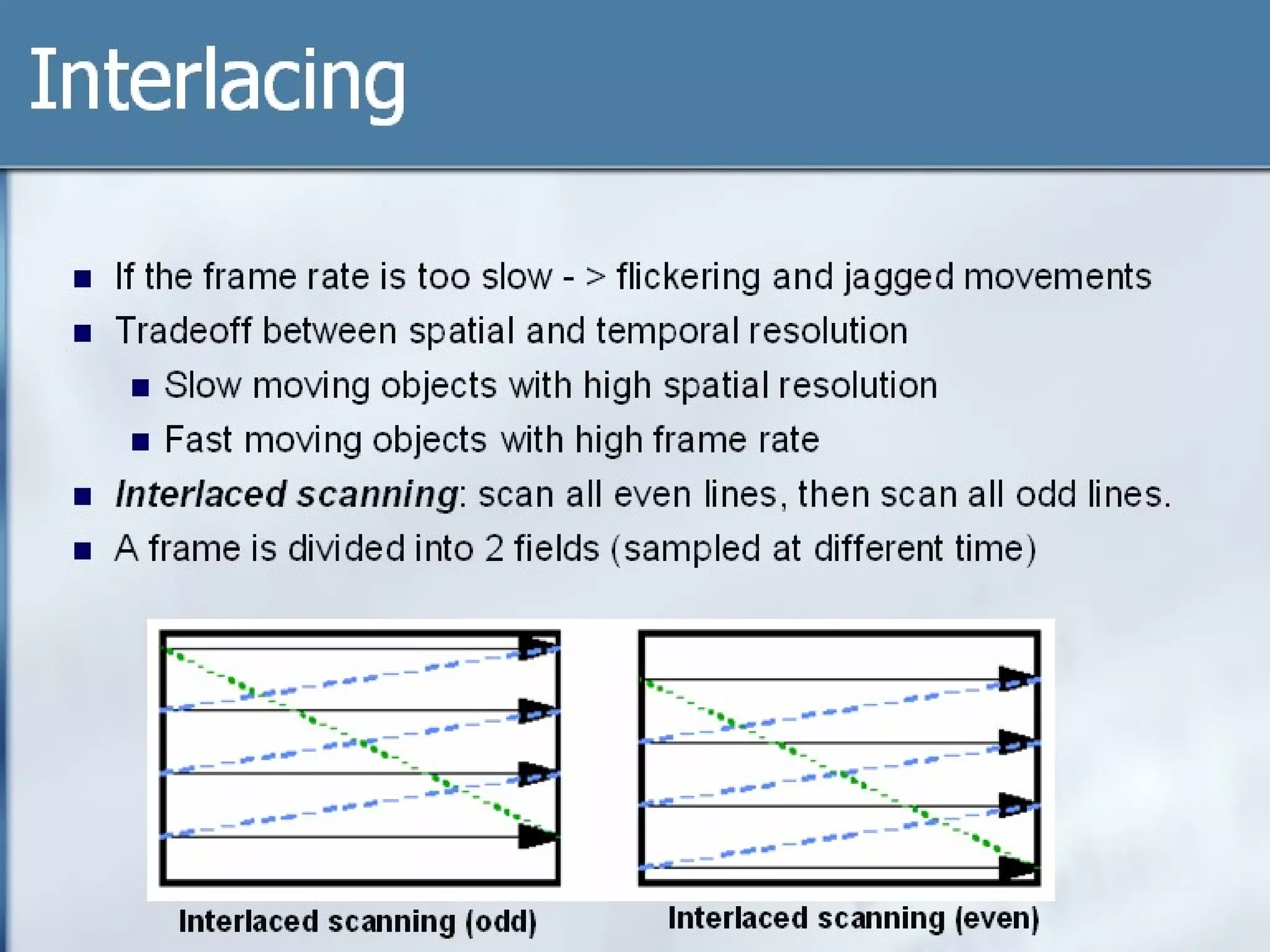

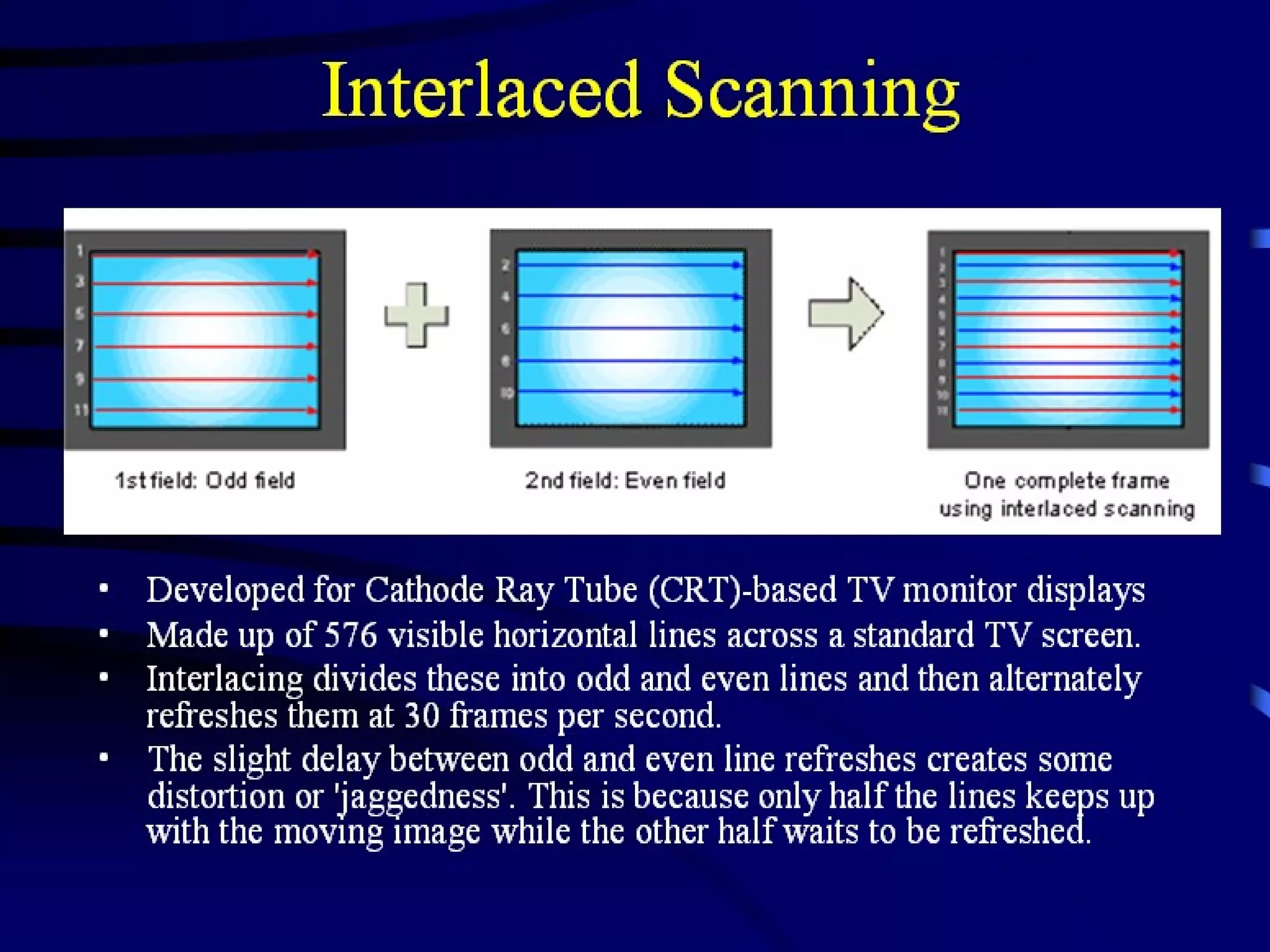

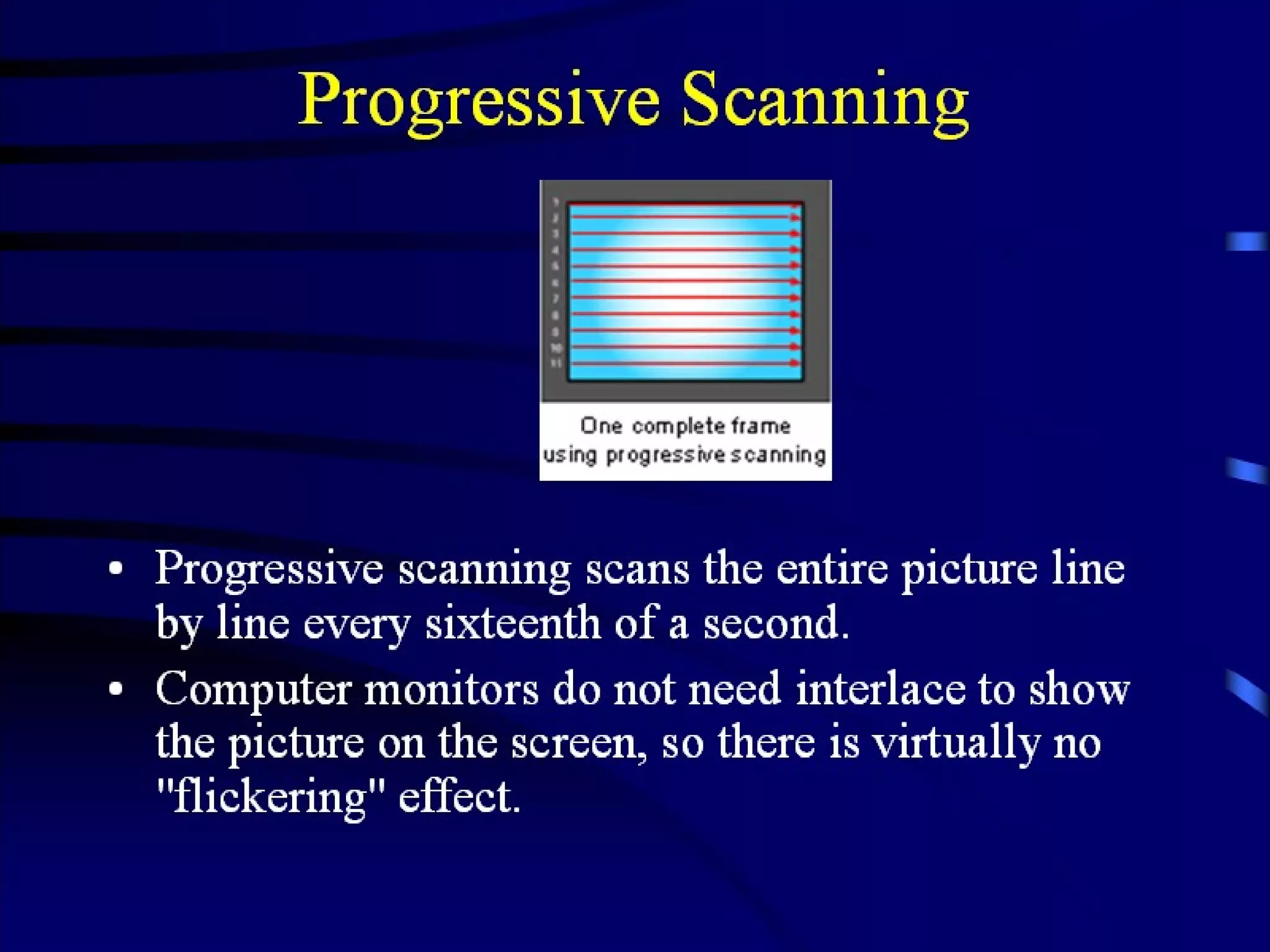

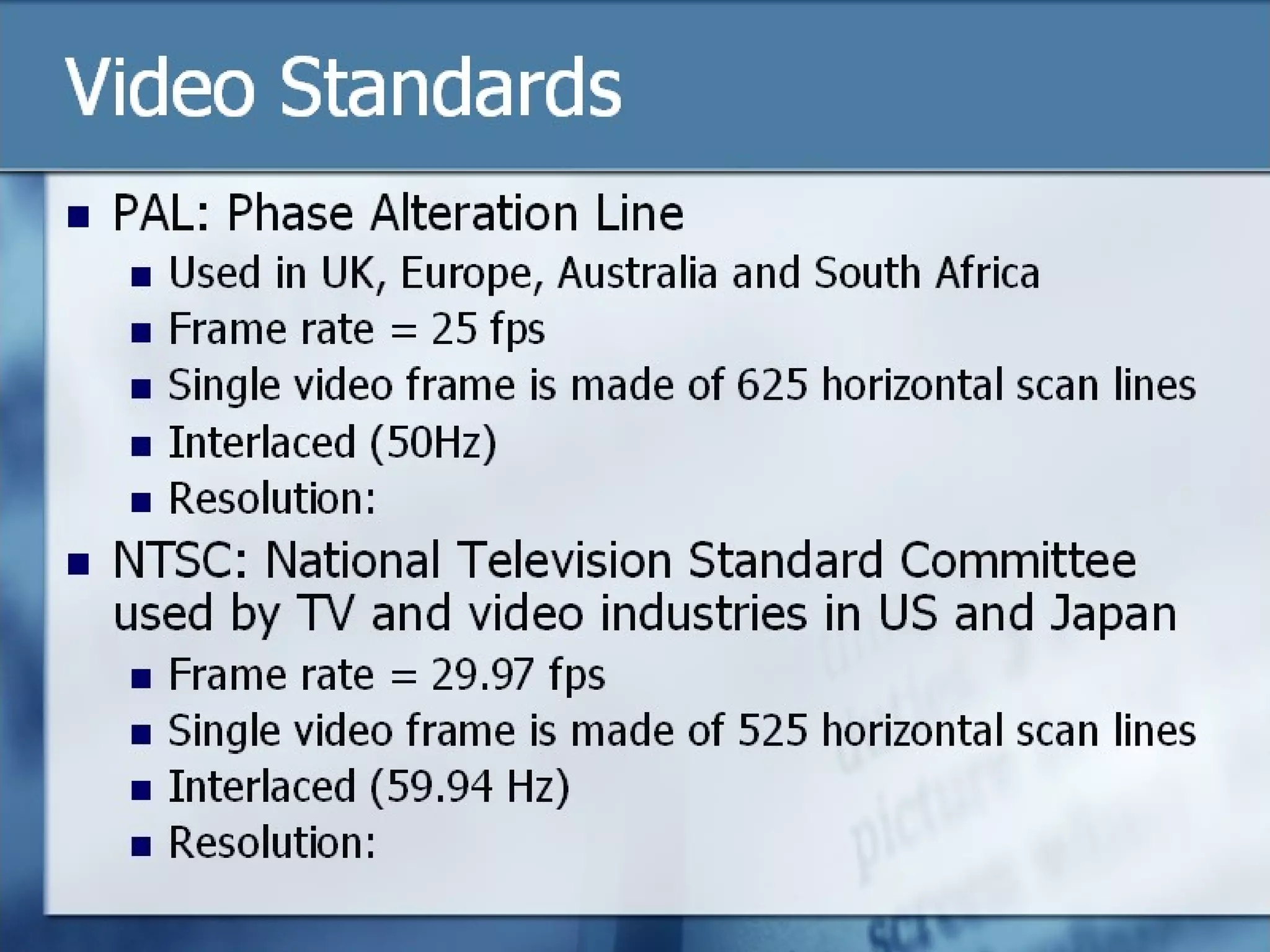

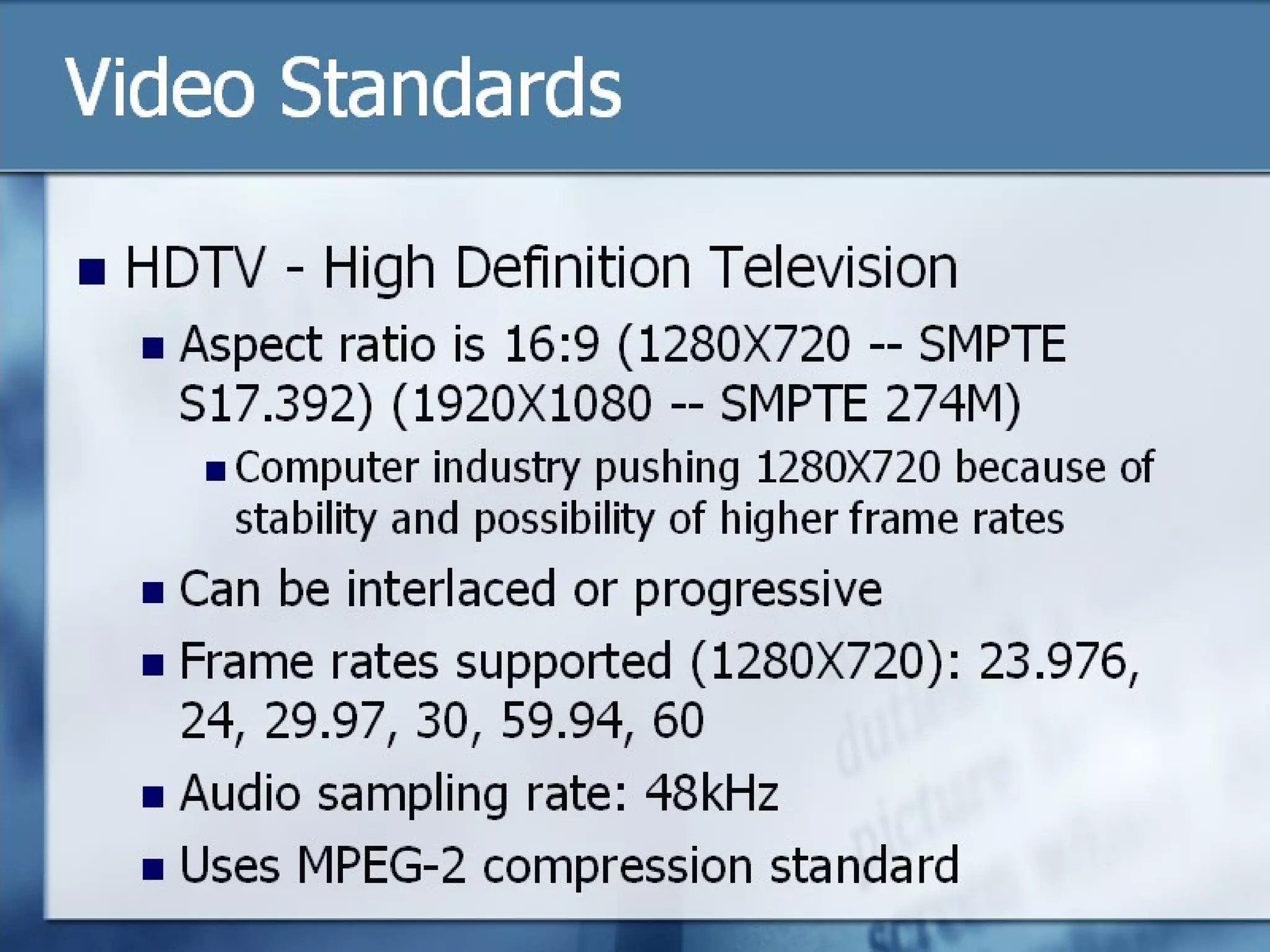

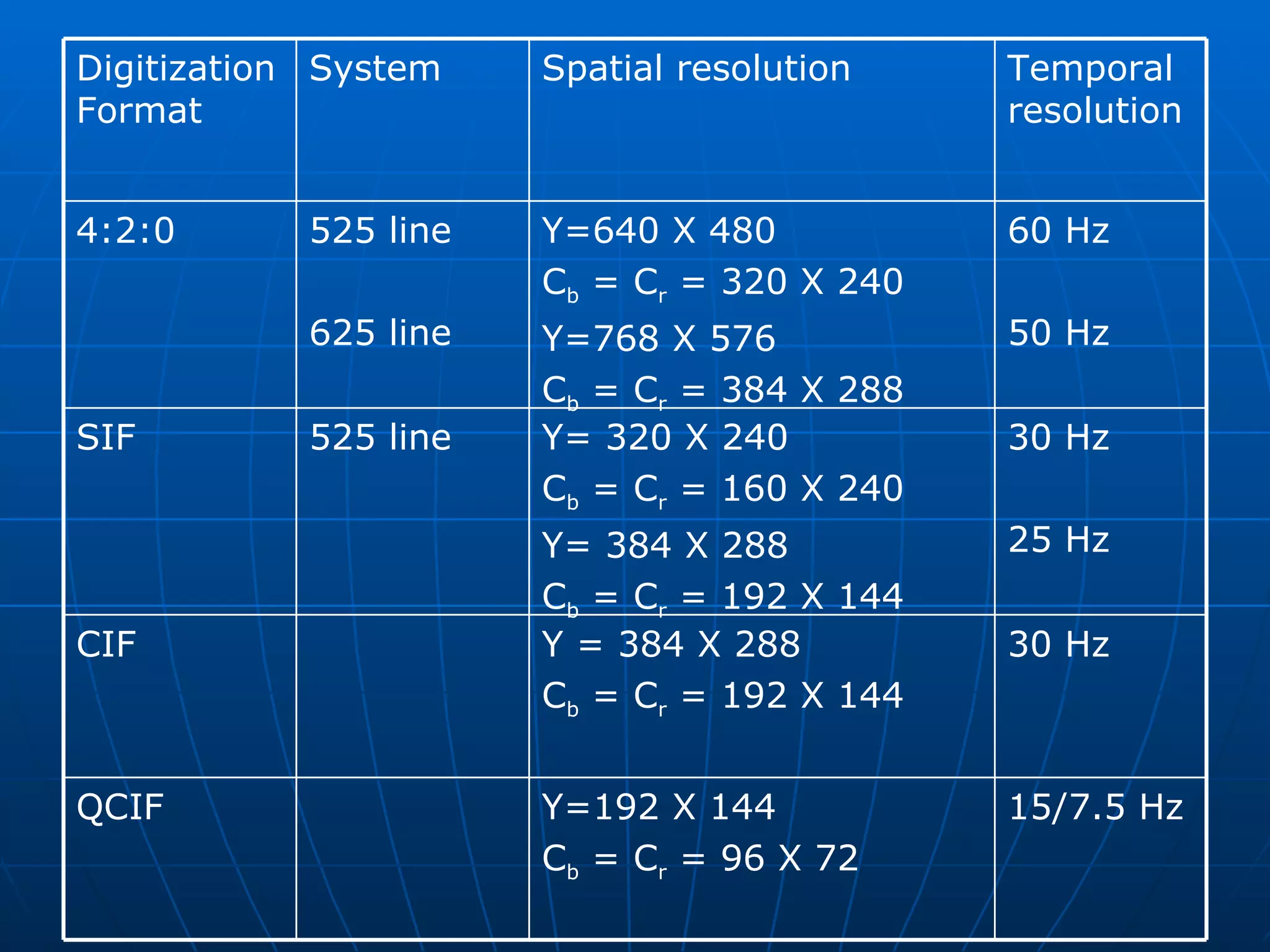

This document discusses the principles of multimedia information representation and digitization. It covers the media types used in multimedia (text, images, audio, and video), explains how each type is represented digitally, and describes the encoding and decoding processes that convert analog signals to digital form and back again. It also covers digital sampling, quantization, signal bandwidth, encoder design, and the formats used to represent images and text.
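The sampling and quantization steps mentioned above can be illustrated with a small sketch. It assumes a band-limited signal with amplitudes in the range [-1, 1], a uniform quantizer with 2^n levels, and reconstruction at the midpoint of each quantization interval. All function names are hypothetical and chosen for illustration. The sampling rate follows the Nyquist criterion (at least twice the highest frequency component). The dynamic range uses the standard result of roughly 6.02 dB per bit.

```python
import math

def nyquist_rate(max_freq_hz: float) -> float:
    """Minimum sampling rate that avoids aliasing for a band-limited signal."""
    return 2.0 * max_freq_hz

def sample(signal, duration_s: float, rate_hz: float) -> list[float]:
    """Sample a continuous-time function signal(t) at uniform intervals of 1/rate_hz."""
    n = int(round(duration_s * rate_hz))
    return [signal(k / rate_hz) for k in range(n)]

def quantize(x: float, bits: int, v_min: float = -1.0, v_max: float = 1.0) -> int:
    """Map an amplitude in [v_min, v_max] to one of 2**bits integer codes (uniform quantizer)."""
    levels = 2 ** bits
    step = (v_max - v_min) / levels
    code = int((x - v_min) / step)
    # Clamp so that x == v_max falls into the top level instead of overflowing.
    return min(max(code, 0), levels - 1)

def dequantize(code: int, bits: int, v_min: float = -1.0, v_max: float = 1.0) -> float:
    """Reconstruct the midpoint of the quantization interval for a code."""
    step = (v_max - v_min) / 2 ** bits
    return v_min + (code + 0.5) * step

def dynamic_range_db(bits: int) -> float:
    """Ratio of the largest to the smallest representable level, in decibels."""
    return 20 * math.log10(2 ** bits)

if __name__ == "__main__":
    tone = lambda t: math.sin(2 * math.pi * 1000 * t)  # 1 kHz test tone (assumed input)
    rate = 8000.0                                      # comfortably above nyquist_rate(1000)
    samples = sample(tone, 0.001, rate)
    codes = [quantize(s, 8) for s in samples]
    print("Nyquist rate:", nyquist_rate(1000), "Hz")
    print("8-bit codes:", codes)
    print("Dynamic range:", round(dynamic_range_db(8), 2), "dB")
```

With midpoint reconstruction, the quantization error for any in-range sample is at most half a step. Each extra bit therefore halves the worst-case error and adds about 6 dB of dynamic range.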