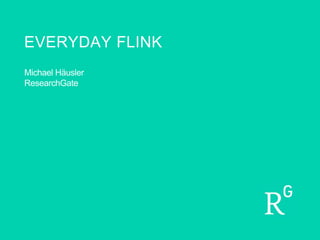

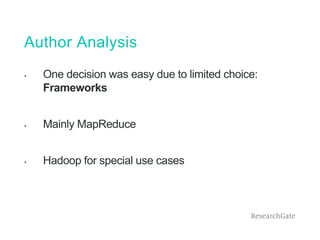

This document discusses different frameworks for big data processing at ResearchGate, including Hive, MapReduce, and Flink. It provides an example of using Hive to find the top 5 coauthors for each author based on publication data. Code snippets in Hive SQL and Java are included to implement the top k coauthors user defined aggregate function (UDAF) in Hive. The document evaluates different frameworks based on criteria like features, performance, and usability.

![Simple Use Case: Top 5 Coauthors

publication = {

"publicationUid": 7,

"title": "Foo",

"authorships": [

{

"authorUid": 23,

"authorName": "Jane"

},

{

"authorUid": 25,

"authorName": "John"

}

]

}

authorAccountMapping = {

"authorUid": 23,

"accountId": 42

}

(7, 23)

(7, 25)

(7, "AC:42")

(7, "AU:25")

topCoauthorStats = {

"authorKey": "AC:42",

"topCoauthors": [

{

"coauthorKey": "AU:23",

"coauthorCount": 1

}

]

}](https://image.slidesharecdn.com/everyday-flink-151019140956-lva1-app6891/85/Michael-Hausler-Everyday-flink-22-320.jpg)

![Hive

package net.researchgate.authorstats.hive;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.apache.hadoop.hive.ql.exec.Description;

import org.apache.hadoop.hive.ql.exec.UDFArgumentTypeException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.parse.SemanticException;

import org.apache.hadoop.hive.ql.udf.generic.AbstractGenericUDAFResolver;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDAFEvaluator;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorUtils;

import org.apache.hadoop.hive.serde2.objectinspector.PrimitiveObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.StandardListObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.StandardStructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.WritableConstantIntObjectInspector;

import org.apache.hadoop.hive.serde2.typeinfo.PrimitiveTypeInfo;

import org.apache.hadoop.hive.serde2.typeinfo.TypeInfo;

import org.apache.hadoop.io.IntWritable;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Comparator;

import java.util.List;

import java.util.PriorityQueue;

/**

* Returns top n values sorted by keys.

* <p/>

* The output is an array of values

*/

@Description(name = "top_k",

value = "_FUNC_(value, key, n) - Returns the top n values with the maximum keys",

extended = "Example:n"

+ "> SELECT top_k(value, key, 3) FROM src;n"

+ "[3, 2, 1]n"

+ "The return value is an array of values which correspond to the maximum keys"

)

public class UDAFTopK extends AbstractGenericUDAFResolver {

// class static variables

static final Log LOG = LogFactory.getLog(UDAFTopK.class.getName());

private static void ensurePrimitive(int paramIndex, TypeInfo parameter) throws UDFArgumentTypeException {

if (parameter.getCategory() != ObjectInspector.Category.PRIMITIVE) {

throw new UDFArgumentTypeException(paramIndex, "Only primitive type arguments are accepted but "

+ parameter.getTypeName() + " was passed as parameter " + Integer.toString(paramIndex + 1) + ".");

}

}

private static void ensureInt(int paramIndex, TypeInfo parameter) throws UDFArgumentTypeException {

ensurePrimitive(paramIndex, parameter);

PrimitiveTypeInfo pti = (PrimitiveTypeInfo) parameter;

switch (pti.getPrimitiveCategory()) {

case INT:

return;

default:

throw new IllegalStateException("Unhandled primitive");

}

}

private static void ensureNumberOfArguments(int n, TypeInfo[] parameters) throws SemanticException {

if (parameters.length != n) {

throw new UDFArgumentTypeException(parameters.length - 1, "Please specify exactly " + Integer.toString(n) + " arguments.");

}

}

@Override

public GenericUDAFEvaluator getEvaluator(TypeInfo[] parameters) throws SemanticException {

ensureNumberOfArguments(3, parameters);

//argument 0 can be any

ensurePrimitive(1, parameters[1]);

ensureInt(2, parameters[2]);

return new TopKUDAFEvaluator();

}

public static class TopKUDAFEvaluator extends GenericUDAFEvaluator {

static final Log LOG = LogFactory.getLog(TopKUDAFEvaluator.class.getName());

public static class IntermObjectInspector {

public StandardStructObjectInspector topSoi;

public PrimitiveObjectInspector noi;

public StandardListObjectInspector loi;

public StandardStructObjectInspector soi;

public ObjectInspector oiValue;

public PrimitiveObjectInspector oiKey;

public IntermObjectInspector(StandardStructObjectInspector topSoi) throws HiveException {

this.topSoi = topSoi;

this.noi = (PrimitiveObjectInspector) topSoi.getStructFieldRef("n").getFieldObjectInspector();

this.loi = (StandardListObjectInspector) topSoi.getStructFieldRef("data").getFieldObjectInspector();

soi = (StandardStructObjectInspector) loi.getListElementObjectInspector();

oiValue = soi.getStructFieldRef("value").getFieldObjectInspector();

oiKey = (PrimitiveObjectInspector) soi.getStructFieldRef("key").getFieldObjectInspector();

}

}

private transient ObjectInspector oiValue;

private transient PrimitiveObjectInspector oiKey;

private transient IntermObjectInspector ioi;

private transient int topN;

/**

* PARTIAL1: from original data to partial aggregation data:

* iterate() and terminatePartial() will be called.

* <p/>

* <p/>

* PARTIAL2: from partial aggregation data to partial aggregation data:

* merge() and terminatePartial() will be called.

* <p/>

* FINAL: from partial aggregation to full aggregation:

* merge() and terminate() will be called.

* <p/>

* <p/>

* COMPLETE: from original data directly to full aggregation:

* iterate() and terminate() will be called.

*/

private static StandardStructObjectInspector getTerminatePartialOutputType(ObjectInspector oiValueMaybeLazy, PrimitiveObjectInspector oiKeyMaybeLazy) throws HiveException {

StandardListObjectInspector loi = ObjectInspectorFactory.getStandardListObjectInspector(getTerminatePartialOutputElementType(oiValueMaybeLazy, oiKeyMaybeLazy));

PrimitiveObjectInspector oiN = PrimitiveObjectInspectorFactory.writableIntObjectInspector;

ArrayList<ObjectInspector> foi = new ArrayList<ObjectInspector>();

foi.add(oiN);

foi.add(loi);

ArrayList<String> fnames = new ArrayList<String>();

fnames.add("n");

fnames.add("data");

return ObjectInspectorFactory.getStandardStructObjectInspector(fnames, foi);

}

private static StandardStructObjectInspector getTerminatePartialOutputElementType(ObjectInspector oiValueMaybeLazy, PrimitiveObjectInspector oiKeyMaybeLazy) throws HiveException {

ObjectInspector oiValue = TypeUtils.makeStrict(oiValueMaybeLazy);

PrimitiveObjectInspector oiKey = TypeUtils.primitiveMakeStrict(oiKeyMaybeLazy);

ArrayList<ObjectInspector> foi = new ArrayList<ObjectInspector>();

foi.add(oiValue);

foi.add(oiKey);

ArrayList<String> fnames = new ArrayList<String>();

fnames.add("value");

fnames.add("key");

return ObjectInspectorFactory.getStandardStructObjectInspector(fnames, foi);

}

private static StandardListObjectInspector getCompleteOutputType(IntermObjectInspector ioi) {

return ObjectInspectorFactory.getStandardListObjectInspector(ioi.oiValue);

}

private static int getTopNValue(PrimitiveObjectInspector parameter) throws HiveException {

if (parameter instanceof WritableConstantIntObjectInspector) {

WritableConstantIntObjectInspector nvOI = (WritableConstantIntObjectInspector) parameter;

int numTop = nvOI.getWritableConstantValue().get();

return numTop;

} else {

throw new HiveException("The third parameter: number of max values returned must be a constant int but the parameter was of type " + parameter.getClass().getName());

}

}

@Override

public ObjectInspector init(Mode m, ObjectInspector[] parameters) throws HiveException {

super.init(m, parameters);

if (m == Mode.PARTIAL1) {

//for iterate

assert (parameters.length == 3);

oiValue = parameters[0];

oiKey = (PrimitiveObjectInspector) parameters[1];

topN = getTopNValue((PrimitiveObjectInspector) parameters[2]);

//create type R = list(struct(keyType,valueType))

ioi = new IntermObjectInspector(getTerminatePartialOutputType(oiValue, oiKey));

//for terminate partial

return ioi.topSoi;//call this type R

} else if (m == Mode.PARTIAL2) {

ioi = new IntermObjectInspector((StandardStructObjectInspector) parameters[0]); //type R (see above)

//for merge and terminate partial

return ioi.topSoi;//type R

} else if (m == Mode.COMPLETE) {

assert (parameters.length == 3);

//for iterate

oiValue = parameters[0];

oiKey = (PrimitiveObjectInspector) parameters[1];

topN = getTopNValue((PrimitiveObjectInspector) parameters[2]);

ioi = new IntermObjectInspector(getTerminatePartialOutputType(oiValue, oiKey));//type R (see above)

//for terminate

return getCompleteOutputType(ioi);

} else if (m == Mode.FINAL) {

//for merge

ioi = new IntermObjectInspector((StandardStructObjectInspector) parameters[0]); //type R (see above)

//for terminate

//type O = list(valueType)

return getCompleteOutputType(ioi);

}

throw new IllegalStateException("Unknown mode");

}

@Override

public Object terminatePartial(AggregationBuffer agg) throws HiveException {

StdAgg stdAgg = (StdAgg) agg;

return stdAgg.serialize(ioi);

}

@Override

public Object terminate(AggregationBuffer agg) throws HiveException {

StdAgg stdAgg = (StdAgg) agg;

if (stdAgg == null) {

return null;

}

return stdAgg.terminate(ioi.oiKey);

}

@Override

public void merge(AggregationBuffer agg, Object partial) throws HiveException {

if (partial == null) {

return;

}

StdAgg stdAgg = (StdAgg) agg;

stdAgg.merge(ioi, partial);

}

@Override

public void iterate(AggregationBuffer agg, Object[] parameters) throws HiveException {

assert (parameters.length == 3);

if (parameters[0] == null || parameters[1] == null || parameters[2] == null) {

return;

}

StdAgg stdAgg = (StdAgg) agg;

stdAgg.setTopN(topN);

stdAgg.add(parameters, oiValue, oiKey, ioi);

}

// Aggregation buffer definition and manipulation methods

@AggregationType(estimable = false)

static class StdAgg extends AbstractAggregationBuffer {

public static class KeyValuePair {

public Object key;

public Object value;

public KeyValuePair(Object key, Object value) {

this.key = key;

this.value = value;

}

}

public static class KeyValueComparator implements Comparator<KeyValuePair> {

public PrimitiveObjectInspector getKeyObjectInspector() {

return keyObjectInspector;

}

public void setKeyObjectInspector(PrimitiveObjectInspector keyObjectInspector) {

this.keyObjectInspector = keyObjectInspector;

}

PrimitiveObjectInspector keyObjectInspector;

@Override

public int compare(KeyValuePair o1, KeyValuePair o2) {

if (keyObjectInspector == null) {

throw new IllegalStateException("Key object inspector has to be initialized.");

}

//the heap will store the min element on top

return ObjectInspectorUtils.compare(o1.key, keyObjectInspector, o2.key, keyObjectInspector);

}

}

public PriorityQueue<KeyValuePair> queue;

int topN;

public void setTopN(int topN) {

this.topN = topN;

}

public int getTopN() {

return topN;

}

public void reset() {

queue = new PriorityQueue<KeyValuePair>(10, new KeyValueComparator());

}

public void add(Object[] parameters, ObjectInspector oiValue, PrimitiveObjectInspector oiKey, IntermObjectInspector ioi) {

assert (parameters.length == 3);

Object paramValue = parameters[0];

Object paramKey = parameters[1];

if (paramValue == null || paramKey == null) {

return;

}

Object stdValue = ObjectInspectorUtils.copyToStandardObject(paramValue, oiValue, ObjectInspectorUtils.ObjectInspectorCopyOption.WRITABLE);

Object stdKey = ObjectInspectorUtils.copyToStandardObject(paramKey, oiKey, ObjectInspectorUtils.ObjectInspectorCopyOption.WRITABLE);

addToQueue(stdKey, stdValue, ioi.oiKey);

}

public void addToQueue(Object key, Object value, PrimitiveObjectInspector oiKey) {

final PrimitiveObjectInspector keyObjectInspector = oiKey;

KeyValueComparator comparator = ((KeyValueComparator) queue.comparator());

comparator.setKeyObjectInspector(keyObjectInspector);

queue.add(new KeyValuePair(key, value));

if (queue.size() > topN) {

queue.remove();

}

comparator.setKeyObjectInspector(null);

}

private KeyValuePair[] copyQueueToArray() {

int n = queue.size();

KeyValuePair[] buffer = new KeyValuePair[n];

int i = 0;

for (KeyValuePair pair : queue) {

buffer[i] = pair;

i++;

}

return buffer;

}

public List<Object> terminate(final PrimitiveObjectInspector keyObjectInspector) {

KeyValuePair[] buffer = copyQueueToArray();

Arrays.sort(buffer, new Comparator<KeyValuePair>() {

public int compare(KeyValuePair o1, KeyValuePair o2) {

return ObjectInspectorUtils.compare(o2.key, keyObjectInspector, o1.key, keyObjectInspector);

}

});

//copy the values to ArrayList

ArrayList<Object> result = new ArrayList<Object>();

for (int j = 0; j < buffer.length; j++) {

result.add(buffer[j].value);

}

return result;

}

public Object serialize(IntermObjectInspector ioi) {

StandardStructObjectInspector topLevelSoi = ioi.topSoi;

Object topLevelObj = topLevelSoi.create();

StandardListObjectInspector loi = ioi.loi;

StandardStructObjectInspector soi = ioi.soi;

int n = queue.size();

Object loiObj = loi.create(n);

int i = 0;

for (KeyValuePair pair : queue) {

Object soiObj = soi.create();

soi.setStructFieldData(soiObj, soi.getStructFieldRef("value"), pair.value);

soi.setStructFieldData(soiObj, soi.getStructFieldRef("key"), pair.key);

loi.set(loiObj, i, soiObj);

i += 1;

}

topLevelSoi.setStructFieldData(topLevelObj, topLevelSoi.getStructFieldRef("n"), new IntWritable(topN));

topLevelSoi.setStructFieldData(topLevelObj, topLevelSoi.getStructFieldRef("data"), loiObj);

return topLevelObj;

}

public void merge(IntermObjectInspector ioi, Object partial) {

List<Object> nestedValues = ioi.topSoi.getStructFieldsDataAsList(partial);

topN = (Integer) (ioi.noi.getPrimitiveJavaObject(nestedValues.get(0)));

StandardListObjectInspector loi = ioi.loi;

StandardStructObjectInspector soi = ioi.soi;

PrimitiveObjectInspector oiKey = ioi.oiKey;

Object data = nestedValues.get(1);

int n = loi.getListLength(data);

int i = 0;

while (i < n) {

Object sValue = loi.getListElement(data, i);

List<Object> innerValues = soi.getStructFieldsDataAsList(sValue);

Object primValue = innerValues.get(0);

Object primKey = innerValues.get(1);

addToQueue(primKey, primValue, oiKey);

i += 1;

}

}

}

;

@Override

public AggregationBuffer getNewAggregationBuffer() throws HiveException {

StdAgg result = new StdAgg();

reset(result);

return result;

}

@Override

public void reset(AggregationBuffer agg) throws HiveException {

StdAgg stdAgg = (StdAgg) agg;

stdAgg.reset();

}

}

}

@Override

public ObjectInspector init(Mode m, ObjectInspector[] parameters) throws HiveException {

super.init(m, parameters);

if (m == Mode.PARTIAL1) {

//for iterate

assert (parameters.length == 3);

oiValue = parameters[0];

oiKey = (PrimitiveObjectInspector) parameters[1];

topN = getTopNValue((PrimitiveObjectInspector) parameters[2]);

//create type R = list(struct(keyType,valueType))

ioi = new IntermObjectInspector(getTerminatePartialOutputType(oiValue, oiKey));

//for terminate partial

return ioi.topSoi;//call this type R

} else if (m == Mode.PARTIAL2) {

ioi = new IntermObjectInspector((StandardStructObjectInspector) parameters[0]);

//type R (see above)

//for merge and terminate partial

return ioi.topSoi;//type R

} else if (m == Mode.COMPLETE) {

assert (parameters.length == 3);

//for iterate

oiValue = parameters[0];

oiKey = (PrimitiveObjectInspector) parameters[1];

topN = getTopNValue((PrimitiveObjectInspector) parameters[2]);

ioi = new IntermObjectInspector(getTerminatePartialOutputType(oiValue,

oiKey));//type R (see above)

//for terminate

return getCompleteOutputType(ioi);

} else if (m == Mode.FINAL) {

//for merge

ioi = new IntermObjectInspector((StandardStructObjectInspector) parameters[0]);

//type R (see above)

//for terminate

//type O = list(valueType)

return getCompleteOutputType(ioi);

}

throw new IllegalStateException("Unknown mode");

}](https://image.slidesharecdn.com/everyday-flink-151019140956-lva1-app6891/85/Michael-Hausler-Everyday-flink-24-320.jpg)

![MapReduce

@Override

public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2) {

return BinaryData.compare(b1, s1, l1, b2, s2, l2, pair);

}

public int compare(AvroKey<Pair<Long,Long>> x, AvroKey<Pair<Long,Long>> y) {

return ReflectData.get().compare(x.datum(), y.datum(), pair);

}](https://image.slidesharecdn.com/everyday-flink-151019140956-lva1-app6891/85/Michael-Hausler-Everyday-flink-26-320.jpg)