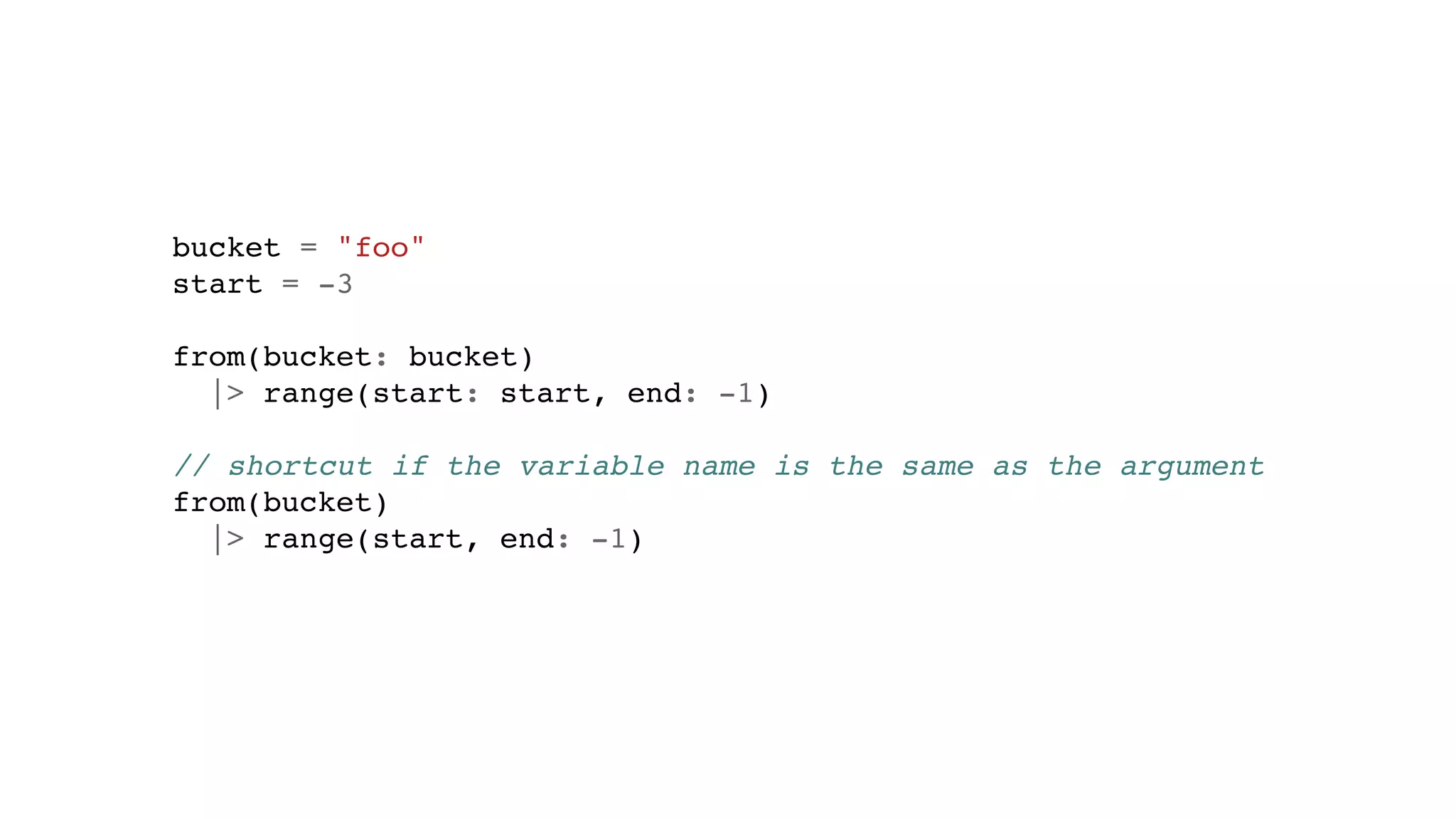

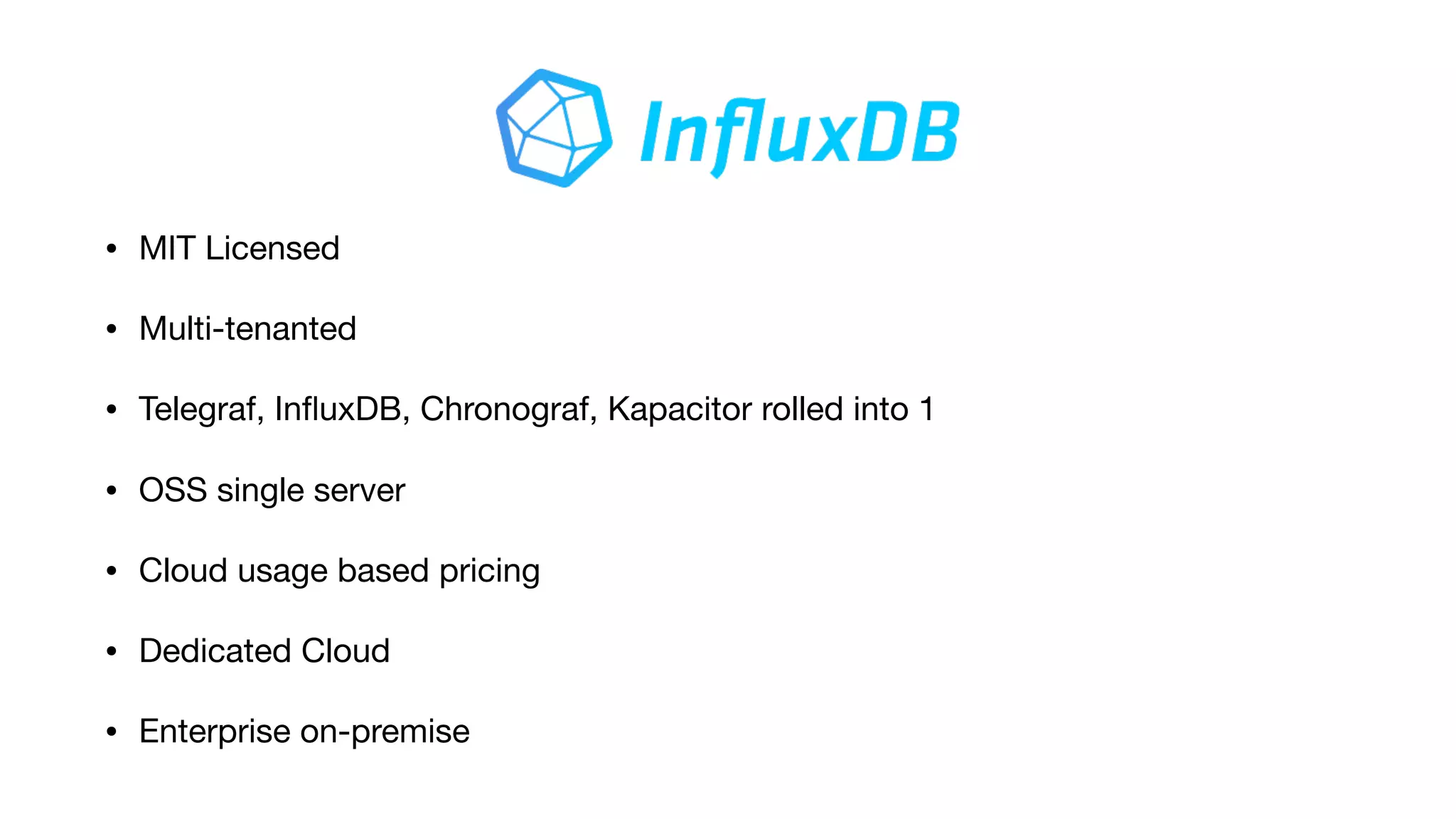

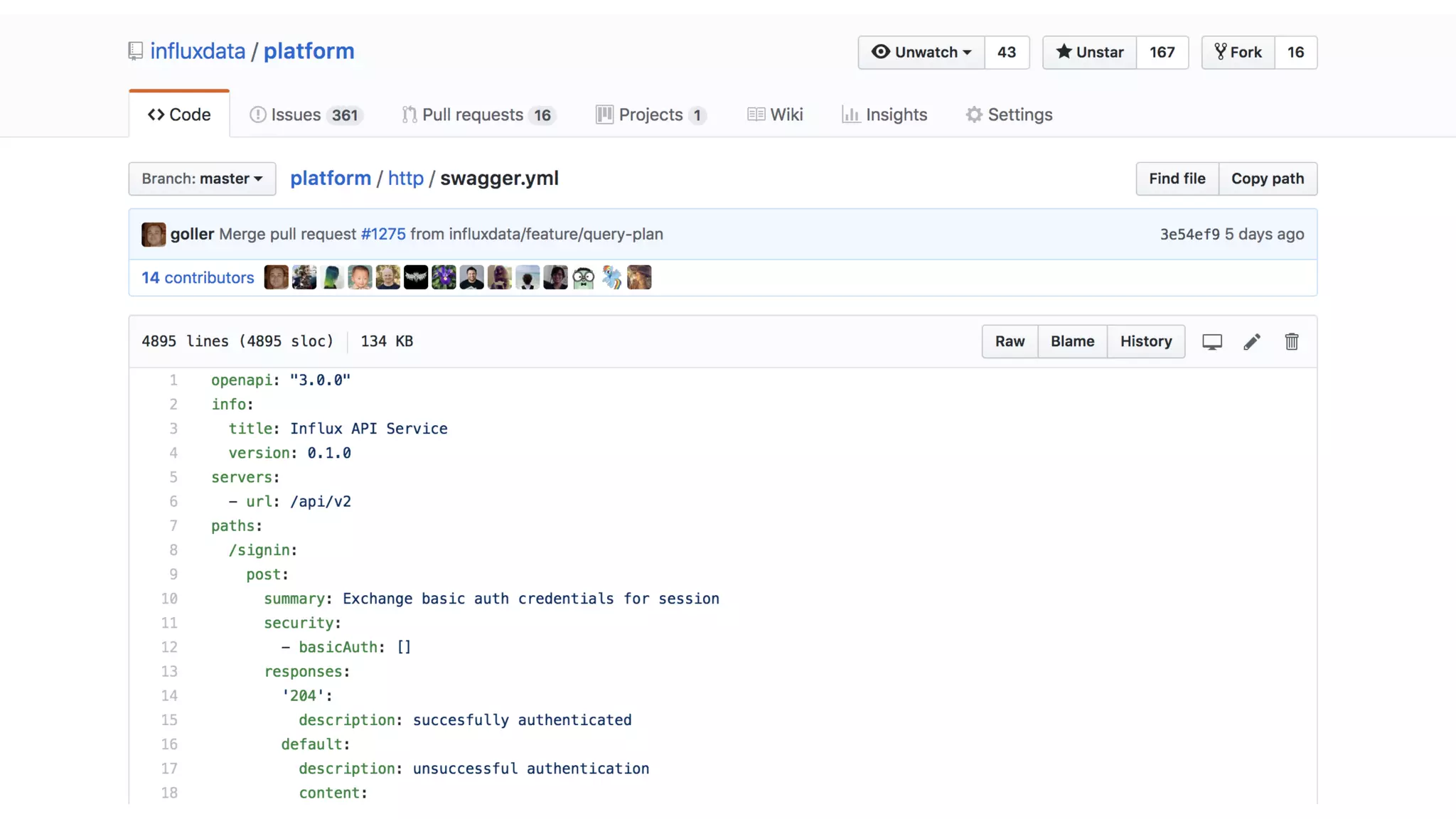

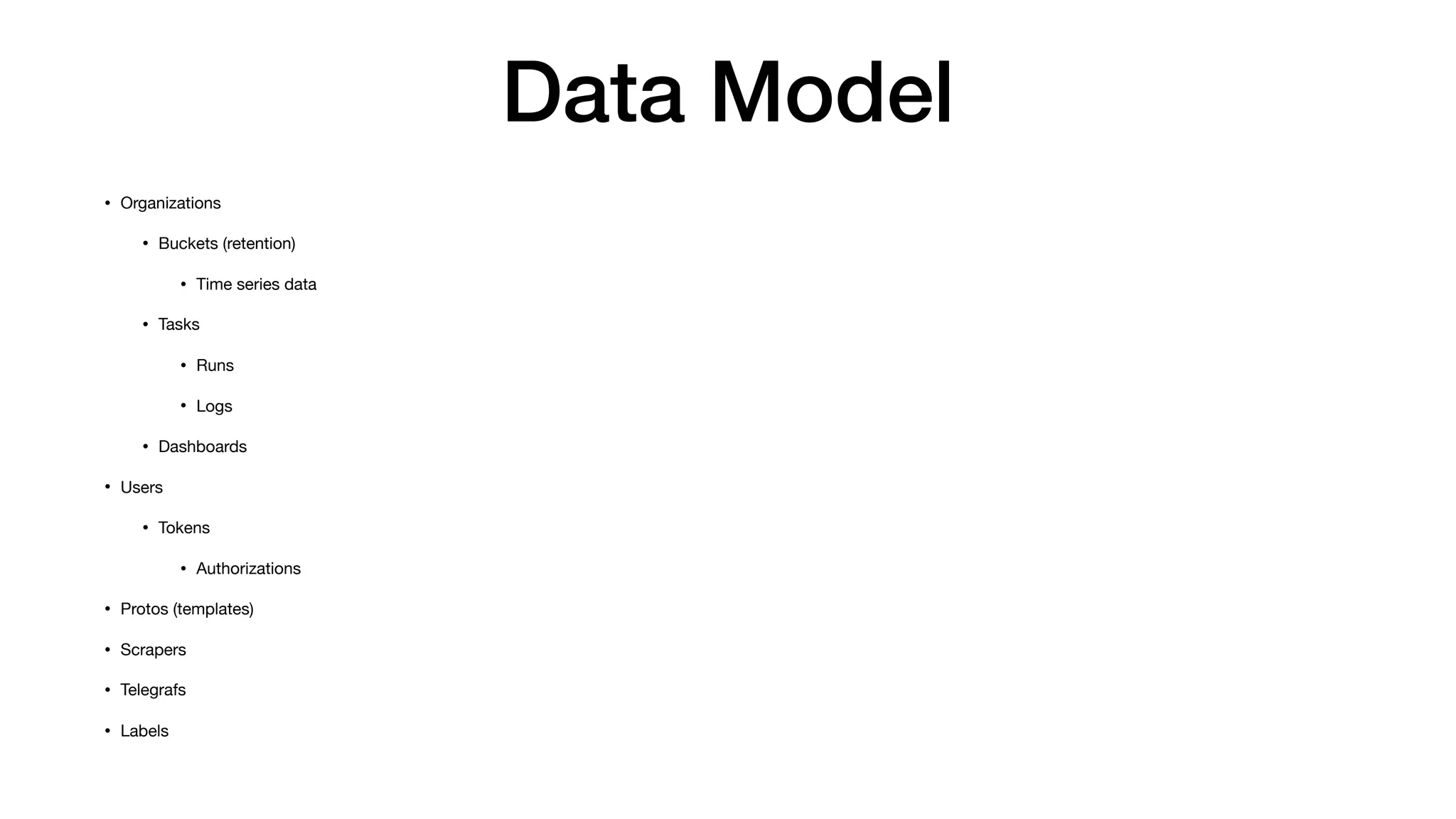

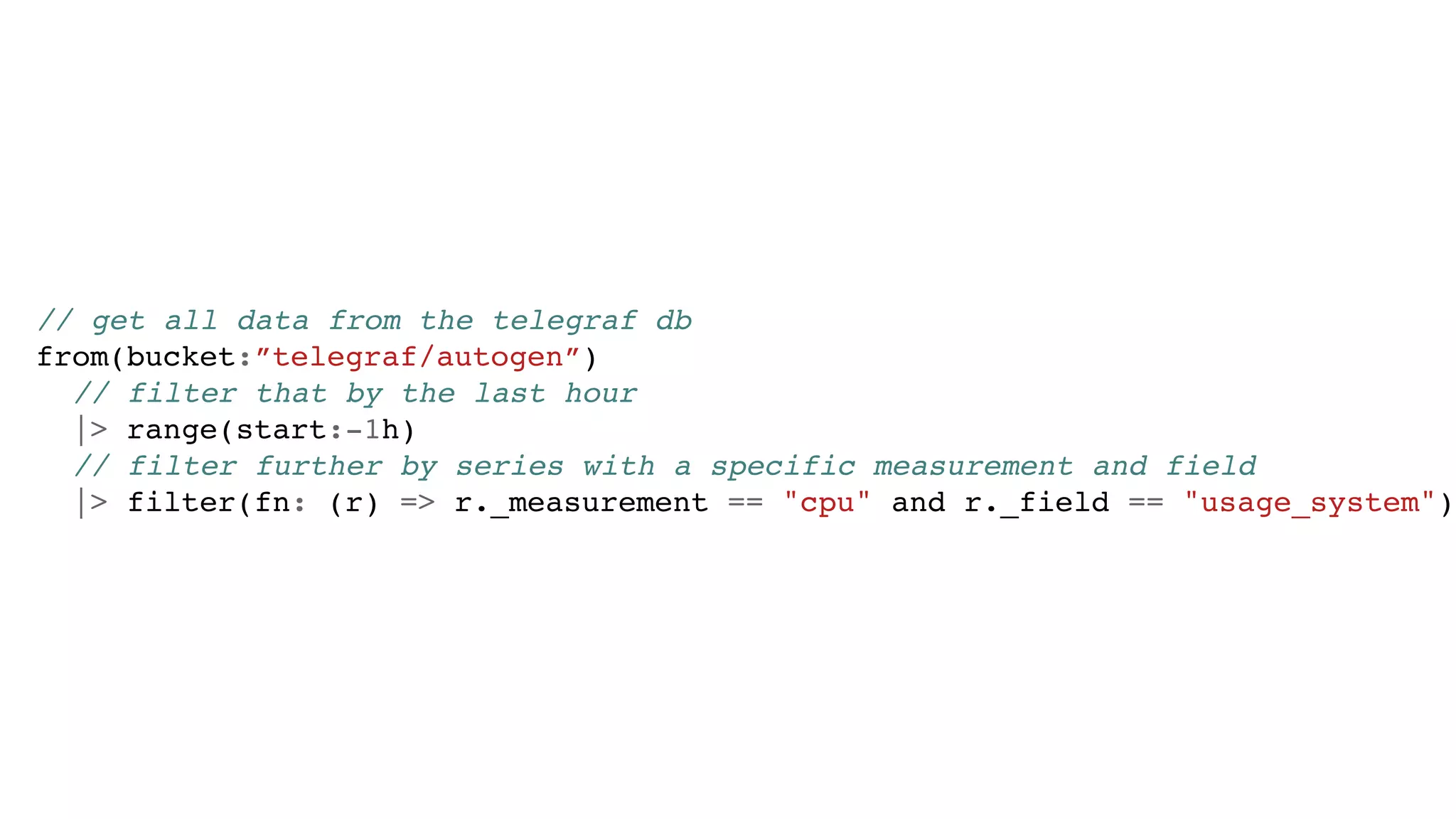

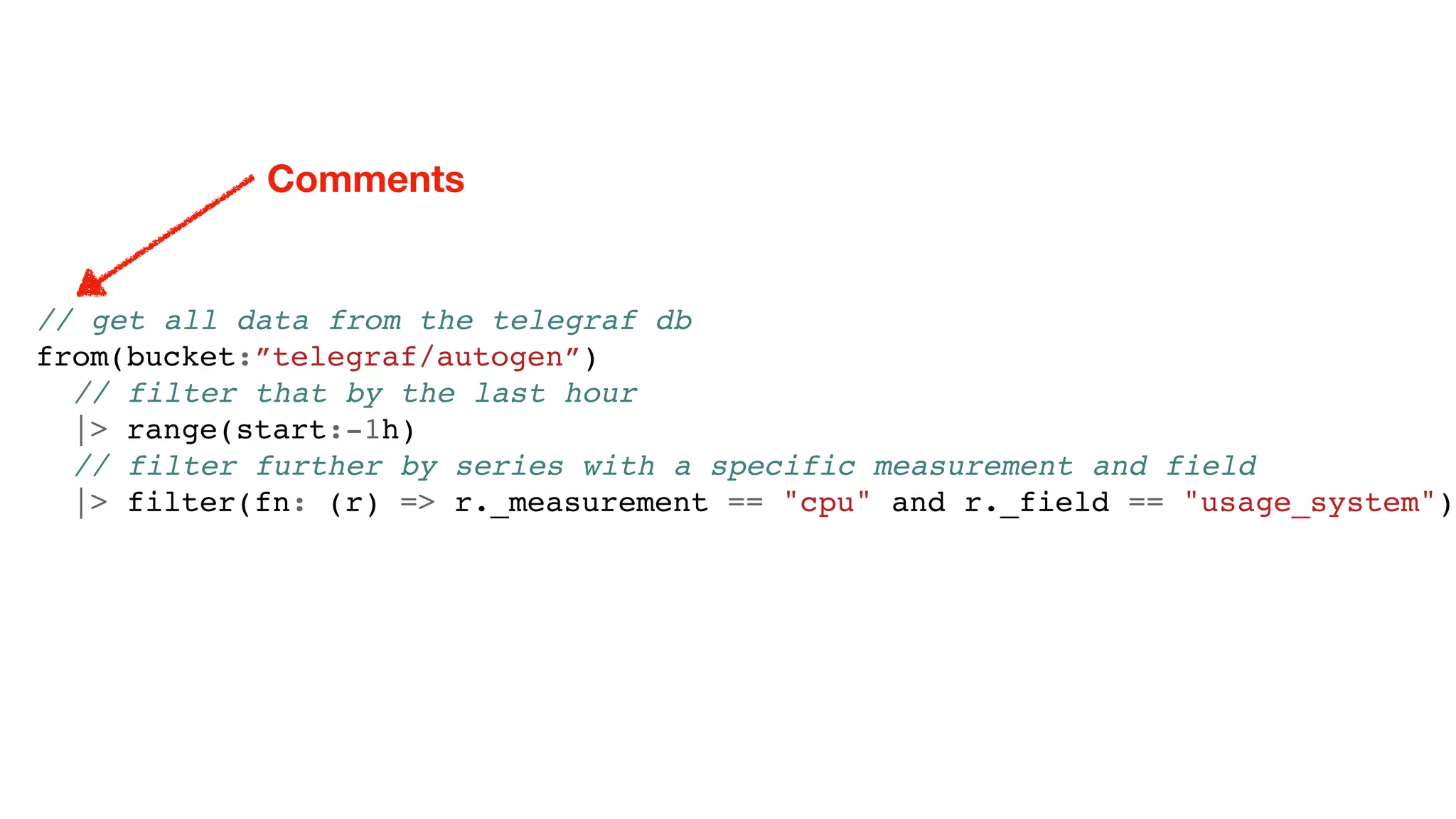

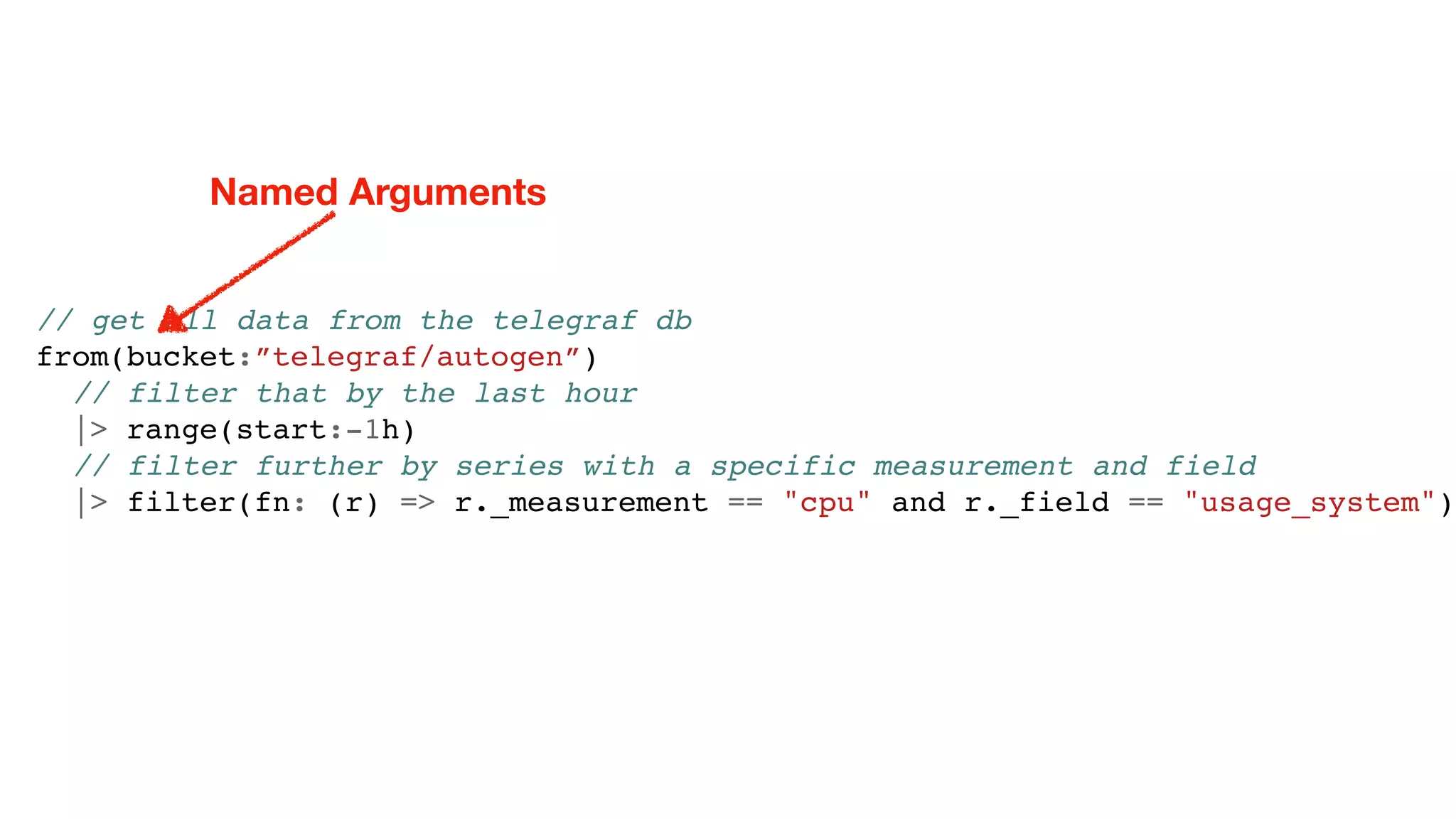

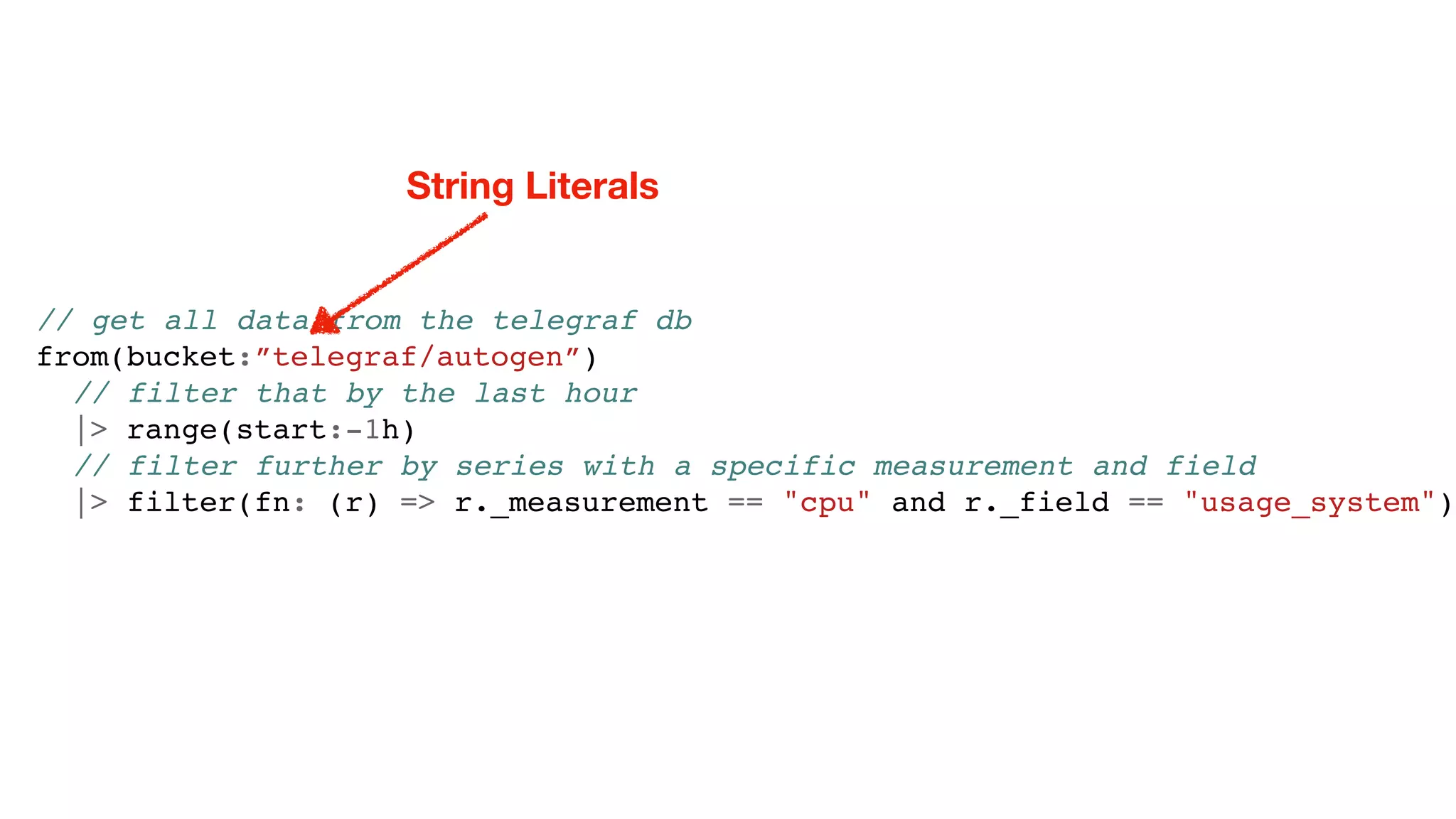

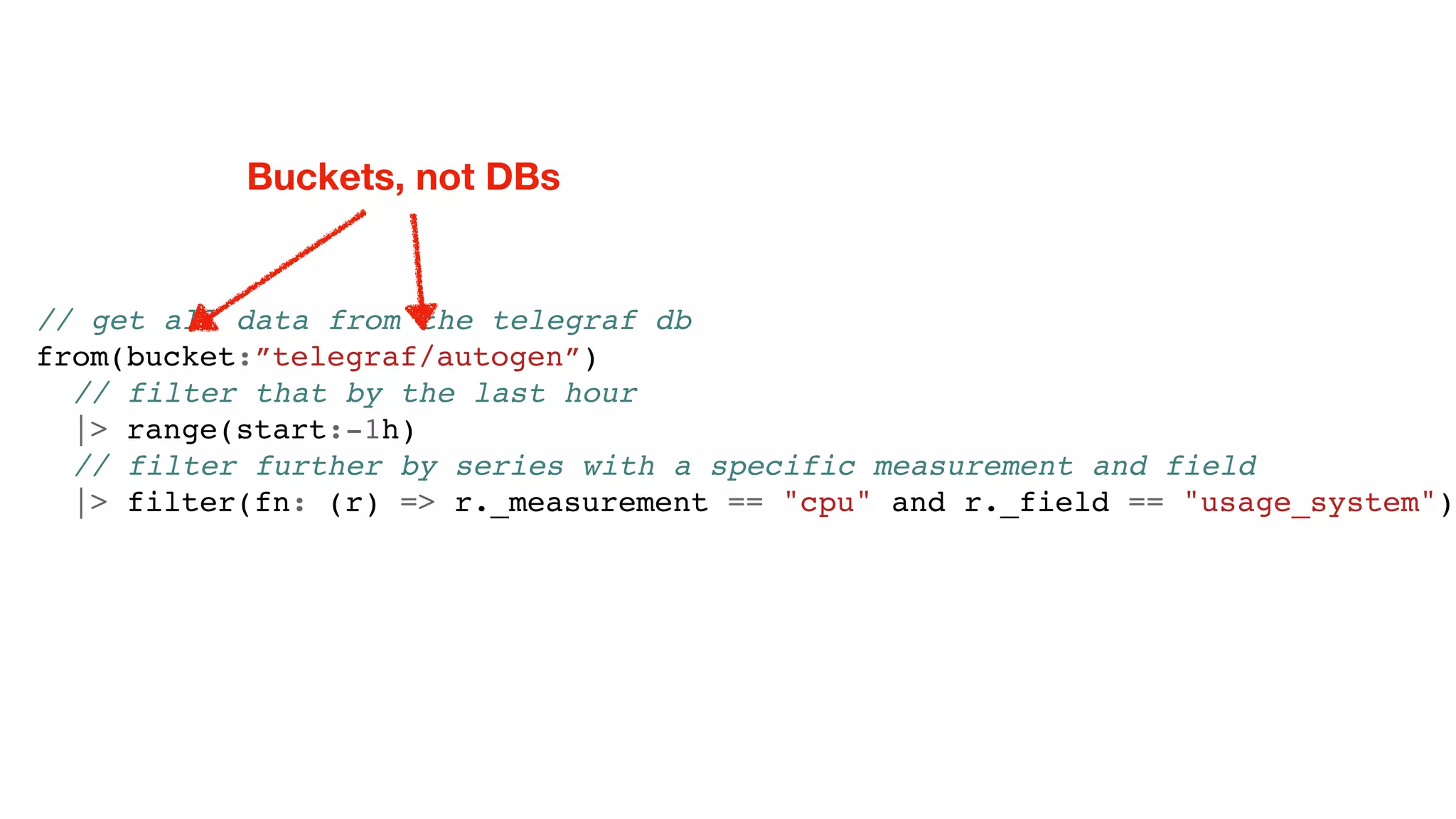

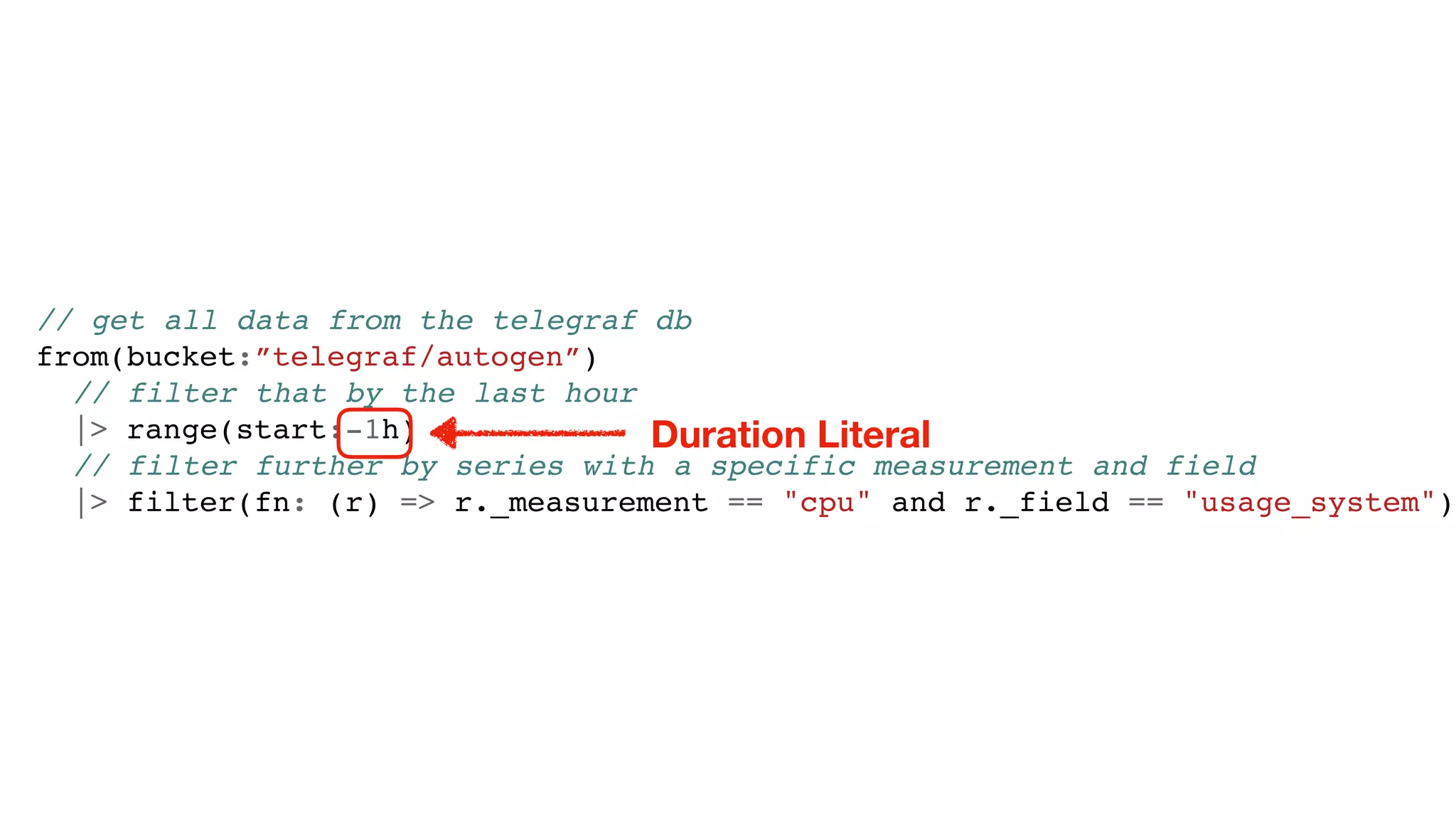

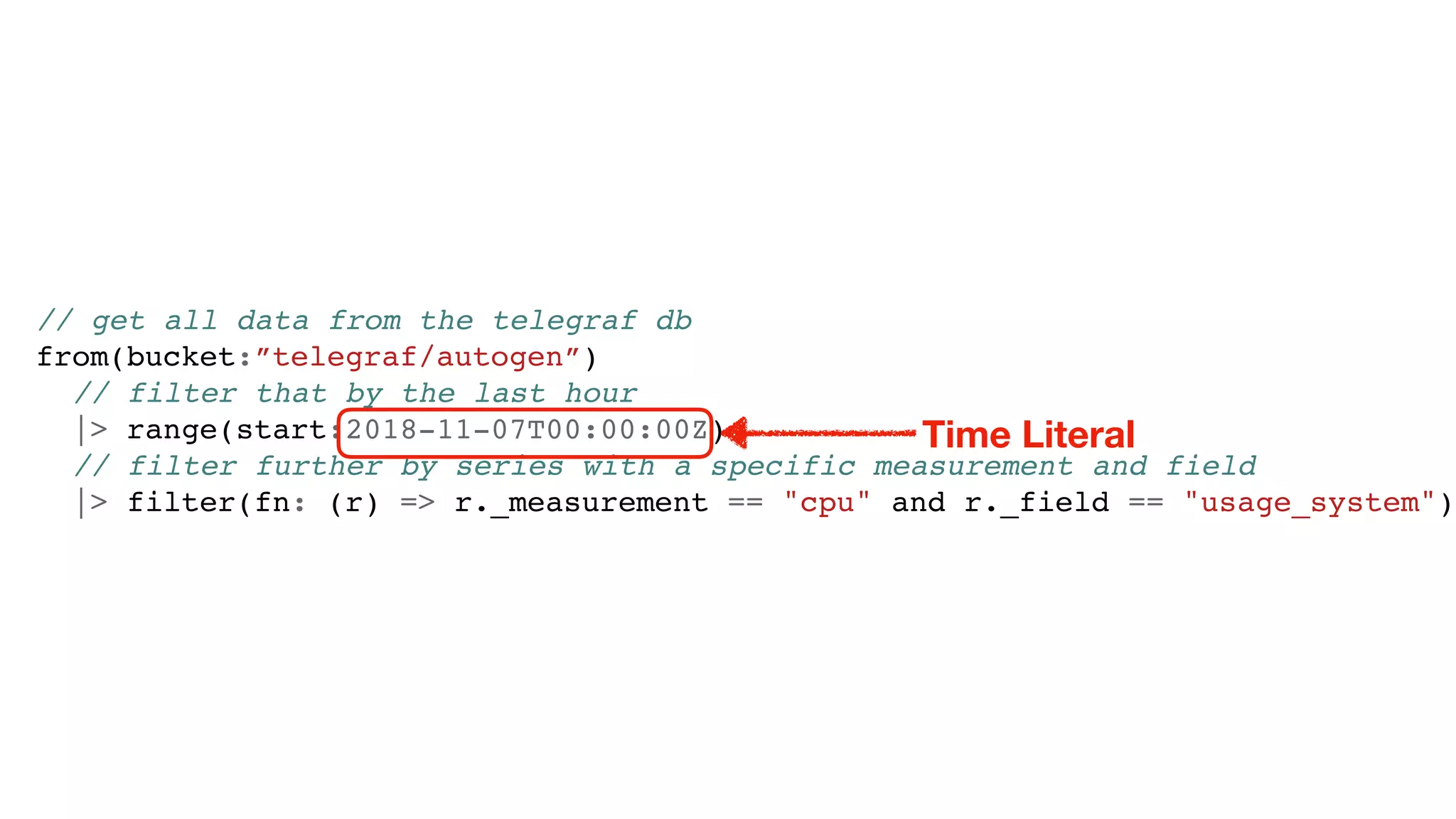

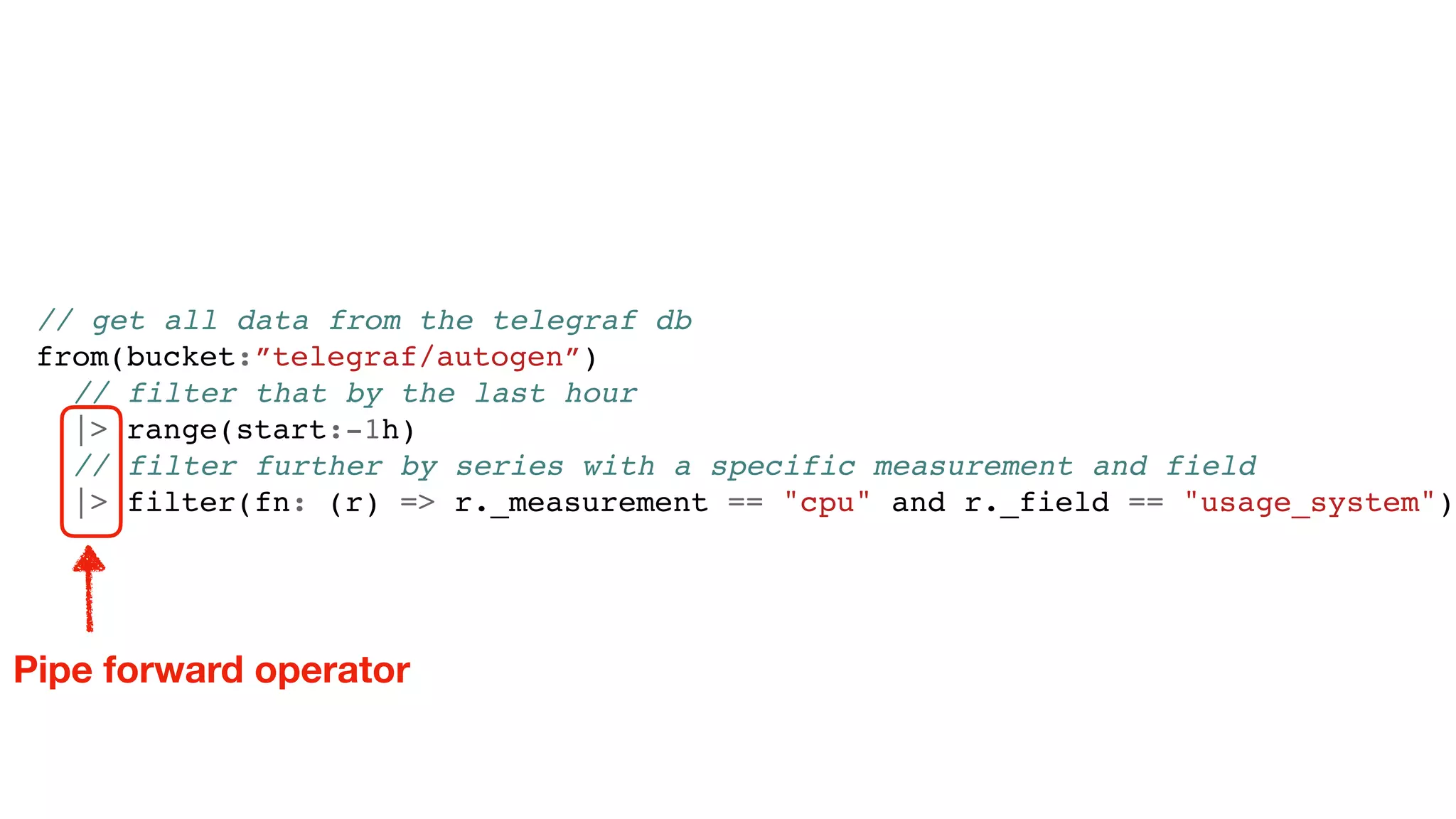

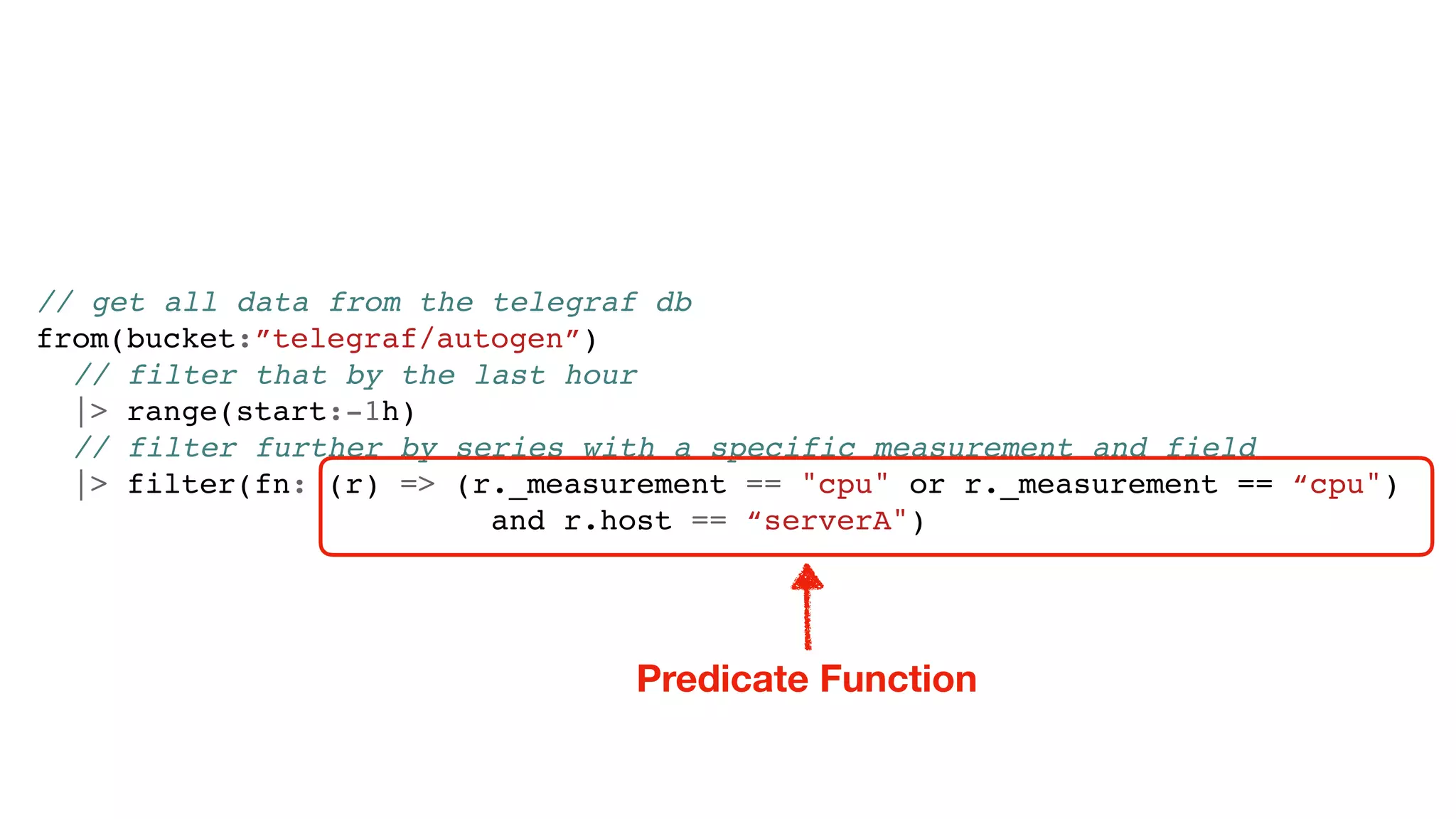

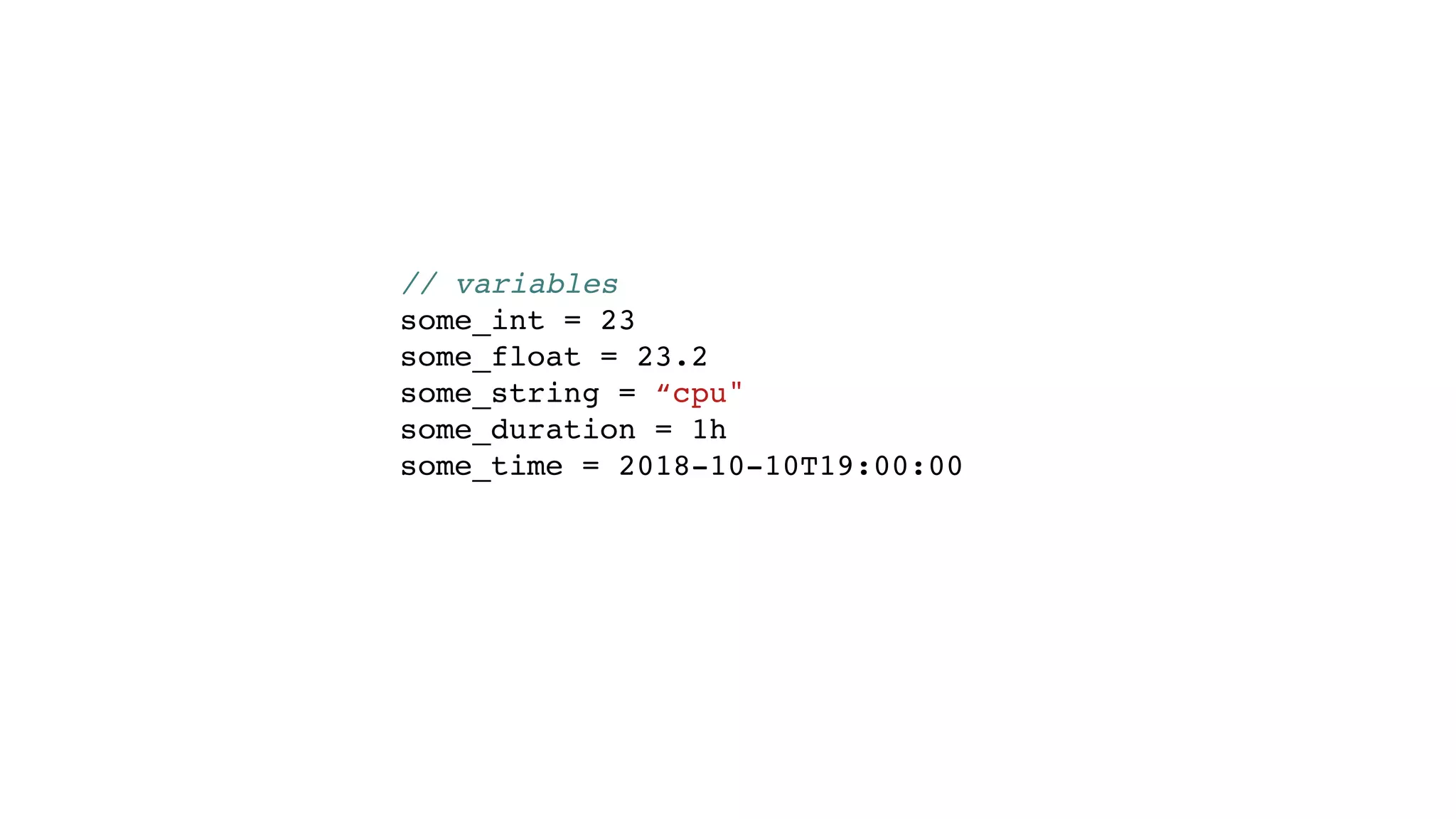

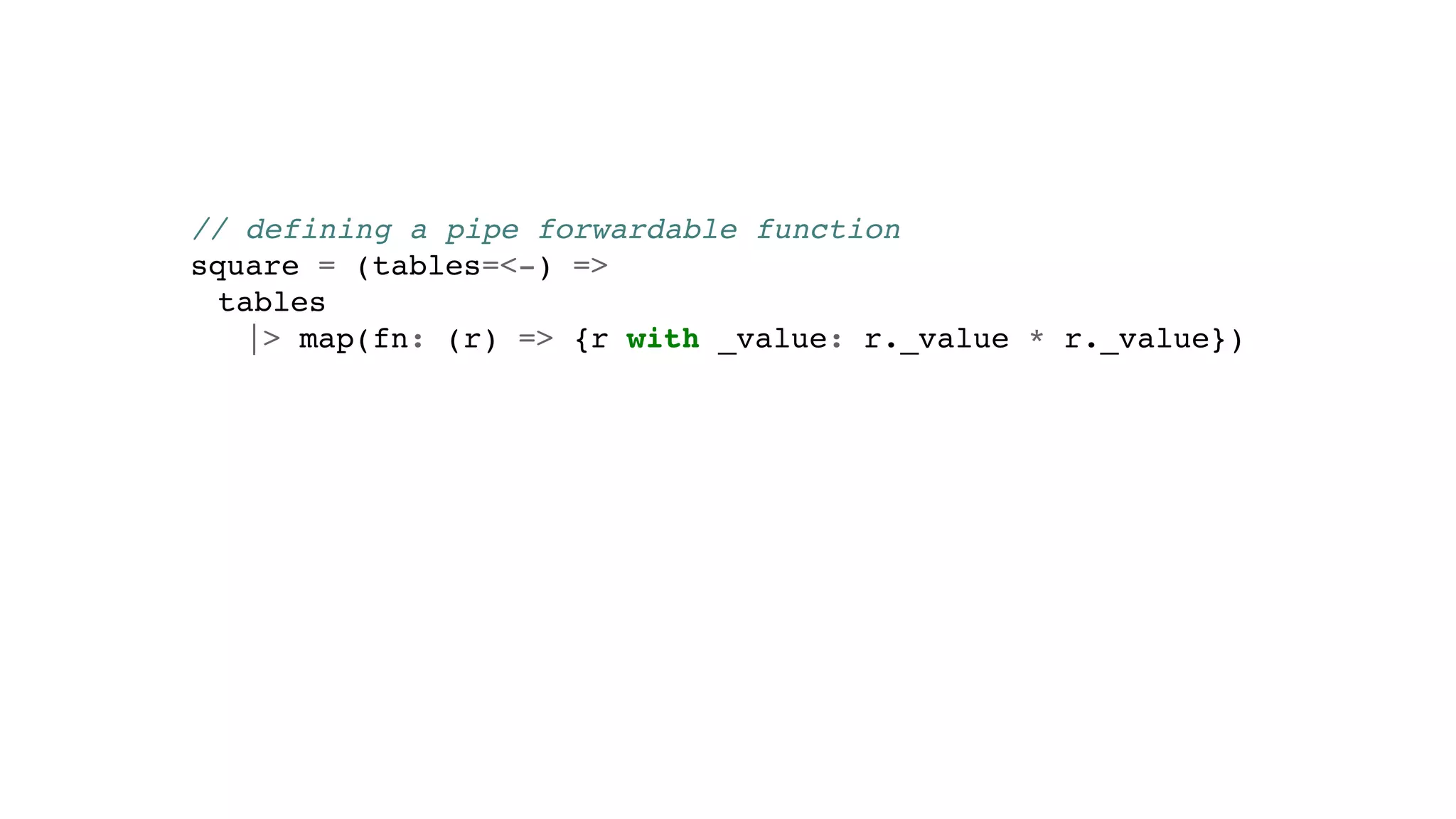

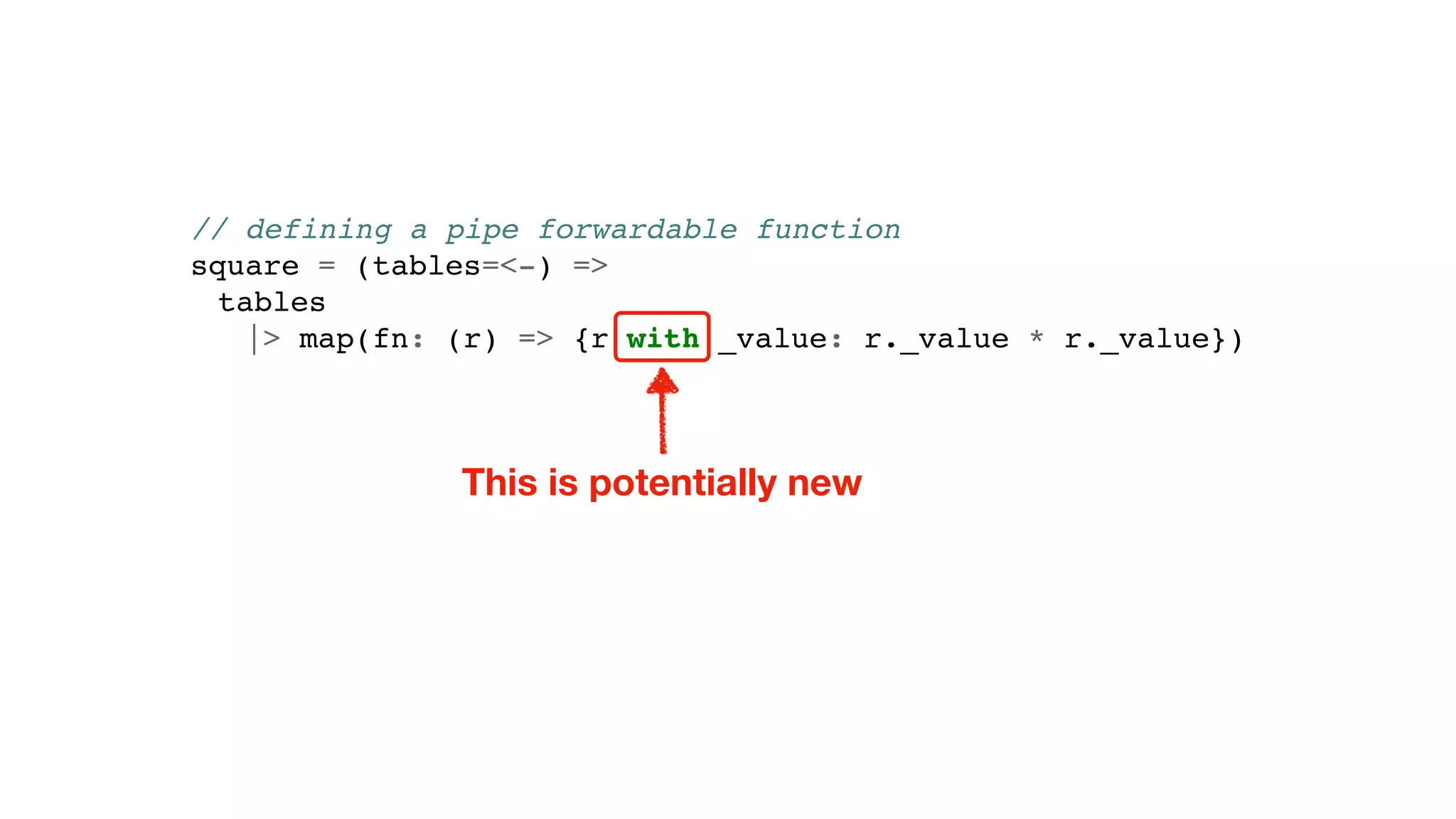

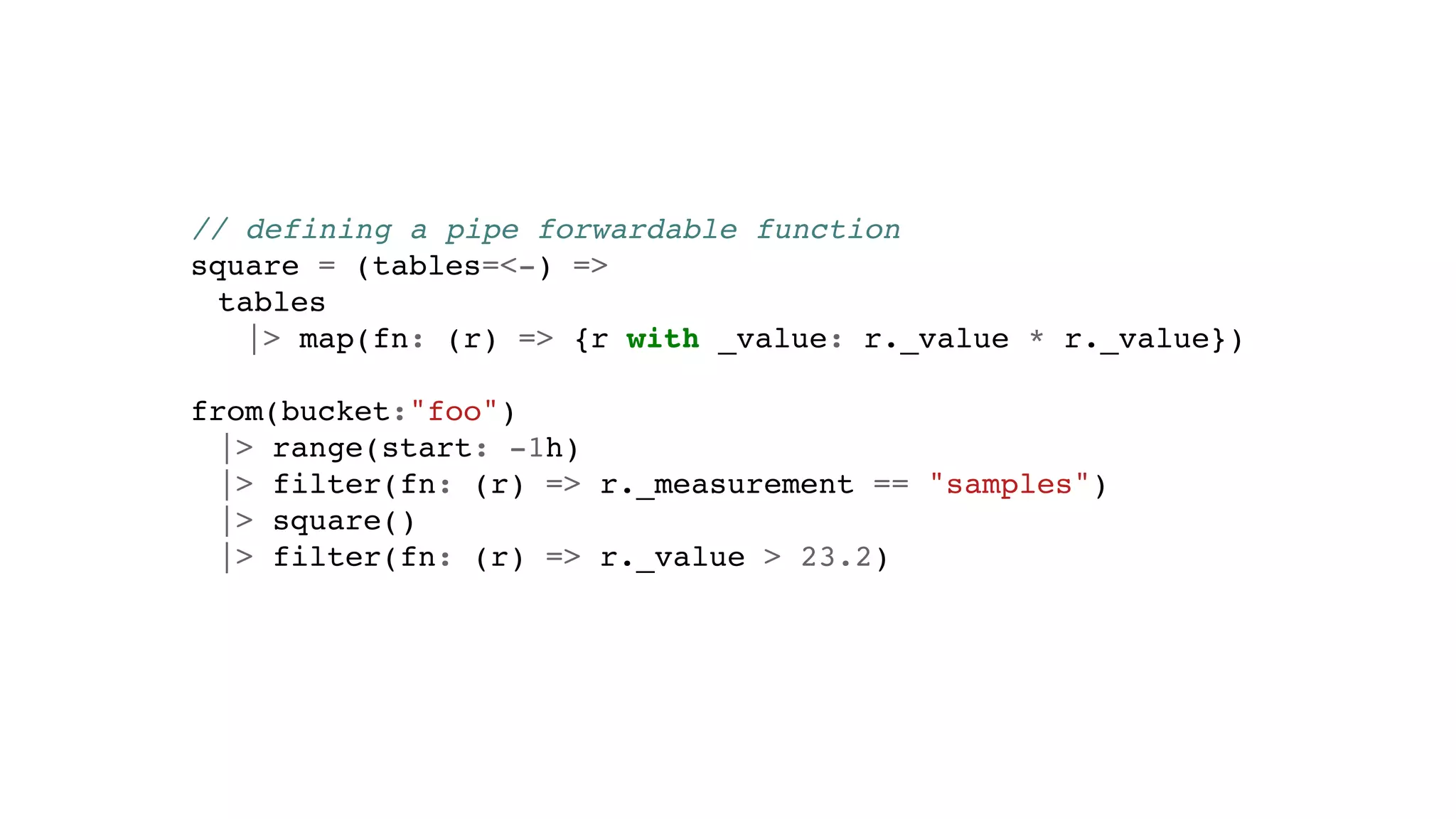

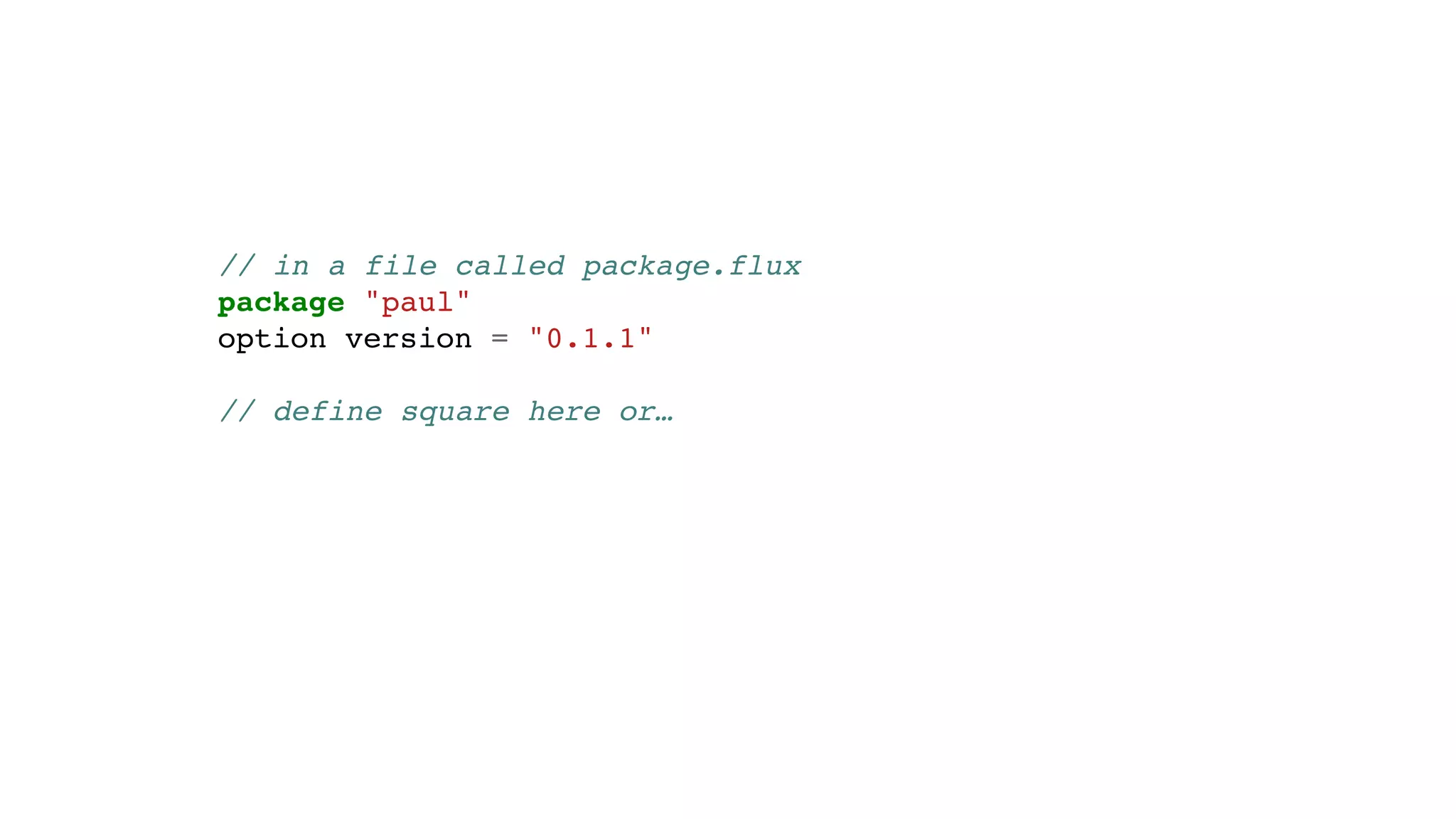

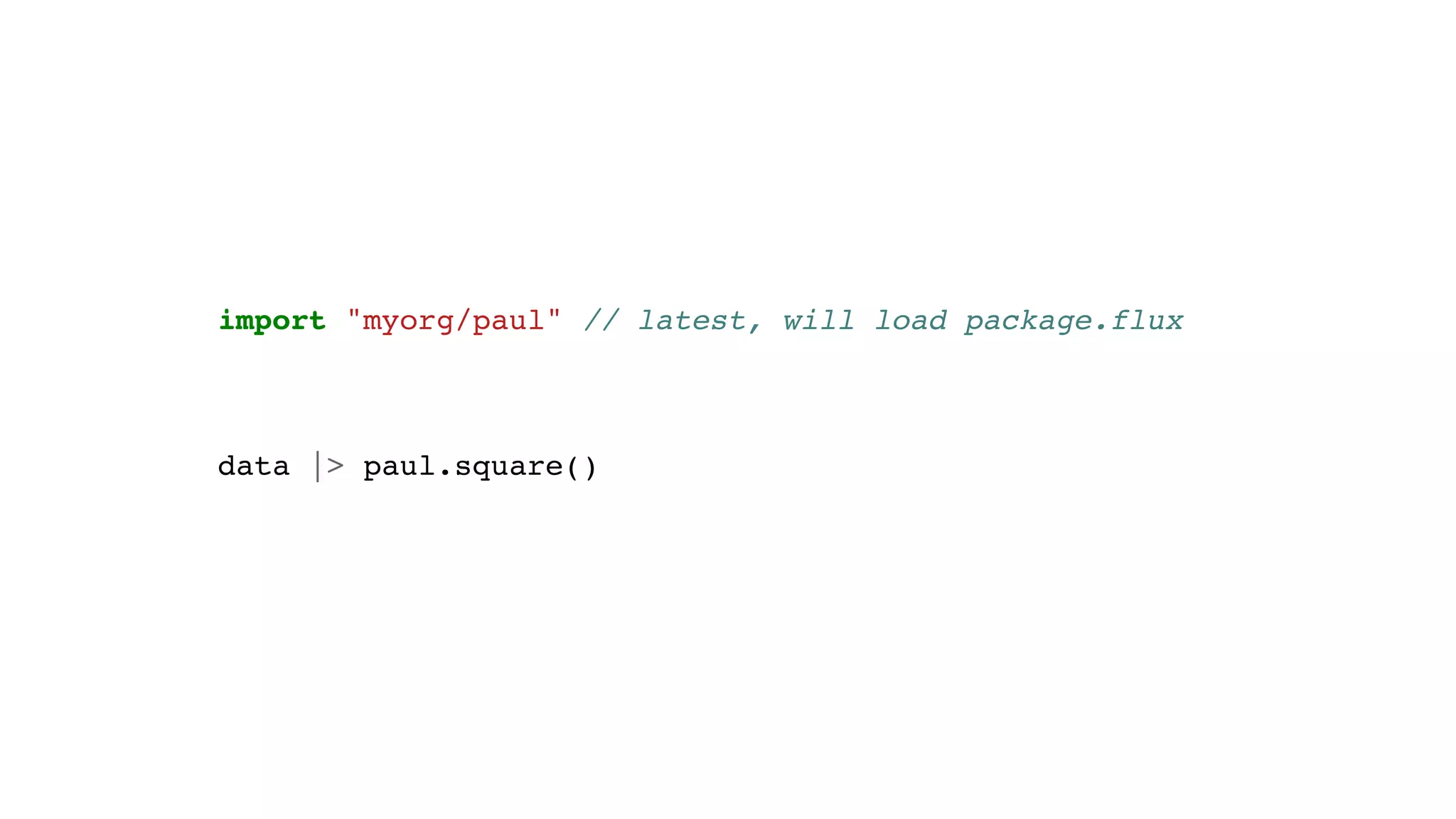

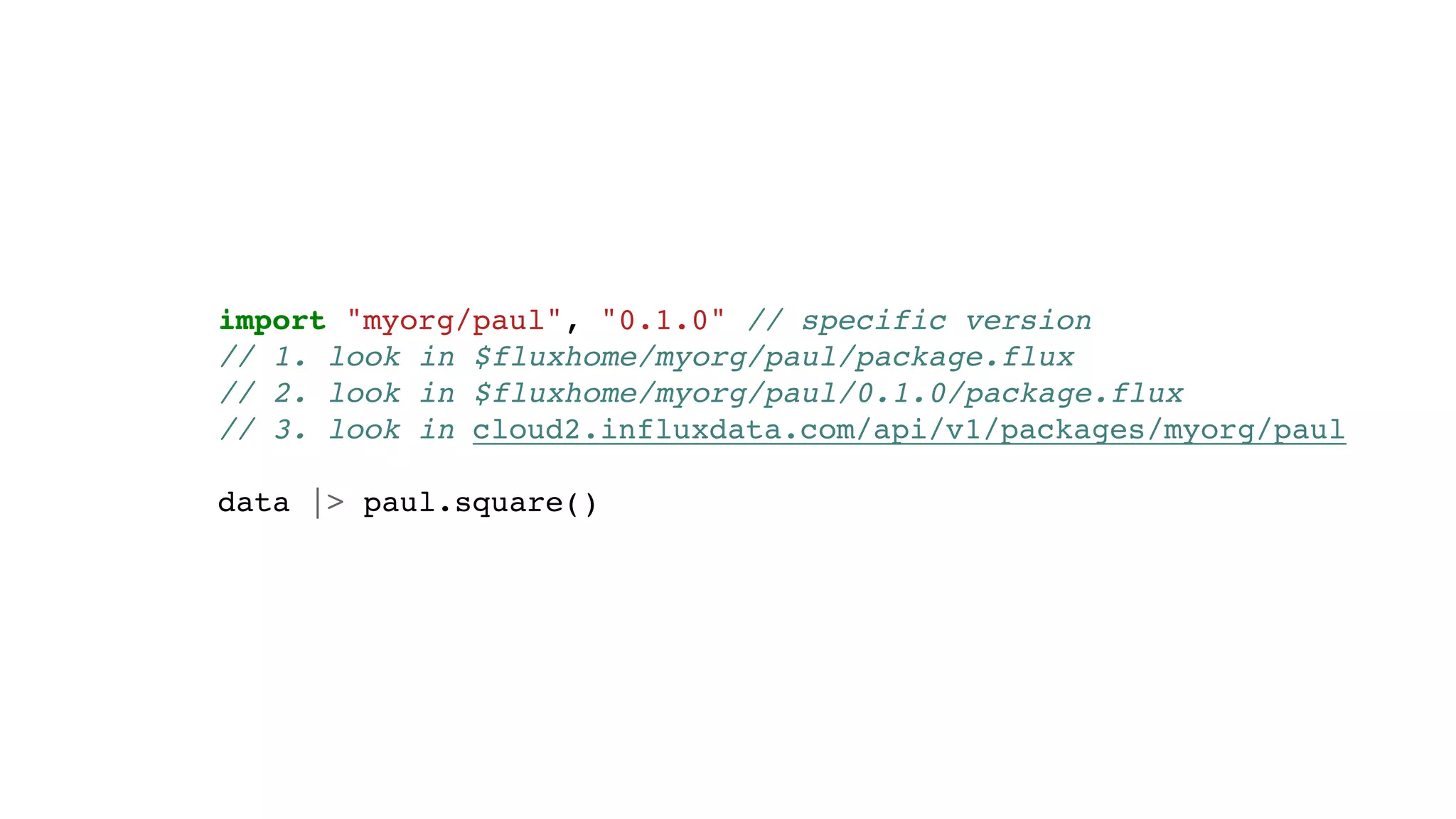

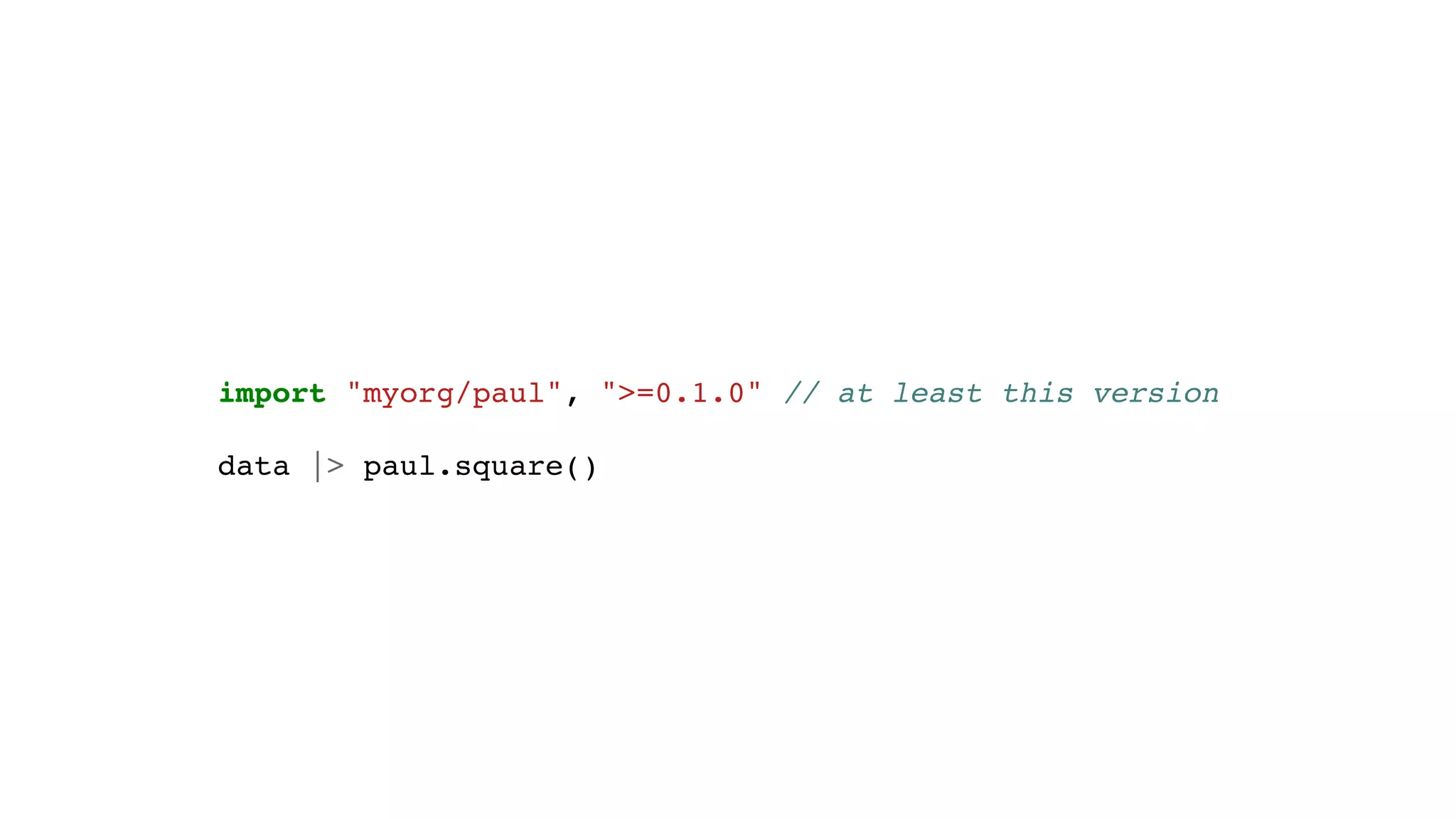

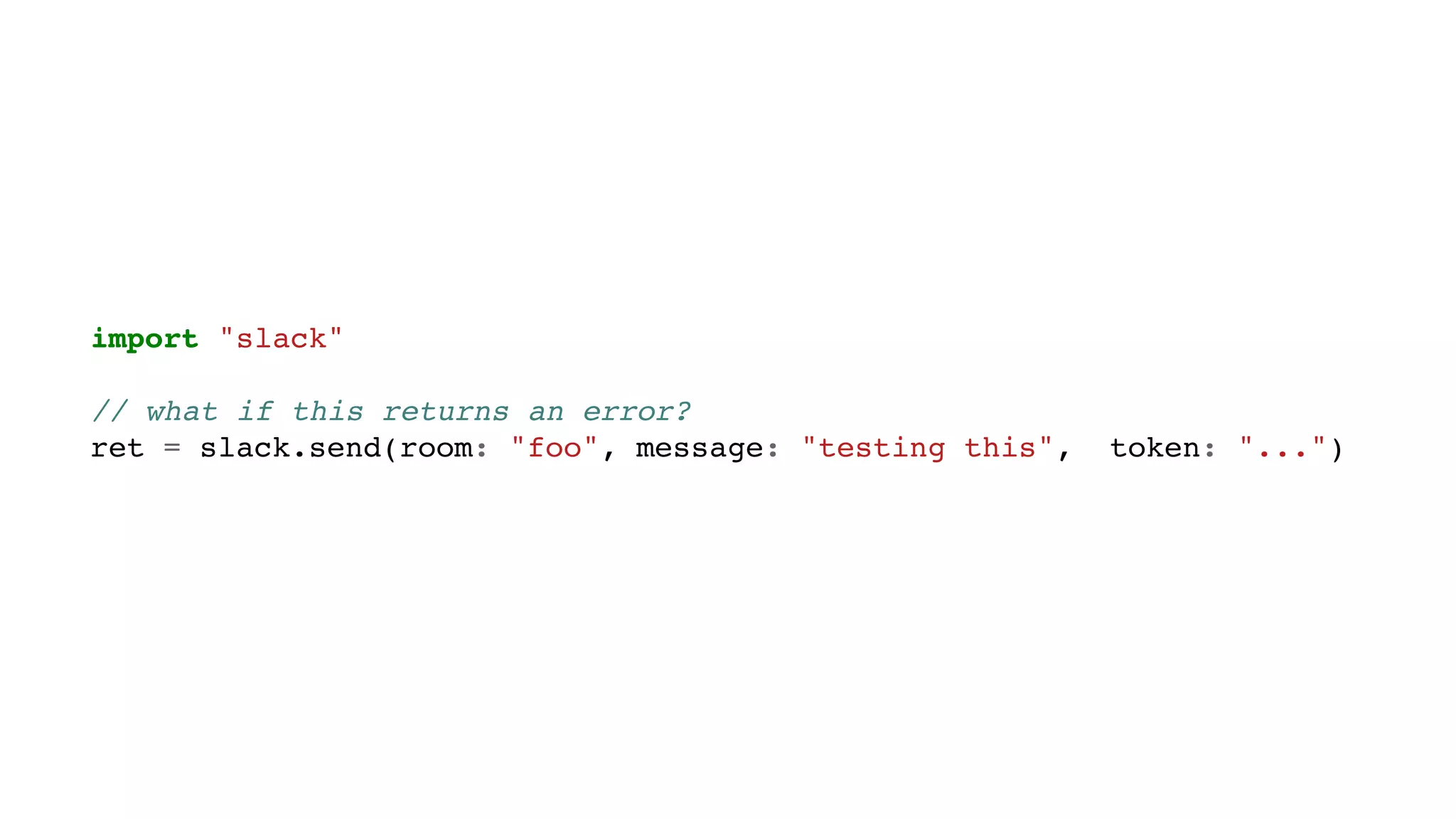

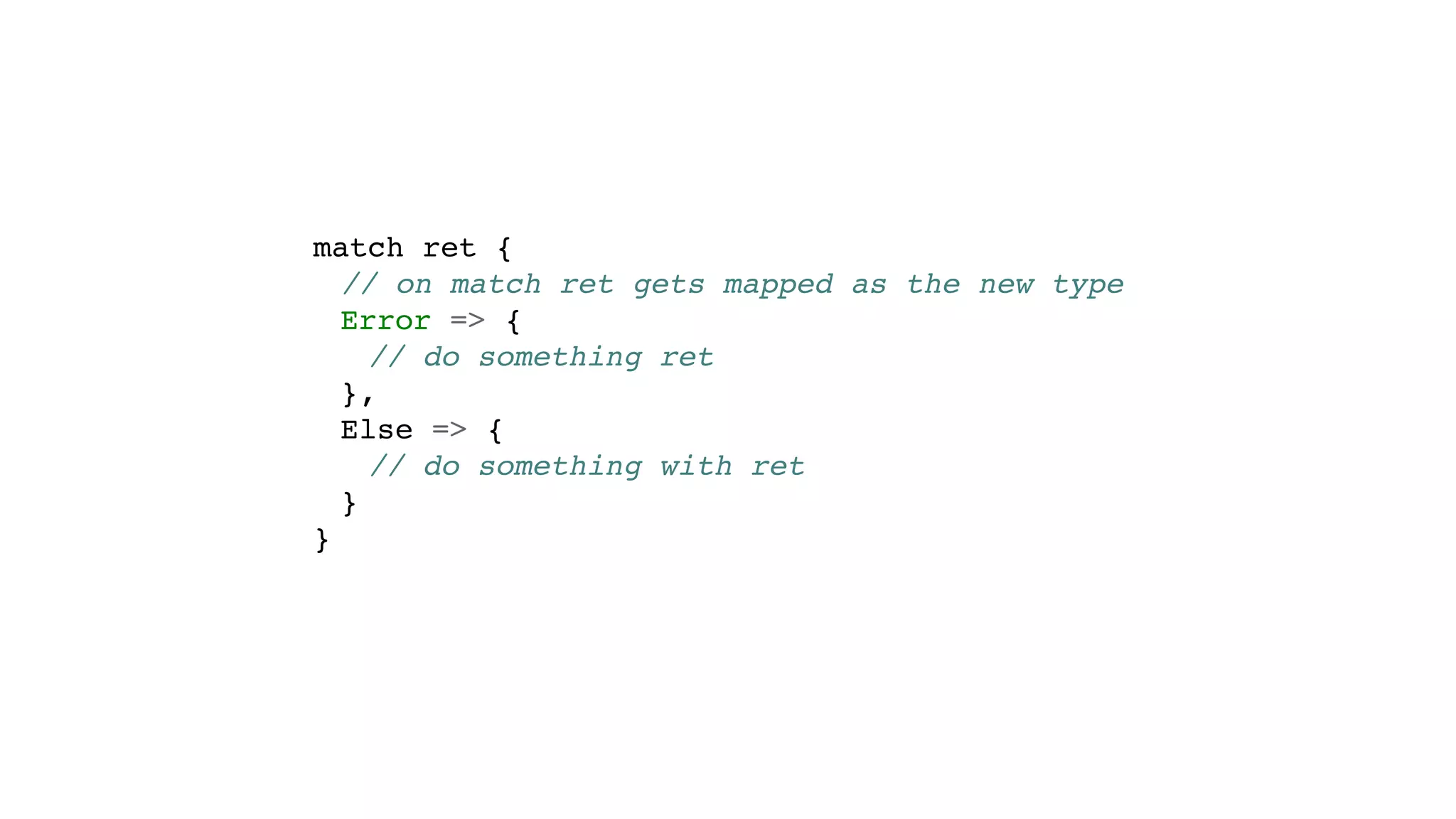

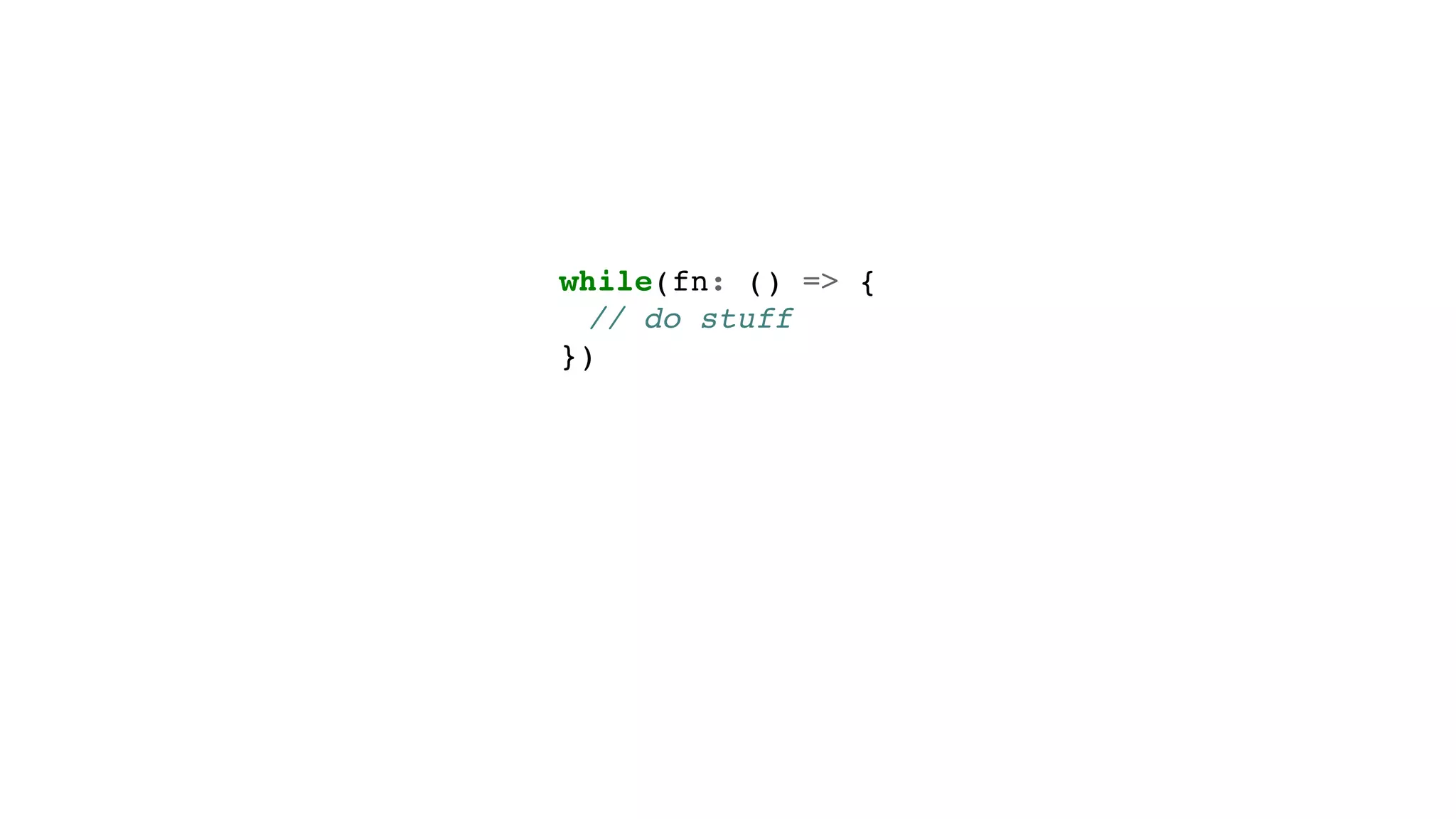

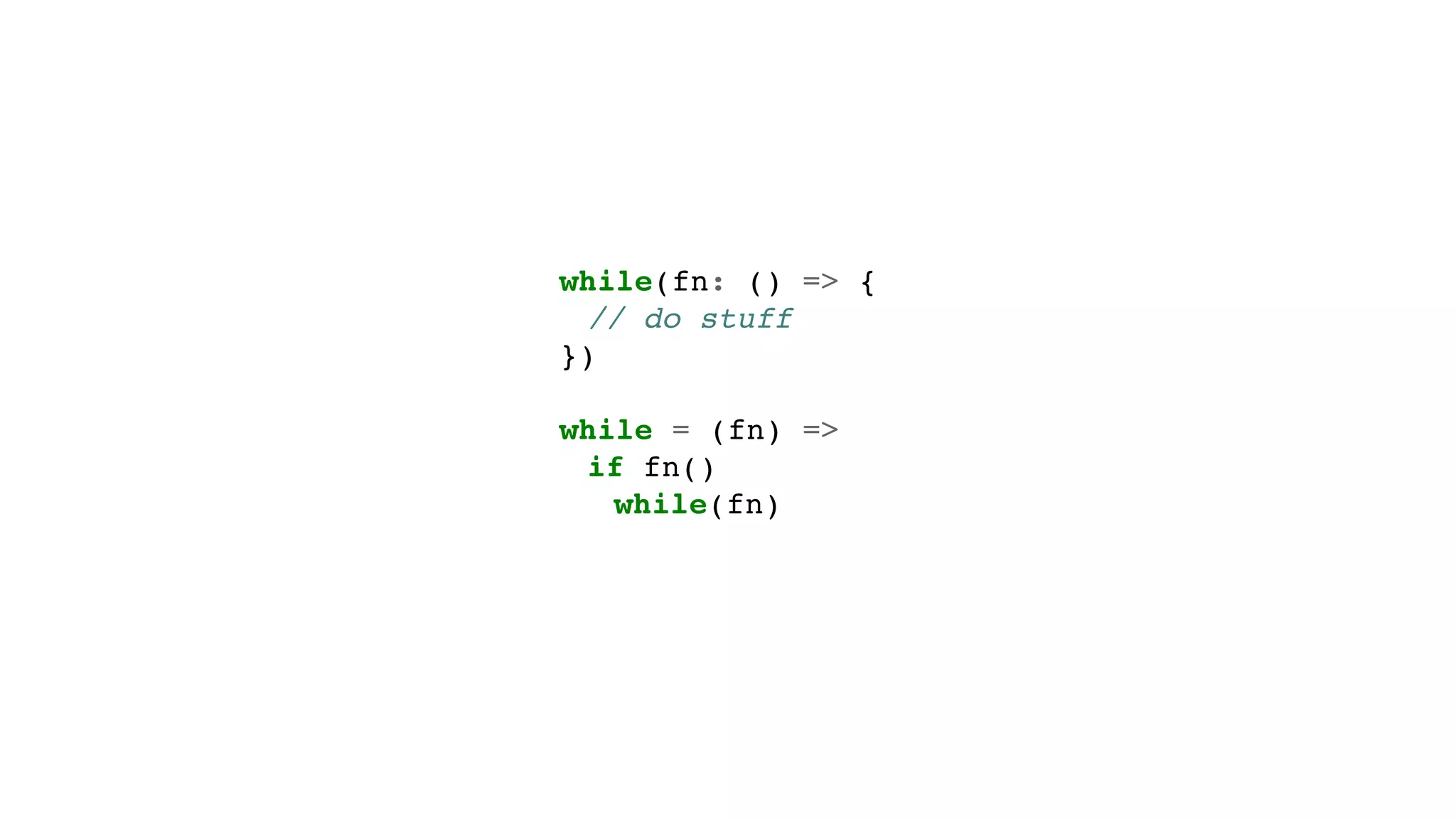

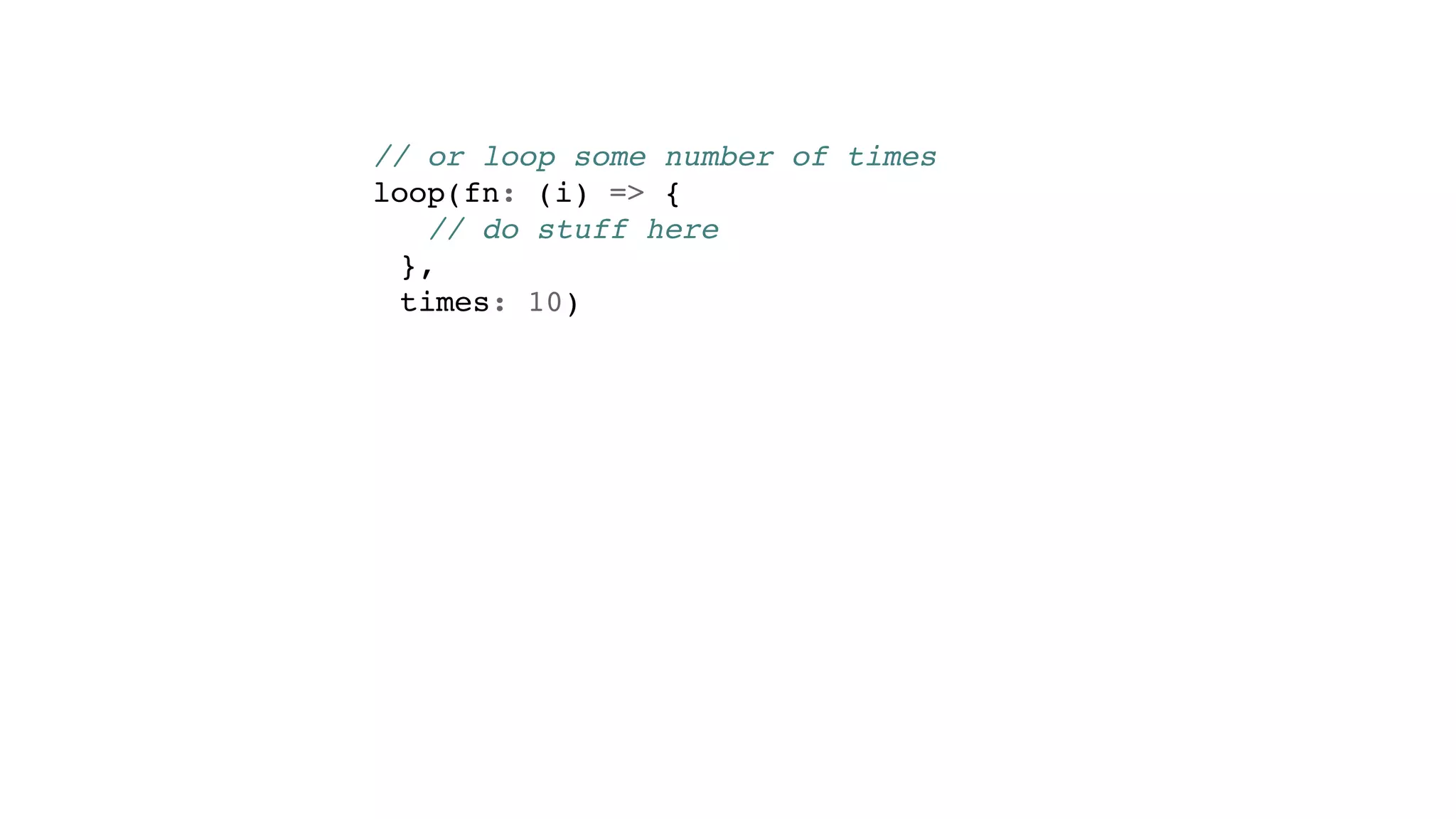

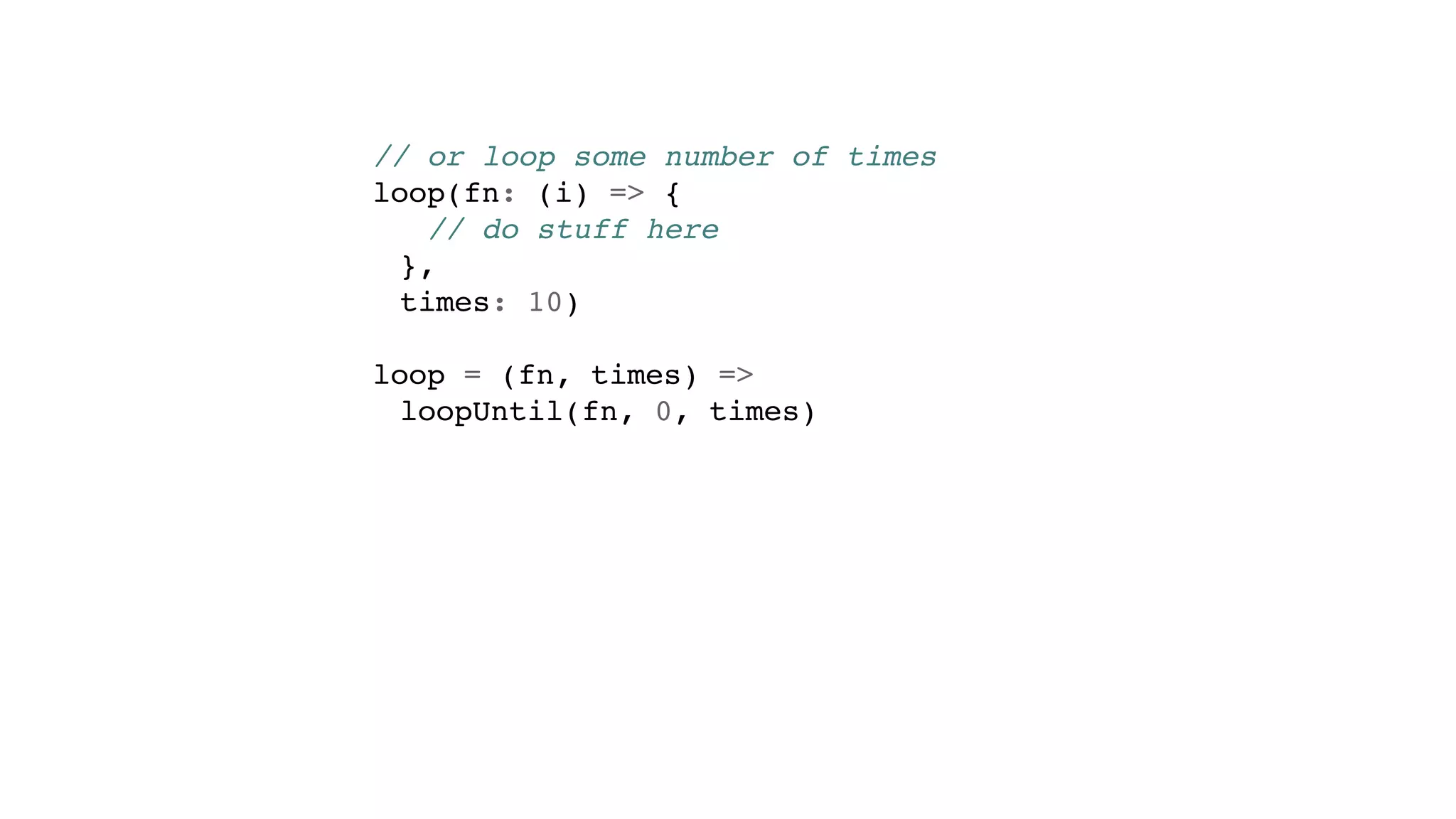

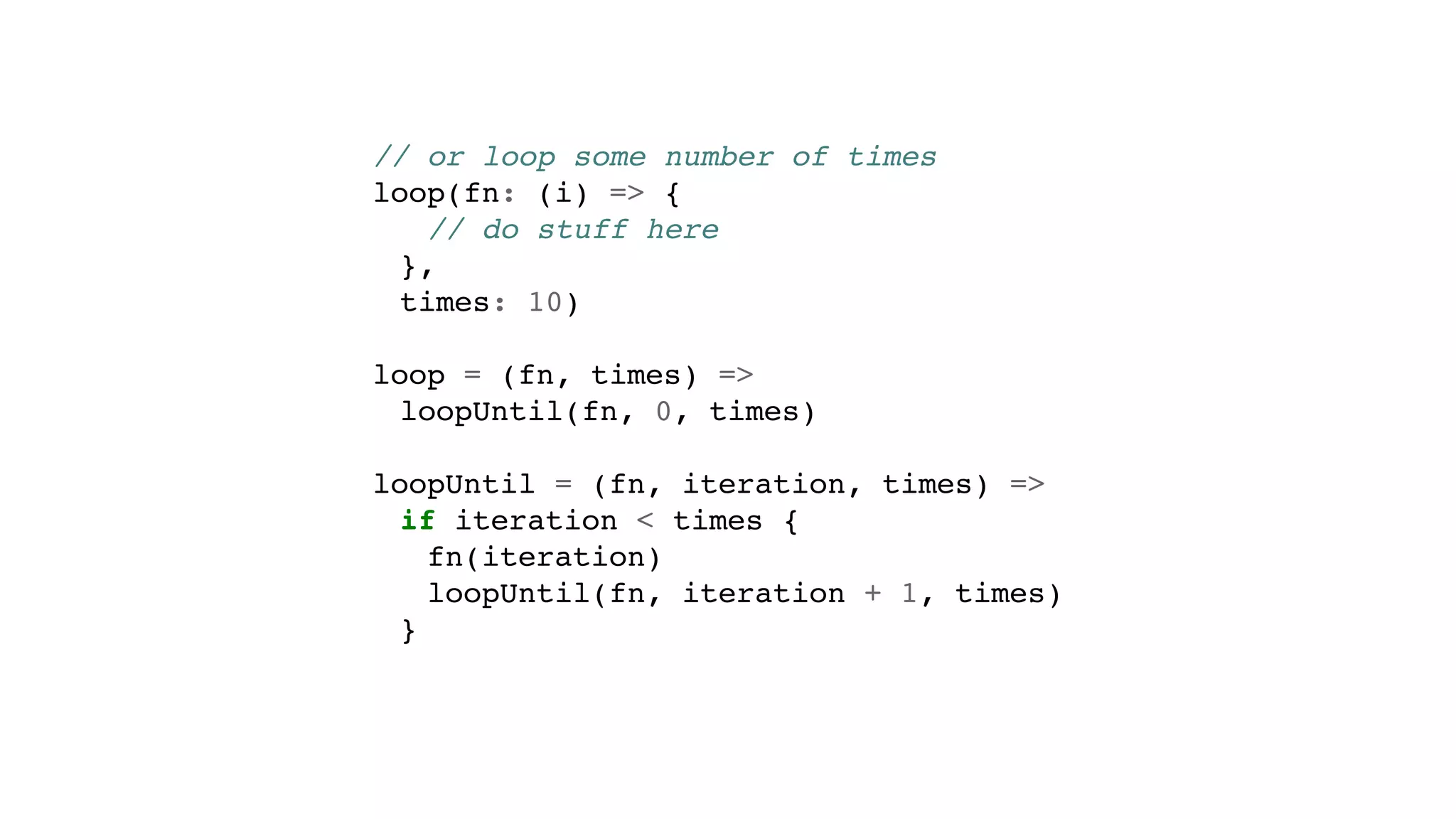

The document provides a comprehensive overview of Flux and InfluxDB 2.0, detailing its functional data scripting language, features like multi-tenancy, and migration paths. It discusses structures such as buckets, tasks, and roles, as well as code examples for data retrieval, filtering, and processing tasks. Notably, it covers various functional elements such as loops, error handling, and packages for building and managing data scripts.

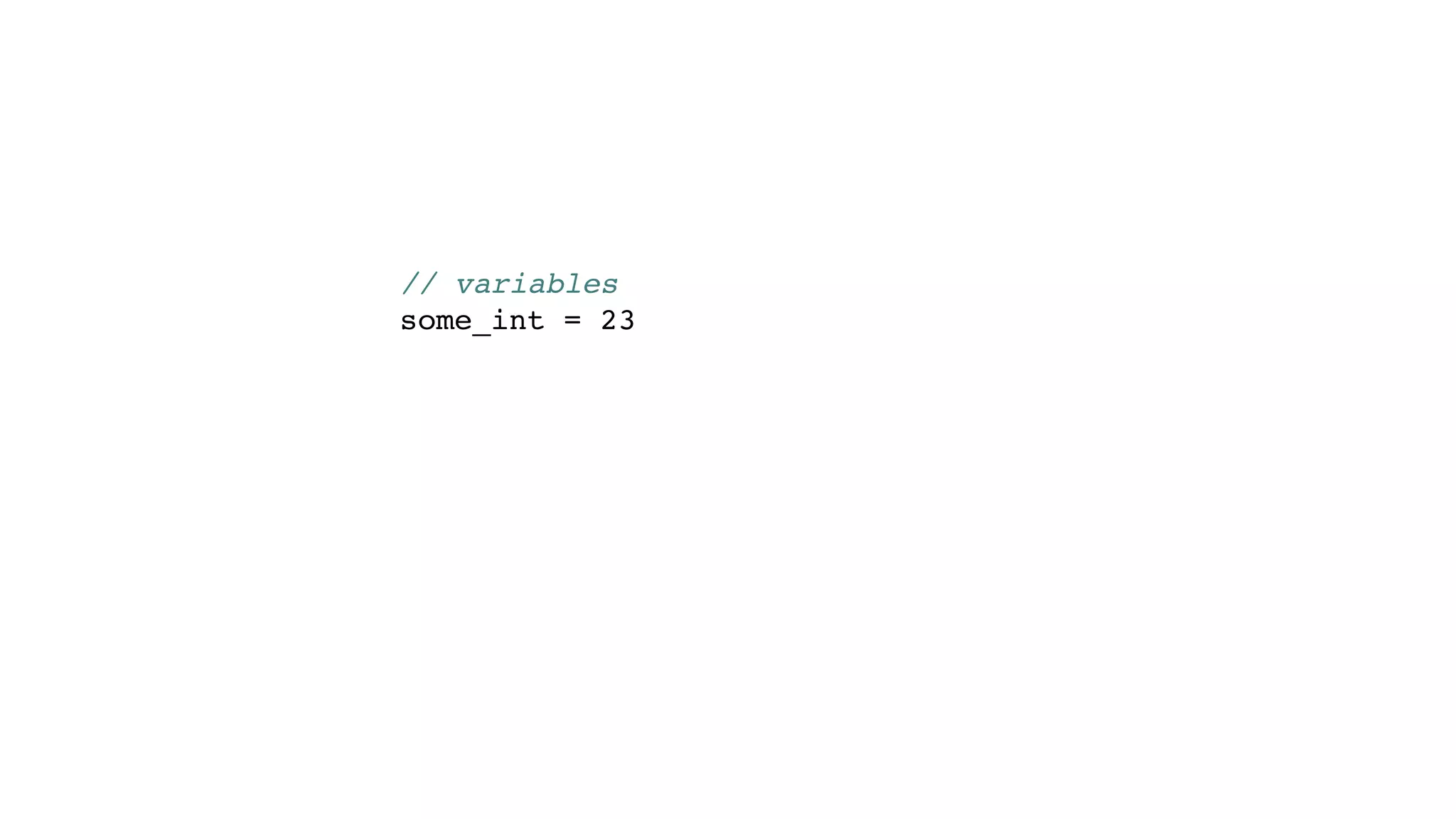

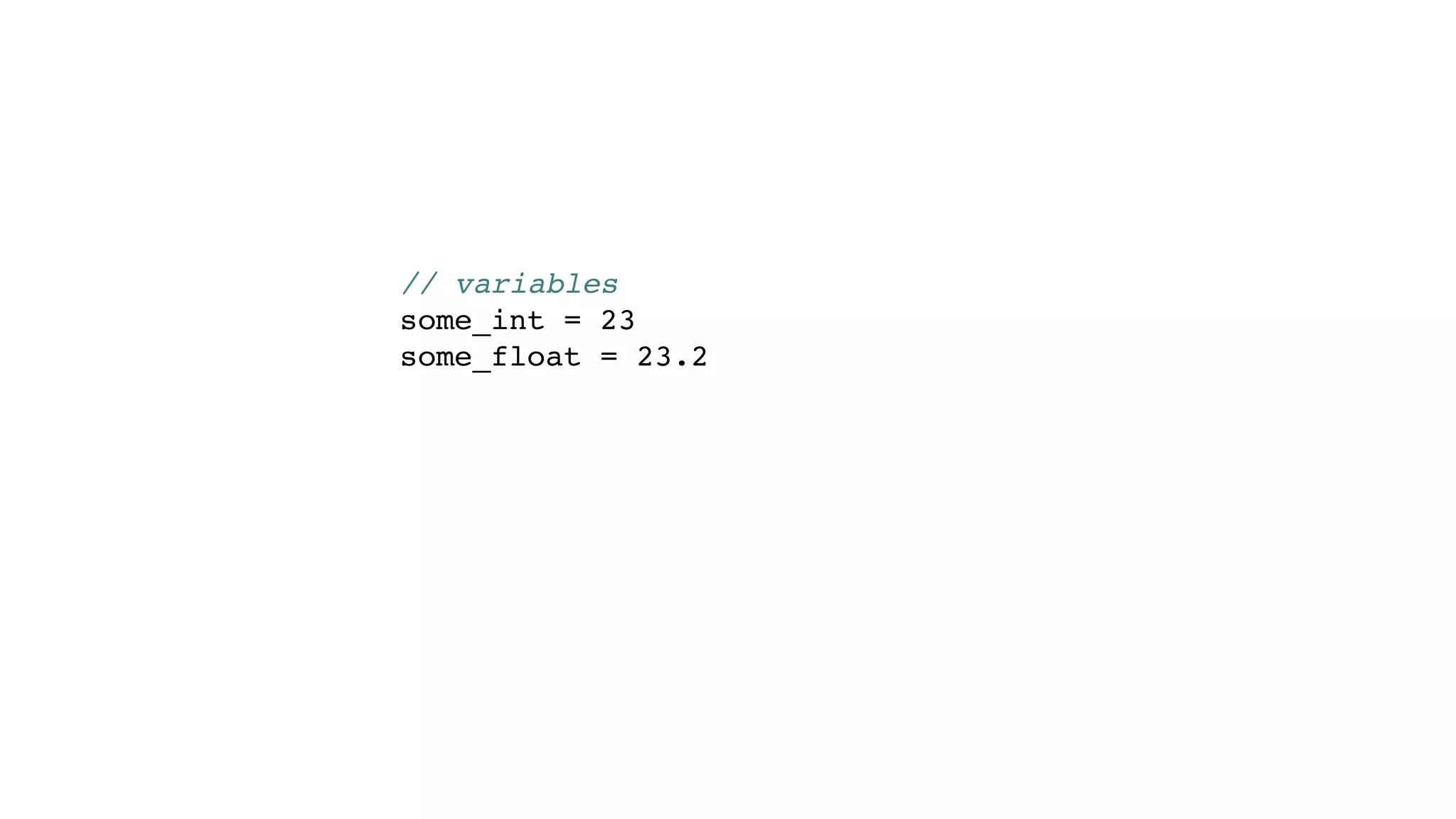

![// variables

some_int = 23

some_float = 23.2

some_string = “cpu"

some_duration = 1h

some_time = 2018-10-10T19:00:00

some_array = [1, 6, 20, 22]](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-37-2048.jpg)

![// variables

some_int = 23

some_float = 23.2

some_string = “cpu"

some_duration = 1h

some_time = 2018-10-10T19:00:00

some_array = [1, 6, 20, 22]

some_object = {foo: "hello" bar: 22}](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-38-2048.jpg)

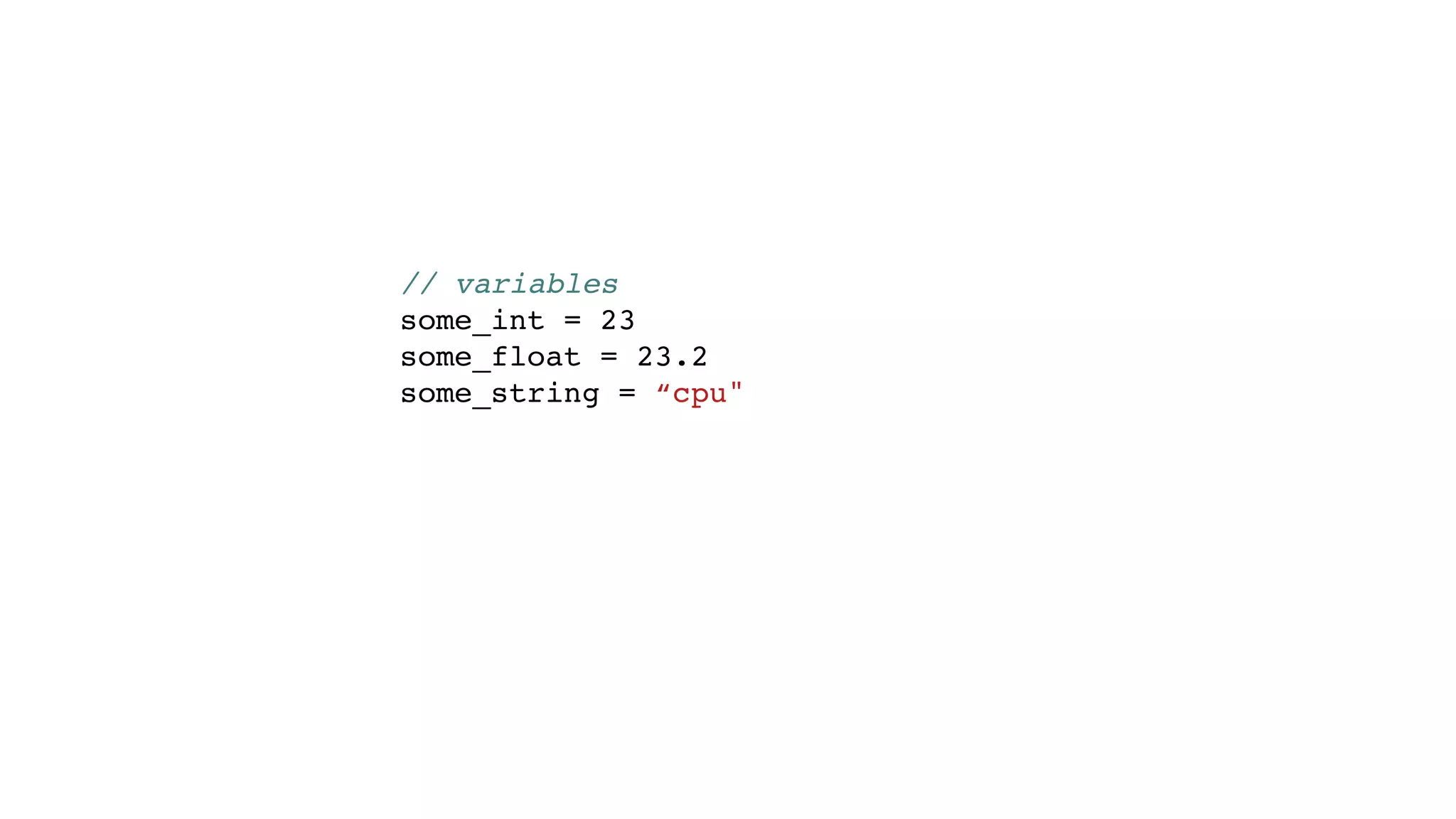

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message)](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-45-2048.jpg)

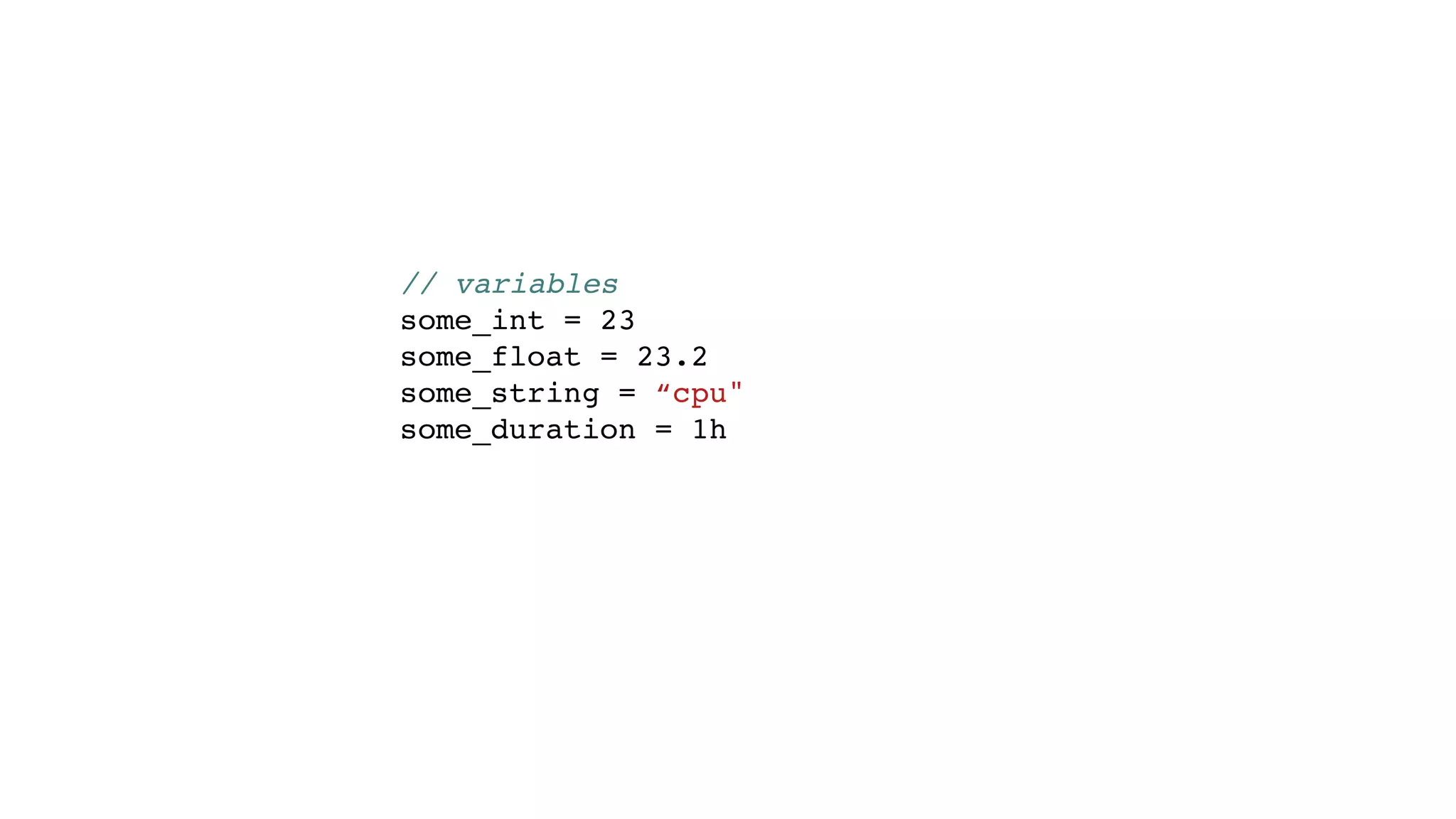

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message)

tasks](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-46-2048.jpg)

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message)

cron scheduling](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-47-2048.jpg)

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message)

packages & imports](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-48-2048.jpg)

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message)

map](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-49-2048.jpg)

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message) String interpolation](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-50-2048.jpg)

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message)

Ship data elsewhere](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-51-2048.jpg)

![option task = {

name: "email alert digest",

cron: "0 5 * * 0"

}

import "smtp"

body = ""

from(bucket: "alerts")

|> range(start: -24h)

|> filter(fn: (r) => (r.level == "warn" or r.level == "critical") and r._field == "message")

|> group(columns: ["alert"])

|> count()

|> group()

|> map(fn: (r) => body = body + "Alert {r.alert} triggered {r._value} timesn")

smtp.to(

config: loadSecret(name: "smtp_digest"),

to: "alerts@influxdata.com",

title: "Alert digest for {now()}",

body: message)

Store secrets in a

store like Vault](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-52-2048.jpg)

![// in a file called package.flux

package "paul"

option version = "0.1.1"

// import the other package files

// they must have package "paul" declaration at the top

// only package.flux has the version

import “packages”

packages.load(files: ["square.flux", "utils.flux"])](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-56-2048.jpg)

![// in a file called package.flux

package "paul"

option version = "0.1.1"

// import the other package files

// they must have package "paul" declaration at the top

// only package.flux has the version

import “packages”

packages.load(files: ["square.flux", "utils.flux"])

// or this

packages.load(glob: "*.flux")](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-57-2048.jpg)

![records = [

{name: "foo", value: 23},

{name: "bar", value: 23},

{name: "asdf", value: 56}

]](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-66-2048.jpg)

![records = [

{name: "foo", value: 23},

{name: "bar", value: 23},

{name: "asdf", value: 56}

]

// simple loop over each

records

|> map(fn: (r) => {name: r.name, value: r.value + 1})](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-67-2048.jpg)

![records = [

{name: "foo", value: 23},

{name: "bar", value: 23},

{name: "asdf", value: 56}

]

// simple loop over each

records

|> map(fn: (r) => {name: r.name, value: r.value + 1})

// compute the sum

sum = records

|> reduce(

fn: (r, accumulator) => r.value + accumulator,

i: 0

)](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-68-2048.jpg)

![records = [

{name: "foo", value: 23},

{name: "bar", value: 23},

{name: "asdf", value: 56}

]

// simple loop over each

records

|> map(fn: (r) => {name: r.name, value: r.value + 1})

// compute the sum

sum = records

|> reduce(

fn: (r, accumulator) => r.value + accumulator,

i: 0

)

// get matching records

foos = records

|> filter(fn: (r) => r.name == "foo")](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-69-2048.jpg)

![// <stream object>[<predicate>,<time>:<time>,<list of strings>]](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-76-2048.jpg)

![// <stream object>[<predicate>,<time>:<time>,<list of strings>]

// and here's an example

from(bucket:"foo")[_measurement == "cpu" and _field == "usage_user",

2018-11-07:2018-11-08,

["_measurement", "_time", "_value", “_field”]]](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-77-2048.jpg)

![// <stream object>[<predicate>,<time>:<time>,<list of strings>]

// and here's an example

from(bucket:"foo")[_measurement == "cpu" and _field == "usage_user",

2018-11-07:2018-11-08,

["_measurement", "_time", "_value", “_field”]]

from(bucket:"foo")

|> filter(fn: (row) => row._measurement == "cpu" and row._field == "usage_user")

|> range(start: 2018-11-07, stop: 2018-11-08)

|> keep(columns: ["_measurement", "_time", "_value", “_field"])](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-78-2048.jpg)

![from(bucket:"foo")[_measurement == "cpu"]

// notice the trailing commas can be left off

from(bucket: "foo")

|> filter(fn: (row) => row._measurement == "cpu")

|> last()](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-79-2048.jpg)

![from(bucket:"foo")["some tag" == "asdf",,]

from(bucket: "foo")

|> filter(fn: (row) => row["some tag"] == "asdf")

|> last()](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-80-2048.jpg)

![from(bucket:"foo")[foo=="bar",-1h]

from(bucket: "foo")

|> filter(fn: (row) => row.foo == "bar")

|> range(start: -1h)](https://image.slidesharecdn.com/session1-fluxandinfluxdb2-190317204004/75/Flux-and-InfluxDB-2-0-by-Paul-Dix-81-2048.jpg)