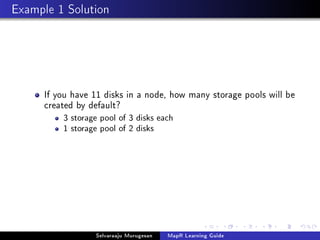

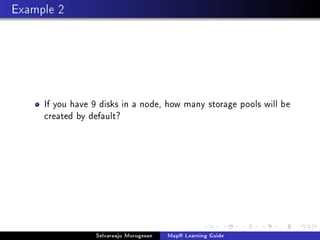

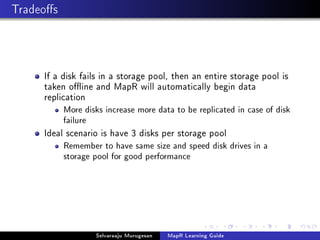

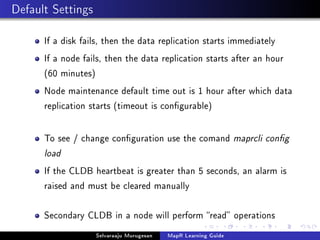

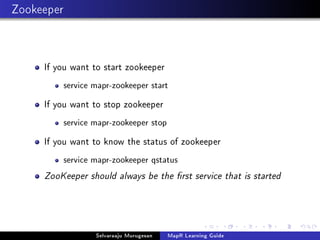

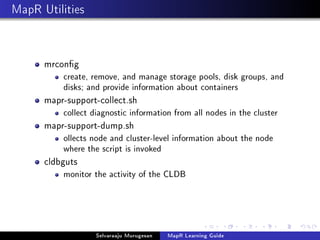

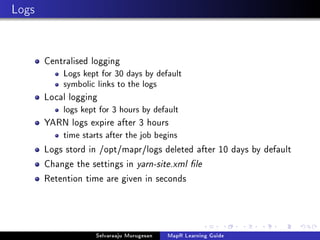

MapR groups disks into storage pools to distribute data. By default, each storage pool contains 3 disks, and each node supports up to 36 storage pools. The `mrconfig` command creates, removes, and manages storage pools and disks. ZooKeeper should always be started before other services and is critical for high availability. Logs are stored centrally for 30 days by default, and the retention period can be configured in `yarn-site.xml`.
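
To make the storage pool arithmetic concrete, here is a minimal Python sketch that splits a node's disks into pools of 3 and enforces the 36-pool limit. It is an illustration only, not how MapR itself assigns disks: the function names and the device paths are hypothetical, and placing leftover disks in a smaller final pool is an assumption. The helper `retention_seconds` simply converts the 30-day log retention into seconds, on the assumption that the setting in `yarn-site.xml` is expressed in seconds.

```python
from typing import List

DISKS_PER_POOL = 3        # default storage pool size stated in the text
MAX_POOLS_PER_NODE = 36   # per-node storage pool limit stated in the text


def group_into_pools(disks: List[str], width: int = DISKS_PER_POOL) -> List[List[str]]:
    """Split a node's disks into consecutive groups of `width` disks.

    Assumption: leftover disks form a smaller final pool.
    """
    if width < 1:
        raise ValueError("width must be at least 1")
    pools = [disks[i:i + width] for i in range(0, len(disks), width)]
    if len(pools) > MAX_POOLS_PER_NODE:
        raise ValueError(
            f"{len(pools)} pools exceeds the per-node limit of {MAX_POOLS_PER_NODE}"
        )
    return pools


def retention_seconds(days: int = 30) -> int:
    """Convert a log retention period in days to seconds."""
    return days * 24 * 60 * 60


if __name__ == "__main__":
    # Hypothetical device names, for illustration only.
    node_disks = [f"/dev/sd{letter}" for letter in "bcdefgh"]
    for index, pool in enumerate(group_into_pools(node_disks)):
        print(f"pool {index}: {pool}")
    print("30-day retention in seconds:", retention_seconds())
```

With the default size of 3, a node reaches the 36-pool limit at 108 disks, so the limit check only matters for very dense nodes or for smaller pool sizes.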