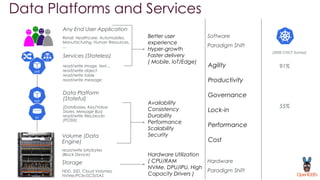

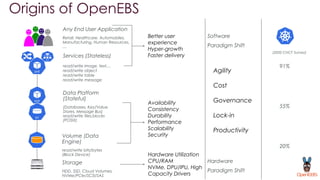

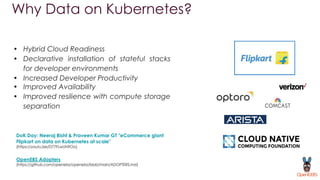

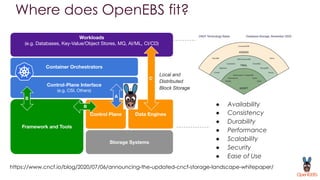

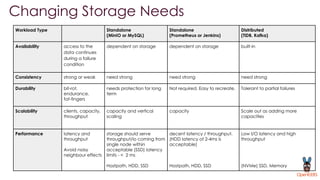

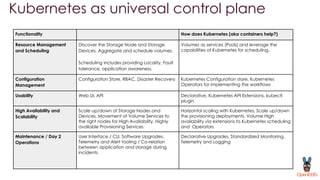

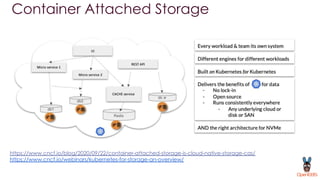

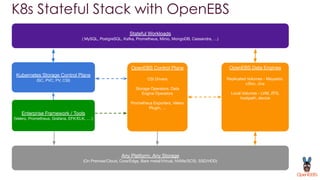

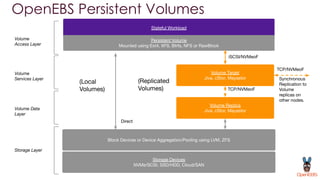

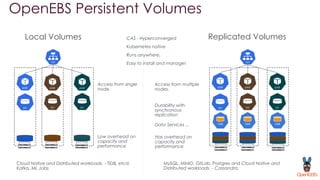

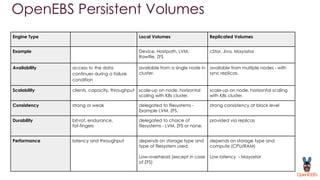

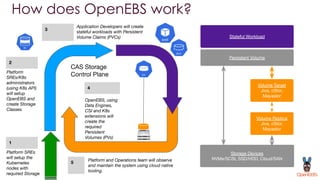

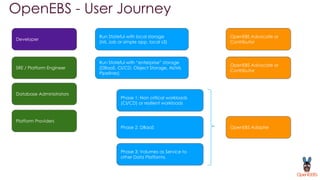

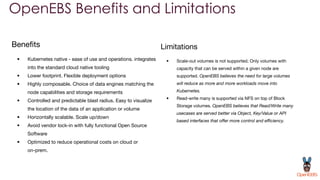

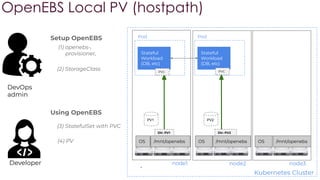

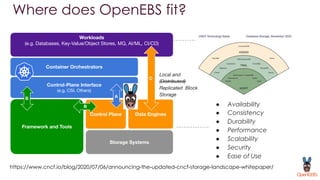

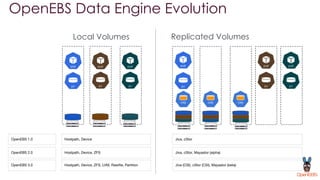

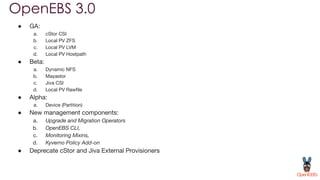

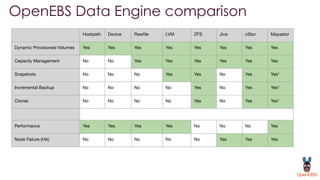

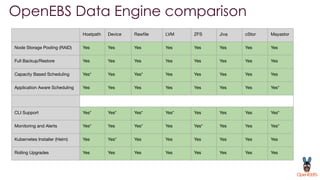

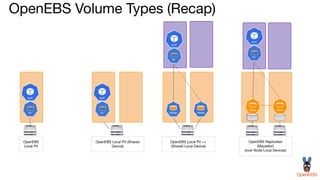

OpenEBS is an open-source Container Attached Storage (CAS) solution for Kubernetes that simplifies running stateful workloads. Its storage runs in containers and is native to Kubernetes: it relies on the Container Storage Interface (CSI), dynamic volume provisioning, and integration with common DevOps tools. OpenEBS offers both local and replicated volume types, so a workload can trade off availability, performance, and scalability as needed. Developers consume OpenEBS volumes like any other Kubernetes storage, by creating persistent volume claims (PVCs) for their applications.
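As a minimal sketch of what such a claim could look like, the Python snippet below builds a PersistentVolumeClaim manifest and prints it as JSON. The claim name `demo-data`, the size `5Gi`, and the helper `build_pvc` are hypothetical. The storage class name `openebs-hostpath` is an assumption about what the cluster's OpenEBS installation exposes; substitute whatever `kubectl get storageclass` reports.

```python
import json


def build_pvc(name: str, storage_class: str, size: str) -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # Assumed StorageClass name; it selects which provisioner
            # (local or replicated) dynamically creates the volume.
            "storageClassName": storage_class,
            # ReadWriteOnce: mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical claim name and size, for illustration only.
pvc = build_pvc("demo-data", "openebs-hostpath", "5Gi")
manifest = json.dumps(pvc, indent=2)
print(manifest)
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped to `kubectl apply -f -`. A pod would then reference the claim by name under `spec.volumes[].persistentVolumeClaim.claimName`, exactly as it would for any other storage backend.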