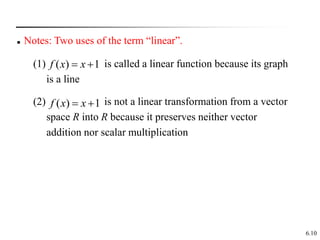

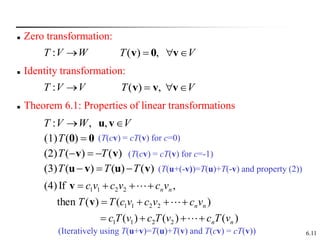

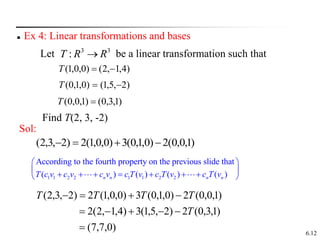

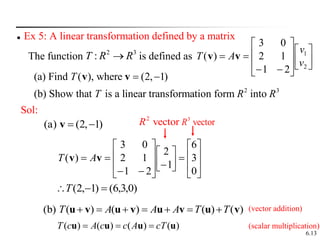

This document discusses linear transformations. It begins by defining a linear transformation as a function between vector spaces that preserves vector addition and scalar multiplication. It provides examples of linear transformations and functions that are not linear transformations. It then discusses properties of linear transformations including the zero and identity transformations. It introduces the concept that a linear transformation can be represented by a matrix and that matrix multiplication defines a linear transformation. It concludes by stating that a linear transformation defined by a matrix satisfies the properties of being linear.

![6.65

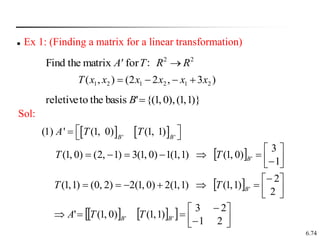

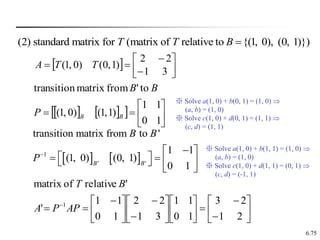

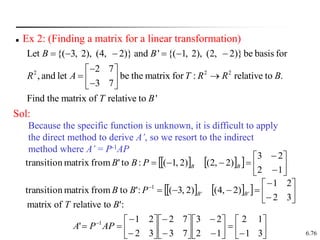

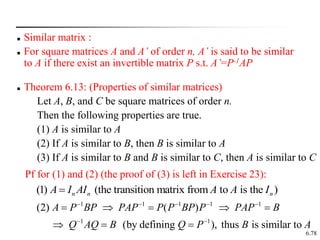

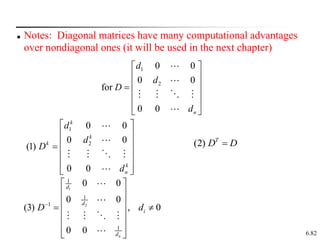

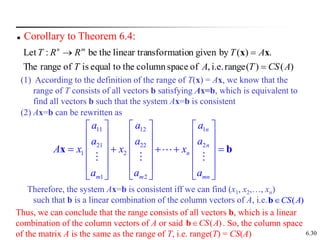

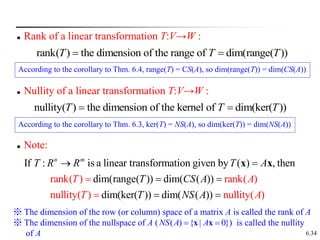

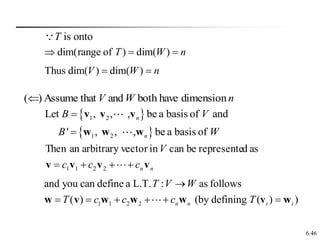

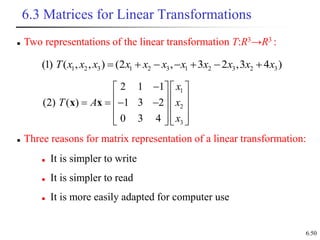

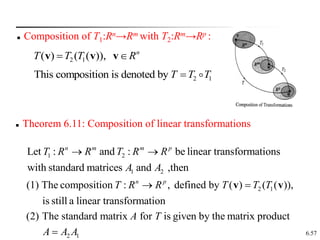

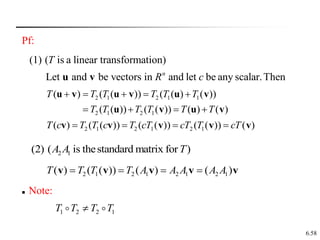

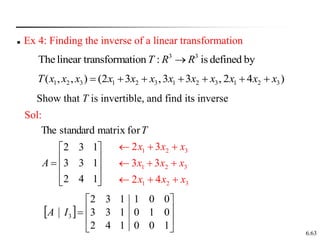

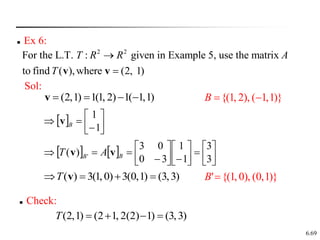

The matrix of T relative to the bases B and B‘:

1 2

: (a linear transformation)

{ , , , } (a nonstandard basis for )

The coordinate matrix of any relative to is denoted by [ ]

n

B

T V W

B V

B

v v v

v v

A matrix A can represent T if the result of A multiplied by a

coordinate matrix of v relative to B is a coordinate matrix of v

relative to B’, where B’ is a basis for W. That is,

where A is called the matrix of T relative to the bases B and B’

'

( ) [ ] ,

B

B

T A

v v

1

2

1 1 2 2

if can be represeted as , then [ ]

n n B

n

c

c

c c c

c

v v v v v](https://image.slidesharecdn.com/linear-transformations-2017-03-19-14-38-49-230425174726-58d12d97/85/linear-transformations-2017-03-19-14-38-49-pdf-65-320.jpg)
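As a small worked illustration of the coordinate matrix $[\mathbf{v}]_B$ (the basis and vector below are chosen for this example and are not taken from the slides), let $B = \{(1, 2), (-1, 1)\}$ be a basis for $R^2$ and let $\mathbf{v} = (2, 1)$. Solving $c_1(1, 2) + c_2(-1, 1) = (2, 1)$ gives $c_1 = 1$ and $c_2 = -1$, so

$$\mathbf{v} = 1\cdot(1, 2) + (-1)\cdot(-1, 1) = (2, 1) \quad\Longrightarrow\quad [\mathbf{v}]_B = \begin{bmatrix} 1 \\ -1 \end{bmatrix}$$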

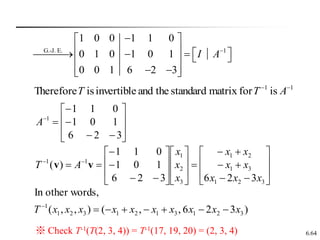

![6.67

'

is such that ( ) [ ] for every in

B

B

T A V

v v v

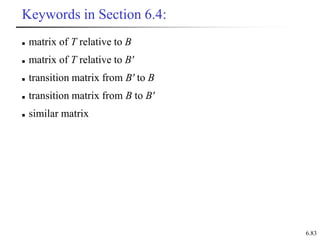

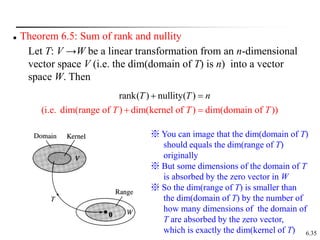

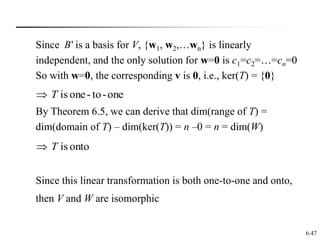

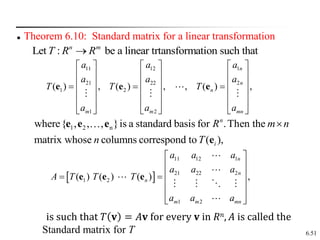

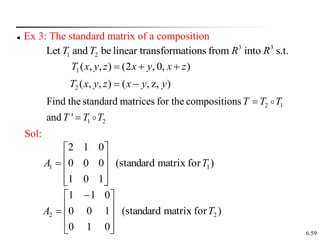

11 12 1

21 22 2

1 2

1 2

[ ( )] [ ( )] [ ( )]

n

n

B B n B

m m mn

a a a

a a a

A T T T

a a a

v v v

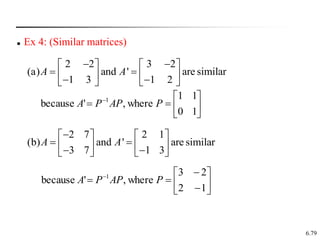

※The above result state that the coordinate of T(v) relative to the basis B’

equals the multiplication of A defined above and the coordinate of v

relative to the basis B.

※ Comparing to the result in Thm. 6.10 (T(v) = Av), it can infer that the

linear transformation and the basis change can be achieved in one step

through multiplying the matrix A defined above (see the figure on 6.74

for illustration)](https://image.slidesharecdn.com/linear-transformations-2017-03-19-14-38-49-230425174726-58d12d97/85/linear-transformations-2017-03-19-14-38-49-pdf-67-320.jpg)
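The column-by-column construction of $A$ can be checked numerically. The following is a minimal sketch in plain Python, assuming a map $T(x_1, x_2) = (x_1 + x_2,\ 2x_1 - x_2)$ on $R^2$ and the bases `B` and `B_prime` below. These choices, and the helper names `coords` and `matvec`, are illustrative assumptions and do not come from the slides.

```python
from fractions import Fraction


def coords(basis, vec):
    """Coordinates of vec relative to a basis of R^2, via Cramer's rule."""
    (a, c), (b, d) = basis  # the two basis vectors are (a, c) and (b, d)
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("basis vectors are linearly dependent")
    x, y = vec
    return (Fraction(x * d - b * y) / det, Fraction(a * y - c * x) / det)


def matvec(M, x):
    """Product of a matrix (list of rows) with a vector."""
    return tuple(sum(row[j] * x[j] for j in range(len(x))) for row in M)


def T(v):
    """Hypothetical linear map used only for this illustration."""
    x1, x2 = v
    return (x1 + x2, 2 * x1 - x2)


B = [(1, 2), (-1, 1)]        # assumed basis for the domain
B_prime = [(1, 1), (0, 1)]   # assumed basis for the codomain

# Column j of A is the coordinate matrix of T(v_j) relative to B'.
columns = [coords(B_prime, T(v)) for v in B]
A = [[columns[j][i] for j in range(len(B))] for i in range(2)]

# Check [T(v)]_{B'} = A [v]_B for one sample vector.
v = (2, 1)
lhs = coords(B_prime, T(v))
rhs = matvec(A, coords(B, v))
print(A, lhs, rhs)
```

Exact `Fraction` arithmetic is used so that the two sides can be compared with `==` without floating-point tolerance.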

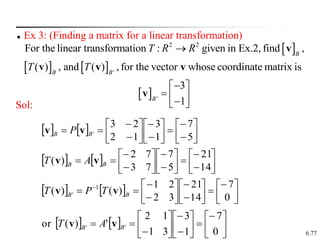

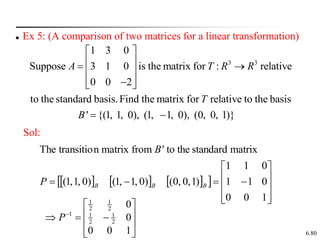

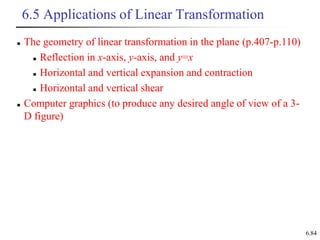

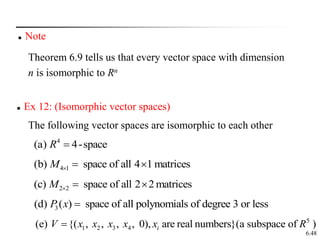

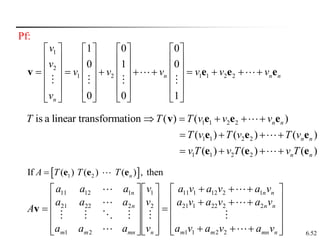

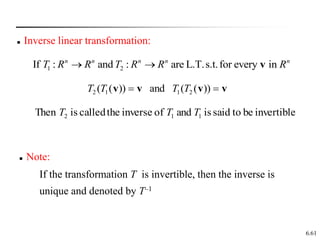

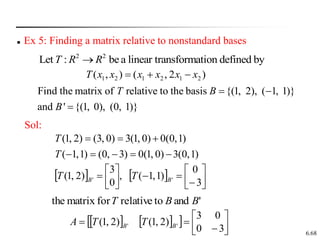

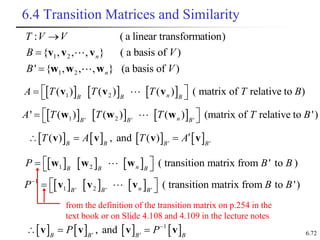

![6.73

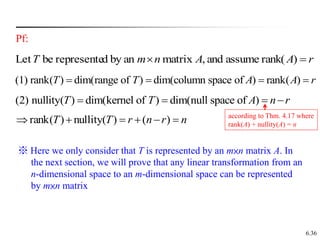

Two ways to get from to :

' '

(1) (direct): '[ ] [ ( )]

B B

A T

v v

'

B

v '

)

( B

T v

1 1

' '

(2) (indirect): [ ] [ ( )] '

B B

P AP T A P AP

v v

direct

indirect](https://image.slidesharecdn.com/linear-transformations-2017-03-19-14-38-49-230425174726-58d12d97/85/linear-transformations-2017-03-19-14-38-49-pdf-73-320.jpg)
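The relation $A' = P^{-1}AP$ can be verified on a small case. The sketch below assumes that $A$ is the matrix of $T$ relative to a basis $B$ (here the standard basis of $R^2$), that $A'$ is the matrix of $T$ relative to $B' = \{(1, 0), (1, 1)\}$, and that $P$ is the transition matrix from $B'$ to $B$, so that $P[\mathbf{v}]_{B'} = [\mathbf{v}]_B$. The specific matrices and the helper names `matmul` and `inverse_2x2` are illustrative assumptions, not taken from the slides.

```python
from fractions import Fraction


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(M):
    """Inverse of a 2x2 matrix using the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


# Assumed example: T(x1, x2) = (2*x1 - 2*x2, -x1 + 3*x2), B = standard basis.
A = [[2, -2], [-1, 3]]

# Columns of P are the vectors of B' = {(1, 0), (1, 1)} written in B.
P = [[1, 1], [0, 1]]
P_inv = inverse_2x2(P)

# Matrix of T relative to B'.
A_prime = matmul(P_inv, matmul(A, P))

# Compare the two routes for one coordinate vector [v]_{B'}.
v_Bp = [[1], [2]]
direct = matmul(A_prime, v_Bp)                       # A' [v]_{B'}
indirect = matmul(P_inv, matmul(A, matmul(P, v_Bp)))  # P^{-1} A P [v]_{B'}
print(A_prime, direct, indirect)
```

Reading the indirect product from right to left mirrors the indirect route: `P` converts $[\mathbf{v}]_{B'}$ to $[\mathbf{v}]_B$, `A` maps it to $[T(\mathbf{v})]_B$, and `P_inv` converts the result back to $[T(\mathbf{v})]_{B'}$.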