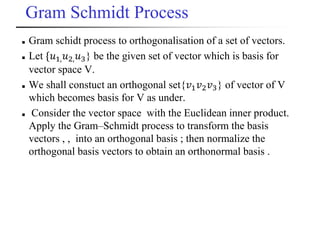

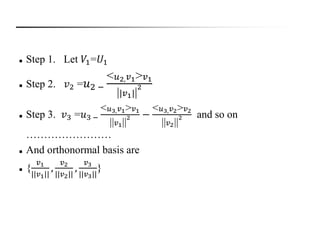

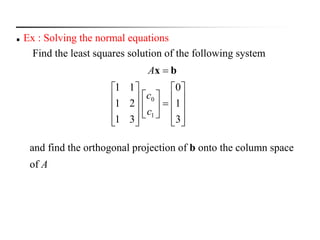

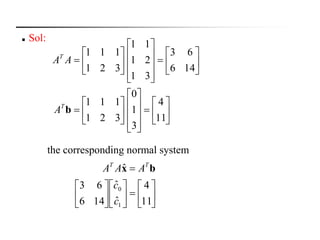

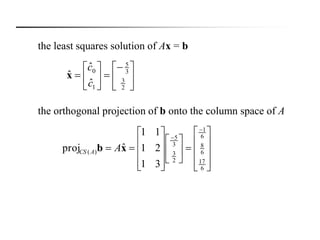

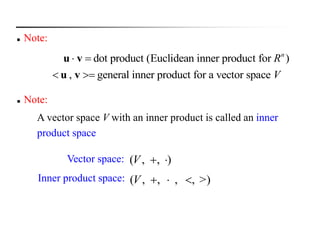

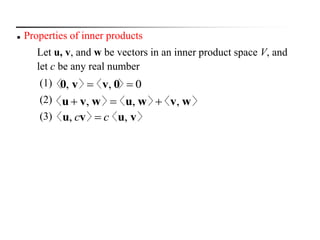

(1) The document discusses inner product spaces and related linear algebra concepts: orthogonal vectors and bases, the Gram-Schmidt process, orthogonal complements, and orthogonal projections.

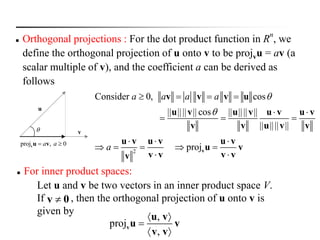

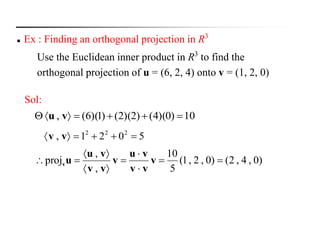

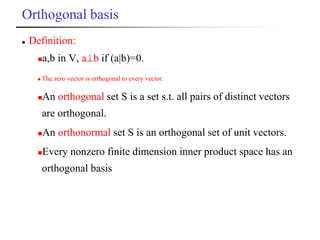

(2) Key topics covered include defining inner products and their properties, finding orthogonal vectors and constructing orthogonal bases, using the Gram-Schmidt process to orthogonalize a set of vectors, defining and finding orthogonal complements of subspaces, and computing orthogonal projections of vectors.
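As a rough illustration of the Gram-Schmidt step mentioned above, the following is a minimal sketch rather than the slides' own worked example. It assumes the Euclidean inner product on R^n, and the helper names `dot` and `gram_schmidt` as well as the input vectors are hypothetical choices made for this illustration. It uses the "modified" ordering, subtracting each projection from the running vector, which gives the same result as the textbook formula in exact arithmetic.

```python
from math import sqrt

def dot(u, v):
    """Euclidean inner product on R^n (an assumed choice of inner product)."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list spanning the same subspace as `vectors`."""
    basis = []
    for v in vectors:
        w = list(v)
        # Remove the component of w along each orthonormal vector found so far.
        for q in basis:
            coeff = dot(w, q)
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        norm = sqrt(dot(w, w))
        # Skip vectors that are (numerically) dependent on the earlier ones.
        if norm > tol:
            basis.append([wi / norm for wi in w])
    return basis

# Hypothetical input: three linearly independent vectors in R^3.
basis = gram_schmidt([(1, 1, 0), (1, 0, 1), (0, 1, 1)])
```

Normalizing inside the loop yields an orthonormal basis directly; dropping the division by `norm` would give an orthogonal, but not normalized, basis instead.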

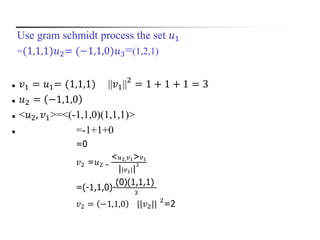

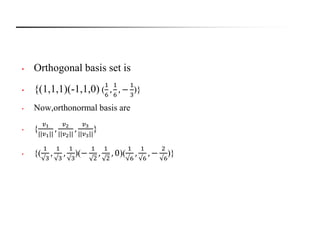

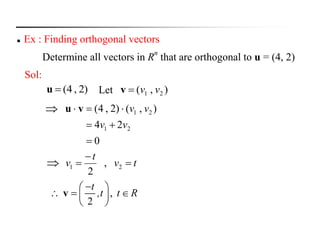

(3) Examples are provided to demonstrate computing orthogonal bases, orthogonal complements, and orthogonal projections in inner product spaces.

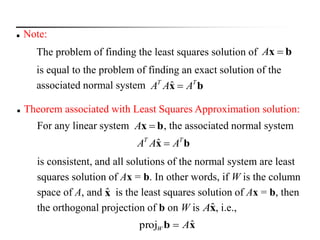

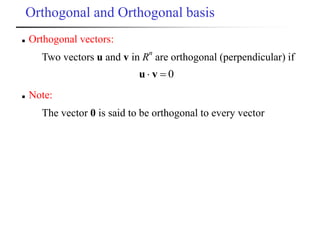

![Theorems](https://image.slidesharecdn.com/ips1-170503173350/85/Inner-Product-Space-13-320.jpg)

Theorems:

If $S = \{v_1, v_2, \dots, v_n\}$ is an orthogonal set of nonzero vectors in an inner product space $V$, then $S$ is linearly independent.

Any orthogonal set of $n$ nonzero vectors in $\mathbb{R}^n$ is a basis for $\mathbb{R}^n$.

If $S = \{v_1, v_2, \dots, v_n\}$ is an orthonormal basis for an inner product space $V$, and $u$ is any vector in $V$, then $u$ can be expressed as a linear combination of $v_1, v_2, \dots, v_n$:

$$u = \langle u, v_1 \rangle v_1 + \langle u, v_2 \rangle v_2 + \dots + \langle u, v_n \rangle v_n$$

If $S$ is an orthonormal basis for an $n$-dimensional inner product space, and the coordinate vectors of $u$ and $v$ with respect to $S$ are $[u]_S = (a_1, a_2, \dots, a_n)$ and $[v]_S = (b_1, b_2, \dots, b_n)$, then

$$\|u\| = \sqrt{a_1^2 + a_2^2 + \dots + a_n^2}$$

$$d(u, v) = \sqrt{(a_1 - b_1)^2 + (a_2 - b_2)^2 + \dots + (a_n - b_n)^2}$$

$$\langle u, v \rangle = a_1 b_1 + a_2 b_2 + \dots + a_n b_n = [u]_S \cdot [v]_S$$
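
The coordinate formulas for an orthonormal basis can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses the Euclidean inner product on R^2, a hypothetical orthonormal basis `S` obtained by rotating the standard basis by 45 degrees, and made-up vectors `u` and `v`. The helper names `dot` and `coords` are not from the slides.

```python
from math import sqrt

def dot(u, v):
    """Euclidean inner product on R^n (an assumed choice of inner product)."""
    return sum(a * b for a, b in zip(u, v))

def coords(u, basis):
    """Coordinates of u in an orthonormal basis: a_i = <u, v_i>."""
    return [dot(u, q) for q in basis]

# Hypothetical orthonormal basis of R^2 (standard basis rotated by 45 degrees).
r = 1 / sqrt(2)
S = [(r, r), (-r, r)]

# Hypothetical example vectors.
u = (3.0, 1.0)
v = (1.0, 2.0)

a = coords(u, S)  # [u]_S
b = coords(v, S)  # [v]_S

# u = <u, v_1> v_1 + <u, v_2> v_2, rebuilt component by component.
u_rebuilt = [sum(ai * q[k] for ai, q in zip(a, S)) for k in range(len(u))]

# Norm, distance, and inner product computed from the coordinates alone.
norm_from_coords = sqrt(sum(ai ** 2 for ai in a))
dist_from_coords = sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))
inner_from_coords = dot(a, b)
```

Up to floating-point rounding, `norm_from_coords`, `dist_from_coords`, and `inner_from_coords` should agree with the same quantities computed directly from `u` and `v` in the standard basis, which is what the last theorem asserts.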