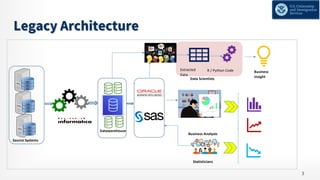

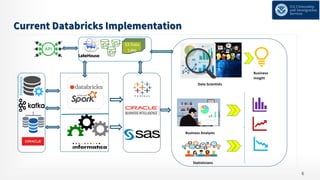

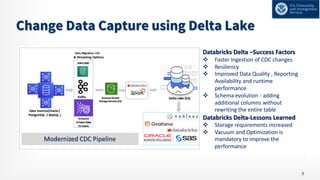

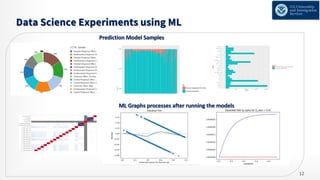

The document details the modernization of an ETL pipeline using Databricks, addressing earlier challenges with long development cycles, slow data loads, and architectural limitations. It highlights achievements such as faster data ingestion, integration capabilities, automated monitoring, and improved data quality through Delta Lake. It also outlines the implementation of a unified data analytics platform along with security and governance measures, and presents the success criteria and benefits of the new system.