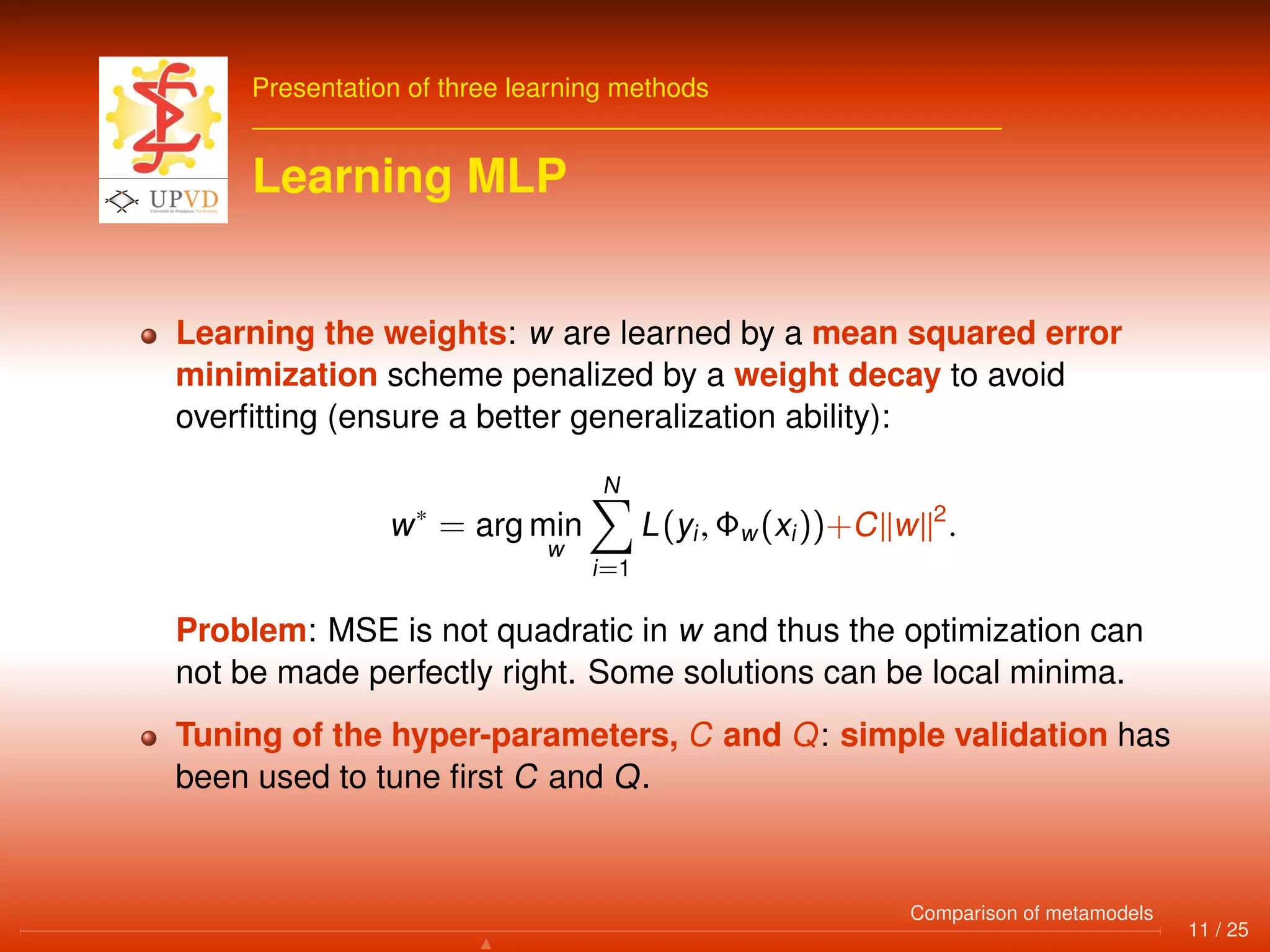

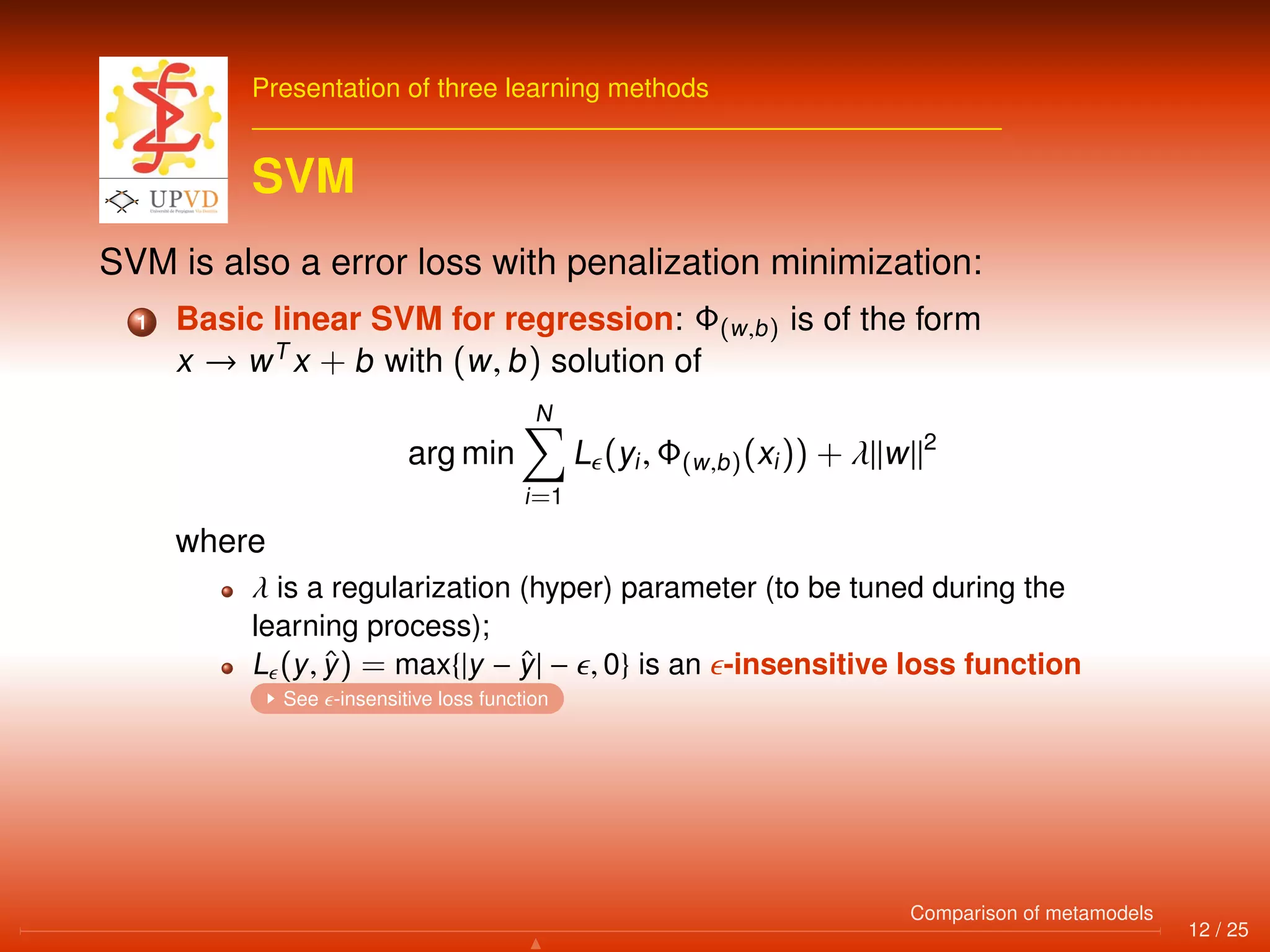

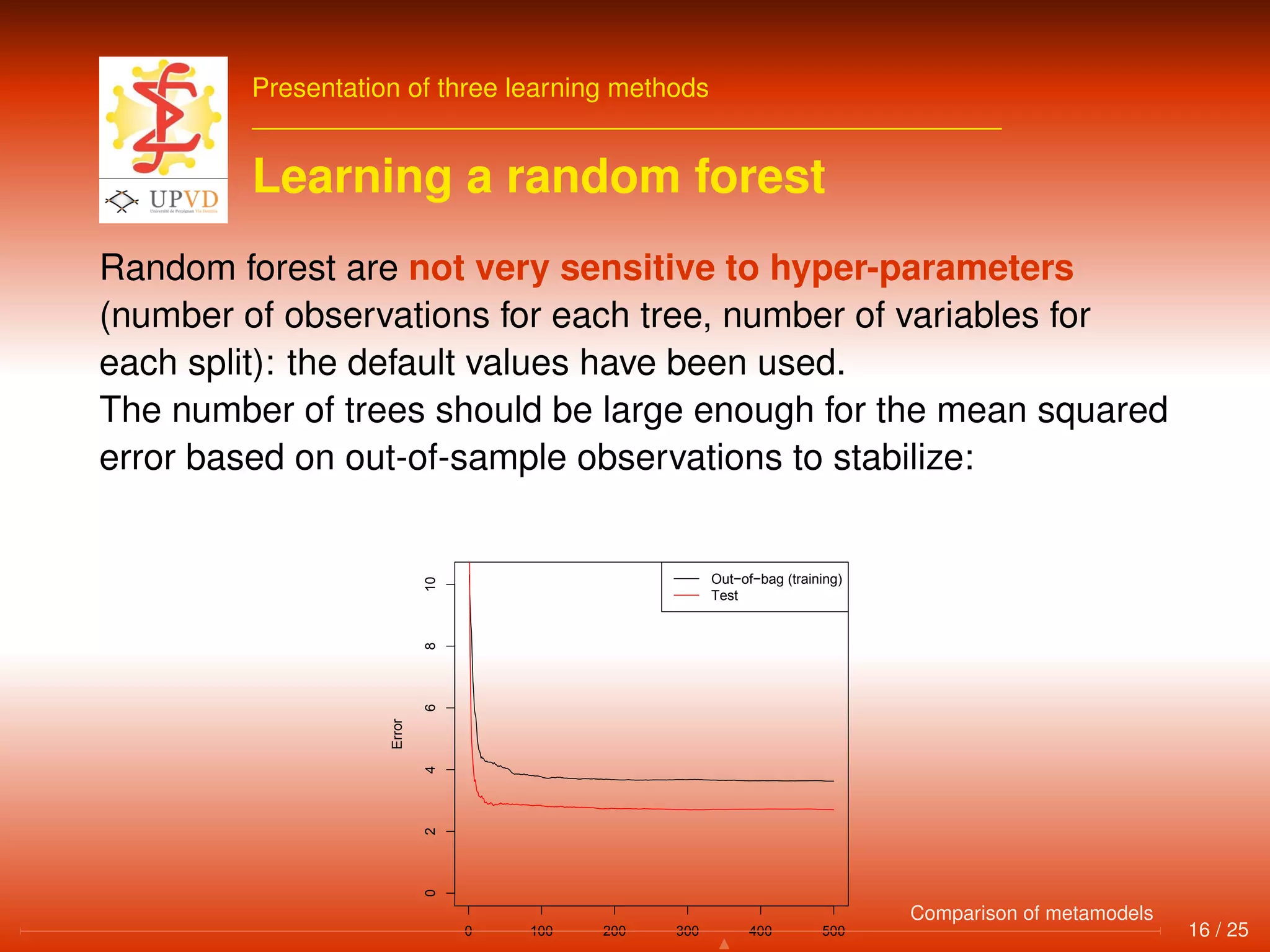

The document compares three machine learning methods - multi-layer perceptrons (neural networks), support vector machines (SVMs), and random forests - for predicting N2O fluxes and N leaching from various data inputs. It provides background on machine learning for regression problems, describes the three methods and how they are trained and tuned, and discusses the methodology and results of a study comparing the performance of these methods.

![Outline

1 Basics about machine learning for regression problems

2 Presentation of three learning methods

3 Methodology and results [Villa-Vialaneix et al., 2010]

Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-2-2048.jpg)


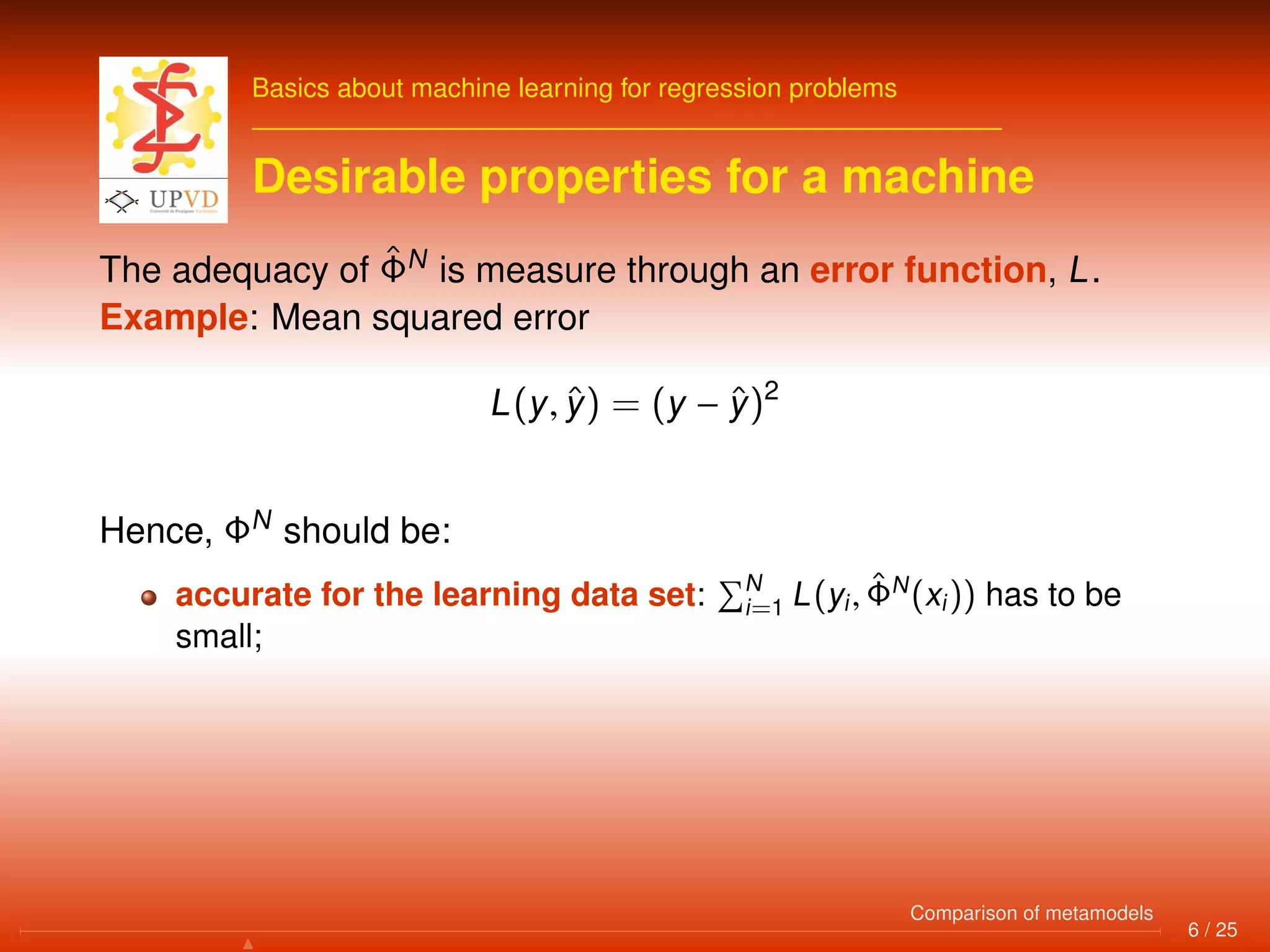

![Basics about machine learning for regression problems

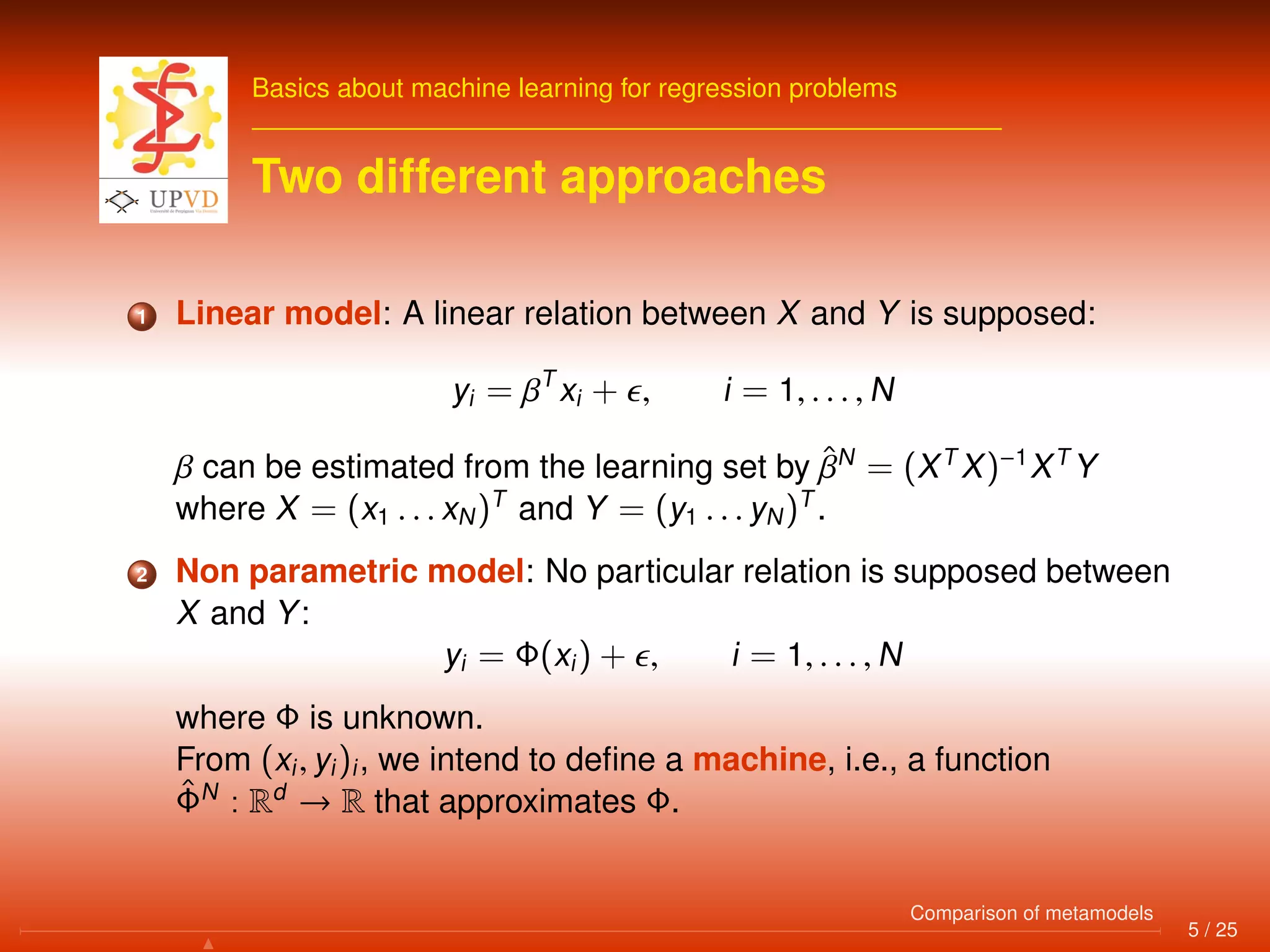

Desirable properties for a machine

The adequacy of $\hat{\Phi}_N$ is measured through an error function, $L$.

Example: mean squared error

$L(y, \hat{y}) = (y - \hat{y})^2$

Hence, $\hat{\Phi}_N$ should be:

accurate on the learning data set: $\sum_{i=1}^{N} L(y_i, \hat{\Phi}_N(x_i))$ has to be small;

able to predict new data well (generalization ability): $\sum_{i=N+1}^{M} L(y_i, \hat{\Phi}_N(x_i))$ has to be small (but here, the $y_i$ are unknown!).

These two objectives are somewhat contradictory and a tradeoff has to be found (see [Vapnik, 1995] for further details).

Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-13-2048.jpg)
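As a minimal illustration of the two requirements on this slide, the sketch below evaluates the summed squared-error loss of a fitted machine on its learning set and on a few new observations. The toy data, the `fit_line` helper and the choice of a straight line as the machine are assumptions made for illustration only; none of them comes from the slides.

```python
def squared_error(y, y_hat):
    """Loss L(y, y_hat) = (y - y_hat)^2, as on the slide."""
    return (y - y_hat) ** 2


def total_loss(machine, xs, ys):
    """Sum of L(y_i, machine(x_i)) over a data set."""
    return sum(squared_error(y, machine(x)) for x, y in zip(xs, ys))


def fit_line(xs, ys):
    """Hypothetical learner: ordinary least-squares line through (xs, ys)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


# Toy data (made up): roughly y = 2x + 1 with small perturbations
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [1.1, 2.9, 5.2, 6.8]
new_x = [4.0, 5.0]
new_y = [9.1, 10.9]

phi_hat = fit_line(train_x, train_y)
train_loss = total_loss(phi_hat, train_x, train_y)  # accuracy on the learning set
new_loss = total_loss(phi_hat, new_x, new_y)        # generalization to new data
print(f"learning-set loss: {train_loss:.4f}, new-data loss: {new_loss:.4f}")
```

In practice the second quantity cannot be computed when the machine is built, because the new outputs are unknown; this is why part of the available data is usually held out to estimate it.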

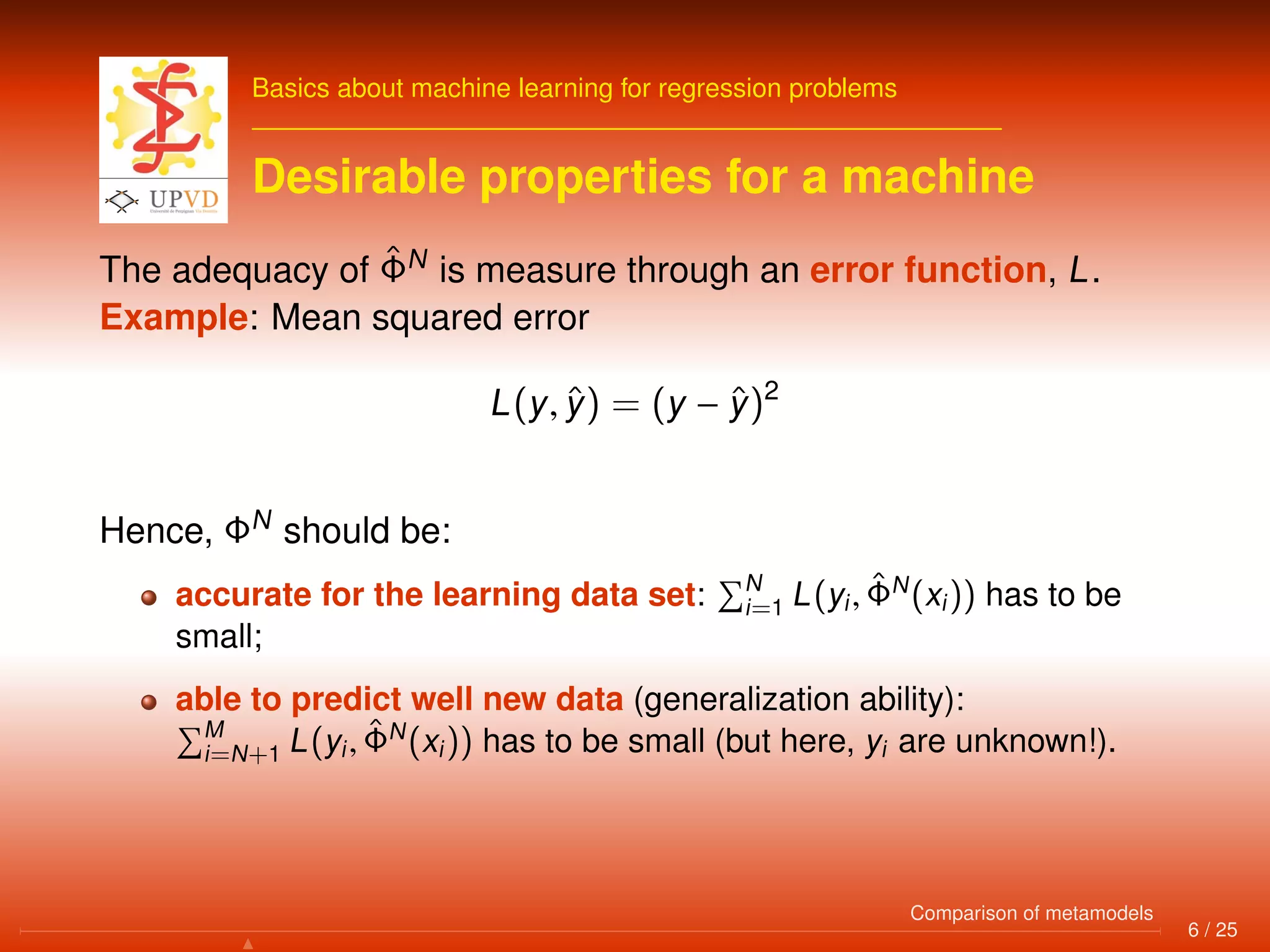

![Basics about machine learning for regression problems

Purpose of the work

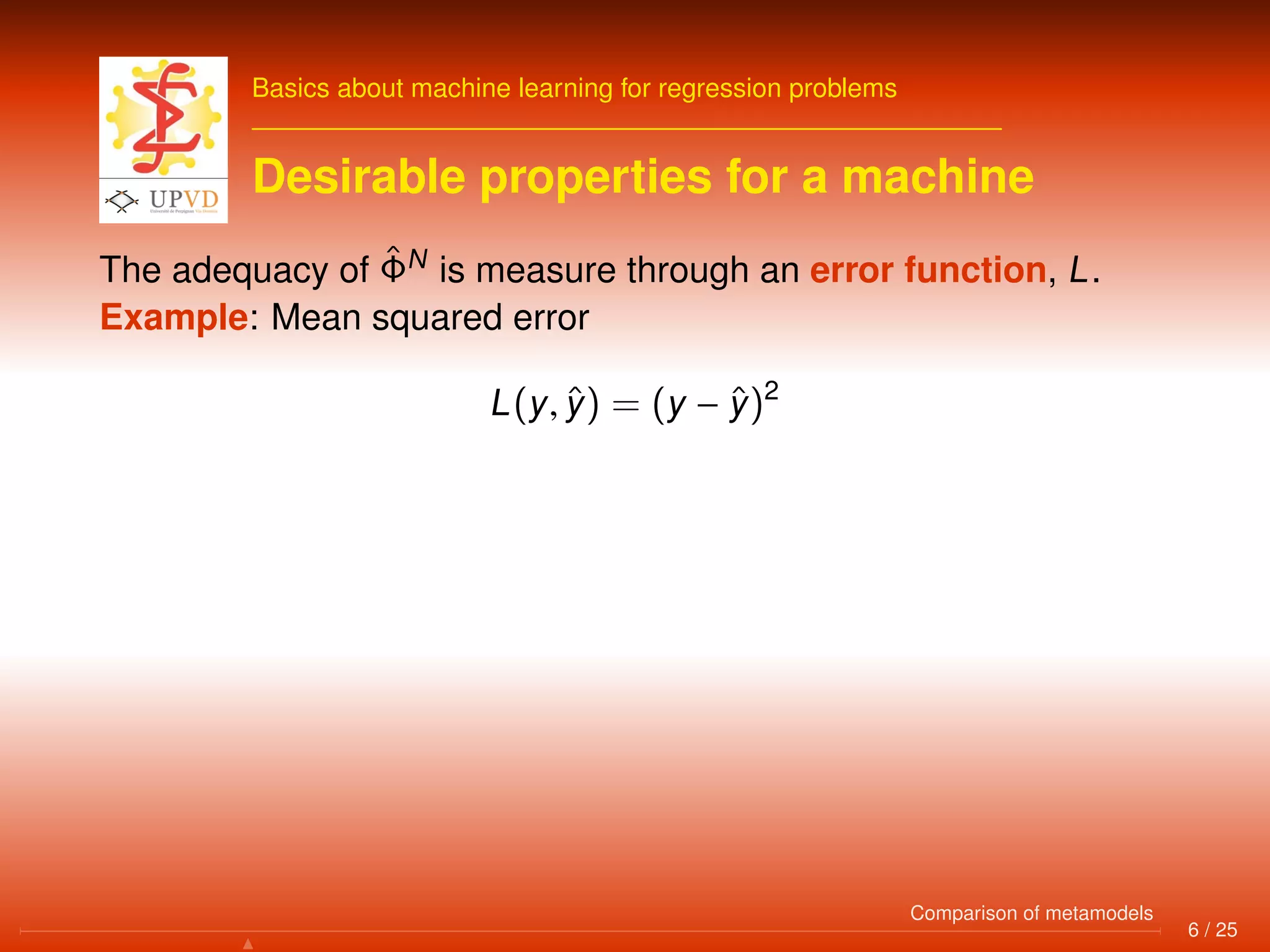

We focus on methods that are universally consistent. These methods lead to the definition of machines $\hat{\Phi}_N$ such that:

$\mathbb{E}\,L(\hat{\Phi}_N(X), Y) \xrightarrow{N \to +\infty} L^* = \inf_{\Phi: \mathbb{R}^d \to \mathbb{R}} \mathbb{E}\,L(\Phi(X), Y)$

for any random pair $(X, Y)$.

1 multi-layer perceptrons (neural networks): [Bishop, 1995]

2 Support Vector Machines (SVM): [Boser et al., 1992]

3 random forests: [Breiman, 2001] (universal consistency is not proven in this case)

Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-15-2048.jpg)

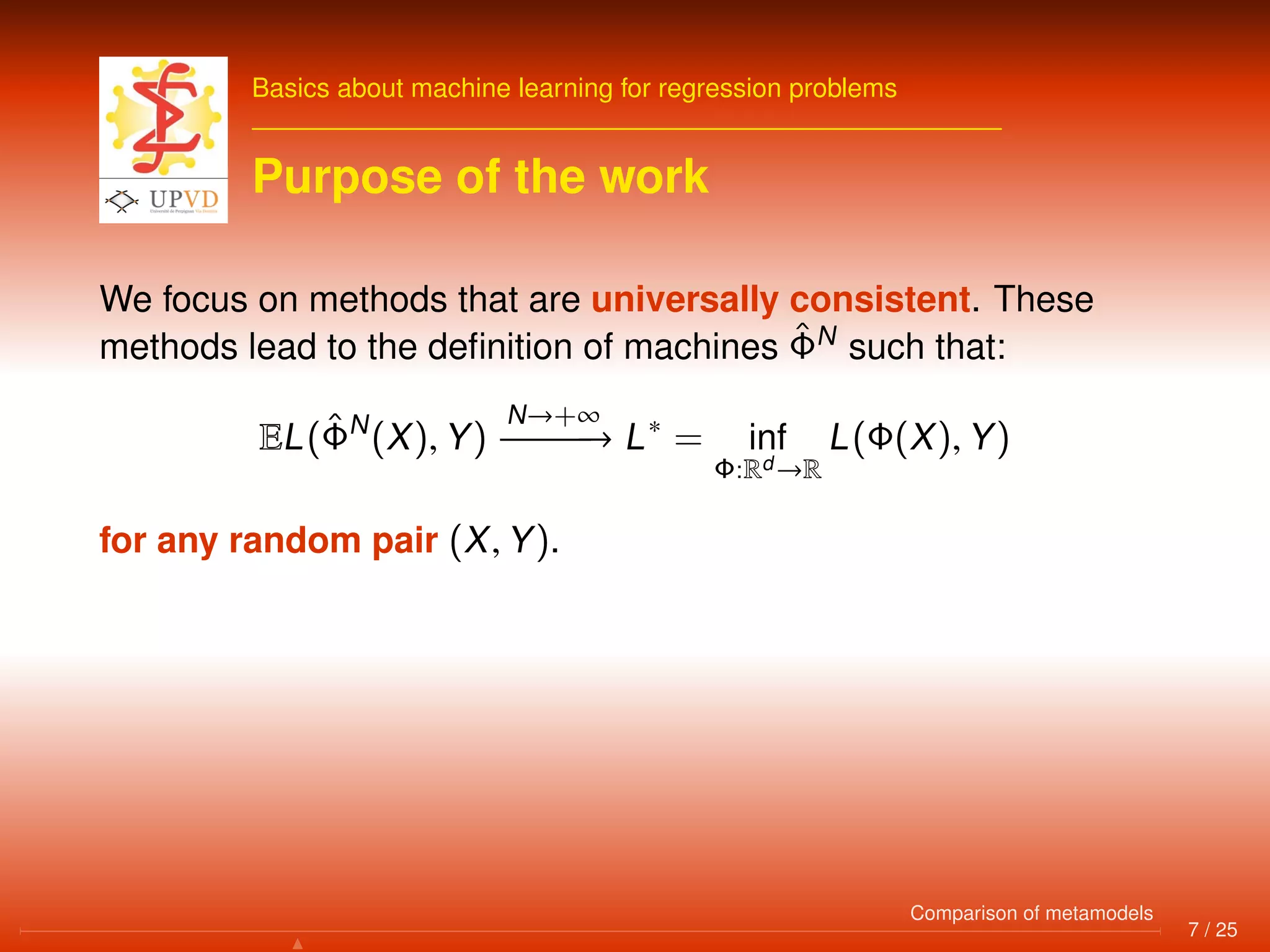

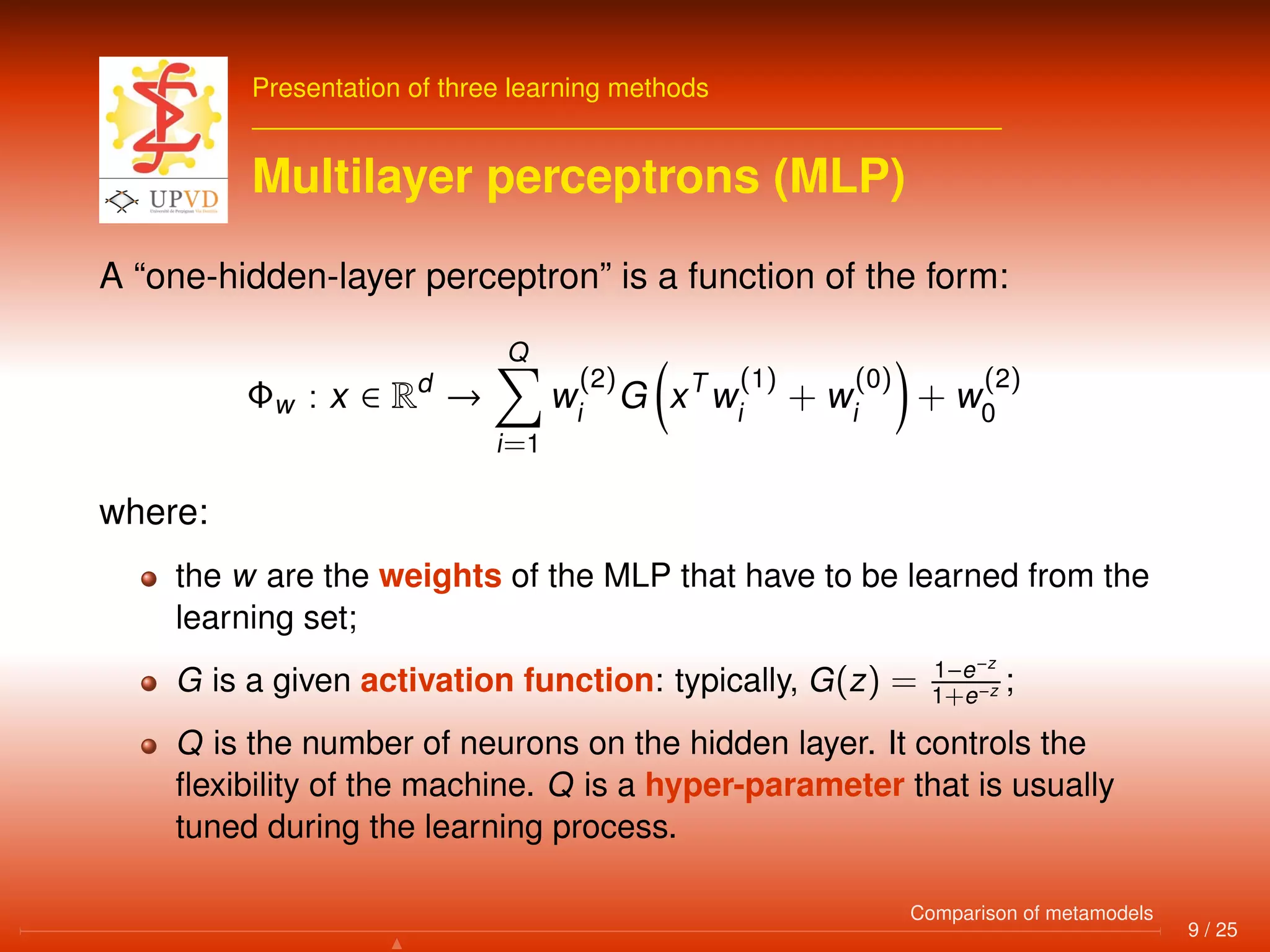


![Methodology and results [Villa-Vialaneix et al., 2010]

Data

5 data sets corresponding to different scenarios, produced by the geobiological simulator DNDC-EUROPE:

S1: baseline scenario (conventional corn cropping system): 18 794 HSMU

S2: like S1 without tillage: 18 830 HSMU

S3: like S1 with N input through manure spreading limited to 170 kg/ha y: 18 800 HSMU

S4: rotation between corn (2 y) and catch crop (3 y): 40 536 HSMU

S5: like S1 with an additional application of fertilizer in winter: 18 658 HSMU

Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-36-2048.jpg)

![Methodology and results [Villa-Vialaneix et al., 2010]

Data

11 input variables:

N_FR (N input through fertilization; kg/ha y);

N_MR (N input through manure spreading; kg/ha y);

Nfix (N input from biological fixation; kg/ha y);

Nres (N input from root residue; kg/ha y);

BD (bulk density; g/cm³);

SOC (soil organic carbon in topsoil; mass fraction);

PH (soil pH);

Clay (ratio of soil clay content);

Rain (annual precipitation; mm/y);

Tmean (annual mean temperature; °C);

Nr (concentration of N in rain; ppm).

Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-37-2048.jpg)

![Methodology and results [Villa-Vialaneix et al., 2010]

Data

2 outputs to estimate from the inputs:

N2O fluxes;

N leaching.


Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-38-2048.jpg)


![Methodology and results [Villa-Vialaneix et al., 2010]

Methodology

For every data set, every output and every method:

1 The data set was split into a training set and a test set (on an 80%/20% basis);

2 The machine was trained on the training set (with a full validation process for hyperparameter tuning);

3 Performance was computed on the test set: predictions were made from the test inputs and compared to the true outputs.

Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-41-2048.jpg)
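The three steps above can be sketched as follows. This is only an illustration under stated assumptions: the data are synthetic, the learner is a simple k-nearest-neighbour regressor standing in for the MLP, SVM and random forest of the study, and the "validation process" is reduced to a single hold-out split of the training set, which the slides do not specify.

```python
import random


def knn_predict(train, x, k):
    """Hypothetical stand-in learner: mean output of the k nearest training inputs."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def mse(train, data, k):
    """Mean squared error of the k-NN machine built on `train`, evaluated on `data`."""
    return sum((y - knn_predict(train, x, k)) ** 2 for x, y in data) / len(data)


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Synthetic data set (made up): noisy quadratic in one input variable
inputs = [rng.uniform(-1.0, 1.0) for _ in range(200)]
data = [(x, x * x + rng.gauss(0.0, 0.1)) for x in inputs]
rng.shuffle(data)

# Step 1: 80%/20% split into a training set and a test set
n_train = int(0.8 * len(data))
train, test = data[:n_train], data[n_train:]

# Step 2: tune the hyperparameter k using the training set only
# (assumption: one hold-out validation split inside the training set)
n_fit = int(0.8 * len(train))
fit_part, val_part = train[:n_fit], train[n_fit:]
candidates = [1, 3, 5, 9, 15]
best_k = min(candidates, key=lambda k: mse(fit_part, val_part, k))

# Step 3: rebuild the machine on the full training set, evaluate once on the test set
test_mse = mse(train, test, best_k)
print(f"selected k = {best_k}, test MSE = {test_mse:.4f}")
```

The test set is touched only in the last step, so the reported error is not biased by the hyperparameter search.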

![Methodology and results [Villa-Vialaneix et al., 2010]

Numerical results

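| Output | Scenario | MLP | RF | SVM | LM |
|---|---|---|---|---|---|
| N2O | Scenario 1 | 90.7% | 92.3% | 91.0% | 77.1% |
| N2O | Scenario 2 | 80.3% | 85.0% | 82.3% | 62.2% |
| N2O | Scenario 3 | 85.1% | 88.0% | 86.7% | 74.6% |
| N2O | Scenario 4 | 88.6% | 90.5% | 86.3% | 78.3% |
| N2O | Scenario 5 | 80.6% | 84.9% | 82.7% | 42.5% |
| N leaching | Scenario 1 | 89.7% | 93.5% | 96.6% | 70.3% |
| N leaching | Scenario 2 | 91.4% | 93.1% | 97.0% | 67.7% |
| N leaching | Scenario 3 | 90.6% | 90.4% | 96.0% | 68.6% |
| N leaching | Scenario 4 | 80.5% | 86.9% | 90.6% | 42.5% |
| N leaching | Scenario 5 | 86.5% | 92.9% | 94.5% | 71.1% |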

Comparison of metamodels](https://image.slidesharecdn.com/jrc2010-03-18-140715042100-phpapp02/75/A-comparison-of-three-learning-methods-to-predict-N20-fluxes-and-N-leaching-42-2048.jpg)
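To make the table easier to read, the short sketch below finds the highest-scoring method for each scenario and the average score of each method per output. The numbers are copied from the slide; the slide does not name the performance measure, so treating a higher percentage as better is an assumption, and the helper names are illustrative.

```python
# Values copied from the numerical results slide (percentages)
methods = ["MLP", "RF", "SVM", "LM"]
results = {
    "N2O": [
        [90.7, 92.3, 91.0, 77.1],
        [80.3, 85.0, 82.3, 62.2],
        [85.1, 88.0, 86.7, 74.6],
        [88.6, 90.5, 86.3, 78.3],
        [80.6, 84.9, 82.7, 42.5],
    ],
    "N leaching": [
        [89.7, 93.5, 96.6, 70.3],
        [91.4, 93.1, 97.0, 67.7],
        [90.6, 90.4, 96.0, 68.6],
        [80.5, 86.9, 90.6, 42.5],
        [86.5, 92.9, 94.5, 71.1],
    ],
}


def best_per_scenario(rows):
    """Name of the highest-scoring method in each scenario row (assumes higher is better)."""
    return [methods[row.index(max(row))] for row in rows]


def mean_per_method(rows):
    """Average score of each method across the scenarios of one output."""
    return {m: sum(row[j] for row in rows) / len(rows) for j, m in enumerate(methods)}


for output, rows in results.items():
    print(output, "best per scenario:", best_per_scenario(rows))
    print(output, "mean per method:", {m: round(v, 2) for m, v in mean_per_method(rows).items()})
```

Under that assumption, the random forest scores highest in every N2O scenario and the SVM scores highest in every N leaching scenario, while LM is lowest throughout.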

![Methodology and results [Villa-Vialaneix et al., 2010]

Graphical results

Random forest (S1, N2O): plot; the individual data points are not recoverable from the extracted text.