Embed presentation

Download as PDF, PPTX

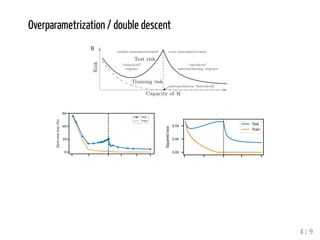

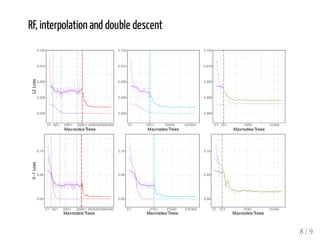

The document discusses the differences between overfitting and overparametrization in machine learning models. It explores how random forests may exhibit a phenomenon known as "double descent" where test error initially decreases then increases with more parameters before decreasing again. While double descent has been observed in other models, the document questions whether it is directly due to model complexity in random forests since very large trees may be unable to fully interpolate extremely large datasets.