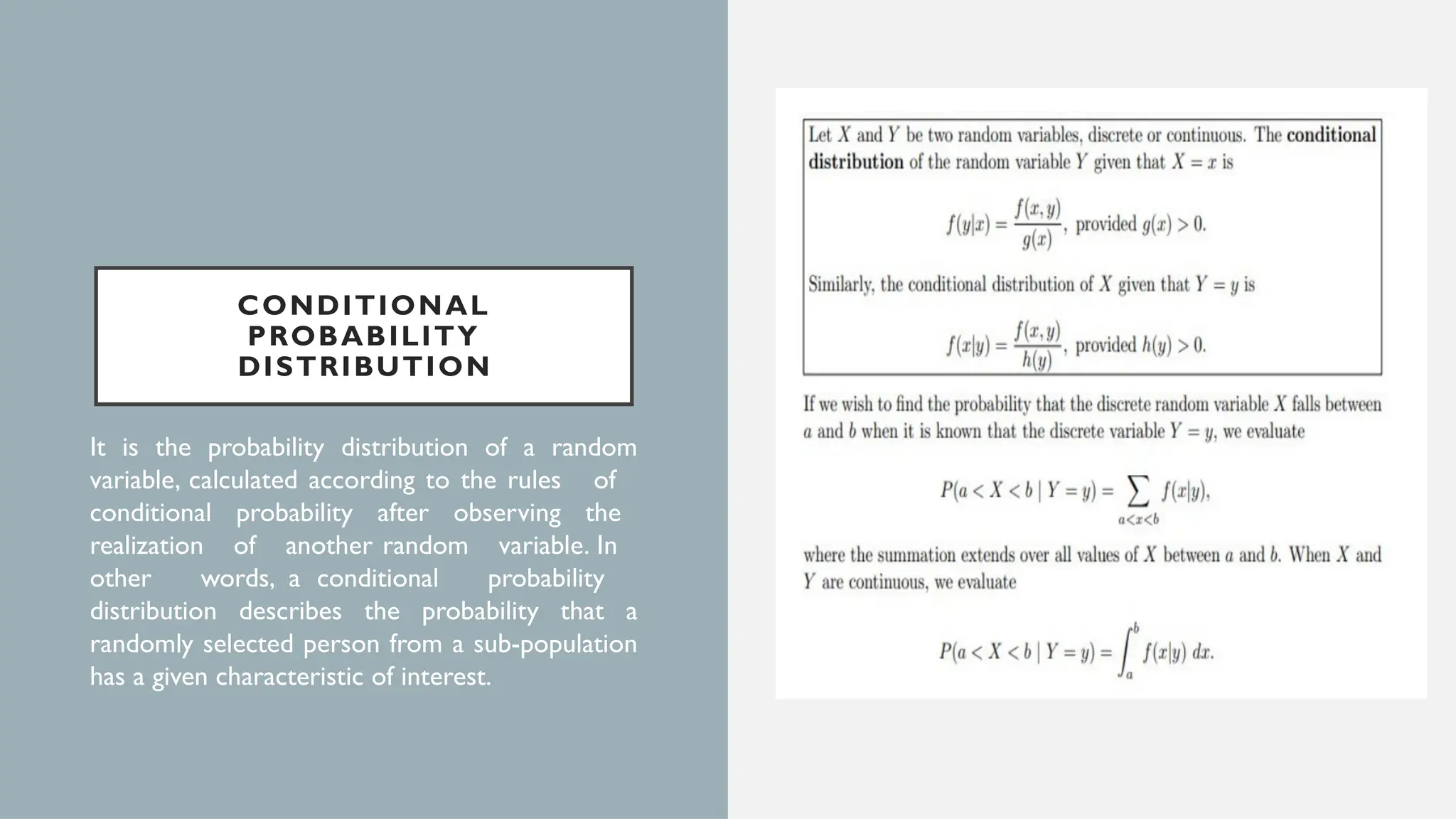

The document explains joint probability distributions for two or more random variables, covering definitions, formulas, and examples. It describes how a joint distribution relates to marginal and conditional probabilities, how joint probabilities are represented both in tables and through formulas, and how they are applied to find the probability of specific outcomes. It also covers joint probability mass functions and the characterization of marginal distributions.
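
To make the relationship between joint, marginal, and conditional probabilities concrete, here is a minimal Python sketch. The table values and the names `joint`, `marginal_x`, `marginal_y`, and `conditional_x_given_y` are illustrative assumptions, not taken from the document. It relies only on the standard identities P(X = x) = Σ_y P(X = x, Y = y) and P(X = x | Y = y) = P(X = x, Y = y) / P(Y = y).

```python
from fractions import Fraction

# Hypothetical joint PMF of two discrete random variables X and Y,
# stored as a table: joint[(x, y)] = P(X = x, Y = y).
# The numbers are made up for illustration only.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 2),
}

def marginal_x(joint, x):
    """P(X = x): sum the joint PMF over all values of Y."""
    return sum(p for (xi, _), p in joint.items() if xi == x)

def marginal_y(joint, y):
    """P(Y = y): sum the joint PMF over all values of X."""
    return sum(p for (_, yi), p in joint.items() if yi == y)

def conditional_x_given_y(joint, x, y):
    """P(X = x | Y = y) = P(X = x, Y = y) / P(Y = y)."""
    p_y = marginal_y(joint, y)
    if p_y == 0:
        raise ValueError("conditioning event has probability zero")
    return joint.get((x, y), Fraction(0)) / p_y

# A valid joint PMF must sum to 1 over all (x, y) pairs.
assert sum(joint.values()) == 1

print(marginal_x(joint, 1))                # 3/4
print(marginal_y(joint, 1))                # 5/8
print(conditional_x_given_y(joint, 1, 1))  # 4/5
```

Exact fractions are used here instead of floats so that the checks (such as the table summing to 1) hold without rounding error. For larger tables a 2D array with row and column sums would serve the same purpose.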