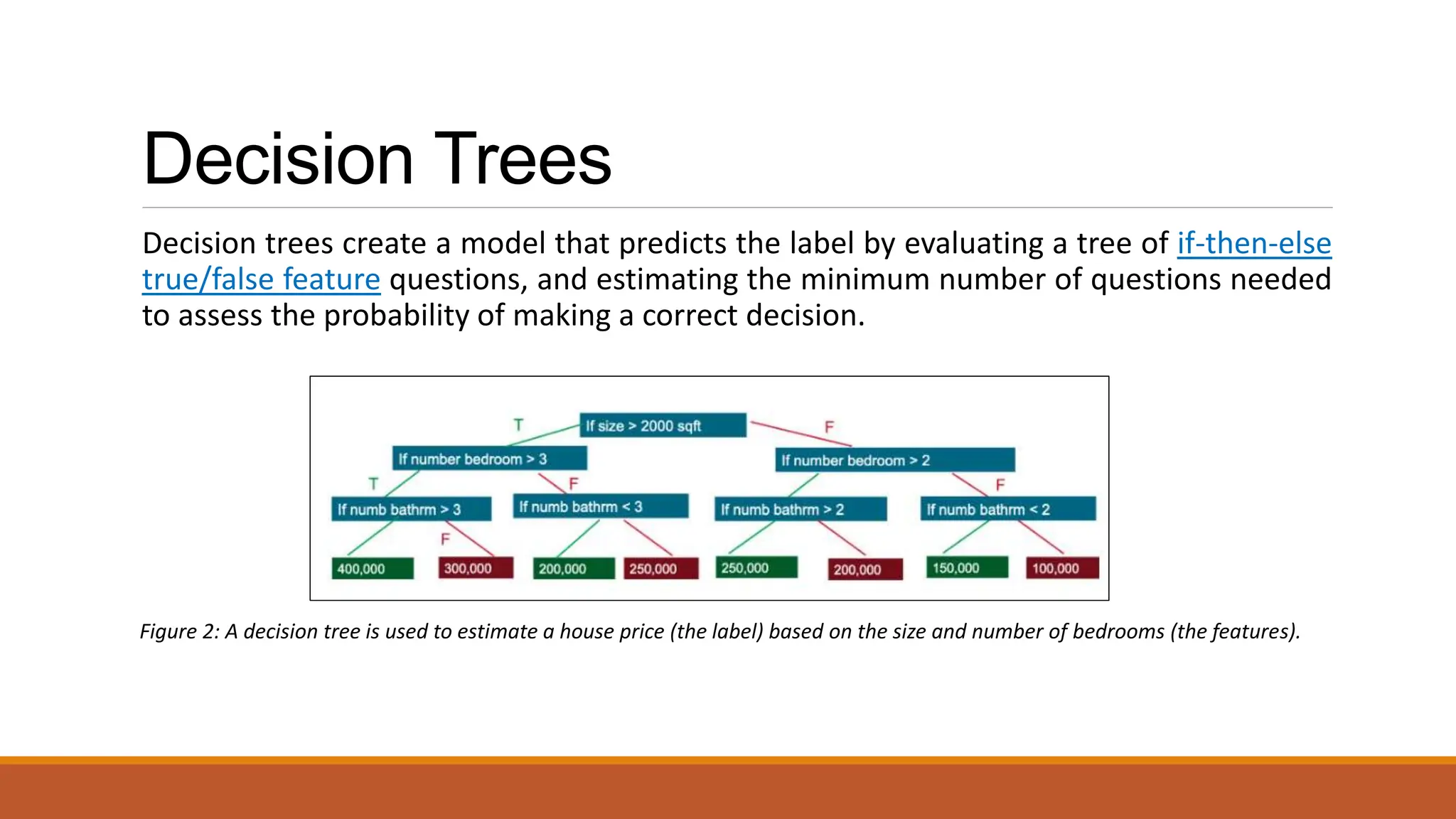

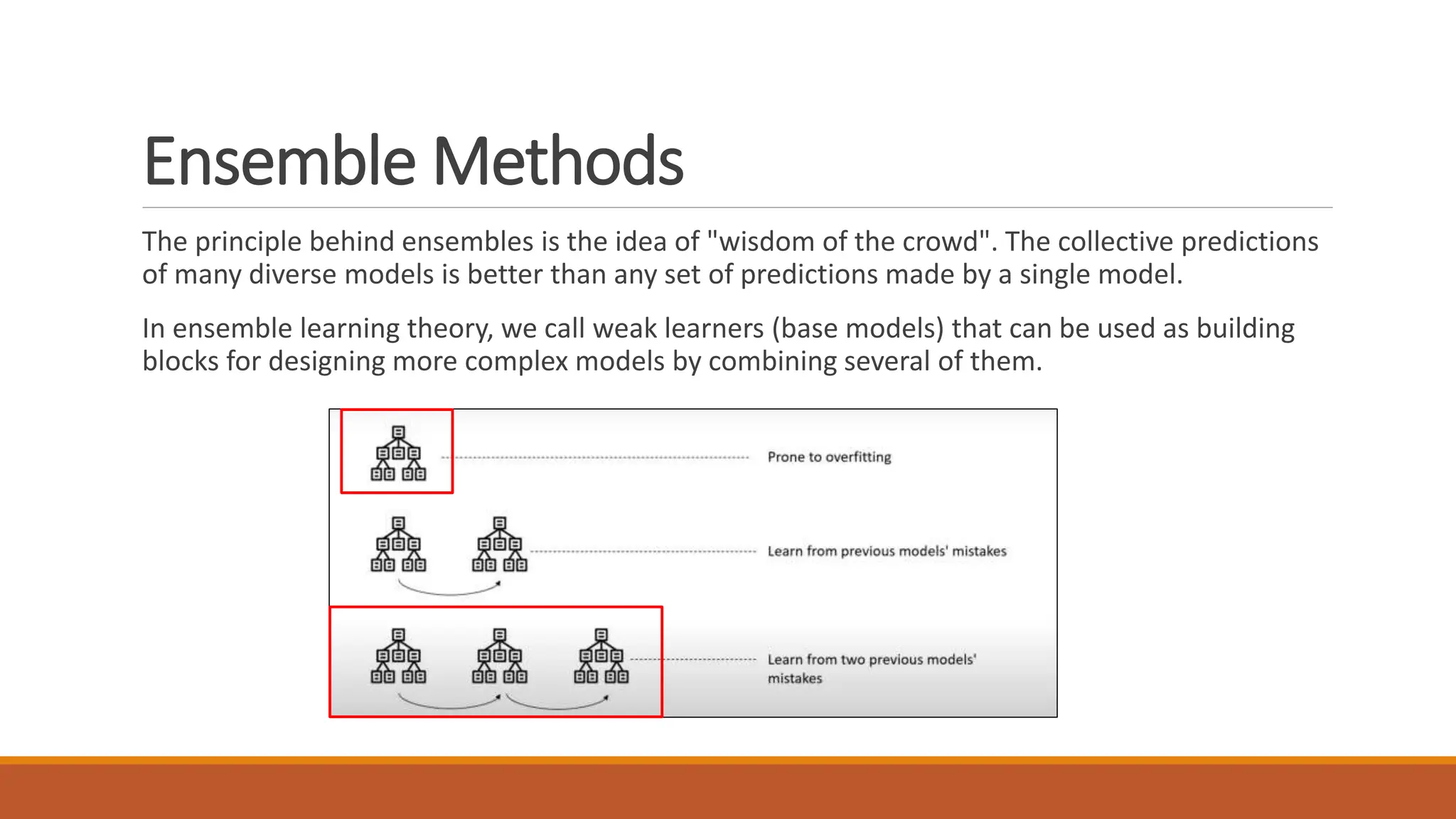

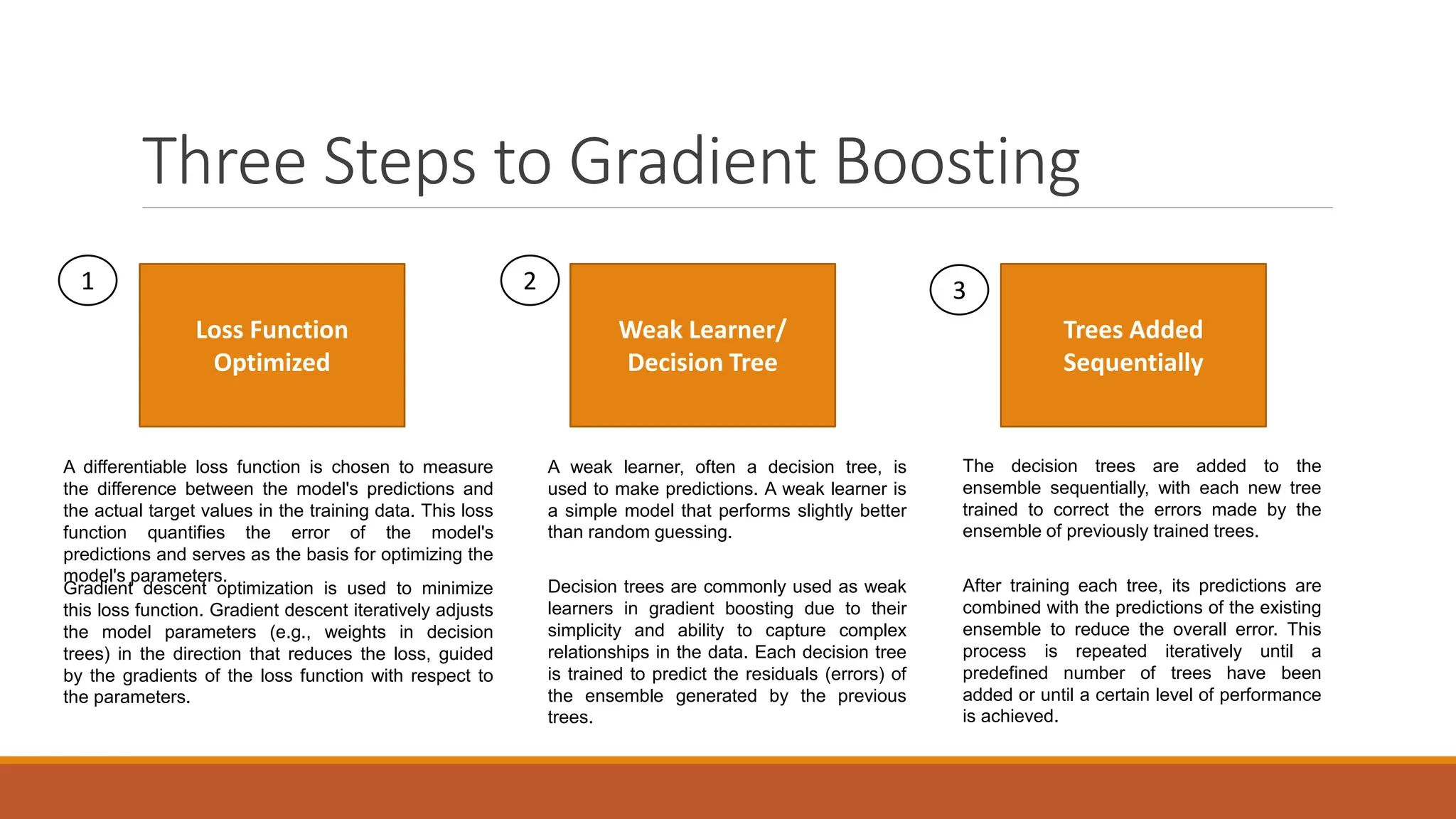

XGBoost (eXtreme Gradient Boosting) is an optimized, open-source machine learning library for gradient-boosted decision trees, widely used for regression, classification, and ranking tasks. It builds on supervised learning, decision trees, and ensemble learning. Rather than relying on one strong model, it combines many weak models (typically shallow trees) so that together they predict more accurately than any single one. Key concepts include gradient boosting, optimization of a loss function, and the iterative addition of decision trees. Each new tree is fitted to reduce the error that the current ensemble still makes.
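To make the iterative process of adding trees concrete, here is a minimal from-scratch sketch of plain gradient boosting in Python. It is not XGBoost's implementation or API. It assumes squared-error loss, uses depth-1 trees (stumps) as the weak learners, and omits XGBoost's regularized objective, second-order gradient statistics, and performance optimizations. All function names (`fit_stump`, `fit_gradient_boosting`, `predict`) are hypothetical and chosen for illustration.

```python
from typing import List, Optional, Tuple

# Hypothetical helper types for this sketch (not part of XGBoost).
# A depth-1 regression tree ("stump"): (feature index, threshold, left value, right value).
Stump = Tuple[int, float, float, float]
Model = Tuple[float, float, List[Stump]]  # (base prediction, learning rate, stumps)


def fit_stump(X: List[List[float]], residuals: List[float]) -> Stump:
    """Pick the single split that minimises squared error against the residuals."""
    best: Optional[Stump] = None
    best_err = float("inf")
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            left = [r for row, r in zip(X, residuals) if row[j] <= threshold]
            right = [r for row, r in zip(X, residuals) if row[j] > threshold]
            if not left or not right:
                continue  # a split must put at least one sample on each side
            lv = sum(left) / len(left)
            rv = sum(right) / len(right)
            err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if err < best_err:
                best_err, best = err, (j, threshold, lv, rv)
    if best is None:
        # Every feature is constant: fall back to predicting the mean residual.
        mean = sum(residuals) / len(residuals)
        return (0, float("inf"), mean, mean)
    return best


def predict_stump(stump: Stump, row: List[float]) -> float:
    j, threshold, lv, rv = stump
    return lv if row[j] <= threshold else rv


def fit_gradient_boosting(
    X: List[List[float]], y: List[float], n_rounds: int = 50, learning_rate: float = 0.1
) -> Model:
    # Start from the constant prediction that minimises squared error: the mean of y.
    base = sum(y) / len(y)
    preds = [base] * len(y)
    stumps: List[Stump] = []
    for _ in range(n_rounds):
        # For L(y, p) = 0.5 * (y - p)^2, the negative gradient w.r.t. p is the residual y - p.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        # Add the new tree, shrunk by the learning rate, to the running ensemble.
        preds = [p + learning_rate * predict_stump(stump, row) for p, row in zip(preds, X)]
    return base, learning_rate, stumps


def predict(model: Model, row: List[float]) -> float:
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, row) for s in stumps)


def mean_squared_error(y: List[float], preds: List[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(y, preds)) / len(y)


if __name__ == "__main__":
    # Toy step-shaped data: one feature, targets jump from 0 to 10.
    X = [[float(i)] for i in range(10)]
    y = [0.0] * 5 + [10.0] * 5
    for rounds in (0, 1, 10, 50):
        model = fit_gradient_boosting(X, y, n_rounds=rounds)
        mse = mean_squared_error(y, [predict(model, row) for row in X])
        print(f"{rounds:>2} trees -> training MSE {mse:.4f}")
```

Each round fits a stump to the current residuals, which for squared-error loss are exactly the negative gradients, and adds a shrunken copy of it to the ensemble. On the toy data the training error starts at 25.0 for the constant baseline and falls toward zero as trees are added. The small learning rate trades more rounds for smaller, more cautious steps.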