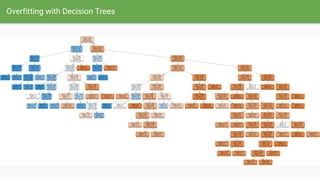

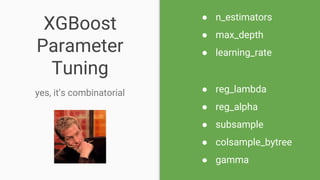

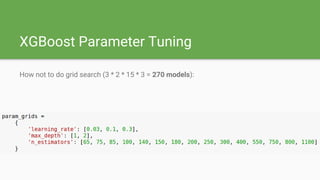

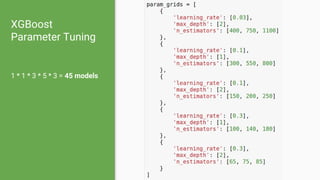

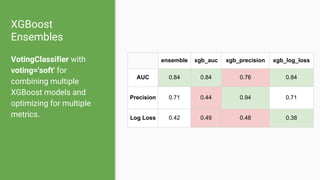

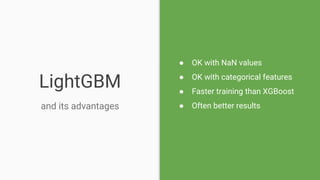

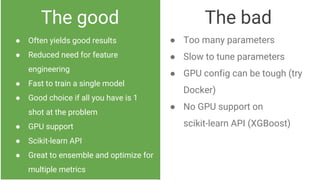

This document summarizes two gradient boosting libraries, XGBoost and LightGBM. It covers decision trees, overfitting, regularization, feature engineering, evaluation metrics, and a comparison of the two libraries. Key points include both libraries' tolerance of outliers, non-standardized features, collinear features, and NaN values. It also covers parameter tuning with RandomizedSearchCV and GridSearchCV, and ensembling models to optimize multiple metrics.