Apache Cassandra is an open-source distributed database designed to handle large amounts of data across many commodity servers, providing high availability with no single point of failure. It uses a gossip protocol for cluster membership and a Dynamo-inspired architecture to provide availability and partition tolerance, and it offers eventual consistency that can be tuned per operation.
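The Dynamo-inspired partitioning described above can be sketched as a consistent-hash ring. This is a minimal illustration, not Cassandra's implementation. It assumes one token per node, MD5 as the hash (similar in spirit to Cassandra's older RandomPartitioner; newer versions default to Murmur3Partitioner), and SimpleStrategy-style placement, where a key's replicas are its owner plus the next N - 1 nodes clockwise. `ring_token` and `HashRing` are hypothetical names.

```python
import bisect
import hashlib


def ring_token(value: str) -> int:
    """Hash a node name or row key onto the ring (assumption: MD5, 128-bit tokens)."""
    return int.from_bytes(hashlib.md5(value.encode("utf-8")).digest(), "big")


class HashRing:
    """Hypothetical consistent-hash ring with one token per node."""

    def __init__(self, nodes: list[str], replication_factor: int = 3) -> None:
        self.replication_factor = replication_factor
        # Nodes sorted by token form the ring; each node owns (previous token, its token].
        self.ring = sorted((ring_token(node), node) for node in nodes)
        self.tokens = [token for token, _ in self.ring]

    def replicas(self, key: str) -> list[str]:
        """Return the key's owner followed by the next N - 1 nodes clockwise."""
        # First node whose token is >= the key's token; wrap around past the end.
        start = bisect.bisect_left(self.tokens, ring_token(key)) % len(self.ring)
        count = min(self.replication_factor, len(self.ring))
        return [self.ring[(start + i) % len(self.ring)][1] for i in range(count)]


if __name__ == "__main__":
    ring = HashRing(["A", "B", "C", "D", "E", "F"], replication_factor=3)
    for key in ("key1", "key2"):
        print(key, "->", ring.replicas(key))
```

Because a node only owns the range between its predecessor's token and its own, adding or removing a node moves just the keys in the neighboring ranges, which is what lets the cluster grow without a central coordinator.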

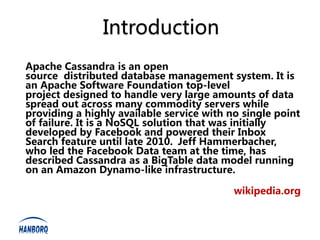

![Gossip

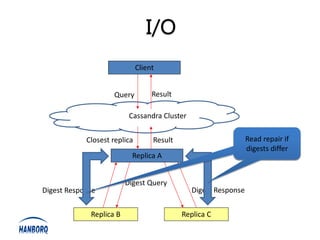

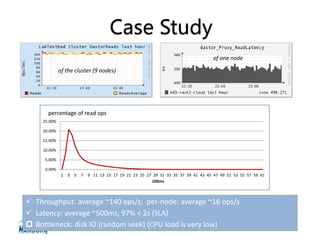

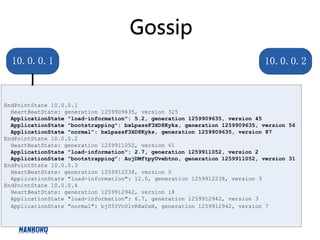

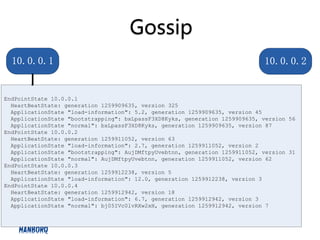

10.0.0.1 GOSSIP_DIGEST_SYN 10.0.0.2

10.0.0.1:1259909635:325

10.0.0.2:1259911052:61

10.0.0.3:1259912238:5

10.0.0.4:1259912942:18

10.0.0.1 GOSSIP_DIGEST_SYN_ACK 10.0.0.2

10.0.0.1:1259909635:324

10.0.0.3:1259912238:0

10.0.0.4:1259912942:0

10.0.0.2:

[ApplicationState "normal":

AujDMftpyUvebtnn,

Generation 1259911052,

version 62],

[HeartBeatState, generation 1259911052, version 63]](https://image.slidesharecdn.com/bcassandra-130319051115-phpapp01/85/Introduction-to-Cassandra-17-320.jpg)
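The digest comparison in this figure can be sketched with a simplified, hypothetical model (not Cassandra's Gossiper code). Each endpoint's state is a generation plus named entries, each with a version, and the heartbeat is treated as one more entry. In this sketch the receiver of the SYN requests everything (version 0) for endpoints it does not know, requests newer versions when the sender is ahead, and returns the entries the sender has not seen when it is ahead. `EndpointState` and `handle_syn` are illustrative names; the usage section replays the figure's values.

```python
from dataclasses import dataclass, field

# A digest summarizes one endpoint: (endpoint, generation, max_version).
Digest = tuple[str, int, int]


@dataclass
class EndpointState:
    """Hypothetical model of what a node knows about one endpoint."""

    generation: int  # changes when the endpoint restarts
    # State name -> (value, version); the heartbeat is modeled as one more entry.
    states: dict[str, tuple[object, int]] = field(default_factory=dict)

    def max_version(self) -> int:
        return max((version for _, version in self.states.values()), default=0)

    def newer_than(self, version: int) -> dict[str, tuple[object, int]]:
        return {name: entry for name, entry in self.states.items() if entry[1] > version}


def handle_syn(
    view: dict[str, EndpointState], digests: list[Digest]
) -> tuple[list[Digest], dict[str, EndpointState]]:
    """Build the SYN_ACK: digests to request, plus state the sender lacks."""
    requests: list[Digest] = []
    deltas: dict[str, EndpointState] = {}
    for endpoint, generation, version in digests:
        local = view.get(endpoint)
        if local is None or generation > local.generation:
            # Unknown endpoint or a newer generation: ask for everything.
            requests.append((endpoint, generation, 0))
        elif generation < local.generation:
            # Sender's view is from an older generation: send all local state.
            deltas[endpoint] = EndpointState(local.generation, dict(local.states))
        elif version > local.max_version():
            # Sender is ahead: ask for versions newer than what we hold.
            requests.append((endpoint, generation, local.max_version()))
        elif version < local.max_version():
            # We are ahead: send only the entries the sender has not seen.
            deltas[endpoint] = EndpointState(generation, local.newer_than(version))
    return requests, deltas


if __name__ == "__main__":
    # 10.0.0.2's view before the round, inferred from the figure's digests.
    view_2 = {
        "10.0.0.1": EndpointState(1259909635, {"heartbeat": (None, 324)}),
        "10.0.0.2": EndpointState(
            1259911052, {"normal": ("AujDMftpyUvebtnn", 62), "heartbeat": (None, 63)}
        ),
    }
    syn = [
        ("10.0.0.1", 1259909635, 325),
        ("10.0.0.2", 1259911052, 61),
        ("10.0.0.3", 1259912238, 5),
        ("10.0.0.4", 1259912942, 18),
    ]
    requests, deltas = handle_syn(view_2, syn)
    # [('10.0.0.1', 1259909635, 324), ('10.0.0.3', 1259912238, 0), ('10.0.0.4', 1259912942, 0)]
    print(requests)
    print(deltas)
```

Only digests travel in the SYN, so a round costs little when nodes already agree; full state is sent only for the entries one side is missing.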

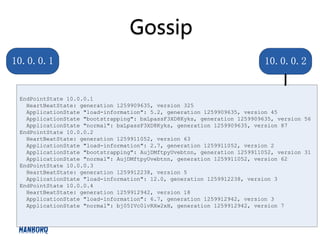

![Gossip

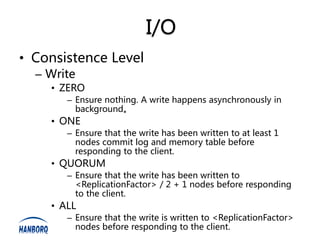

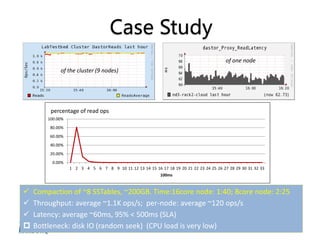

10.0.0.1 GOSSIP_DIGEST_SYN2 10.0.0.2

10.0.0.1:

[HeartBeatState,

generation 1259909635, version 325]

10.0.0.3:

[ApplicationState

"load-information": 12.0,

generation 1259912238, version 3],

[ HeartBeatState:

generation 1259912238, version 5]

10.0.0.4:

[ApplicationState

"load-information": 6.7,

generation 1259912942, version 3],

[ApplicationState

"normal": bj05IVc0lvRXw2xH,

generation 1259912942, version 7],

[HeartBeatState: generation 1259912942, version 18]

10.0.0.1 GOSSIP_DIGEST_SYN_ACK2 10.0.0.2](https://image.slidesharecdn.com/bcassandra-130319051115-phpapp01/85/Introduction-to-Cassandra-19-320.jpg)
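Completing the round can be sketched with the same kind of hypothetical model, redefined here so the block runs on its own. In this sketch the initiator answers each requested digest with the entries newer than the requested version, and the receiver merges them, preferring a higher generation and, within a generation, a higher version. `build_ack2` and `apply_state` are illustrative names, not Cassandra APIs; the usage section uses the values from the figure.

```python
from dataclasses import dataclass, field

Digest = tuple[str, int, int]  # (endpoint, generation, max_version)


@dataclass
class EndpointState:
    """Hypothetical per-endpoint state: a generation plus versioned entries."""

    generation: int
    # State name -> (value, version); the heartbeat is modeled as one more entry.
    states: dict[str, tuple[object, int]] = field(default_factory=dict)


def build_ack2(view: dict[str, EndpointState], requests: list[Digest]) -> dict[str, EndpointState]:
    """Answer each requested digest with the entries newer than its version."""
    reply: dict[str, EndpointState] = {}
    for endpoint, _generation, version in requests:
        local = view.get(endpoint)
        if local is None:
            continue  # nothing known about this endpoint
        newer = {name: e for name, e in local.states.items() if e[1] > version}
        if newer:
            reply[endpoint] = EndpointState(local.generation, newer)
    return reply


def apply_state(view: dict[str, EndpointState], updates: dict[str, EndpointState]) -> None:
    """Merge received state: higher generation wins, then higher version per entry."""
    for endpoint, remote in updates.items():
        local = view.get(endpoint)
        if local is None or remote.generation > local.generation:
            view[endpoint] = EndpointState(remote.generation, dict(remote.states))
        elif remote.generation == local.generation:
            for name, (value, version) in remote.states.items():
                if version > local.states.get(name, (None, 0))[1]:
                    local.states[name] = (value, version)
        # A lower generation is stale and is ignored.


if __name__ == "__main__":
    # 10.0.0.1's view, using the values carried in the figure's SYN_ACK2.
    view_1 = {
        "10.0.0.1": EndpointState(1259909635, {"heartbeat": (None, 325)}),
        "10.0.0.3": EndpointState(
            1259912238, {"load-information": (12.0, 3), "heartbeat": (None, 5)}
        ),
        "10.0.0.4": EndpointState(
            1259912942,
            {"load-information": (6.7, 3), "normal": ("bj05IVc0lvRXw2xH", 7), "heartbeat": (None, 18)},
        ),
    }
    requests = [("10.0.0.1", 1259909635, 324), ("10.0.0.3", 1259912238, 0), ("10.0.0.4", 1259912942, 0)]
    view_2 = {"10.0.0.1": EndpointState(1259909635, {"heartbeat": (None, 324)})}
    apply_state(view_2, build_ack2(view_1, requests))
    print(sorted(view_2))  # ['10.0.0.1', '10.0.0.3', '10.0.0.4']
```

Comparing the generation first is what lets a restarted node replace its old state everywhere, even though its version counters start over.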

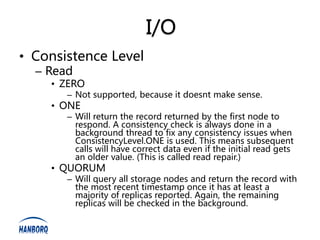

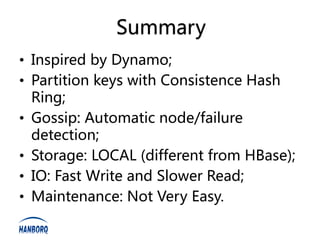

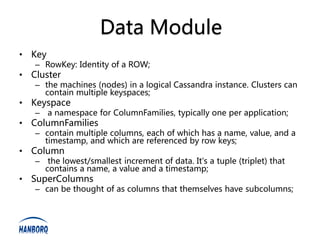

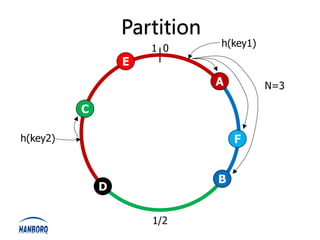

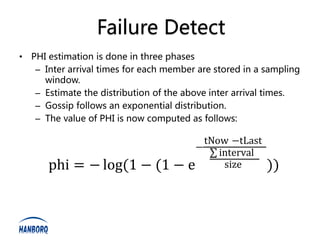

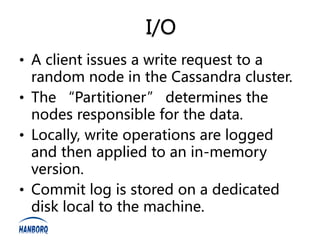

![I/O: four levels of indexing](https://image.slidesharecdn.com/bcassandra-130319051115-phpapp01/85/Introduction-to-Cassandra-27-320.jpg)

The slide traces a read through four levels of indexing. Index Level-1 is a consistent hash that maps each key, h(key), to its node, with N = 3 replicas. Within a node, the slide shows:

- a Bloom filter of the keys on each SSTable, plus a key cache;
- a sparse block index held in memory, sampling one key in every 128 (the interval is changeable) and binary-searched to locate the key's entry in the index file;
- the key position maps in the index file (on disk, cachable), which point to the data rows in the data file (on disk);
- inside each row, a block index mapping blocks of columns (64 KB each, changeable) to their positions, also binary-searched.

Its conclusions: there are four levels of indexing in total; the indexes are relatively small; the layout is well suited to storing data about individuals, such as users; and it is good for serving CDR (call detail record) data.
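The key lookup inside one SSTable from the I/O figure can be sketched as follows, assuming the files are modeled as in-memory Python lists: a "data file" of rows, an "index file" of (key, data position) pairs, an in-memory sparse summary holding every 128th index entry, and a Bloom filter over the keys. The class names, the sizing of 10 bits per key, and the SHA-256 double hashing are illustrative assumptions, not Cassandra's on-disk format or hash functions. The key cache and the per-row 64 KB column block index are omitted.

```python
import bisect
import hashlib


class BloomFilter:
    """Minimal Bloom filter using double hashing over SHA-256 (illustrative choice)."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, key: str) -> list[int]:
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # odd step size
        return [(h1 + i * h2) % self.num_bits for i in range(self.num_hashes)]

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        # False means definitely absent; True means possibly present.
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key))


class SSTable:
    """Hypothetical stand-in for one SSTable's data file, index file and summary."""

    def __init__(self, rows: dict[str, object], index_interval: int = 128) -> None:
        items = sorted(rows.items(), key=lambda kv: kv[0])
        self.index_interval = index_interval
        self.data_file = [row for _, row in items]  # a "position" is the list index
        # Index file: every key with the position of its row in the data file.
        self.index_file = [(key, pos) for pos, (key, _) in enumerate(items)]
        # Sparse summary kept in memory: one index-file entry out of every index_interval.
        self.summary = [(self.index_file[i][0], i) for i in range(0, len(items), index_interval)]
        self.summary_keys = [key for key, _ in self.summary]
        self.bloom = BloomFilter(num_bits=max(64, 10 * len(items)), num_hashes=7)
        for key, _ in items:
            self.bloom.add(key)

    def get(self, key: str):
        if not self.bloom.might_contain(key):
            return None  # skip this SSTable without reading its index file
        # Binary search for the last sampled key <= key.
        slot = bisect.bisect_right(self.summary_keys, key) - 1
        if slot < 0:
            return None  # key sorts before every key in this SSTable
        start = self.summary[slot][1]
        # Scan at most index_interval index-file entries from that point.
        for entry_key, data_pos in self.index_file[start:start + self.index_interval]:
            if entry_key == key:
                return self.data_file[data_pos]
            if entry_key > key:
                break
        return None


if __name__ == "__main__":
    table = SSTable({f"user{i:04d}": {"id": i} for i in range(1000)})
    print(len(table.summary))     # 8 sampled keys for 1,000 rows
    print(table.get("user0500"))  # {'id': 500}
    print(table.get("user9999"))  # None
```

The Bloom filter lets a read skip an SSTable without touching disk when the key is absent, and the summary bounds the index-file scan to at most 128 entries, which keeps the in-memory part of the index small.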