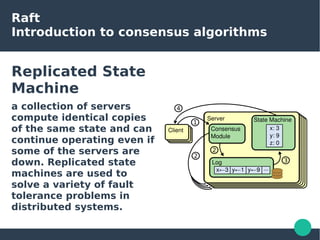

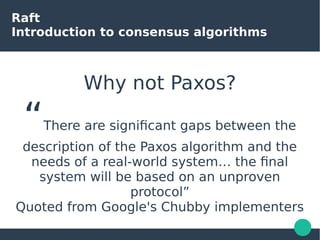

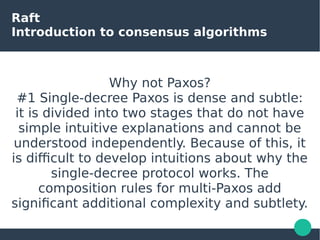

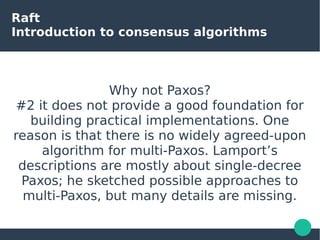

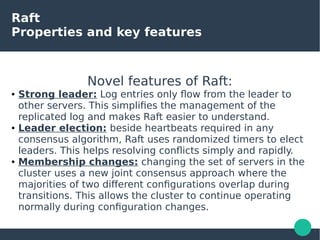

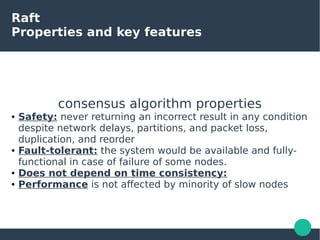

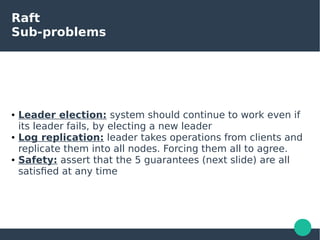

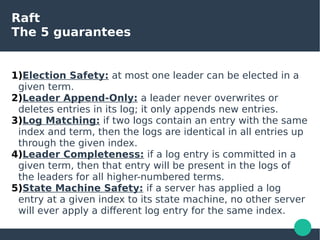

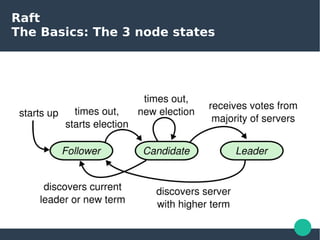

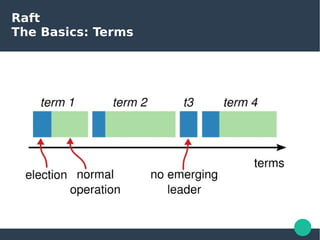

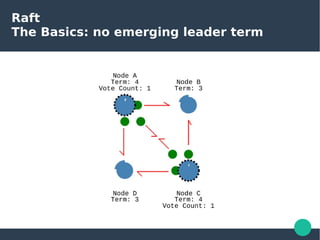

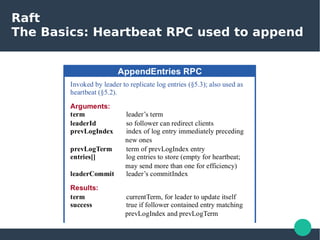

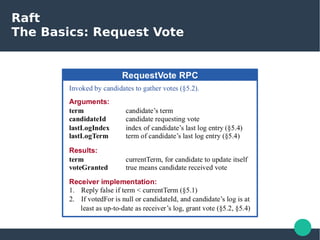

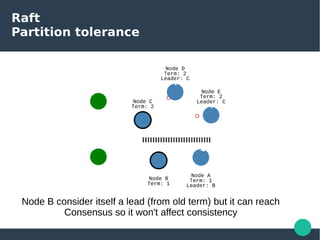

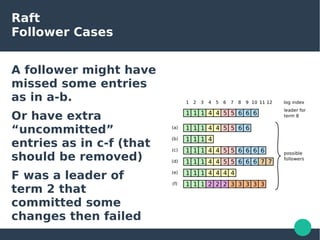

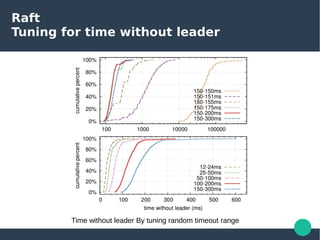

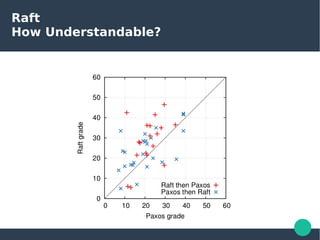

The document presents the Raft consensus algorithm, emphasizing its usability and its structural differences from Paxos. Raft aims to provide an understandable way to manage replicated logs in distributed systems. The document discusses Raft's distinguishing features, including strong leadership, efficient leader election, and fault tolerance, along with the properties that guarantee safety and consistency during consensus. It also shows Raft's relevance to real-world implementations, highlighting its use in distributed systems such as Kubernetes and etcd.
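As a rough illustration of the fault-tolerance and leader-election points above, the sketch below shows the majority arithmetic Raft relies on: a candidate becomes leader once a majority of the cluster has voted for it, so a cluster can keep making progress as long as a majority of its servers is reachable. The function names (`majority`, `tolerated_failures`, `wins_election`) are hypothetical and chosen only for this example; they do not come from the slides or from any particular Raft library.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of servers that forms a majority of the cluster."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """Number of servers that can fail while a majority is still available."""
    return cluster_size - majority(cluster_size)


def wins_election(votes_received: int, cluster_size: int) -> bool:
    """A candidate wins a term once it holds votes from a majority.

    votes_received is assumed to include the candidate's vote for itself.
    """
    return votes_received >= majority(cluster_size)


if __name__ == "__main__":
    # Print the quorum size and failure tolerance for common cluster sizes.
    for n in (3, 4, 5, 7):
        print(f"servers={n} majority={majority(n)} tolerates={tolerated_failures(n)}")
```

Under this arithmetic an even-sized cluster tolerates no more failures than the next smaller odd-sized one (four servers tolerate one failure, the same as three), which is one reason odd cluster sizes are commonly chosen.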