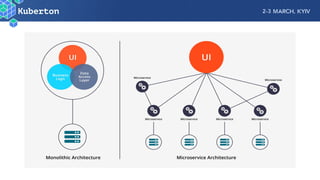

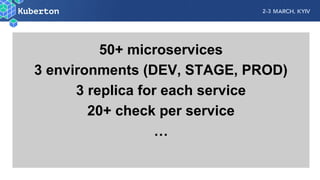

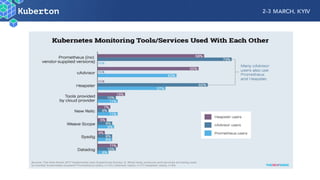

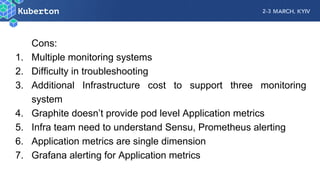

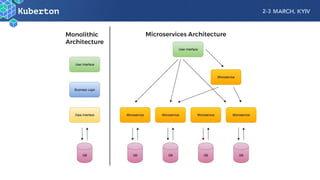

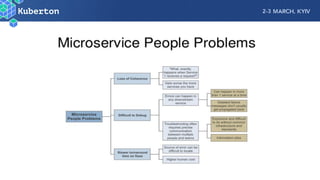

Microservices make monitoring more complex than it was with monolithic architectures. Alongside their benefits, they introduce significant monitoring challenges, particularly around communication between services. Prometheus has emerged as a powerful open-source solution for monitoring microservices because it was designed for the scale and flexibility that such monitoring requires.